Since the first 3.5 TeV collisions in March 2010, the LHC has had three years of improving integrated luminosity. By the time that the first proton physics run ended in December 2012, the total integrated proton–proton luminosity delivered to each of the two general-purpose experiments – ATLAS and CMS – had reached nearly 30 fb⁻¹ and enabled the discovery of a Higgs boson. ALICE, LHCb and TOTEM had also operated successfully and the LHC team was able to fulfil other objectives, including productive lead–lead and proton–lead runs.

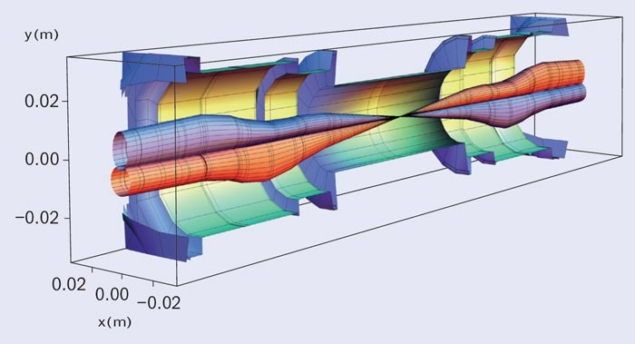

Establishing good luminosity depends on several factors but the goal is to have the largest number of particles potentially colliding in the smallest possible area at a given interaction point (IP). Following injection of the two beams into the LHC, there are three main steps to collisions. First, the beam energy is ramped to the required level. Then comes the squeeze. This second step involves decreasing the beam size at the IP using quadrupole magnets on both sides of a given experiment. In the LHC, the squeeze process is usually parameterized by β* (the beam size at the IP is proportional to the square root of β*). The third step is to remove the separation bumps that are formed by local corrector magnets. These bumps keep the beams separated at the IPs during the ramp and squeeze.
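The square-root dependence can be made concrete with the textbook relation σ* = √(β* ε_n / γ), where ε_n is the normalized emittance and γ is the Lorentz factor of the beam. The Python sketch below is illustrative only: the function name is hypothetical, and the inputs (4 TeV beams and a normalized emittance of 2.5 mm mrad, values quoted elsewhere in this article for later running) are assumptions chosen for the example rather than a description of any operational tool.

```python
import math

PROTON_MASS_GEV = 0.938272  # proton rest-mass energy in GeV


def ip_beam_size_m(beta_star_m, norm_emittance_m, beam_energy_gev):
    """RMS transverse beam size at the IP in metres.

    Assumes an ultra-relativistic beam, so the geometric emittance
    is taken as norm_emittance / gamma.
    """
    gamma = beam_energy_gev / PROTON_MASS_GEV
    return math.sqrt(beta_star_m * norm_emittance_m / gamma)


# Illustrative inputs (assumptions): 4 TeV beam, 2.5 mm mrad = 2.5e-6 m rad
for beta_star in (3.5, 1.0, 0.6):
    sigma = ip_beam_size_m(beta_star, 2.5e-6, 4000.0)
    print(f"beta* = {beta_star} m -> sigma* ~ {sigma * 1e6:.1f} um")
```

Under these assumptions the beam size at β* = 0.6 m comes out at roughly 19 μm, and reducing β* by a factor of four halves the beam size.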

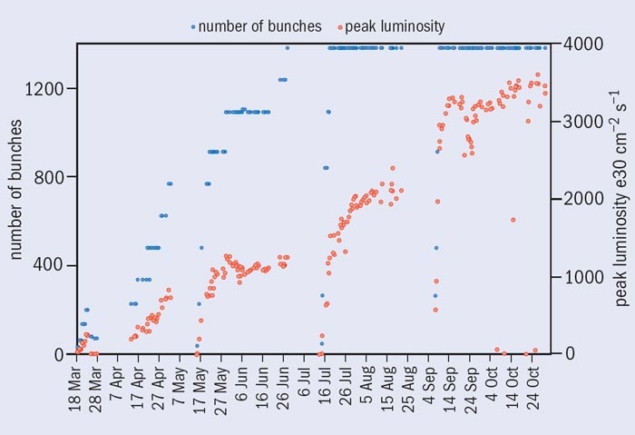

High luminosity translates into having many high-intensity particle bunches, an optimally focused beam size at the interaction point and a small emittance (a measure of the spread of the beam in transverse phase space). The three-year run saw relatively distinct phases in the increase of proton–proton luminosity, starting with basic commissioning then moving on through exploration of the limits to full physics production running in 2012.

The first year in 2010 was devoted to commissioning and establishing confidence in operational procedures and the machine protection system, laying the foundations for what was to follow. Commissioning of the ramp to 3.5 TeV went smoothly and the first (unsqueezed) collisions were established on 30 March. Squeeze commissioning then successfully reduced β* to 2 m in all four IPs.

With June came the decision to go for bunches of nominal intensity, i.e. around 10¹¹ protons per bunch (see table). This involved an extended commissioning period and subsequent operation with beams of up to 50 or so widely separated bunches. The next step was to increase the number of bunches further. This required the move to bunch trains with 150 ns between bunches and the introduction of well defined beam-crossing angles in the interaction regions to avoid parasitic collisions. There was also a judicious back-off in the squeeze to a β* of 3.5 m. These changes necessitated setting up the tertiary collimators again and recommissioning the process of injection, ramp and squeeze – but provided a good opportunity to bed-in the operational sequence.

A phased increase in total intensity followed, with operational and machine protection validation performed before each step up in the number of bunches. Each increase was followed by a few days of running to check system performance. The proton run for the year finished with beams of 368 bunches of around 1.2 × 10¹¹ protons per bunch and a peak luminosity of 2.1 × 10³² cm⁻² s⁻¹. The total integrated luminosity for both ATLAS and CMS in 2010 was around 0.04 fb⁻¹.

The beam energy remained at 3.5 TeV in 2011 and the year saw exploitation combined with exploration of the LHC’s performance limits. The campaign to increase the number of bunches in the machine continued with tests with a 50 ns bunch spacing. An encouraging performance led to the decision to run with 50 ns. A staged ramp-up in the number of bunches ensued, reaching 1380 – the maximum possible with a bunch spacing of 50 ns – by the end of June. The LHC’s performance was increased further by reducing the emittances of the beams that were delivered by the injectors and by gently increasing the bunch intensity. The result was a peak luminosity of 2.4 × 10³³ cm⁻² s⁻¹ and some healthy delivery rates that topped 90 pb⁻¹ in 24 hours.
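To relate a peak luminosity to a daily delivery rate, it helps to convert between the two units. The short Python sketch below is an illustration, not an operational calculation: the function and constant names are hypothetical, and it assumes the unrealistic case of the peak luminosity being held constant for a full 24 hours, which gives an upper bound to compare with the quoted daily figure.

```python
CM2_PER_INV_PB = 1e36  # 1 pb^-1 corresponds to 1e36 cm^-2 (1 pb = 1e-36 cm^2)


def integrated_lumi_inv_pb(lumi_cm2_s, seconds):
    """Integrated luminosity in pb^-1 for a constant instantaneous luminosity."""
    return lumi_cm2_s * seconds / CM2_PER_INV_PB


# Upper bound: the 2.4e33 cm^-2 s^-1 peak held for a full day (assumption)
ceiling = integrated_lumi_inv_pb(2.4e33, 24 * 3600)
fraction = 90.0 / ceiling  # quoted best daily delivery relative to that bound

print(f"ceiling ~ {ceiling:.0f} pb^-1, best day ~ {fraction:.0%} of it")
```

On this rough estimate the ceiling is a little over 200 pb⁻¹ per day, so a 90 pb⁻¹ day corresponds to somewhat more than 40% of it, the remainder being accounted for by luminosity decay during fills and the time needed between fills.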

The next step up in peak luminosity in 2011 followed a reduction in β* in ATLAS and CMS from 1.5 m to 1 m. Smaller beam size at an IP implies bigger beam sizes in the neighbouring inner triplet magnets. However, careful measurements had revealed a better-than-expected aperture in the interaction regions, opening the way for this further reduction in β*. The lower β* and increases in bunch intensity eventually produced a peak luminosity of 3.7 × 10³³ cm⁻² s⁻¹, beyond expectations at the start of the year. ATLAS and CMS had each received around 5.6 fb⁻¹ by the end of proton–proton running for 2011.

An increase in beam energy to 4 TeV marked the start of operations in 2012 and the decision was made to stay at a 50 ns bunch spacing with around 1380 bunches. The aperture in the interaction regions, together with the use of tight collimator settings, allowed a more aggressive squeeze to β* of 0.6 m. The tighter collimator settings shadow the inner triplet magnets more effectively and allow the measured aperture to be exploited fully. The price to pay was increased sensitivity to orbit movements – particularly in the squeeze – together with increased impedance, which as expected had a clear effect on beam stability.

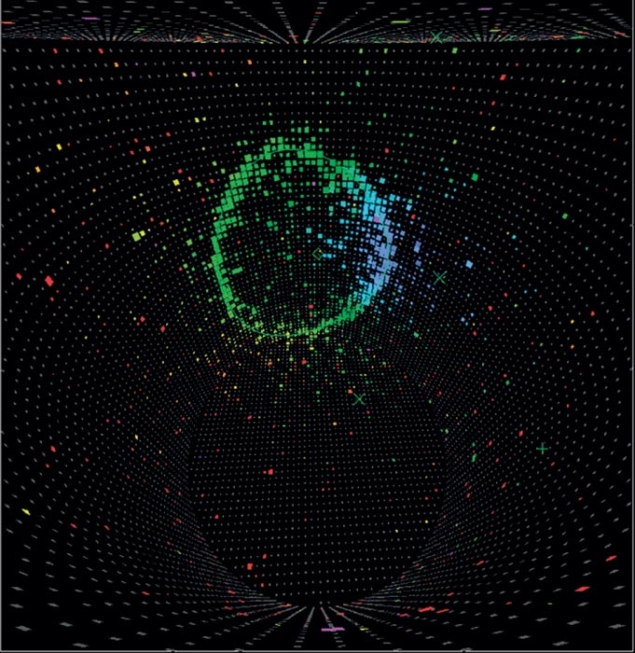

Credit: Courtesy J Jowett.

Peak luminosity soon came close to its highest for the year, although there were determined and long-running attempts to further improve performance. These were successful to a certain extent and revealed some interesting issues at high bunch and total beam intensity. Although never debilitating, instabilities were a recurring problem and there were phases when they cut into operational efficiency. Integrated luminosity rates, however, were generally healthy at around 1 fb⁻¹ per week. This allowed a total of about 23 fb⁻¹ to be delivered to both ATLAS and CMS during a long operational year with the proton–proton run extended until December.

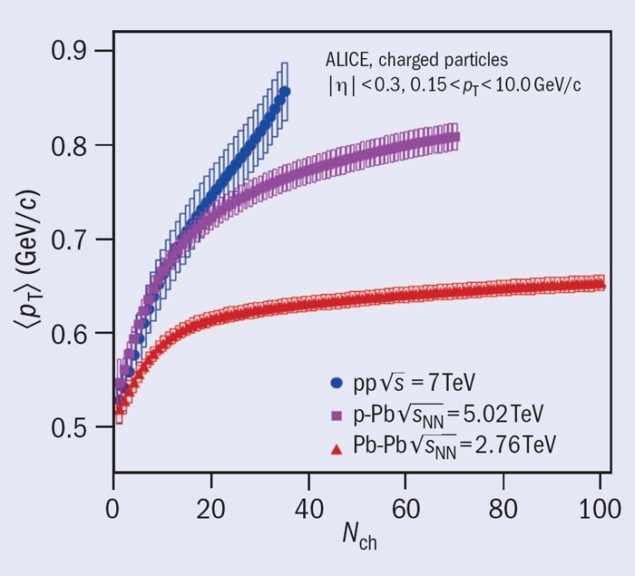

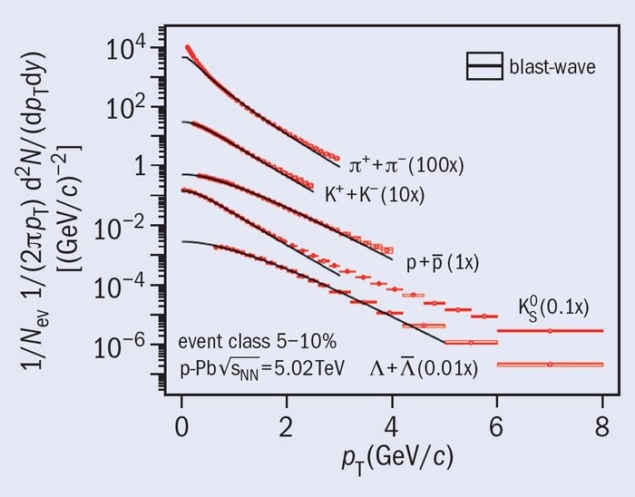

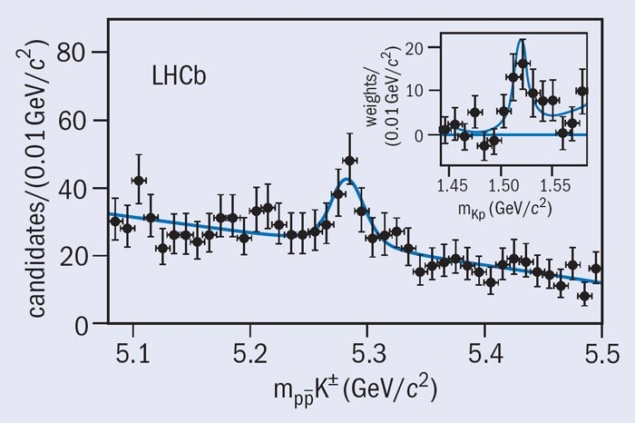

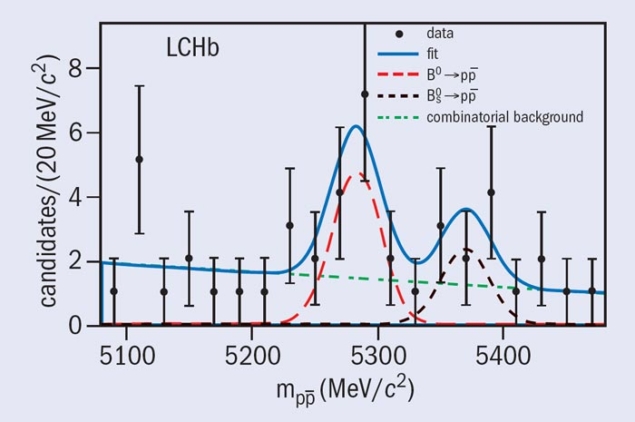

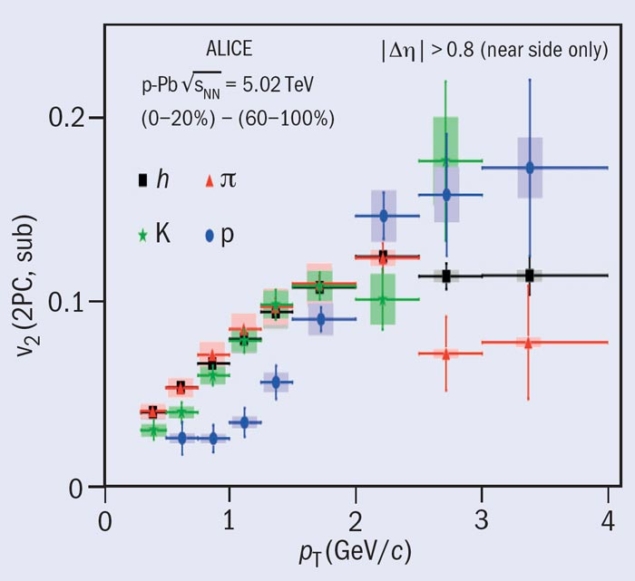

Apart from the delivery of high instantaneous and integrated proton–proton luminosity to ATLAS and CMS, the LHC team also satisfied other physics requirements. These included lead–lead runs in 2010 and 2011, which delivered 9.7 and 166 μb⁻¹, respectively, at an energy of 3.5Z TeV (where Z is the atomic number of lead). Here the clients were ALICE, ATLAS and CMS. A process of luminosity levelling at around 4 × 10³² cm⁻² s⁻¹ via transverse separation with a tilted crossing angle enabled LHCb to collect 1.2 fb⁻¹ and 2.2 fb⁻¹ of proton–proton data in 2011 and 2012, respectively. ALICE enjoyed some sustained proton–proton running in 2012 at around 5 × 10³⁰ cm⁻² s⁻¹, with collisions between enhanced satellite bunches and the main bunches. There was also a successful β* = 1 km run for TOTEM and the ATLAS forward detectors. This allowed the first LHC measurement in the Coulomb-nuclear interference region. Last, the three-year operational period culminated in a successful proton–lead run at the start of 2013, with ALICE, ATLAS, CMS and LHCb all taking data.

One of the main features of operation in 2011 and 2012 was the high bunch intensity and lower-than-nominal emittances offered by the excellent performance of the injector chain of Booster, Proton Synchrotron and Super Proton Synchrotron. The bunch intensity had been up to 150% of nominal with 50 ns bunch spacing, while the normalized emittance going into collisions had been around 2.5 mm mrad, i.e. 67% of nominal. Happily, the LHC proved to be capable of absorbing these brighter beams, notably in terms of beam–beam effects. The cost to the experiments was high pile-up, an issue that was handled successfully.
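The link between bright beams in relatively few bunches and high pile-up can be estimated from the mean number of inelastic collisions per bunch crossing, μ = L σ_inel / (n_b f_rev). The Python sketch below is a rough illustration under stated assumptions: the function name is hypothetical, the inelastic cross-section of about 73 mb is an assumed round value for this energy, the peak luminosity of 7.7 × 10³³ cm⁻² s⁻¹ is taken as 77% of the 10³⁴ cm⁻² s⁻¹ design figure, and all 1380 bunches are assumed to collide at the experiment.

```python
F_REV_HZ = 11245.0  # approximate LHC revolution frequency
MB_TO_CM2 = 1e-27   # 1 millibarn in cm^2


def mean_pileup(lumi_cm2_s, sigma_inel_mb, n_colliding_bunches):
    """Mean inelastic interactions per bunch crossing."""
    interaction_rate = lumi_cm2_s * sigma_inel_mb * MB_TO_CM2  # per second
    crossing_rate = n_colliding_bunches * F_REV_HZ             # per second
    return interaction_rate / crossing_rate


# Assumed inputs: 7.7e33 cm^-2 s^-1, sigma_inel ~ 73 mb, 1380 colliding bunches
mu = mean_pileup(7.7e33, 73.0, 1380)
print(f"mean pile-up ~ {mu:.0f} interactions per crossing")
```

With these inputs the estimate is in the mid-thirties. Spreading the same luminosity over twice as many bunches would halve it, which is why running at 50 ns with high bunch intensity costs the experiments in pile-up.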

The table shows the values for the main luminosity-related parameters at peak performance of the LHC from 2010 to 2012 and the design values. It shows that, even though the beam size is naturally larger at lower energy, the LHC has achieved 77% of design luminosity at four-sevenths of the design energy with a β* of 0.6 m (compared with the design value of 0.55 m) with half of the nominal number of bunches.
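The comparison with design can be roughly reproduced from the standard expression for head-on collisions of round Gaussian beams, L = N² n_b f_rev γ / (4π ε_n β*). The Python sketch below is an approximation under stated assumptions, not the calculation behind the table: the function name is hypothetical, the design inputs are the commonly quoted nominal LHC values, the 2012 bunch intensity of 1.6 × 10¹¹ is an assumed representative figure, and the reduction from the crossing angle is left as an optional factor that defaults to 1.

```python
import math

PROTON_MASS_GEV = 0.938272  # proton rest-mass energy in GeV
F_REV_HZ = 11245.0          # approximate LHC revolution frequency


def head_on_luminosity(n_per_bunch, n_bunches, norm_emit_m, beta_star_m,
                       energy_gev, geometric_factor=1.0):
    """Luminosity in cm^-2 s^-1 for round Gaussian beams colliding head-on."""
    gamma = energy_gev / PROTON_MASS_GEV
    lumi_m2 = (n_per_bunch ** 2 * n_bunches * F_REV_HZ * gamma) / (
        4.0 * math.pi * norm_emit_m * beta_star_m)
    return geometric_factor * lumi_m2 * 1e-4  # convert m^-2 to cm^-2


# Nominal design parameters (commonly quoted values, crossing angle ignored)
design = head_on_luminosity(1.15e11, 2808, 3.75e-6, 0.55, 7000.0)
# 2012-like parameters (bunch intensity is an assumed representative value)
run2012 = head_on_luminosity(1.6e11, 1380, 2.5e-6, 0.60, 4000.0)

print(f"design  ~ {design:.2e} cm^-2 s^-1 (head-on)")
print(f"2012    ~ {run2012:.2e} cm^-2 s^-1 (head-on)")
print(f"ratio   ~ {run2012 / design:.2f}")
```

With these inputs the ratio comes out at roughly three-quarters, in the same range as the 77% quoted above. The estimate is sensitive to the bunch intensity, which enters squared, and to the crossing-angle factor, which differs between the two configurations.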

Operational efficiency has been good with the integrated luminosity per week record reaching 1.3 fb⁻¹. This is the result of outstanding system performance combined with fundamental characteristics of the LHC. The machine has a healthy single-beam lifetime before collisions of more than 300 hours and on the whole enjoys good vacuum conditions in both warm and cold regions. With a peak luminosity of around 7 × 10³³ cm⁻² s⁻¹ at the start of a fill, the luminosity lifetime is initially in the range of 6–8 hours, increasing as the fill develops. There is minimal drift in beam overlap during physics data-taking and the beams are generally stable.
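To get a feel for what these figures mean per fill, the luminosity can be modelled as a simple exponential decay, L(t) = L₀ exp(−t/τ), and integrated over the fill. The Python sketch below is a deliberately simplified illustration: the function name is hypothetical, the 10-hour fill length is an assumed example value, and the lifetime is held constant at 7 hours, whereas in practice it increases as the fill develops, so the result is likely an underestimate.

```python
import math

CM2_PER_INV_PB = 1e36  # 1 pb^-1 corresponds to 1e36 cm^-2


def fill_integrated_inv_pb(peak_lumi_cm2_s, lifetime_h, fill_length_h):
    """Integrated luminosity (pb^-1) of one fill with exponential decay.

    Integral of L0 * exp(-t / tau) from 0 to T is L0 * tau * (1 - exp(-T / tau)).
    """
    tau_s = lifetime_h * 3600.0
    decay = 1.0 - math.exp(-fill_length_h / lifetime_h)
    return peak_lumi_cm2_s * tau_s * decay / CM2_PER_INV_PB


# Assumed example: 7e33 cm^-2 s^-1 at the start, 7 h lifetime, 10 h fill
per_fill = fill_integrated_inv_pb(7e33, 7.0, 10.0)
print(f"~{per_fill:.0f} pb^-1 per fill")
```

Under these assumptions a single fill yields somewhat over 130 pb⁻¹, so a record week of 1.3 fb⁻¹ corresponds to roughly ten such fills.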

At the same time, a profound understanding of the beam physics and a good level of operational control have been established. The magnetic aspects of the machine are well understood thanks to modelling with FiDel (the Field Description for the LHC). A long and thorough magnet-measurement and analysis campaign meant that the deployed settings produced a machine with a linear optics that is close to the nominal model. Measurement and correction of the optics has aligned machine and model to an unprecedented level.

A robust operational cycle is now well established, with the steps of pre-cycle, injection, 450 GeV machine, ramp, squeeze and collide mostly sequencer-driven. A strict pre-cycling regime means that the magnetic machine is remarkably reproducible. Importantly, the resulting orbit stability – or the ability to correct back consistently to a reference – means that the collimator set-up remains good for a year’s run.

Considering the size, complexity and operating principles of the LHC, its availability has generally been good. The 257-day run in 2012 included around 200 days dedicated to proton–proton physics, with 36.5% of the time being spent in stable beams. This is encouraging for a machine that is only three years into its operational lifetime. Of note is the high availability of the critical LHC cryogenics system. In addition, many other systems also have crucial roles in ensuring that the LHC can run safely and efficiently.

In general the LHC beam-dump system (LBDS) worked impeccably, causing no major operational problems or long downtime. Beam-based set-up and checks are performed at the start of the operational year. The downstream protection devices form part of the collimator hierarchy and their proper positioning is verified periodically. The collimation system maintained a high proton-cleaning efficiency and semi-automatic tools have improved collimator set-up times during alignment.

The overall protection of the machine is ensured by rigorous follow-up, qualification and monitoring. The beam drives a subtle interplay of the LBDS, the collimation system and protection devices, which rely on a well defined aperture, orbit and optics for guaranteed safe operation. The beam dump, injection and collimation teams pursued well organized programmes of set-up and validation tests, permitting routine collimation of 140 MJ beams without a single quench of superconducting magnets from stored beams.
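The scale of the stored energy follows directly from the number of protons and the energy of each. The Python sketch below is a back-of-the-envelope check, with a hypothetical function name and an assumed bunch intensity of 1.6 × 10¹¹ protons, a representative value within the range reported for 2012.

```python
EV_TO_JOULE = 1.602176634e-19  # joules per electronvolt


def stored_beam_energy_mj(n_bunches, protons_per_bunch, beam_energy_ev):
    """Total kinetic energy stored in one beam, in megajoules."""
    total_ev = n_bunches * protons_per_bunch * beam_energy_ev
    return total_ev * EV_TO_JOULE / 1e6


# Assumed inputs: 1380 bunches of 1.6e11 protons at 4 TeV
energy_mj = stored_beam_energy_mj(1380, 1.6e11, 4e12)
print(f"stored energy ~ {energy_mj:.0f} MJ per beam")
```

With these inputs the result is just over 140 MJ per beam, consistent with the figure quoted above.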

The beam instrumentation performed well overall. By facilitating a deep understanding of the machine, it paved the way for the impressive improvement in performance during the three-year run. The power converters performed superbly, with good tracking between reference and measured currents and between the converters around the ring. There was good performance from the key RF systems. Software and controls benefited from a coherent approach, early deployment and tests on the injectors and transfer lines.

There have inevitably been issues arising during the exploitation of the LHC. Initially, single-event upsets caused by beam-induced radiation to electronics in the tunnel were a serious cause of inefficiency. This problem had been foreseen and a sustained programme of mitigation measures, which included relocation of equipment, additional shielding and further equipment upgrades, resulted in a reduction of premature beam dumps from 12 per fb⁻¹ to 3 per fb⁻¹ in 2012. By contrast, an unforeseen problem concerned unidentified falling objects (UFOs) – dust particles falling into the beam causing fast, localized beam-loss events. These have now been studied and simulated but might still cause difficulties after the move to higher energy and a bunch spacing of 25 ns following the current long shutdown.

Beam-induced heating has been an issue. Essentially, all cases turned out to be localized and connected with nonconformities, either in design or installation. Design problems have affected the injection-protection devices and the mirror assemblies of the synchrotron-radiation telescopes, while installation problems have occurred in a low number of vacuum assemblies.

Beam instabilities dogged operations during 2012. The problems came with the push in bunch intensity, with the peak going into stable beams reaching around 1.7 × 10¹¹ protons per bunch, i.e. ultimate bunch intensity. Other contributory factors included increased impedance from the tight collimator settings, smaller than nominal emittance and operation with low chromaticity during the first half of the run.

A final beam issue concerns the electron cloud. Here, electrons emitted from the vacuum chamber are accelerated by the electromagnetic fields of the circulating bunches. On impacting the vacuum chamber they cause further emission of one or more electrons and there is a potential avalanche effect. The effect is strongly bunch-spacing dependent and although it has not been a serious issue with the 50 ns beam, there are potential problems with 25 ns.

In summary, the LHC is performing well and a huge amount of experience and understanding has been gained during the past three years. There is good system performance, excellent tools and reasonable availability following targeted consolidation. Good luminosity performance has been achieved by harnessing the beam quality from injectors and fully exploiting the options in the LHC. This overall performance is the result of a remarkable amount of effort from all of the teams involved.

This article is based on “The first years of LHC operation for luminosity production”, which was presented at IPAC13.