Jesse Thaler argues that particle physicists must go beyond deep learning and design AI capable of deep thinking.

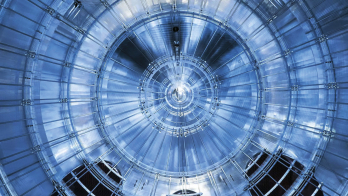

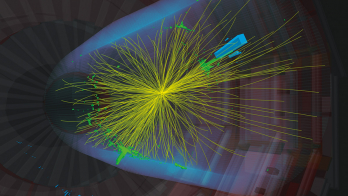

Can we trust physics decisions made by machines? In recent applications of artificial intelligence (AI) to particle physics, we have partially sidestepped this question by using machine learning to augment analyses, rather than replace them. We have gained trust in AI decisions through careful studies of “control regions” and painstaking numerical simulations. As our physics ambitions grow, however, we are using “deeper” networks with more layers and more complicated architectures, which are difficult to validate in the traditional way. And to mitigate 10- to 100-fold increases in computing costs, we are planning to fully integrate AI into data collection, simulation and analysis at the high-luminosity LHC.

To build trust in AI, I believe we need to teach it to think like a physicist.

I am the director of the US National Science Foundation’s new Institute for Artificial Intelligence and Fundamental Interactions, which was founded last year. Our goal is to fuse advances in deep learning with time-tested strategies for “deep thinking” in the physical sciences. Many promising opportunities are open to us. Core principles of fundamental physics such as causality and spacetime symmetries can be directly incorporated into the structure of neural networks. Symbolic regression can often translate solutions learned by AI into compact, human-interpretable equations. In experimental physics, it is becoming possible to estimate and mitigate systematic uncertainties using AI, even when there are a large number of nuisance parameters. In theoretical physics, we are finding ways to merge AI with traditional numerical tools to satisfy stringent requirements that calculations be exact and reproducible. High-energy physicists are well positioned to develop trustworthy AI that can be scrutinised, verified and interpreted, since the five-sigma standard of discovery in our field necessitates it.
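As a toy illustration of building a spacetime symmetry into a model's structure, the sketch below feeds a single neuron only Lorentz-invariant inputs (pairwise Minkowski products of four-momenta), so its output cannot change under a boost. The function names, the example event and the weights are hypothetical, chosen purely for illustration; this is a minimal sketch of one possible approach, not a description of any particular architecture used in the field.

```python
import math

def minkowski_dot(p, q):
    """Minkowski inner product, signature (+, -, -, -); p = (E, px, py, pz)."""
    return p[0] * q[0] - p[1] * q[1] - p[2] * q[2] - p[3] * q[3]

def boost_z(p, beta):
    """Lorentz boost of four-vector p along the z axis with velocity beta (c = 1)."""
    gamma = 1.0 / math.sqrt(1.0 - beta * beta)
    energy, px, py, pz = p
    return (gamma * (energy - beta * pz), px, py, gamma * (pz - beta * energy))

def invariant_features(particles):
    """Pairwise Minkowski products (including i == j); these are boost-invariant."""
    n = len(particles)
    return [minkowski_dot(particles[i], particles[j])
            for i in range(n) for j in range(i, n)]

def toy_model(particles, weights, bias=0.0):
    """A single tanh unit that sees only invariant features (hypothetical toy model)."""
    feats = invariant_features(particles)
    return math.tanh(sum(w * f for w, f in zip(weights, feats)) + bias)

# Hypothetical two-particle event and arbitrary weights, for illustration only.
event = [(5.0, 1.0, 2.0, 3.0), (4.0, -1.0, 0.5, 2.0)]
weights = [0.01, -0.02, 0.03]

boosted_event = [boost_z(p, 0.6) for p in event]
original_output = toy_model(event, weights)
boosted_output = toy_model(boosted_event, weights)

# The two outputs agree up to floating-point rounding.
print(original_output, boosted_output)
```

Because the symmetry is enforced by construction rather than learned from data, it holds for any choice of weights, which is one reason such models are easier to scrutinise.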

It is equally important, however, that we physicists teach ourselves how to think like a machine.

Modern AI tools yield results that are often surprisingly accurate and insightful, but sometimes unstable or biased. This can happen if the problem to be solved is “underspecified”, meaning that we have not provided the machine with a complete list of desired behaviours, such as insensitivity to noise, sensible ways to extrapolate and awareness of uncertainties. An even more challenging situation arises when the machine can identify multiple solutions to a problem, but lacks a guiding principle to decide which is most robust. By thinking like a machine, and recognising that modern AI solves problems through numerical optimisation, we can better understand the intrinsic limitations of training neural networks with finite and imperfect datasets, and develop improved optimisation strategies. By thinking like a machine, we can better translate first principles, best practices and domain knowledge from fundamental physics into the computational language of AI.
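A deliberately small example can make underspecification concrete. In the sketch below, a two-parameter model is fitted to two data points, so several parameter choices reach exactly zero training loss while extrapolating very differently. The model, the data and the "smallest coefficients" tie-breaking rule are all assumptions made for illustration; the rule is just one possible guiding principle, not a recommended one.

```python
def model(x, a, b):
    """Toy model y = a*x + b*x^2 with two free parameters."""
    return a * x + b * x * x

# Hypothetical training set: two points cannot pin down both parameters.
train = [(0.0, 0.0), (1.0, 1.0)]

def training_loss(a, b):
    """Sum of squared residuals on the training set."""
    return sum((model(x, a, b) - y) ** 2 for x, y in train)

# Three parameter choices that all satisfy a + b = 1 and so fit the data exactly.
candidates = {
    "linear": (1.0, 0.0),
    "quadratic": (0.0, 1.0),
    "min_norm": (0.5, 0.5),
}

losses = {name: training_loss(a, b) for name, (a, b) in candidates.items()}
extrapolations = {name: model(3.0, a, b) for name, (a, b) in candidates.items()}

# One possible guiding principle (an assumption): prefer the smallest coefficients.
preferred = min(candidates, key=lambda name: sum(c * c for c in candidates[name]))

print(losses)          # every candidate has zero training loss
print(extrapolations)  # yet the predictions at x = 3 disagree
print(preferred)
```

The optimiser alone has no reason to prefer one of these solutions over another; the preference has to be supplied as an explicit requirement, which is the sense in which the problem is underspecified.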

Beyond these innovations, which echo the logical and algorithmic AI that preceded the deep-learning revolution of the past decade, we are also finding surprising connections between thinking like a machine and thinking like a physicist. Recently, computer scientists and physicists have begun to discover that the apparent complexity of deep learning may mask an emergent simplicity. This idea is familiar from statistical physics, where the interactions of many atoms or molecules can often be summarised in terms of simpler emergent properties of materials. In the case of deep learning, as the width and depth of a neural network grow, its behaviour seems to be describable in terms of a small number of emergent parameters, sometimes just a handful. This suggests that tools from statistical physics and quantum field theory can be used to understand the dynamics of AI, and yield deeper insights into its power and limitations.

Ultimately, we need to merge the insights gained from artificial intelligence and physics intelligence. If we don’t exploit the full power of AI, we will not maximise the discovery potential of the LHC and other experiments. But if we don’t build trustable AI, we will lack scientific rigour. Machines may never think like human physicists, and human physicists will certainly never match the computational ability of AI, but together we have enormous potential to learn about the fundamental structure of the universe.