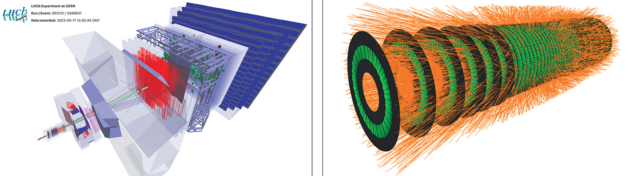

Oliver Brüning and Markus Zerlauth describe the latest progress and next steps for the validation of key technologies, tests of prototypes and the series production of equipment.

Since the start of physics operations in 2010, the Large Hadron Collider (LHC) has enabled a global user community of more than 10,000 physicists to explore the high-energy frontier. This unique scientific programme – which has seen the discovery of the Higgs boson, countless measurements of high-energy phenomena, and exhaustive searches for new particles – has already transformed the field. To increase the LHC’s discovery potential further, for example by enabling higher precision and the observation of rare processes, the High-Luminosity LHC (HL-LHC) upgrade aims to boost the amount of data collected by the ATLAS and CMS experiments by a factor of 10 and enable CERN’s flagship collider to operate until the early 2040s.

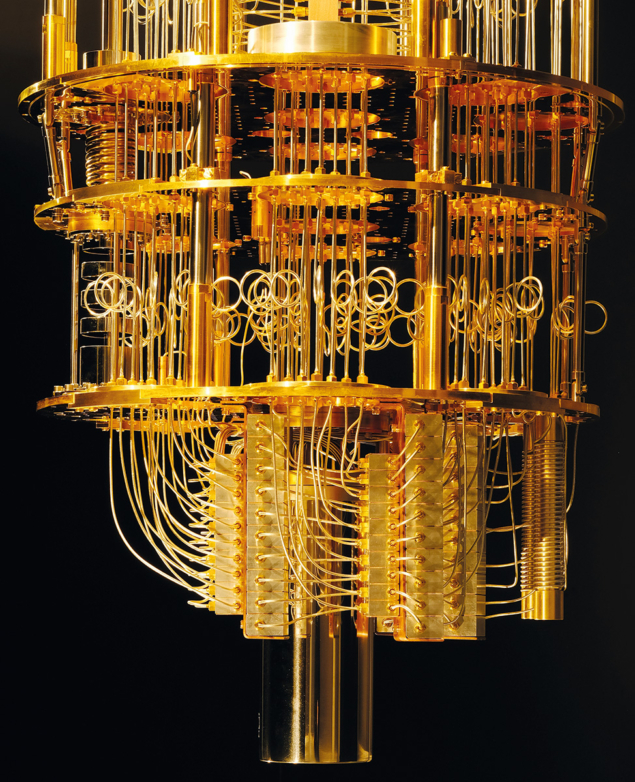

Following the completion of the second long shutdown (LS2) in 2022, during which the LHC injectors upgrade project was successfully implemented, Run 3 commenced at a record centre-of-mass energy of 13.6 TeV. Only two years of operation remain before the start of LS3 in 2026. This is when the main installation phase of the HL-LHC will commence, starting with the excavation of the vertical cores that will link the LHC tunnel to the new HL-LHC galleries and followed by the installation of new accelerator components. Approved in 2016, the HL-LHC project is driving several innovative technologies, including: niobium-tin (Nb3Sn) accelerator magnets, a cold powering system made from MgB2 high-temperature superconducting cables and a flexible cryostat, the integration of compact niobium crab cavities to compensate for the larger beam crossing angle, and new technology for beam collimation and machine protection.

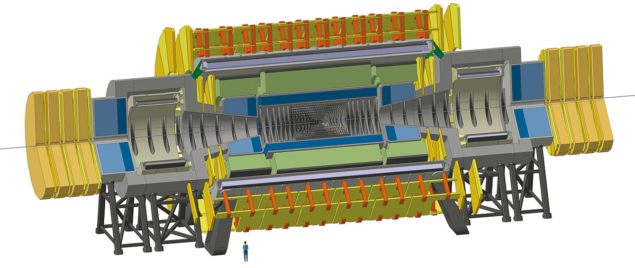

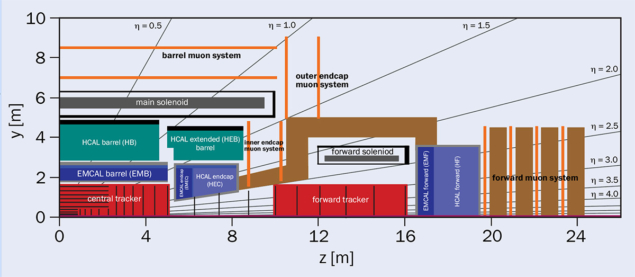

Efforts at CERN and across the HL-LHC collaboration are now focusing on the series production of all project deliverables in view of their installation and validation in the LHC tunnel. A centrepiece of this effort, which involves institutes from around the world and strong collaboration with industry, is the assembly and commissioning of the new insertion-region magnets that will be installed on either side of ATLAS and CMS to enable high-luminosity operations from 2029. In parallel, intense work continues on the corresponding upgrades of the LHC detectors: completely new inner trackers will be installed by ATLAS and CMS during LS3 (CERN Courier January/February 2023 p22 and 33), while LHCb and ALICE are working on proposals for radically new detectors for installation in the 2030s (CERN Courier March/April 2023 p22 and 35).

Civil-engineering complete

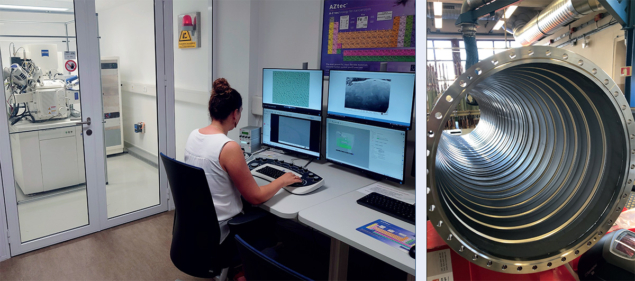

The targeted higher performance at the ATLAS and CMS interaction points (IPs) demands increased cooling capacity for the final focusing quadrupole magnets left and right of the experiments to deal with the larger flux of collision debris. Additional space is also needed to accommodate new equipment such as power converters and machine-protection devices, as well as shielding to reduce their exposure to radiation, and to allow easy access for faster interventions and thus improved machine availability. All these requirements have been addressed by the construction of new underground structures at ATLAS and CMS. Both sites feature a new access shaft and cavern that will house a new refrigerator cold box, a roughly 400 m-long gallery for the new power converters and protection equipment, four service tunnels and 12 vertical cores connecting the gallery to the existing LHC tunnel. A new staircase at each side of the experiment also connects the new underground structures to the existing LHC tunnel for personnel.

Civil-engineering works started at the end of 2018 to allow the bulk of the interventions requiring heavy machinery to be carried out during LS2, since it was estimated that the vibrations would otherwise have a detrimental impact on the LHC performance. All underground civil-engineering works were completed in 2022 and the construction of the new surface buildings, five at each IP, in spring 2023. The new access lifts encountered a delay of about six months due to some localised concrete spalling inside the shafts, but the installation at both sites was completed in autumn 2023.

The installation of the technical infrastructures is now progressing at full speed in both the underground and surface areas (see “Buildings and infrastructure” image). It is remarkable that, even though the civil-engineering work extended throughout the COVID-19 shutdown period and was exposed to market volatility in the aftermath of Russia’s invasion of Ukraine, it could essentially be completed on schedule and within budget. This represents a huge milestone for the HL-LHC project and for CERN.


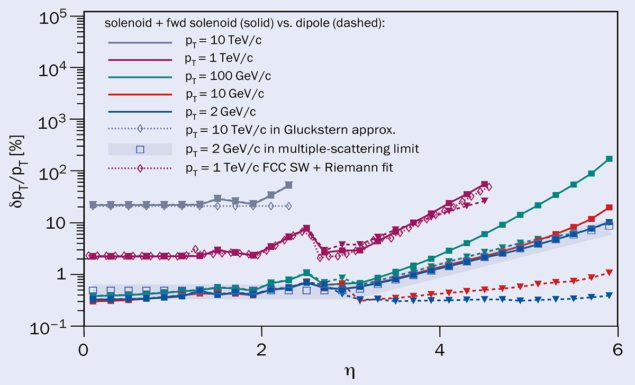

A cornerstone of the HL-LHC upgrade is the set of new triplet quadrupole magnets with increased radiation tolerance. A total of 24 large-aperture Nb3Sn focusing quadrupole magnets will be installed around ATLAS and CMS to focus the beams more tightly, representing the first use of Nb3Sn magnet technology in an accelerator for particle physics. Due to the higher collision rates in the experiments, radiation levels and integrated dose rates will increase accordingly, requiring particular care in the choice of materials used to construct the magnet coils (as well as the integration of additional tungsten shielding into the beam screens). In order to have sufficient space for the shielding, the coil apertures need to be roughly doubled compared to the existing Nb-Ti LHC triplets, thus reducing the β* parameter (which relates to the beam size at the collision points) by a factor of four compared to the nominal LHC design and fully exploiting the improved beam emittances following the upgrade of the LHC injector chain.
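To give a feel for what a fourfold reduction in β* means, the short Python sketch below uses the textbook relation between the RMS beam size at the collision point and β*, namely σ* = √(ε β*), where ε is the beam emittance. It is only an illustration under simplifying assumptions that are not taken from the article: round beams, head-on collisions, and no crossing-angle or hourglass effects. The function names and the unit values are hypothetical placeholders chosen so that only ratios matter.

```python
import math


def rms_beam_size(emittance, beta_star):
    """RMS transverse beam size at the collision point: sqrt(emittance * beta*)."""
    return math.sqrt(emittance * beta_star)


def head_on_luminosity_gain(beta_star_before, beta_star_after):
    """Relative luminosity gain for round, head-on beams.

    Luminosity scales as 1 / (sigma*^2), i.e. as 1 / beta* at fixed emittance.
    Crossing-angle and hourglass effects are deliberately ignored here.
    """
    return beta_star_before / beta_star_after


# Placeholder values in arbitrary units: only the ratio of the two beta* matters.
EMITTANCE = 1.0
BETA_STAR_BEFORE = 1.0
BETA_STAR_AFTER = BETA_STAR_BEFORE / 4.0  # the factor of four quoted in the text

size_ratio = rms_beam_size(EMITTANCE, BETA_STAR_AFTER) / rms_beam_size(
    EMITTANCE, BETA_STAR_BEFORE
)
gain = head_on_luminosity_gain(BETA_STAR_BEFORE, BETA_STAR_AFTER)

print(f"beam size ratio: {size_ratio}")
print(f"head-on luminosity gain: {gain}")
```

In this idealised picture the beams at the collision point become half as wide in each plane. In practice the crossing angle reduces the gain, which is one reason the crab cavities matter.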

For the HL-LHC, reaching the required integrated magnetic gradient with Nb-Ti technology and twice the magnet aperture would require a much longer triplet. Choosing Nb3Sn allows fields of 12 T to be reached, and therefore a doubling of the triplet aperture while keeping the magnet relatively compact (the total length is increased from 23 m to 32 m). Intensive R&D and prototyping of Nb3Sn magnets started 20 years ago under the US-based LHC Accelerator Research Program (LARP), which united LBNL, SLAC, Fermilab and BNL. Officially launched as a design study in 2011, it has since been converted into the Accelerator Upgrade Program (AUP, which involves LBNL, Fermilab and BNL) in the industrialisation and series-production phase of all main components.
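The trade-off between aperture, field and magnet length can be sketched with a rough scaling argument. For a quadrupole the gradient is approximately the field at the coil divided by the aperture radius, so at a fixed integrated gradient (gradient times length) the required length grows with the aperture and shrinks with the achievable field. The Python sketch below applies this scaling to the 23 m length quoted above. It rests on assumptions that are not from the article: the integrated gradient is held constant, the 8.3 T figure for standard LHC dipoles is borrowed as a stand-in for the Nb-Ti field, and the function name is hypothetical.

```python
def scaled_triplet_length(reference_length_m, aperture_ratio, field_ratio):
    """Length needed to keep the integrated gradient fixed.

    Assumes gradient ~ field / aperture radius, so length ~ aperture / field.
    This is a rough scaling, not a magnet design calculation.
    """
    return reference_length_m * aperture_ratio / field_ratio


REFERENCE_LENGTH_M = 23.0   # existing triplet length quoted in the text
APERTURE_RATIO = 2.0        # "roughly doubled" aperture
NBTI_FIELD_T = 8.3          # assumption: Nb-Ti field taken from the LHC dipole figure
NB3SN_FIELD_T = 12.0        # field quoted in the text for Nb3Sn

length_nbti = scaled_triplet_length(REFERENCE_LENGTH_M, APERTURE_RATIO, 1.0)
length_nb3sn = scaled_triplet_length(
    REFERENCE_LENGTH_M, APERTURE_RATIO, NB3SN_FIELD_T / NBTI_FIELD_T
)

print(f"Nb-Ti at unchanged field: {length_nbti:.1f} m")
print(f"Nb3Sn at 12 T:            {length_nb3sn:.1f} m")
```

Under these assumptions a Nb-Ti triplet with twice the aperture would need to be about twice as long, while the higher Nb3Sn field recovers much of that length. The numerical result should not be over-read, since the real design targets differ from this simple scaling.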

The HL-LHC inner-triplet magnets are designed and constructed in a collaboration between AUP and CERN. The 10 (eight for installation and two spares) Q1 and Q3 cryo-assemblies, which contain two 4.2 m-long individual quadrupole magnets (MQXFA), will be provided as an in-kind contribution from AUP, while the 10 longer versions for Q2 (containing a single 7.2 m-long quadrupole magnet, MQXFB, and one dipole orbit-corrector assembly) will be produced at CERN. The first of these magnets was tested and fully validated in the US in 2019 and the first cryo-assembly consisting of two individual magnets was assembled, tested and validated at Fermilab in 2023. This cryo-assembly arrived at CERN in November 2023 and is now being prepared for validation and testing. The US cable and coil production reached completion in 2023 and the magnet and cryo-assembly production is picking up pace for series production.

The first two Q2 prototype magnets showed some limitations. This prompted an extensive three-phase improvement plan after the second prototype test to address the different stages of coil production, the coil and stainless-steel shell assembly procedure, and welding for the final cold mass. All three improvement steps were implemented in the third prototype (MQXFBP3), which is the first magnet that no longer shows any limitations at either the 1.9 K or the 4.5 K operating temperature, and thus the first from the production that is earmarked for installation in the tunnel (see “Quadrupole magnets” image).

Beyond the triplets, the HL-LHC insertion regions require several other novel magnets to manipulate the beams. For some magnet types, such as the nonlinear corrector magnets (produced by LASA in Milan as an in-kind contribution from INFN), the full production has been completed and all magnets have been delivered to CERN. The new separation and recombination dipole magnets – which are located on the far side of the insertion regions to guide the two counter-rotating beams from the separated apertures in the arc onto a common trajectory that allows collisions at the IPs – are produced as in-kind contributions from Japan and Italy. The single-aperture D1 dipole magnets are produced by KEK with Hitachi as the industrial partner, while the twin-aperture D2 dipole magnets are produced in industry by ASG in Genoa, again as an in-kind contribution from INFN. Even though both dipole types are based on established Nb-Ti superconductor technology (the workhorse of the LHC), they push the conductor into uncharted territory. For example, the D1 dipole features a large aperture of 150 mm and a peak dipole field of 5.6 T, resulting in very large forces in the coils during operation. Hitachi has already produced three of the six series magnets. The prototype D1 dipole magnet was delivered to CERN in 2023 and cryostated in its final configuration. The D2 prototype magnet has been tested and fully validated at CERN in its final cryostat configuration, and the first series D2 magnet has been delivered from ASG to CERN (see “Dipole magnets” image).


Production of the remaining new HL-LHC magnets is also in full swing. Production of the nested canted-cosine-theta magnets – a novel magnet design comprising two solenoids with canted coil layers, needed to correct the orbit next to the D2 dipole – is progressing well in China as an in-kind contribution from IHEP with Bama as the industrial partner. The nested dipole orbit-corrector magnets, required for the orbit correction within the triplet area, are based on Nb-Ti technology (an in-kind contribution from CIEMAT in Spain) and are also advancing well, with the final validation of the long-magnet version demonstrated in 2023 (see “Corrector magnets” image).

Superconducting link

With the new power converters in the HL-LHC underground galleries being located approximately 100 m away from and 8 m above the magnets in the tunnel, a cost- and energy-efficient way to carry currents of up to 18 kA between them was needed. It was foreseen that “simple” water-cooled copper cables and busbars would be undesirably inefficient because of the cooling needed to remove their Ohmic losses, and that Nb-Ti links requiring cooling with liquid helium would be too technically challenging and expensive given the height difference between the new galleries and the LHC tunnel. Instead, it was decided to develop a novel cold powering system featuring a flexible cryostat and magnesium-diboride (MgB2) cables that can carry the required currents at temperatures of up to 50 K.
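The scale of the Ohmic losses in a conventional link can be estimated with a back-of-the-envelope calculation, P = I²R with R = ρL/A. The Python sketch below uses the 18 kA current and roughly 100 m distance quoted above together with the room-temperature resistivity of copper. The conductor cross-section of 2000 mm² is purely a hypothetical value chosen for illustration, as is the function name, and the estimate covers a single conductor in one direction only.

```python
COPPER_RESISTIVITY_OHM_M = 1.68e-8  # copper near room temperature


def ohmic_loss_watts(current_a, length_m, cross_section_m2,
                     resistivity_ohm_m=COPPER_RESISTIVITY_OHM_M):
    """Resistive heating P = I^2 * R for a uniform conductor, with R = rho * L / A."""
    resistance_ohm = resistivity_ohm_m * length_m / cross_section_m2
    return current_a ** 2 * resistance_ohm


CURRENT_A = 18_000.0          # maximum current quoted in the text
LENGTH_M = 100.0              # approximate distance quoted in the text
CROSS_SECTION_M2 = 2.0e-3     # assumption: hypothetical 2000 mm^2 copper conductor

loss_w = ohmic_loss_watts(CURRENT_A, LENGTH_M, CROSS_SECTION_M2)
print(f"Ohmic loss for one conductor: {loss_w / 1e3:.0f} kW")
```

With these placeholder inputs a single conductor dissipates a few hundred kilowatts, all of which would have to be removed by the cooling water. A superconducting cable avoids this resistive loss in the conductor itself.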

With this unprecedented system, helium boils off from the magnet cryostats in the tunnel and propagates through the flexible cryostat to the new underground galleries. This process cools both the MgB2 cable and the high-temperature superconducting current leads (which connect the normal-conducting power converters to the superconducting magnets) to nominal temperatures between 15 K and 35 K. The gaseous helium is then collected in the new galleries, compressed, liquefied and fed back into the cryogenic system. The new cables and cryostats have been developed with companies in Italy (ASG and Tratos) and the Netherlands (Cryoworld), and are now available as commercial materials for other projects (CERN Courier May/June 2023 p37).

Three demonstrator tests conducted in CERN’s SM18 facility have already fully validated the MgB2 cable and flexible-cryostat concept. The feed boxes that connect the MgB2 cable to the power converters in the galleries and the magnets in the tunnel have been developed and produced as in-kind contributions with the University of Southampton and Puma as industrial partner in the UK and the University of Uppsala and RFR as industrial partner in Sweden. A complete superconducting link with its two feed boxes has been assembled and is being tested in SM18 in preparation for its installation in the inner-triplet string in 2024 (see “Superconducting feed” image).

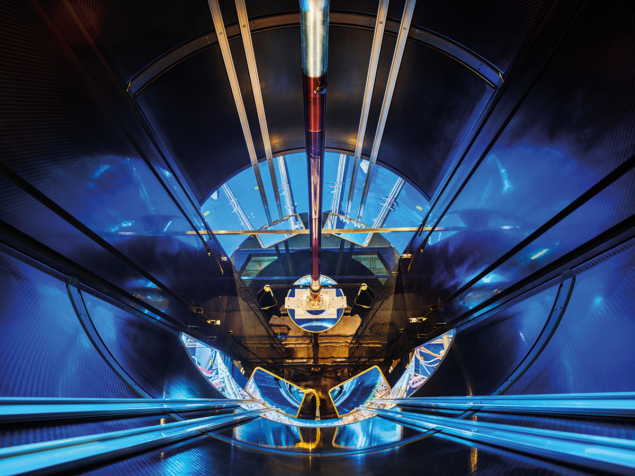

IT string assembly

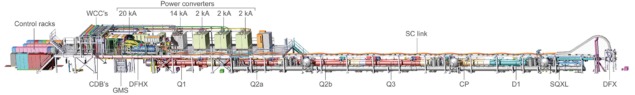

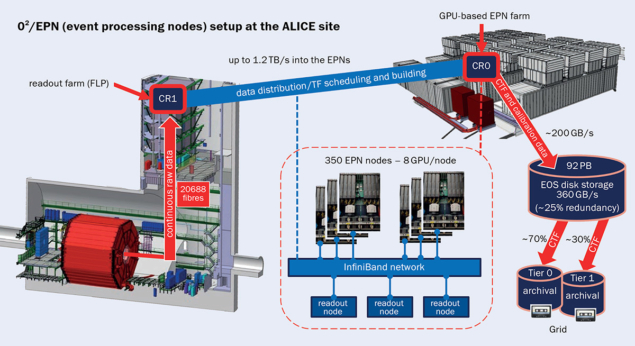

The inner-triplet (IT) string – which replicates the full magnet, powering and protection assembly left of CMS from the triplet magnets up to the D1 separation dipole magnet – is the next emerging milestone of the HL-LHC project (see “Inner-triplet string” image). The goal of the IT string is to validate the assembly and connection procedures and tools required for its construction. It also serves to assess the collective behaviour of the superconducting magnet chain in conditions as close as possible to those of their later operation in the HL-LHC, and as a training opportunity for the equipment teams for their later work in the LHC tunnel. The IT string includes all the systems required for operation at nominal conditions, such as the vacuum (albeit without the magnet beam screens), cryogenics, powering and protection systems. The installation is planned to be completed in 2024, and the main operational period will take place in 2025.

The entire IT string – measuring about 90 m long – just fits at the back of the SM18 test hall, where the necessary liquid-helium infrastructure is available. The new underground galleries are mimicked by a metallic structure situated above the magnets. The structure houses the power converters and quench-protection system, the electrical disconnector box, and the feed box that connects the superconducting link to normal-conducting powering systems. The superconducting link extends from the metallic structure above the magnet assembly to the D1 end of the IT string where (after a vertical descent mimicking the passage through the underground vertical cores) it is connected to a prototype of the feed box that connects to the magnets.


The installation of the normal-conducting powering and machine-protection systems of the IT string is nearing completion. Together with the already completed infrastructures of the facility, the complete normal-conducting powering system of the string entered its first commissioning phase in December 2023 with the execution of short-circuit tests. The cryogenic distribution line for the IT string has been successfully tested at cold temperatures and will soon undergo a second cooldown to nominal temperature, ahead of the installation of the magnets and cold-powering system this year.

Collimation

Controlling beam losses caused by high-energy particles deviating from their ideal trajectory is essential to ensure the protection and efficient operation of accelerator components, and in particular superconducting elements such as magnets and cavities. The existing LHC collimation system, which already comprises more than 100 individual collimators installed around the ring, needs to be upgraded to address the unprecedented challenges brought about by the brighter HL-LHC beams. Following a first upgrade of the LHC collimation and shielding systems deployed during LS2, the production of new insertion-region collimators and the second batch of low-impedance collimators is now being launched in industry.

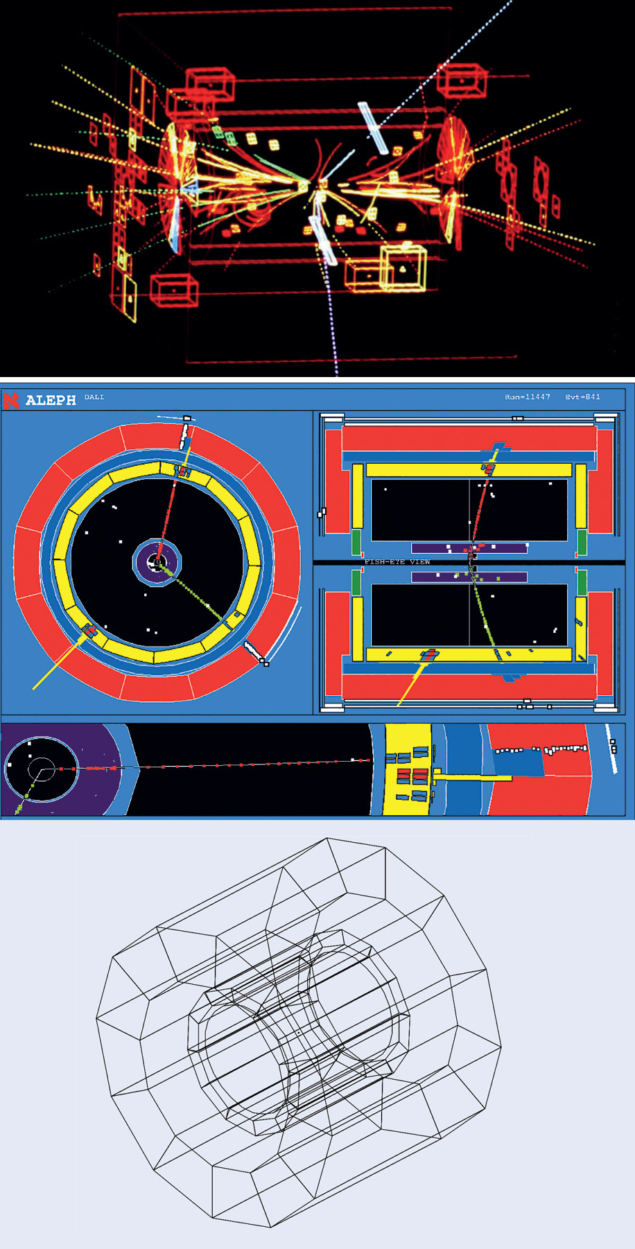

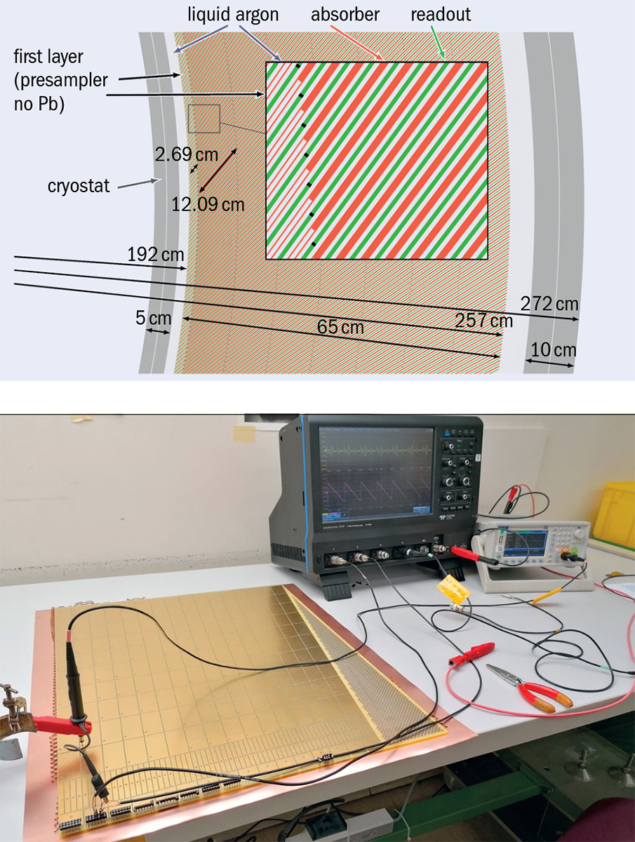

LS2 and the subsequent year-end technical stop also saw the completion of the novel crystal-collimation scheme (CERN Courier November/December 2022 p35). Located in “IR7” between CMS and LHCb, this scheme comprises four goniometers with bent crystals – one per beam and plane – to channel halo particles onto a downstream absorber (see “Crystal collimators” image). After extensive studies with beam during the past few years, crystal collimation was used operationally in a nominal physics run for the first time during the 2023 heavy-ion run, where it was shown to increase the cleaning efficiency by a factor of up to five compared to the standard collimation scheme. Following this successful deployment and comprehensive machine-development tests, the HL-LHC performance goals have been conclusively confirmed for both proton and ion operations. This has enabled the baseline solution using a standard collimator inserted in IR7 (which would have required replacing a standard 8.3 T LHC dipole with two short 11 T Nb3Sn dipoles to create the necessary space) to be descoped from the HL-LHC project.

Crab cavities

A second cornerstone of the HL-LHC project after the triplet magnets are the superconducting radiofrequency “crab” cavities. Positioned next to the D2 dipole and the Q4 matching-section quadrupole magnet in the insertion regions, these are necessary to compensate for the detrimental effect of the crossing angle on luminosity by applying a transverse momentum kick to each bunch entering the interaction regions of ATLAS and CMS. Two different types of cavities will be installed: the radio-frequency dipole (RFD) and the double quarter wave (DQW), deflecting bunches in the horizontal and vertical crossing planes, respectively (see “Crab cavities” image). Series production of the RFD cavities is about to begin at Zanon, Italy under the lead of AUP, while the DQW cavity series production is well underway at RI in Germany under the lead of CERN following the successful validation of two pre-series bare cavities.
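The luminosity penalty from a crossing angle is commonly expressed through the geometric reduction factor F = 1/√(1 + φ²), where φ = θσz/(2σ*) is the Piwinski angle, θ the full crossing angle, σz the RMS bunch length and σ* the RMS transverse beam size at the collision point. The Python sketch below evaluates this standard small-angle formula. The input values are illustrative assumptions and are not taken from the article, and the function names are hypothetical. Ideal crabbing corresponds to an effective crossing angle of zero, for which F returns to 1.

```python
import math


def piwinski_angle(full_crossing_angle_rad, bunch_length_m, beam_size_m):
    """Piwinski angle in the small-angle approximation."""
    return full_crossing_angle_rad * bunch_length_m / (2.0 * beam_size_m)


def geometric_reduction_factor(full_crossing_angle_rad, bunch_length_m, beam_size_m):
    """Luminosity reduction factor F = 1 / sqrt(1 + phi^2) due to the crossing angle."""
    phi = piwinski_angle(full_crossing_angle_rad, bunch_length_m, beam_size_m)
    return 1.0 / math.sqrt(1.0 + phi ** 2)


# Illustrative assumptions only, not values from the article.
CROSSING_ANGLE_RAD = 500e-6   # full crossing angle
BUNCH_LENGTH_M = 0.075        # RMS bunch length
BEAM_SIZE_M = 7e-6            # RMS transverse beam size at the collision point

factor_without_crabbing = geometric_reduction_factor(
    CROSSING_ANGLE_RAD, BUNCH_LENGTH_M, BEAM_SIZE_M
)
factor_ideal_crabbing = geometric_reduction_factor(0.0, BUNCH_LENGTH_M, BEAM_SIZE_M)

print(f"F without crabbing:   {factor_without_crabbing:.2f}")
print(f"F with ideal crabbing: {factor_ideal_crabbing:.2f}")
```

For inputs of this order, well over half of the head-on luminosity would be lost without crabbing, which illustrates why a tighter focus and a larger crossing angle make the cavities so valuable.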

A fully assembled DQW cryomodule has been undergoing highly successful beam tests in the Super Proton Synchrotron (SPS) since 2018, demonstrating the crabbing of proton beams and allowing for the development and validation of the necessary low-level RF and machine-protection systems (CERN Courier March/April 2022 p45). For the RFD, two dressed cavities were delivered at the end of 2021 to the UK collaboration after their successful qualification at CERN. These were assembled into a first complete RFD cryomodule that was returned to CERN in autumn 2023 and is currently undergoing validation tests at 1.9 K, revealing some non-conformities to be resolved before it is ready for installation in the SPS in 2025 for tests with beams. Series production of the necessary ancillaries and higher-order-mode couplers has also started for both cavity types at CERN and AUP after the successful validation of prototypes. Prior to fabrication, the crab-cavity concept underwent a long period of R&D with the support of LARP, JLAB, UK-STFC and KEK.

On schedule

2023 and 2024 are the last two years of major spending and allocation of industrial contracts for the HL-LHC project. With the completion of the civil-engineering contracts and the placement of contracts for the new cryogenic compressors and distribution systems, the project has now committed more than 75% of its budget at completion. An HL-LHC cost-and-schedule review held at CERN in November 2023, conducted by an international panel of accelerator experts from other laboratories, congratulated the project on the overall good progress and agreed with the projection to be ready for installation of the major equipment during LS3 starting in 2026.

The major milestones for the HL-LHC project over the next two years will be the completion and operation of the IT-string installation in 2024 and 2025, and the completion of the installation of the technical infrastructures in the new underground galleries. All new magnet components should be delivered to CERN by the end of 2026, while the drilling of the vertical cores connecting the new and old underground areas should complete the major construction activities and mark the start of the installation of the new equipment in the LHC tunnel.

The HL-LHC will push the largest scientific instrument ever built to unprecedented levels of performance and extend the operation of the flagship collider of the European and US high-energy physics programme by another 15 years. It is the culmination of more than 25 years of R&D, with close cooperation with industry in CERN’s member states and the establishment of new accelerator technologies for the use of future projects. All hands are now on deck to ensure the brightest future possible for the LHC.

Jonathan Edelen, president, earned a PhD in accelerator physics from Colorado State University, after which he was selected for the prestigious Bardeen Fellowship at Fermilab. While at Fermilab he worked on RF systems and thermionic cathode sources at the Advanced Photon Source. Currently, Jon is focused on building advanced control algorithms for particle accelerators including solutions involving machine learning.
