Completed in 2022, China’s Tiangong space station represents one of the biggest projects in space exploration in recent decades. Like the International Space Station, its ability to provide large amounts of power, support heavy payloads and access powerful communication and computing facilities gives it many advantages over typical satellite platforms. As such, both Chinese and international collaborations have been developing a number of science missions ranging from optical astronomy to the detection of cosmic rays with PeV energies.

For optical astronomy, the space station will be accompanied by the Xuntian telescope, whose name can be translated as “survey the heavens”. Xuntian is currently planned to be launched in mid-2025 to fly alongside Tiangong, thereby allowing for regular maintenance. Although its spatial resolution will be similar to that of the Hubble Space Telescope, Xuntian’s field of view will be about 300 times larger, allowing the observation of many objects at the same time. In addition to producing impressive images similar to those sent by Hubble, the instrument will be important for cosmological studies, where large samples of astronomical objects are typically required to study their evolution.

Another instrument that will observe large portions of the sky is LyRIC (Lyman UV Radiation from Interstellar medium and Circum-galactic medium). After being placed on the space station in the coming years, LyRIC will probe the poorly studied far-ultraviolet regime that contains emission lines from neutral hydrogen and other elements. Although difficult to measure, this emission allows studies of baryonic matter in the universe, which can be used to answer important questions such as why only about half of the total baryons in the standard “ΛCDM” cosmological model can be accounted for.

At slightly higher energies, the Diffuse X-ray Explorer (DIXE) aims to use a novel type of X-ray detector to reach an energy resolution better than 1% in the 0.1 to 10 keV energy range. It achieves this using cryogenic transition-edge sensors (TESs), which exploit the rapid change in resistance that occurs during a superconducting phase transition. In this regime, the resistivity of the material is highly dependent on its temperature, allowing the detection of minuscule temperature increases resulting from X-rays being absorbed by the material. Positioned to scan the sky above the Tiangong space station, DIXE will be able, among other things, to measure the velocity of material that appears to have been emitted by the Milky Way during an active stage of its central black hole. Its high energy resolution will allow Doppler shifts of the order of several eV to be measured, which requires the TES detectors to operate at 50 mK. Achieving such temperatures demands a cooling system of 640 W, a power level that is difficult to achieve on a satellite but relatively easy to acquire on a space station. As such, DIXE will be one of the first detectors using this new technology when it launches in 2025, leading the way for missions such as the European ATHENA mission, which plans to use it starting in 2037.
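To get a feel for what a Doppler shift of a few eV means, the link between an energy shift and a line-of-sight velocity can be sketched in a few lines of Python. This is only an illustration using the non-relativistic approximation ΔE/E ≈ v/c; the function name and the 1 keV line energy are assumptions chosen for the example, not DIXE specifications.

```python
# Illustrative sketch: velocity implied by a Doppler shift of an X-ray line.
# Assumes the non-relativistic relation delta_E / E = v / c (valid for v << c).

SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in km/s


def doppler_velocity_km_s(line_energy_ev: float, shift_ev: float) -> float:
    """Return the line-of-sight velocity (km/s) for a given energy shift.

    line_energy_ev: rest-frame energy of the emission line, in eV
    shift_ev: measured shift of the line, in eV (sign gives the direction)
    """
    if line_energy_ev <= 0:
        raise ValueError("line energy must be positive")
    return SPEED_OF_LIGHT_KM_S * shift_ev / line_energy_ev


if __name__ == "__main__":
    # Hypothetical example: a line at 1 keV shifted by 1, 3 and 5 eV.
    for shift in (1.0, 3.0, 5.0):
        velocity = doppler_velocity_km_s(1000.0, shift)
        print(f"shift of {shift:.0f} eV -> about {velocity:.0f} km/s")
```

Under these assumptions, shifts of a few eV on a keV-scale line correspond to velocities of several hundred to roughly a thousand km/s, which is why eV-level resolution matters for measuring outflowing material.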

POLAR-2 was accepted as an international payload on the China space station through the United Nations Office for Outer Space Affairs and has since become a CERN-recognised experiment. The mission started as a Swiss, German, Polish and Chinese collaboration building on the success of POLAR, which flew on the space station’s predecessor Tiangong-2. Like its earlier incarnation, POLAR-2 measures the polarisation of high-energy X rays or gamma rays to provide insights into, for example, the magnetic fields that produced the emission. As one of the most sensitive gamma-ray detectors in the sky, POLAR-2 can also play an important role in alerting other instruments when a bright gamma-ray transient, such as a gamma-ray burst, appears. The importance of such alerts has resulted in the expansion of POLAR-2 to include an accompanying imaging spectrometer, which will provide detailed spectral and location information on any gamma-ray transient. Also now foreseen for this second payload is an additional wide-field-of-view X-ray polarimeter. The international team developing the three instruments, which are scheduled to be launched in 2027, is led by the Institute of High Energy Physics in Beijing.

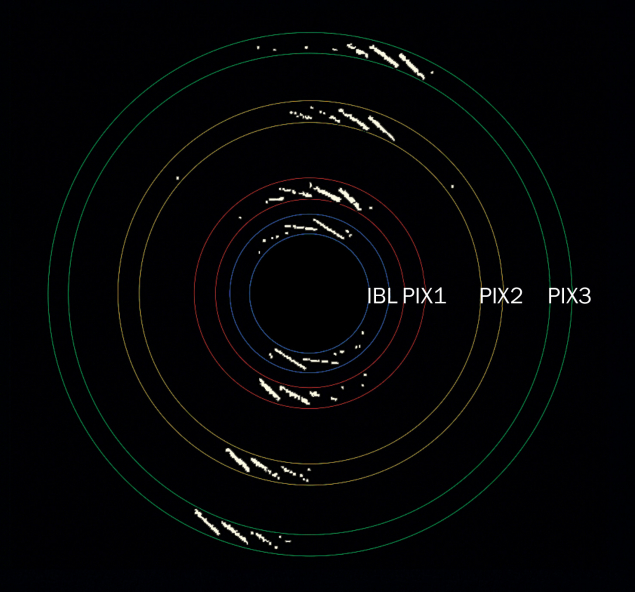

To study the universe using even higher energy emissions, the space station will host the High Energy cosmic-Radiation Detection Facility (HERD). HERD is designed to study both cosmic rays and gamma rays at energies beyond those accessible to instruments like AMS-02, CALET (CERN Courier July/August 2024 p24) and DAMPE. It aims to achieve this, in part, by simply being larger, resulting in a mass that is currently only possible to support on a space station. The HERD calorimeter will be 55 radiation lengths long and consist of several tonnes of scintillating cubic LYSO crystals. The instrument will also use high-precision silicon trackers, which, in combination with the deep calorimeter, will provide a better angular resolution and a geometrical acceptance 30 times larger than that of the present AMS-02 (which is due to be upgraded next year). This will allow HERD to probe the cosmic-ray spectrum up to PeV energies, filling in the energy gap between current space missions and ground-based detectors. HERD started out as an international mission with a large European contribution. However, delays on the European side regarding participation, in combination with a launch requirement of 2027, mean that it is currently foreseen to be a fully Chinese mission.

Although not as large or mature as the International Space Station, Tiangong’s capacity to host cutting-edge astrophysics missions is catching up. As well as providing researchers with a pristine view of the electromagnetic universe, instruments such as HERD will enable vital cross-checks of data from AMS-02 and other unique experiments in space.