Artificial-intelligence techniques have been used in experimental particle physics for 30 years, and are becoming increasingly widespread in theoretical physics. Anima Anandkumar and John Ellis explore the possibilities.

How might artificial intelligence make an impact on theoretical physics?

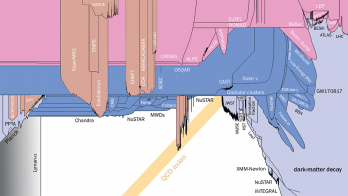

John Ellis (JE): To phrase it simply: where do we go next? We have the Standard Model, which describes all the visible matter in the universe successfully, but we know dark matter must be out there. There are also puzzles, such as what is the origin of the matter in the universe? During my lifetime we’ve been playing around with a bunch of ideas for tackling those problems, but haven’t come up with solutions. We have been able to solve some but not others. Could artificial intelligence (AI) help us find new paths towards attacking these questions? This would be truly stealing theoretical physicists’ lunch.

Anima Anandkumar (AA): I think the first steps are whether you can understand more basic physics and be able to come up with predictions as well. For example, could AI rediscover the Standard Model? One day we can hope to look at what the discrepancies are for the current model, and hopefully come up with better suggestions.

JE: An interesting exercise might be to take some of the puzzles we have at the moment and somehow equip an AI system with a theoretical framework that we physicists are trying to work with, let the AI loose and see whether it comes up with anything. Even over the last few weeks, a couple of experimental puzzles have been reinforced by new results on B-meson decays and the anomalous magnetic moment of the muon. There are many theoretical ideas for solving these puzzles but none of them strike me as being particularly satisfactory in the sense of indicating a clear path towards the next synthesis beyond the Standard Model. Is it imaginable that one could devise an AI system that, if you gave it a set of concepts that we have, and the experimental anomalies that we have, then the AI could point the way?

AA: The devil is in the details. How do we give the right kind of data and knowledge about physics? How do we express those anomalies while at the same time making sure that we don’t bias the model? There are anomalies suggesting that the current model is not complete – if you are giving that prior knowledge then you could be biasing the models away from discovering new aspects. So, I think that delicate balance is the main challenge.

JE: I think that theoretical physicists could propose a framework with boundaries that AI could explore. We could tell you what sort of particles are allowed, what sort of interactions those could have and what would still be a well-behaved theory from the point of view of relativity and quantum mechanics. Then, let’s just release the AI to see whether it can come up with a combination of particles and interactions that could solve our problems. I think that in this sort of problem space, the creativity would come in the testing of the theory. The AI might find a particle and a set of interactions that would deal with the anomalies that I was talking about, but how do we know what’s the right theory? We have to propose some other experiments that might test it – and that’s one place where the creativity of theoretical physicists will come into play.

AA: Absolutely. And many theories are not directly testable. That’s where the deeper knowledge and intuition that theoretical physicists have is so critical.

Is human creativity driven by our consciousness, or can contemporary AI be creative?

AA: Humans are creative in so many ways. We can dream, we can hallucinate, we can create – so how do we build those capabilities into AI? Richard Feynman famously said “What I cannot create, I do not understand.” It appears that our creativity gives us the ability to understand the complex inner workings of the universe. With the current AI paradigm this is very difficult. Current AI is geared towards scenarios where the training and testing distributions are similar. Creativity, however, requires extrapolation – being able to imagine entirely new scenarios. So extrapolation is an essential aspect. Can you go from what you have learned and extrapolate new scenarios? For that we need some form of invariance or understanding of the underlying laws. That’s where physics is front and centre. Humans have intuitive notions of physics from early childhood. We slowly pick them up from physical interactions with the world. That understanding is at the heart of getting AI to be creative.

JE: It is often said that a child learns more laws of physics than an adult ever will! As a human being, I think that I think. I think that I understand. How can we introduce those things into AI?

AA: We need to get AI to create images, and other kinds of data it experiences, and then reason about the likelihood of the samples. Is this data point unlikely versus another one? Similarly to what we see in the brain, we recently built feedback mechanisms into AI systems. When you are watching me, it’s not just a free-flowing system going from the retina into the brain; there’s also a feedback system going from the inferior temporal cortex back into the visual cortex. This kind of feedback is fundamental to us being conscious. Building these kinds of mechanisms into AI is the first step to creating conscious AI.

JE: A lot of the things that you just mentioned sound like they’re going to be incredibly useful going forward in our systems for analysing data. But how is AI going to devise an experiment that we should do? Or how is AI going to devise a theory that we should test?

AA: Those are the challenging aspects for an AI. A data-driven method using a standard neural network would perform really poorly. It will only think of the data that it can see and not about data that it hasn’t seen. Handling unseen data is what we call “zero-shot generalisation”. To me, the past decade’s impressive progress is due to a trinity of data, neural networks and computing infrastructure, mainly powered by GPUs [graphics processing units], coming together. The next step for AI is wider generalisation: the ability to extrapolate and predict hitherto unseen scenarios.
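As an illustration of the extrapolation problem described here, the following is a minimal sketch rather than anything used by the interviewees. It assumes a toy "law" (a sine wave), a purely data-driven model (a degree-5 polynomial fitted by least squares with NumPy) and arbitrary training and test ranges; all names and numbers are hypothetical choices made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)


def truth(x):
    """Toy 'law of nature' for this example: a periodic signal."""
    return np.sin(2 * np.pi * x)


# Training data are drawn only from the interval [0, 1].
x_train = rng.uniform(0.0, 1.0, 200)
y_train = truth(x_train)

# Purely data-driven model: a least-squares polynomial fit.
# It knows nothing about periodicity, only the samples it has seen.
coeffs = np.polyfit(x_train, y_train, deg=5)


def rmse(x):
    """Root-mean-square error of the fitted model on the points x."""
    return float(np.sqrt(np.mean((np.polyval(coeffs, x) - truth(x)) ** 2)))


in_error = rmse(np.linspace(0.0, 1.0, 100))   # same range as the training data
out_error = rmse(np.linspace(2.0, 3.0, 100))  # range never seen in training

print(f"error inside the training range:  {in_error:.4f}")
print(f"error outside the training range: {out_error:.4f}")
```

The fit is accurate where training and test points overlap and fails badly outside that range. A model that encoded the underlying invariance (here, periodicity) would not have this problem, which is the sense in which knowledge of the underlying laws supports extrapolation.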

Across the many tens of orders of magnitude described by modern physics, new laws and behaviours “emerge” non-trivially in complexity (see Emergence). Could intelligence also be an emergent phenomenon?

JE: As a theoretical physicist, my main field of interest is the fundamental building blocks of matter, and the roles that they play very early in the history of the universe. Emergence is the word that we use when we try to capture what happens when you put many of these fundamental constituents together, and they behave in a way that you could often not anticipate if you just looked at the fundamental laws of physics. One of the interesting developments in physics over the past generation is to recognise that there are some universal patterns that emerge. I’m thinking, for example, of phase transitions that look universal, even though the underlying systems are extremely different. So, I wonder, is there something similar in the field of intelligence? For example, the brain structure of the octopus is very different from that of a human, so to what extent does the octopus think in the same way that we do?

AA: There’s a lot of interest now in studying the octopus. From what I learned, its intelligence is spread out so that it’s not just in its brain but also in its tentacles. Consequently, you have this distributed notion of intelligence that still works very well. It can camouflage itself extremely well – imagine being in a wild ocean without a shell to protect yourself. That pressure created the need for intelligence such that it can be extremely aware of its surroundings and able to quickly camouflage itself or manipulate different tools.

JE: If intelligence is the way that a living thing deals with threats and feeds itself, should we apply the same evolutionary pressure to AI systems? We threaten them and only the fittest will survive. We tell them they have to go and find their own electricity or silicon or something like that – I understand that there are some first steps in this direction, computer programs competing with each other at chess, for example, or robots that have to find wall sockets and plug themselves in. Is this something that one could generalise? And then intelligence could emerge in a way that we hadn’t imagined?

AA: That’s an excellent point. Because what you mentioned broadly is competition – different kinds of pressures that drive towards good, robust objectives. An example is generative adversarial models, which can generate very realistic-looking images. Here you have a discriminator that challenges the generator to generate images that look real. These kinds of competitions or games are getting a lot of traction and we have now passed the Turing test when it comes to generating human faces – you can no longer tell very easily whether a face is generated by AI or belongs to a real person. So, I think those kinds of mechanisms that have competition built into the objective they optimise are fundamental to creating more robust and more intelligent systems.
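For readers who want the competition made explicit, the standard textbook objective for generative adversarial networks can be written as a two-player game. The formula is not quoted from the interview, and the symbols are the conventional ones: $G$ is the generator, $D$ the discriminator, $p_{\mathrm{data}}$ the distribution of real images and $p_z$ the noise distribution fed to the generator.

```latex
\min_{G} \max_{D} \;
  \mathbb{E}_{x \sim p_{\mathrm{data}}} \big[ \log D(x) \big]
  + \mathbb{E}_{z \sim p_{z}} \big[ \log \big( 1 - D(G(z)) \big) \big]
```

The discriminator is rewarded for assigning high probability to real samples and low probability to generated ones, while the generator is rewarded for making that distinction fail.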

JE: All this is very impressive – but there are still some elements that I am missing, which seem very important to theoretical physics. Take chess: a very big system but finite nevertheless. In some sense, what I try to do as a theoretical physicist has no boundaries. In some sense, it is infinite. So, is there any hope that AI would eventually be able to deal with problems that have no boundaries?

AA: That’s the difficulty. These are infinite-dimensional spaces… so how do we decide how to move around there? What distinguishes an expert like you from an average human is that you build your knowledge and develop intuition – you can quickly make judgments and find which narrow part of the space you want to work on compared to all the possibilities. That’s the aspect that is so difficult for AI to figure out. The space is enormous. On the other hand, AI does have a lot more memory, a lot more computational capacity. So can we create a hybrid system, with physicists and machine learning in tandem, to help us harness the capabilities of both AI and humans together? We’re currently exploring theorem provers: can we use the theorems that humans have proven, and then add reinforcement learning on top to create very fast theorem solvers? If we can create such fast theorem provers in pure mathematics, I can see them being very useful for understanding the Standard Model and the gaps and discrepancies in it. It is much harder than chess, for example, but there are exciting programming frameworks and data sets available, with efforts to bring together different branches of mathematics. But I don’t think humans will be out of the loop, at least for now.