Instrumentation manufacturer INFICON used multiphysics modelling to develop an ionization gauge for measuring pressure in high-vacuum and ultra-high-vacuum (HV/UHV) environments.

Innovation often becomes a form of competition. It can be thought of as a race among creative people, where standardized tools measure progress toward the finish line. For many who strive for technological innovation, one such tool is the vacuum gauge.

High-vacuum and ultra-high-vacuum (HV/UHV) environments are used for researching, refining and producing many manufactured goods. But how can scientists and engineers be sure that pressure levels in their vacuum systems are truly aligned with those in other facilities? Without shared vacuum standards and reliable tools for meeting these standards, key performance metrics – whether for scientific experiments or products being tested – may not be comparable. To realize a better ionization gauge for measuring pressure in HV/UHV environments, INFICON of Liechtenstein used multiphysics modelling and simulation to refine its product design.

A focus on gas density

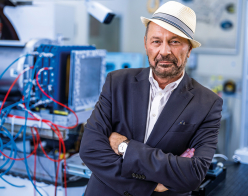

The resulting Ion Reference Gauge 080 (IRG080) from INFICON is more accurate and reproducible than existing ionization gauges. Development of the IRG080 was coordinated by the European Metrology Programme for Innovation and Research (EMPIR). This collaborative R&D effort by private companies and government research organizations aims to make Europe’s “research and innovation system more competitive on a global scale”. The project participants, working within EMPIR’s 16NRM05 Ion Gauge project, considered multiple options before agreeing that INFICON’s gauge design best fulfilled the performance goals.

Of course, different degrees of vacuum require their own specific approaches to pressure measurement. “Depending on conditions, certain means of measuring pressure work better than others,” explained Martin Wüest, head of sensor technology at INFICON. “At near-atmospheric pressures, you can use a capacitive diaphragm gauge. At middle vacuum, you can measure heat transfer occurring via convection.” Neither of these approaches is suitable for HV/UHV applications. “At HV/UHV pressures, there are not enough particles to force a diaphragm to move, nor are we able to reliably measure heat transfer,” added Wüest. “This is where we use ionization to determine gas density and corresponding pressure.”

The most common HV/UHV pressure-measuring tool is the Bayard–Alpert hot-filament ionization gauge, which is placed inside the vacuum chamber. The instrument has three core building blocks: the filament (or hot cathode), the grid and the ion collector. In operation, a low-voltage electric current is supplied to the filament, causing it to heat up. As the filament becomes hotter, it emits electrons that are attracted to the grid, which is held at a higher voltage. Some of the electrons flowing toward and within the grid collide with gas molecules in the vacuum chamber. These collisions ionize the molecules, and the resulting ions flow toward the collector, where the measurable ion current is proportional to the density of gas molecules in the chamber.

“We can then convert density to pressure, according to the ideal gas law,” explained Wüest. “Pressure will be proportional to the ion current divided by the electron current, [in turn] divided by a sensitivity factor that is adjusted depending on what gas is in the chamber.”
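The relation Wüest describes can be written as p = I_ion / (S × I_e), with the ideal gas law, n = p / (k_B T), linking pressure and gas density. The following is a minimal Python sketch of that arithmetic. The function names, currents, sensitivity value and temperature are illustrative assumptions, not INFICON figures.

```python
BOLTZMANN_J_PER_K = 1.380649e-23  # exact SI value of the Boltzmann constant


def pressure_from_currents(ion_current_a, electron_current_a, sensitivity_per_pa):
    """Pressure in Pa from p = I_ion / (S * I_e)."""
    if electron_current_a <= 0 or sensitivity_per_pa <= 0:
        raise ValueError("electron current and sensitivity must be positive")
    return ion_current_a / (sensitivity_per_pa * electron_current_a)


def number_density(pressure_pa, temperature_k):
    """Gas number density in m^-3 from the ideal gas law, n = p / (k_B * T)."""
    return pressure_pa / (BOLTZMANN_J_PER_K * temperature_k)


# Illustrative inputs only (assumed, not measured values).
pressure = pressure_from_currents(
    ion_current_a=1.0e-10,       # ion current at the collector
    electron_current_a=1.0e-3,   # electron emission current
    sensitivity_per_pa=0.2,      # assumed gas-specific sensitivity factor
)
density = number_density(pressure, temperature_k=296.0)
print(f"pressure = {pressure:.2e} Pa, density = {density:.2e} m^-3")
```

Because the sensitivity factor depends on the gas species, the same pair of currents corresponds to a different pressure for each gas in the chamber.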

Better by design

Unfortunately, while the operational principles of the Bayard–Alpert ionization gauge are sound and well understood, the gauge’s performance is sensitive to heat and rough handling. “A typical ionization gauge contains fine metal structures that are held in spring-loaded tension,” said Wüest. “Each time you use the device, you heat the filament to between 1200 and 2000 °C. That affects the metal in the spring and can distort the shape of the filament, [thereby] changing the starting location of the electron flow and the paths the electrons follow.”

At the same time, the core components of a Bayard–Alpert gauge can become misaligned all too easily, introducing measurement uncertainties of 10 to 20% – an unacceptably wide range of variation. “Most vacuum-chamber systems are overbuilt as a result,” noted Wüest, and the need for frequent gauge recalibration also wastes precious development time and money.

With this in mind, the 16NRM05 Ion Gauge project team set a measurement uncertainty target of 1% or less for its benchmark gauge design (when used to detect nitrogen gas). Another goal was to eliminate the need to recalibrate gas sensitivity factors for each gauge and gas species under study. The new design also needed to be unaffected by minor shocks and reproducible by multiple manufacturers.

To achieve these goals, the project team first dedicated itself to studying HV/UHV measurement. Their research encompassed a broad review of 260 relevant studies. After completing their review, the project partners selected one design that incorporates current best practice for ionization gauge design: INFICON’s IE514 extractor-type gauge. Subsequently, three project participants – at NOVA University Lisbon, CERN and INFICON – each developed their own simulation models of the IE514 design. Their results were compared to test results from a physical prototype of the IE514 gauge to ensure the accuracy of the respective models before proceeding towards an optimized gauge design.

Computing the sensitivity factor

Francesco Scuderi, an INFICON engineer who specializes in simulation, used the COMSOL Multiphysics® software to model the IE514. The model enabled analysis of thermionic electron emissions from the filament and the ionization of gas by those electrons. The model can also be used for ray-tracing the paths of generated ions toward the collector. With these simulated outputs, Scuderi could calculate an expected sensitivity factor, which is based on how many ions are detected per emitted electron – a useful metric for comparing the overall fidelity of the model with actual test results.

“After constructing the model geometry and mesh, we set boundary conditions for our simulation,” Scuderi explained. “We are looking to express the coupled relationship of electron emissions and filament temperature, which will vary from approximately 1400 to 2000 °C across the length of the filament. This variation thermionically affects the distribution of electrons and the paths they will follow.”
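To see why a temperature spread along the filament matters, consider the textbook Richardson–Dushman law for thermionic emission, J = A₀T² exp(−W / k_B T). The article does not say which emission model the COMSOL simulation uses, so the Python sketch below is only an illustration of the general physics. The work function is an assumed placeholder, not a property of the IE514 filament.

```python
import math

RICHARDSON_A0 = 1.20173e6          # A m^-2 K^-2, theoretical Richardson constant
BOLTZMANN_EV_PER_K = 8.617333e-5   # Boltzmann constant in eV/K


def celsius_to_kelvin(t_c):
    """Convert a temperature from degrees Celsius to kelvin."""
    return t_c + 273.15


def emission_current_density(temperature_k, work_function_ev):
    """Richardson-Dushman law: J = A0 * T^2 * exp(-W / (k_B * T)), in A/m^2."""
    return RICHARDSON_A0 * temperature_k**2 * math.exp(
        -work_function_ev / (BOLTZMANN_EV_PER_K * temperature_k)
    )


ASSUMED_WORK_FUNCTION_EV = 4.5  # placeholder value; not taken from the article

# Compare emission at the two ends of the quoted filament temperature range.
j_cool = emission_current_density(celsius_to_kelvin(1400.0), ASSUMED_WORK_FUNCTION_EV)
j_hot = emission_current_density(celsius_to_kelvin(2000.0), ASSUMED_WORK_FUNCTION_EV)
print(f"hot/cool emission ratio: {j_hot / j_cool:.0f}")
```

The exponential term dominates, so under this law the hottest part of the filament emits far more electrons per unit area than the coolest part. This is consistent with Scuderi’s point that the temperature profile shapes where electrons start and the paths they follow.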

He continued: “Once we simulate thermal conditions and the electric field, we can begin our ray-tracing simulation. The software enables us to trace the flow of electrons to the grid and the resulting coupled heating effects.”

Next, the model is used to calculate the percentage of electrons that collide with gas particles. From there, ray-tracing of the resulting ions can be performed, tracing their paths toward the collector. “We can then compare the quantity of circulating electrons with the number of ions and their positions,” noted Scuderi. “From this, we can extrapolate a value for ion current in the collector and then compute the sensitivity factor.”
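The final step can be sketched in a few lines of Python. The sketch assumes singly charged ions, so that the ratio of collected ions to emitted electrons equals the ratio of ion current to electron current. The function name and the particle counts are hypothetical and are not results from INFICON’s model.

```python
def sensitivity_factor(ions_collected, electrons_emitted, pressure_pa):
    """Sensitivity S = (ions per emitted electron) / pressure, in 1/Pa.

    Assumes singly charged ions, so the count ratio equals I_ion / I_e.
    """
    if electrons_emitted <= 0 or pressure_pa <= 0:
        raise ValueError("electron count and pressure must be positive")
    ions_per_electron = ions_collected / electrons_emitted
    return ions_per_electron / pressure_pa


# Hypothetical ray-tracing tallies at an assumed simulated gas pressure.
s = sensitivity_factor(
    ions_collected=20,
    electrons_emitted=1_000_000,
    pressure_pa=1.0e-4,
)
print(f"S = {s:.3f} per Pa")
```

Comparing a value computed this way with the sensitivity measured on a physical prototype gives a single figure for how well the simulated geometry matches the real gauge.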

INFICON’s model did an impressive job of generating simulated values that aligned closely with test results from the benchmark prototype. This enabled the team to observe how changes to the modelled design affected key performance metrics, including ionization energy, the paths of electrons and ions, emission and transmission current, and sensitivity.

The end-product of INFICON’s design process, the IRG080, incorporates many of the same components as existing Bayard–Alpert gauges, but key parts look quite different. For example, the new design’s filament is a solid suspended disc, not a thin wire. The grid is no longer a delicate wire cage but is instead made from stronger formed metal parts. The collector now consists of two components: a single pin or rod that attracts ions and a solid metal ring that directs electron flow away from the collector and toward a Faraday cup (to catch the charged particles in vacuum). This arrangement, refined through ray-tracing simulation with the COMSOL Multiphysics® software, improves accuracy by better separating the paths of ions and electrons.

A more precise, reproducible gauge

INFICON, for its part, built 13 prototypes for evaluation by the project consortium. Testing showed that the IRG080 achieved the goal of reducing measurement uncertainty to below 1%. As for sensitivity, the IRG080 performed eight times better than the consortium’s benchmark gauge design. Equally important, the INFICON prototype yielded consistent results during multiple testing sessions, delivering sensitivity repeatability performance that was 13 times better than that of the benchmark gauge. In all, 23 identical gauges were built and tested during the project, confirming that INFICON had created a more precise, robust and reproducible tool for measuring HV/UHV conditions.

“We consider [the IRG080] a good demonstration of [INFICON’s] capabilities,” said Wüest.

• This story has been abridged. Read the full article at https://www.comsol.com/c/f6rx.

COMSOL Multiphysics is a registered trademark of COMSOL