The third edition of Triggering Discoveries in High Energy Physics (TDHEP) attracted 55 participants to Slovakia’s High Tatras mountains from 9 to 13 December 2024. The workshop is the only conference dedicated to triggering in high-energy physics, and follows previous editions in Jammu, India in 2013 and Puebla, Mexico in 2018. Given the upcoming High-Luminosity LHC (HL-LHC) upgrade, discussions focused on how trigger systems can be enhanced to manage high data rates while preserving physics sensitivity.

Triggering systems play a crucial role in filtering the vast amounts of data generated by modern collider experiments. A good trigger design selects features in the event sample that greatly enrich the proportion of the desired physics processes in the recorded data. The key considerations are timing and selectivity. Timing has long been at the core of experiment design – detectors must capture data at the appropriate time to record an event. Selectivity has been a feature of triggering for almost as long. Recording an event makes demands on running time and data-acquisition bandwidth, both of which are limited.

Evolving architecture

Thanks to detector upgrades and major changes in the cost and availability of fast data links and storage, the past 10 years have seen an evolution in LHC triggers away from hardware-based decisions using coarse-grain information.

Detector upgrades mean higher granularity and better time resolution, improving the precision of the trigger algorithms and the ability to resolve the problem of having multiple events in a single LHC bunch crossing (“pileup”). Such upgrades allow more precise initial-level hardware triggering, bringing the event rate down to a level where events can be reconstructed for further selection via high-level trigger (HLT) systems.

To take advantage of modern computer architecture more fully, HLTs use both graphics processing units (GPUs) and central processing units (CPUs) to process events. In ALICE and LHCb this leads to essentially triggerless access to all events, while in ATLAS and CMS hardware selections are still important. All HLTs now use machine learning (ML) algorithms, with the ATLAS and CMS experiments even considering their use at the first hardware level.

ATLAS and CMS are primarily designed to search for new physics. At the end of Run 3, upgrades to both experiments will significantly enhance granularity and time resolution to handle the high-luminosity environment of the HL-LHC, which will deliver up to 200 interactions per LHC bunch crossing. Both experiments achieved efficient triggering in Run 3, but higher luminosities, difficult-to-distinguish physics signatures, upgraded detectors and increasingly ambitious physics goals call for advanced new techniques. The step change will be significant. At HL-LHC, the first-level hardware trigger rate will increase from the current 100 kHz to 1 MHz in ATLAS and 760 kHz in CMS. The price to pay is increased latency – the time delay between input and output – which rises to 10 µs in ATLAS and 12.5 µs in CMS.
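To get a feel for these numbers, the short sketch below estimates the rejection factor a first-level trigger must achieve and how many bunch crossings a detector front-end has to hold in its buffers while a decision is pending. It assumes a nominal LHC bunch-crossing rate of 40 MHz (25 ns spacing), a figure not quoted above, and the function names are purely illustrative.

```python
# Assumption (not from the article): nominal LHC bunch-crossing rate, 25 ns spacing.
BUNCH_CROSSING_RATE_HZ = 40e6

# First-level trigger parameters at HL-LHC, as quoted in the text.
HL_LHC_L1 = {
    "ATLAS": {"accept_rate_hz": 1e6, "latency_s": 10e-6},
    "CMS": {"accept_rate_hz": 760e3, "latency_s": 12.5e-6},
}


def rejection_factor(input_rate_hz, accept_rate_hz):
    """Number of input crossings per accepted event."""
    return input_rate_hz / accept_rate_hz


def crossings_in_flight(latency_s, crossing_rate_hz=BUNCH_CROSSING_RATE_HZ):
    """Bunch crossings that must be buffered while a trigger decision is pending."""
    return round(latency_s * crossing_rate_hz)


if __name__ == "__main__":
    for name, cfg in HL_LHC_L1.items():
        factor = rejection_factor(BUNCH_CROSSING_RATE_HZ, cfg["accept_rate_hz"])
        depth = crossings_in_flight(cfg["latency_s"])
        print(f"{name}: keeps about 1 crossing in {factor:.0f}, "
              f"buffers about {depth} crossings during the latency window")
```

Under this assumption, a longer latency translates directly into deeper front-end buffers, which is one reason the latency budget is tied to the detector upgrades.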

The proposed trigger systems for ATLAS and CMS are predominantly FPGA-based, employing highly parallelised processing to crunch huge data streams efficiently in real time. Both will be two-level triggers: a hardware trigger followed by a software-based HLT. The ATLAS hardware trigger will utilise full-granularity calorimeter and muon signals in the global-trigger-event processor, using advanced ML techniques for real-time event selection. In addition to calorimeter and muon data, CMS will introduce a global track trigger, enabling real-time tracking at the first trigger level. All information will be integrated within the global-correlator trigger, which will extensively utilise ML to enhance event selection and background suppression.

Substantial upgrades

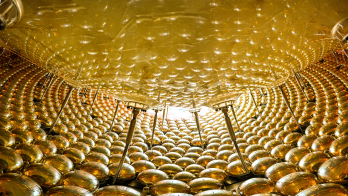

The other two big LHC experiments already implemented substantial trigger upgrades at the beginning of Run 3. The ALICE experiment is dedicated to studying the strong interactions of the quark–gluon plasma – a state of matter in which quarks and gluons are not confined in hadrons. The detector was upgraded significantly for Run 3, including the trigger and data-acquisition systems. The ALICE continuous readout can cope with 50 kHz for lead ion–lead ion (PbPb) collisions and several MHz for proton–proton (pp) collisions. In PbPb collisions the full data is continuously recorded and stored for offline analysis, while for pp collisions the data is filtered.

Unlike in Run 2, where the hardware trigger reduced the data rate to several kHz, Run 3 uses an online software trigger that is a natural part of the common online–offline computing framework. The raw data from detectors is streamed continuously and processed in real time using high-performance FPGAs and GPUs. ML plays a crucial role in the software trigger for heavy-flavour physics, one of the experiment's main physics interests. Boosted decision trees are used to identify displaced vertices from heavy-quark decays. The full chain from saving raw data in a 100 PB buffer to selecting events of interest and removing the original raw data takes about three weeks and was fully employed last year.


The LHCb experiment focuses on precision measurements in heavy-flavour physics. A typical example is measuring the probability of a particle decaying into a certain decay channel. In Run 2 the hardware trigger tended to saturate in many hadronic channels when the instantaneous luminosity was increased. To solve this issue for Run 3, a high-level software trigger was developed that can handle 30 MHz event readout with a 4 TB/s data flow. A GPU-based partial event reconstruction and primary selection of displaced tracks and vertices (HLT1) reduces the output rate to 1 MHz. The calibration and detector alignment, which are embedded in the trigger system, are calculated during data taking just after HLT1 and feed the full-event reconstruction (HLT2), which reduces the output rate to 20 kHz. This corresponds to 10 GB/s written to disk for later analysis.
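The arithmetic behind this chain can be sketched in a few lines. The example below takes only the rates and bandwidths quoted above and derives the reduction factor at each stage and the implied average data volume per event. It assumes decimal units (1 TB = 10^12 bytes) and the helper names are hypothetical. The per-event figures are simple averages, not official LHCb event sizes.

```python
# Rates and bandwidths quoted in the text; decimal units are an assumption.
READOUT_RATE_HZ = 30e6
READOUT_BANDWIDTH_BPS = 4e12      # 4 TB/s into the software trigger
HLT1_OUTPUT_RATE_HZ = 1e6
HLT2_OUTPUT_RATE_HZ = 20e3
DISK_BANDWIDTH_BPS = 10e9         # 10 GB/s written to disk


def reduction(rate_in_hz, rate_out_hz):
    """Factor by which a trigger stage reduces the event rate."""
    return rate_in_hz / rate_out_hz


def mean_bytes_per_event(bandwidth_bps, rate_hz):
    """Average data volume per event implied by a bandwidth and an event rate."""
    return bandwidth_bps / rate_hz


if __name__ == "__main__":
    hlt1 = reduction(READOUT_RATE_HZ, HLT1_OUTPUT_RATE_HZ)
    hlt2 = reduction(HLT1_OUTPUT_RATE_HZ, HLT2_OUTPUT_RATE_HZ)
    print(f"HLT1 rate reduction: {hlt1:.0f}x")
    print(f"HLT2 rate reduction: {hlt2:.0f}x")
    print(f"Overall rate reduction: {hlt1 * hlt2:.0f}x")

    raw_kb = mean_bytes_per_event(READOUT_BANDWIDTH_BPS, READOUT_RATE_HZ) / 1e3
    out_kb = mean_bytes_per_event(DISK_BANDWIDTH_BPS, HLT2_OUTPUT_RATE_HZ) / 1e3
    print(f"Mean volume per event at readout: {raw_kb:.0f} kB")
    print(f"Mean volume per event on disk: {out_kb:.0f} kB")
```

Taken at face value, the quoted numbers imply an overall rate reduction of 1500 and a bandwidth reduction of 400 between readout and disk.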

Away from the LHC, trigger requirements differ considerably. Contributions from other areas covered heavy-ion physics at Brookhaven National Laboratory’s Relativistic Heavy Ion Collider (RHIC), fixed-target physics at CERN and future experiments at the Facility for Antiproton and Ion Research at GSI Darmstadt and Brookhaven’s Electron–Ion Collider (EIC). NA62 at CERN and STAR at RHIC both use conventional trigger strategies to arrive at their final event samples. The forthcoming CBM experiment at FAIR and the ePIC experiment at the EIC deal with high intensities but aim for “triggerless” operation.

Requirements were reported to be even more diverse in astroparticle physics. The Pierre Auger Observatory combines local and global trigger decisions at three levels to manage the problem of trigger distribution and data collection over 3000 km² of fluorescence and Cherenkov detectors.

These diverse requirements will drive new approaches, which will continue to evolve as the experiments are finalised. The third edition of TDHEP suggests that innovation in this field is only set to accelerate.