Quarks and gluons are the only known elementary particles that cannot be observed in isolation. Once produced, they immediately start a cascade of radiation (the parton shower), followed by confinement, when the partons bind into (colour-neutral) hadrons. These hadrons form the jets that we observe in detectors. The different phases of jet formation can help physicists understand various aspects of quantum chromodynamics (QCD), from parton interactions to hadron interactions – including the confinement transition leading to hadron formation, which is particularly difficult to model. However, jet formation cannot be directly observed. Recently, theorists proposed that the footprints of jet formation are encoded in the energy and angular correlations of the final particles, which can be probed through a set of observables called energy correlators. For each set of N particles within a jet, these observables record the largest angular distance between any two of them (xL), weighted by the product of the particles' energy fractions.
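To make the definition concrete, the following is a minimal Python sketch of how such entries could be built for a single toy jet. It is not the CMS analysis code: the function names, the `(energy, eta, phi)` particle format, the use of ΔR = √(Δη² + Δφ²) as the angular distance, and the restriction to sets of distinct particles are all assumptions made for illustration.

```python
import math
from itertools import combinations

def delta_r(a, b):
    """Angular distance between two particles given as (energy, eta, phi).

    Using Delta R in the eta-phi plane is an assumption of this sketch.
    """
    d_eta = a[1] - b[1]
    # Wrap the azimuthal difference into [-pi, pi)
    d_phi = (a[2] - b[2] + math.pi) % (2 * math.pi) - math.pi
    return math.hypot(d_eta, d_phi)

def energy_correlator(particles, n):
    """Return (x_L, weight) entries for every set of n distinct particles.

    x_L is the largest pairwise angular distance within the set; the weight
    is the product of the particles' energy fractions within the jet.
    """
    total = sum(p[0] for p in particles)
    entries = []
    for group in combinations(particles, n):
        x_l = max(delta_r(a, b) for a, b in combinations(group, 2))
        weight = math.prod(p[0] / total for p in group)
        entries.append((x_l, weight))
    return entries

# Hypothetical three-particle jet: (energy in GeV, eta, phi)
toy_jet = [(50.0, 0.00, 0.00), (30.0, 0.10, 0.00), (20.0, 0.00, 0.30)]
e2c = energy_correlator(toy_jet, 2)  # two-particle entries
e3c = energy_correlator(toy_jet, 3)  # three-particle entries
```

Histogramming the weights as a function of xL over many jets would give distributions of the kind described here; a real measurement would also need detector corrections, which this sketch ignores.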

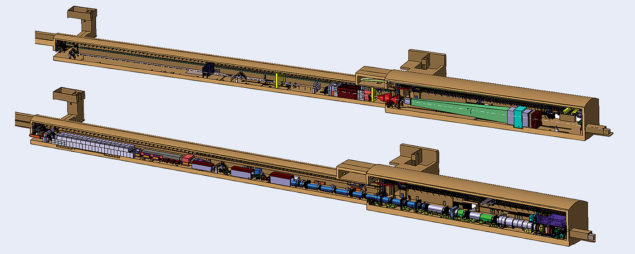

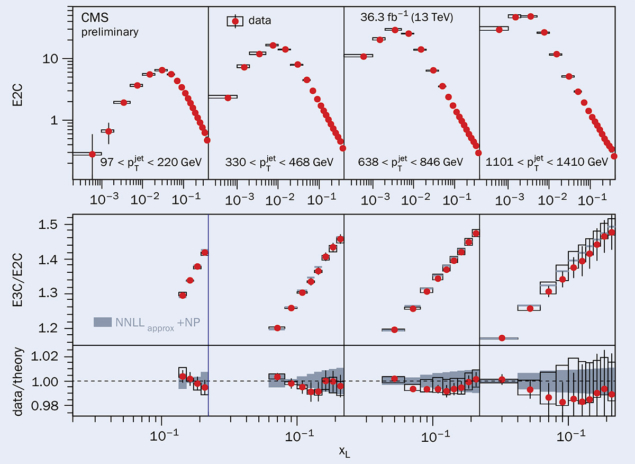

The CMS collaboration recently reported a measurement of the energy correlators between two (E2C) and three (E3C) particles inside a jet, using jets with transverse momentum (pT) in the 0.1–1.8 TeV range. Figure 1 (top) shows the measured E2C distribution. In each jet pT range, three scaling regions can be seen, corresponding to three stages in jet-formation evolution: parton shower, colour confinement and free hadrons (from right to left, that is, from large to small xL). The opposite E2C trends in the low and high xL regions indicate that the interactions between partons and those between hadrons are rather different; the intermediate region reflects the confinement transition from partons to hadrons.

Theorists have recently calculated the dynamics of the parton shower with unprecedented precision. Given the high precision of the calculations and of the measurements, the CMS team used the ratio of E3C to E2C, shown in figure 1 (bottom), to evaluate the strong coupling constant αS. Taking the ratio reduces the theoretical and experimental uncertainties, which makes it easier to distinguish the effects of αS variations from those of changes in quark–gluon composition. Since αS depends on the energy scale of the process under consideration, the measured value is quoted at the scale of the Z-boson mass: αS = 0.1229 with an uncertainty of 4%, dominated by theory uncertainties and by the jet-constituent energy-scale uncertainty. This value, which is consistent with the world average, represents the most precise measurement of αS using a method based on jet evolution.
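The scale dependence of αS can be illustrated with the textbook one-loop running formula, αS(Q) = αS(mZ) / [1 + b0 αS(mZ) ln(Q²/mZ²)], with b0 = (33 − 2nf)/(12π). The Python sketch below evolves the quoted value away from the Z-boson mass. It is only an illustration: the one-loop truncation, the fixed number of quark flavours (nf = 5) and the function name are assumptions of this sketch and not the treatment used in the CMS analysis.

```python
import math

M_Z = 91.1876        # Z-boson mass in GeV
ALPHA_S_MZ = 0.1229  # value quoted in the text, at the Z-boson mass

def alpha_s_one_loop(q, alpha_mz=ALPHA_S_MZ, n_f=5):
    """One-loop running of the strong coupling from m_Z to the scale q (GeV).

    Assumes a fixed number of active quark flavours n_f and ignores
    higher-order terms and flavour thresholds.
    """
    b0 = (33 - 2 * n_f) / (12 * math.pi)
    return alpha_mz / (1 + b0 * alpha_mz * math.log(q**2 / M_Z**2))

# The coupling decreases as the energy scale grows (asymptotic freedom)
for scale in (M_Z, 200.0, 1000.0):
    print(f"alpha_s({scale:.1f} GeV) = {alpha_s_one_loop(scale):.4f}")
```

Quoting every determination at the common reference scale mZ is what allows results obtained at very different jet energies to be compared with each other and with the world average.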