A major step toward shaping the future of European particle physics was reached on 2 October, with the release of the Physics Briefing Book of the 2026 update of the European Strategy for Particle Physics. Despite its 250 pages, it is a concise summary of the vast amount of work contained in the 266 written submissions to the strategy process and the deliberations of the Open Symposium in Venice in June (CERN Courier September/October 2025 p24).

The briefing book compiled by the Physics Preparatory Group is an impressive distillation of our current knowledge of particle physics, and a preview of the exciting prospects offered by future programmes. It provides the scientific basis for defining Europe’s long-term particle-physics priorities and determining the flagship collider that will best advance the field. To this end, it presents comparisons of the physics reach of the different candidate machines, which often have different strengths in probing new physics beyond the Standard Model (SM).

Condensing all this in a few sentences is difficult, though two messages are clear: if the next collider at CERN is an electron–positron collider, the exploration of new physics will proceed mainly through high-precision measurements; and the highest physics reach into the structure of physics beyond the SM via indirect searches will be provided by the combined exploration of the Higgs, electroweak and flavour domains.

Following a visionary outlook for the field from theory, the briefing book divides its exploration of the future of particle physics into seven sectors of fundamental physics and three technology pillars that underpin them.

1. Higgs and electroweak physics

In the new era that has dawned with the discovery of the Higgs boson, numerous fundamental questions remain, including whether the Higgs boson is an elementary scalar, part of an extended scalar sector, or even a portal to entirely new phenomena. The briefing book highlights how precision studies of the Higgs boson, the W and Z bosons, and the top quark will probe the SM to unprecedented accuracy, looking for indirect signs of new physics.

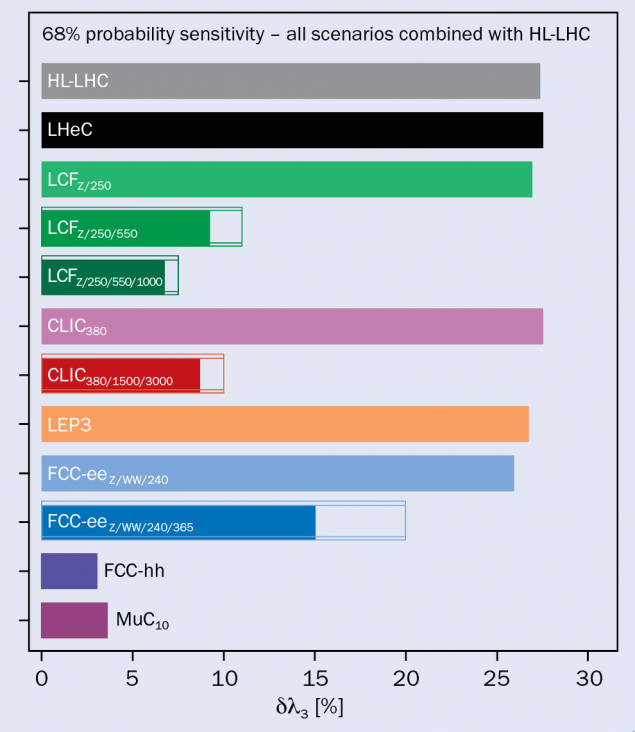

Addressing these questions requires highly precise measurements of the Higgs boson’s couplings, self-interaction and quantum corrections. While the High-Luminosity LHC (HL-LHC) will continue to improve several Higgs and electroweak measurements, the next qualitative leap in precision will be provided by future electron–positron colliders, such as the FCC-ee, the Linear Collider Facility (LCF), CLIC or LEP3. And while these would provide very important information, it would fall to an energy-frontier machine such as the FCC-hh or a muon collider to access potential heavy states. Using the absolute HZZ coupling from the FCC-ee, such machines would measure the single-Higgs-boson couplings with a precision better than 1%, and the Higgs self-coupling at the level of a few per cent (see “Higgs self-coupling” figure).

This anticipated leap in experimental precision will necessitate major advances in theory, simulation and detector technology. In the coming decades, electroweak physics and the Higgs boson in particular will remain a cornerstone of particle physics, linking the precision and energy frontiers in the search for deeper laws of nature.

2. Strong interaction physics

Precise knowledge of the strong interaction will be essential for understanding visible matter, exploring the SM with precision, and interpreting future discoveries at the energy frontier. Building upon advanced studies of QCD at the HL-LHC, future high-luminosity electron–positron colliders such as FCC-ee and LEP3 would, like LHeC, enable per-mille precision on the strong coupling constant, and a greatly improved understanding of the transition between the perturbative and non-perturbative regimes of QCD. The LHeC would bring increased precision on parton-distribution functions that would be very useful for many physics measurements at the FCC-hh. FCC-hh would itself open up a major new frontier for strong-interaction studies.

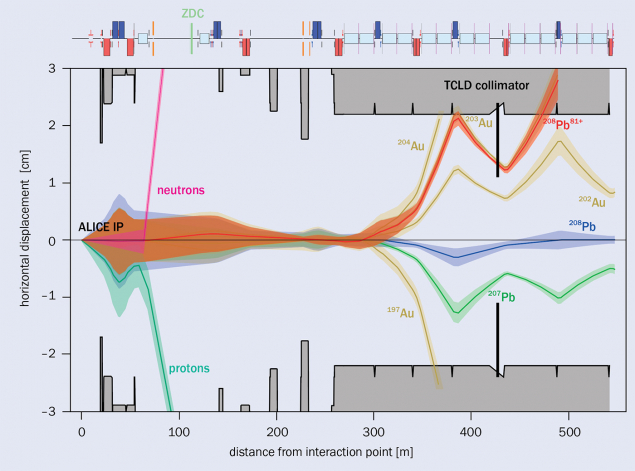

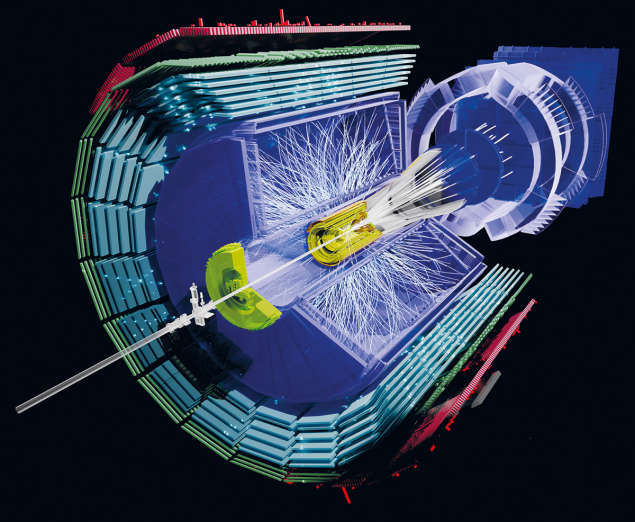

A deep understanding of the strong interaction also necessitates the study of strongly interacting matter under extreme conditions with heavy-ion collisions. ALICE and the other experiments at the LHC will continue to illuminate this physics, revealing insights into the early universe and the interiors of neutron stars.

3. Flavour physics

With high-precision measurements of quark and lepton processes, flavour studies test the SM at energy scales far above those directly accessible to colliders, thanks to their sensitivity to the effects of virtual particles in quantum loops. Small deviations from theoretical predictions could signal new interactions or particles influencing rare processes or CP-violating effects, making flavour physics one of the most sensitive paths toward discovering physics beyond the SM.

Global efforts are today led by the LHCb, ATLAS and CMS experiments at the LHC and by the Belle II experiment at SuperKEKB. These experiments have complementary strengths: huge data samples from proton–proton collisions at CERN and a clean environment in electron–positron collisions at KEK. Combining the two will provide powerful tests of lepton-flavour universality, searches for exotic decays and refinements in the understanding of hadronic effects.

The next major step in precision flavour physics would require “tera-Z” samples of a trillion Z bosons from a high-luminosity electron–positron collider such as the FCC-ee, alongside a spectrum of focused experimental initiatives at a more modest scale.

4. Neutrino physics

Neutrino physics addresses open fundamental questions related to neutrino masses and their deep connections to the matter–antimatter asymmetry in the universe and its cosmic evolution. Upcoming experiments including long-baseline accelerator-neutrino experiments (DUNE and Hyper-Kamiokande), reactor experiments such as JUNO (see “JUNO takes aim at neutrino-mass hierarchy”) and astroparticle observatories (KM3NeT and IceCube, see also CERN Courier May/June 2025 p23) will likely unravel the neutrino mass hierarchy and discover leptonic CP violation.

In parallel, the hunt for neutrinoless-double-beta decay continues. A signal would indicate that neutrinos are Majorana fermions, which would be indisputable evidence for new physics! Such efforts extend the reach of particle physics beyond accelerators and deepen connections between disciplines. Efforts to determine the absolute mass of neutrinos are also very important.

The chapter highlights the growing synergy between neutrino experiments and collider, astrophysical and cosmological studies, as well as the pivotal role of theory developments. Precision measurements of neutrino interactions provide crucial support for oscillation measurements, and for nuclear and astroparticle physics. New facilities at accelerators explore neutrino scattering at higher energies, while advances in detector technologies have enabled the measurement of coherent neutrino scattering, opening new opportunities for new physics searches. Neutrino physics is a truly global enterprise, with strong European participation and a pivotal role for the CERN neutrino platform.

5. Cosmic messengers

Astroparticle physics and cosmology increasingly provide new and complementary information to laboratory particle-physics experiments in addressing fundamental questions about the universe. A rich set of recent achievements in these fields includes high-precision measurements of cosmological perturbations in the cosmic microwave background (CMB) and in galaxy surveys, a first measurement of an extragalactic neutrino flux, accurate antimatter fluxes and the discovery of gravitational waves (GWs).

Leveraging information from these experiments has given rise to the field of multi-messenger astronomy. The next generation of instruments, from neutrino telescopes to ground- and space-based CMB and GW observatories, promises exciting results with important clues for particle physics.

6. Beyond the Standard Model

The landscape for physics beyond the SM is vast, calling for an extended exploration effort with exciting prospects for discovery. It encompasses new scalar or gauge sectors, supersymmetry, compositeness, extra dimensions and dark-sector extensions that connect visible and invisible matter.

Many of these models predict new particles or deviations from SM couplings that would be accessible to next-generation accelerators. The briefing book shows that future electron–positron colliders such as FCC-ee, CLIC, LCF and LEP3 have sensitivity to the indirect effects of new physics through precision Higgs, electroweak and flavour measurements. With their per-mille precision measurements, electron–positron colliders will be essential tools for revealing the virtual effects of heavy new physics beyond the direct reach of colliders. In direct searches, CLIC would extend the energy frontier to 1.5 TeV, whereas FCC-hh would extend it to tens of TeV, potentially enabling the direct observation of new physics such as new gauge bosons, supersymmetric particles and heavy scalar partners. A muon collider would combine precision and energy reach, offering a compact high-energy platform for direct and indirect discovery.

This chapter of the briefing book underscores the complementarity between collider and non-collider experiments. Low-energy precision experiments, searches for electric dipole moments, rare decays and axion or dark-photon experiments probe new interactions at extremely small couplings, while astrophysical and cosmological observations constrain new physics over sprawling mass scales.

7. Dark matter and the dark sector

The nature of dark matter, and the dark sector more generally, remains one of the deepest mysteries in modern physics. A broad range of masses and interaction strengths must be explored, encompassing numerous potential dark-matter phenomenologies, from ultralight axions and hidden photons to weakly interacting massive particles, sterile neutrinos and heavy composite states. The theory space of the dark sector is just as crowded, with models involving new forces or “portals” that link visible and invisible matter.

As no single experimental technique can cover all possibilities, progress will rely on exploiting the complementarity between collider experiments, direct and indirect searches for dark matter, and cosmological observations. Diversity is the key aspect of this developing experimental programme!

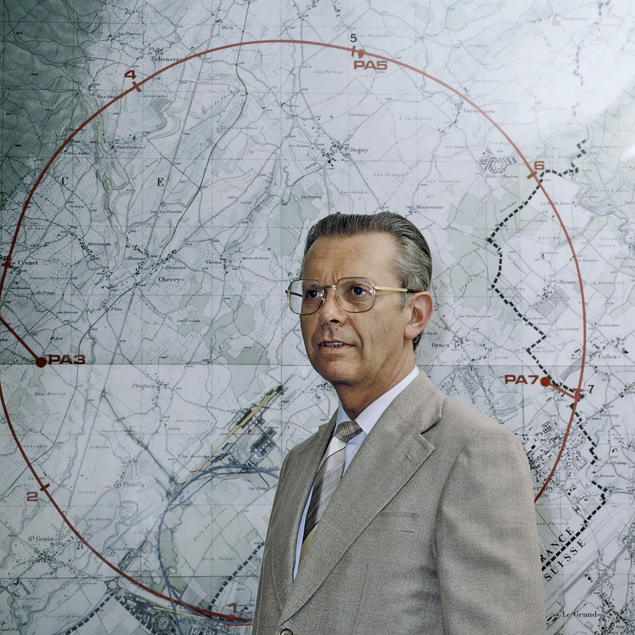

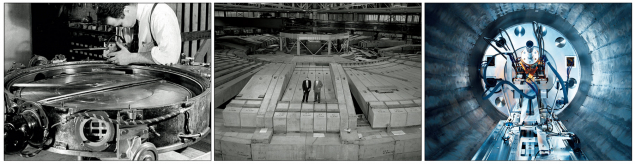

8. Accelerator science and technology

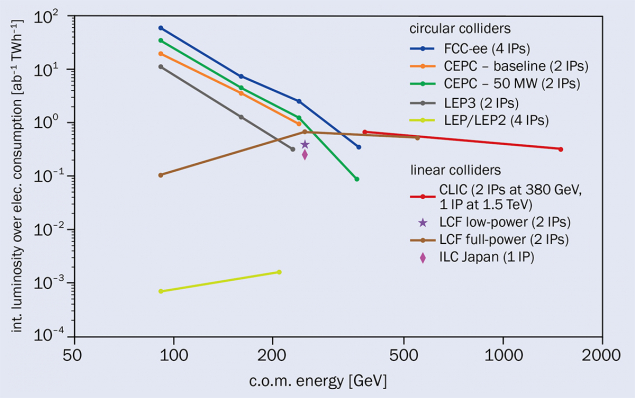

The briefing book considers the potential paths to higher energies and luminosities offered by each proposal for CERN’s next flagship project: the two circular colliders FCC-ee and FCC-hh, the two linear colliders LCF and CLIC, and a muon collider; LEP3 and LHeC are also considered as colliders that could potentially offer a physics programme to bridge the time between the HL-LHC and the next high-energy flagship collider. The technical readiness, cost and timeline of each collider are summarised, alongside their environmental impact and energy efficiency (see “Energy efficiency” figure).

The two main development fronts in this technology pillar are high-field magnets and efficient radio-frequency (RF) cavities. High-field superconducting magnets are essential for the FCC-hh, while high-temperature superconducting magnet technology, which presents unique opportunities and challenges, might be relevant to the FCC-hh as a second-stage machine after the FCC-ee. Efficient RF systems are required by all accelerators (CERN Courier May/June 2025 p30). Research and development (R&D) on advanced acceleration concepts, such as plasma-wakefield acceleration and muon colliders, also holds much promise, but significant work is needed before these concepts can offer a viable solution for a future collider.

Preserving Europe’s leadership in accelerator science and technology requires a broad and extensive programme of work with continuous support for accelerator laboratories and test facilities. Such investments will continue to be very important for applications in medicine, materials science and industry.

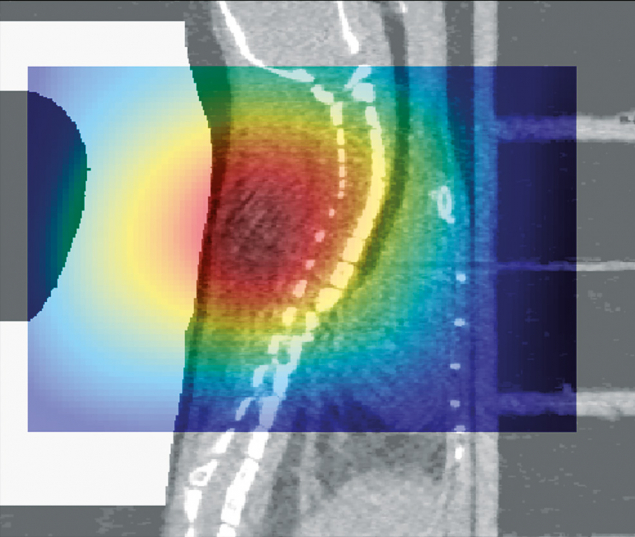

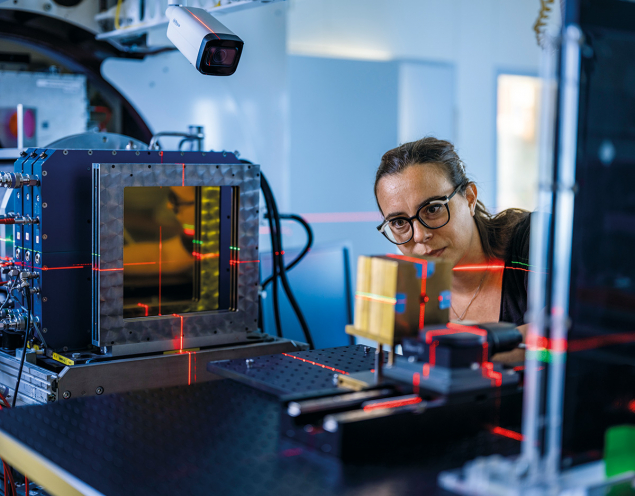

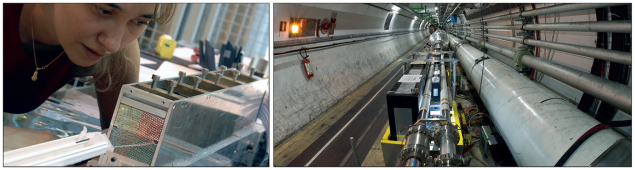

9. Detector instrumentation

A wealth of lessons learned from the LHC and HL-LHC experiments are guiding the development of the next generation of detectors, which must have higher granularity, and – for a hadron collider – a higher radiation tolerance, alongside improved timing resolution and data throughput.

As the eyes through which we observe collisions at accelerators, detectors require a coherent and long-term R&D programme. Central to these developments will be the detector R&D collaborations, which have provided a structured framework for organising and steering the work since the previous update to the European Strategy for Particle Physics. These span the full spectrum of detector systems, with high-rate gaseous detectors, liquid detectors and high-performance silicon sensors for precision timing, precision particle identification, low-mass tracking and advanced calorimetry.

All these detectors will also require advances in readout electronics, trigger systems and real-time data processing. A major new element is the growing role of AI and quantum sensing, both of which already offer innovative methods for analysis, optimisation and detector design (CERN Courier July/August 2025 p31). As in computing, there are high hopes and well-founded expectations that these technologies will transform detector design and operation.

To maintain Europe’s leadership in instrumentation, sustained investment in test-beam infrastructures and engineering is essential. This supports a mutually beneficial symbiosis with industry. Detector R&D is a portal to sectors as diverse as medical diagnostics and space exploration, providing essential tools such as imaging technologies, fast electronics and radiation-hard sensors for a wide range of applications.

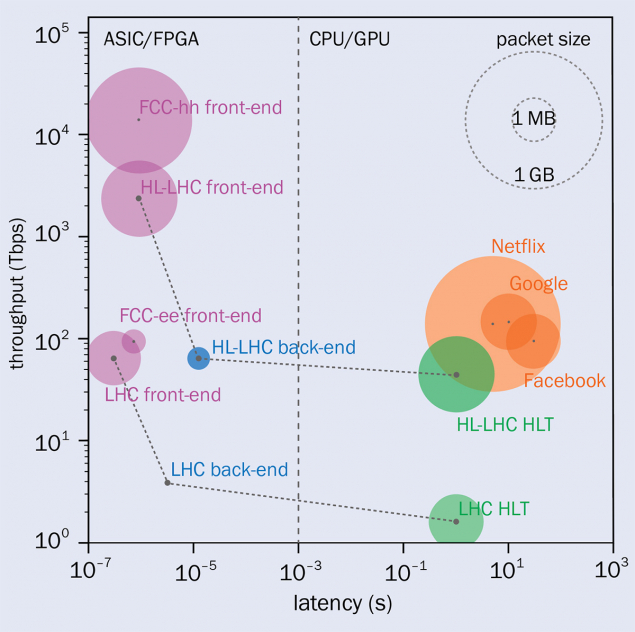

10. Computing

If detectors are the eyes that explore nature, computing is the brain that deciphers the signals they receive. The briefing book pays much attention to the major leaps in computation and storage that are required by future experiments, with simulation, data management and processing at the top of the list (see “Data challenge” figure). Less demanding in resources, but equally demanding of further development, is data analysis. Planning for these new systems is guided by sustainable computing practices, including energy-efficient software and data centres. The next frontier is the HL-LHC, which will be the testing ground and the basis for future development, and serves as an example for the preservation of the current wealth of experimental data and software (CERN Courier September/October 2025 p41).

Several paradigm shifts hold great promise for the future of computing in high-energy physics. Heterogeneous computing integrates CPUs, GPUs and accelerators, providing hugely increased capabilities and better scaling than traditional CPU usage. Machine learning is already being deployed in event simulation, reconstruction and even triggering, and the first signs from quantum computing are very positive. The combination of AI with quantum technology promises a revolution in all aspects of software and of the development, deployment and usage of computing systems.

Some closing remarks

Beyond detailed physics summaries, two overarching issues appear throughout the briefing book.

First, progress will depend on a sustained interplay between experiment, theory and advances in accelerators, instrumentation and computing. The need for continued theoretical development is as pertinent as ever, as improved calculations will be critical for extracting the full physics potential of future experiments.

Second, all this work relies on people – the true driving force behind scientific programmes. There is an urgent need for academia and research institutions to attract and support experts in accelerator technologies, instrumentation and computing by offering long-term career paths. A lasting commitment to training the new generation of physicists who will carry out these exciting research programmes is equally important.

Revisiting the briefing book to craft the current summary brought home very clearly just how far the field of particle physics has come – and, more importantly, how much more there is to explore in nature. The best is yet to come!