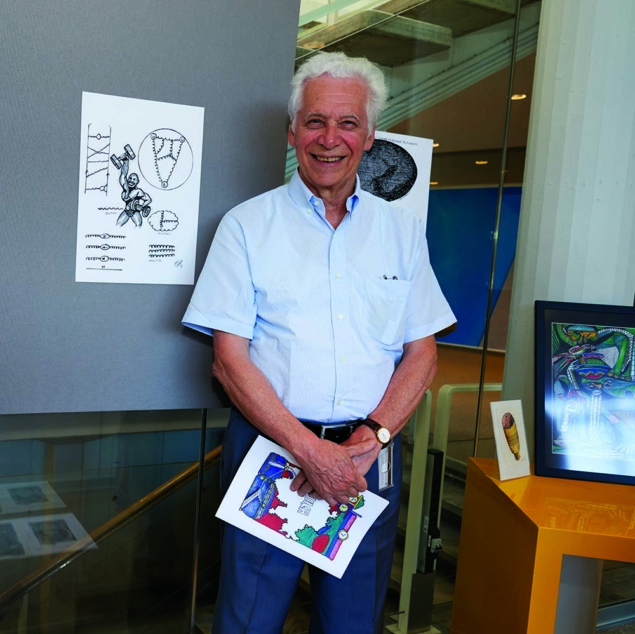

In the summer of 1968, while a visitor in CERN’s theory division, Gabriele Veneziano wrote a paper titled “Construction of a crossing-symmetric, Regge-behaved amplitude for linearly rising trajectories”. He was trying to explain the strong interaction, but his paper wound up marking the beginning of string theory.

What led you to the 1968 paper for which you are most famous?

In the mid-1960s we theorists were stuck in trying to understand the strong interaction. We had an example of a relativistic quantum theory that worked: QED, the theory of interacting electrons and photons, but it looked hopeless to copy that framework for the strong interactions. One reason was the strength of the strong coupling compared to the electromagnetic one. But even more disturbing was that there were so many (and ever growing in number) different species of hadrons that we felt at a loss with field theory – how could we cope with so many different states in a QED-like framework? We now know how to do it and the solution is called quantum chromodynamics (QCD).

But things weren’t so clear back then. The highly non-trivial jump from QED to QCD meant having the guts to write a theory for entities (quarks) that nobody had ever seen experimentally. No one was ready for such a logical jump, so we tried something else: an S-matrix approach. The S-matrix, which relates the initial and final states of a quantum-mechanical process, allows one to directly calculate the probabilities of scattering processes without solving a quantum field theory such as QED. This is why it looked more promising. It also looked very conventional but eventually led to something even more revolutionary than QCD – the idea that hadrons are actually strings.

Is it true that your “eureka” moment was when you came across the Euler beta function in a textbook?

Not at all! I was taking a bottom-up approach to understand the strong interaction. The basic idea was to impose on the S-matrix a property now known as Dolen–Horn–Schmid (DHS) duality. It relates two apparently distinct processes contributing to an elementary reaction, say a+b → c+d. In one process, a+b fuse to form a metastable state (a resonance) which, after a characteristic lifetime, decays into c+d. In the other process the pair a+c exchanges a virtual particle with the pair b+d. In QED these two processes have to be added because they correspond to two distinct Feynman diagrams, while, according to DHS duality, each one provides, for strong interactions, the whole story. I’d heard about DHS duality from Murray Gell-Mann at the Erice summer school in 1967, where he said that DHS would lead to a “cheap bootstrap” for the strong interaction. Hearing this from a great physicist motivated me enormously. I was in the middle of my PhD studies at the Weizmann Institute in Israel. Back there in the fall, a collaboration of four people was formed. It consisted of Marco Ademollo, on leave at Harvard from Florence, and of Hector Rubinstein, Miguel Virasoro and myself at the Weizmann Institute. We worked intensively for eight to nine months trying to solve the (apparently not so) cheap bootstrap for a particularly convenient reaction. We got very encouraging results hinting, I felt, at the existence of a simple exact solution. That solution turned out to be the Euler beta function.
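For readers who want the formula behind this answer, a commonly quoted form of the resulting amplitude is sketched below. The notation is an assumption added here, not taken from the interview: s and t are the usual Mandelstam variables, α(s) is a linear Regge trajectory with intercept α(0) and slope α′, and the arguments of the beta function may be shifted by integers depending on the reaction considered.

```latex
A(s,t) \;=\; B\bigl(-\alpha(s),\,-\alpha(t)\bigr)
       \;=\; \frac{\Gamma\bigl(-\alpha(s)\bigr)\,\Gamma\bigl(-\alpha(t)\bigr)}
                  {\Gamma\bigl(-\alpha(s)-\alpha(t)\bigr)},
\qquad
\alpha(s) \;=\; \alpha(0) + \alpha' s .
```

The gamma functions in the numerator have poles whenever α(s) or α(t) equals a non-negative integer, which is how the resonances appear. The expression is symmetric under the exchange of s and t, so the resonance description and the exchange description come from one and the same function.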

But the 1968 paper was authored by you alone?

Indeed. The preparatory work done by the four of us had a crucial role, but the discovery that the Euler beta function was an exact realisation of DHS duality was just my own. It was around mid-June 1968, just days before I had to take a boat from Haifa to Venice and then continue to CERN where I would spend the month of July. By that time the group of four was already dispersing (Rubinstein on his way to NYU, Virasoro to Madison, Wisconsin via Argentina, Ademollo back to Florence before a second year at Harvard). I kept working on it by myself, first on the boat, then at CERN until the end of July when, encouraged by Sergio Fubini, I decided to send the preprint to the journal Il Nuovo Cimento.
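The sense in which the beta function realises DHS duality can be checked numerically. The sketch below is an illustration added here, not code or notation from the 1968 paper: the function names `beta` and `pole_sum` are hypothetical, and x and y stand in for −α(s) and −α(t). It relies on the standard series B(x, y) = Σₙ (1−y)ₙ / (n! (x+n)), which is a sum over the poles in x alone and converges for y > 0.

```python
import math


def beta(x, y):
    """Euler beta function B(x, y) = Gamma(x) Gamma(y) / Gamma(x + y)."""
    return math.gamma(x) * math.gamma(y) / math.gamma(x + y)


def pole_sum(x, y, n_terms):
    """Partial sum over the poles in the first argument.

    Implements sum_{n=0}^{n_terms-1} (1 - y)_n / (n! * (x + n)),
    where (1 - y)_n is the rising factorial. Converges for y > 0.
    """
    total = 0.0
    coeff = 1.0  # (1 - y)_n / n!, starting at n = 0
    for n in range(n_terms):
        total += coeff / (x + n)
        coeff *= (n + 1 - y) / (n + 1)
    return total


if __name__ == "__main__":
    # Illustrative values with x > 0 and y > 0, so both expansions converge.
    x, y = 1.7, 2.5
    print("closed form        :", beta(x, y))
    print("sum over x poles   :", pole_sum(x, y, 20000))
    print("sum over y poles   :", pole_sum(y, x, 20000))
```

Each of the two sums reproduces the full closed form by itself, rather than the two having to be added together as they would be for two distinct Feynman diagrams.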

Was the significance of the result already clear?

Well, the formula had many desirable features, but the reaction of the physics community came to me as a shock. As soon as I had submitted the paper I went on vacation for about four weeks in Italy and did not think much about it. At the end of August 1968, I attended the Vienna conference – one of the biennial Rochester-conference series – and found out, to my surprise, that the paper was already widely known and got mentioned in several summary talks. I had sent the preprint as a contribution and was invited to give a parallel-session talk about it. Curiously, I have no recollection of that event, but my wife remembers me telling her about it. There was even a witness, the late David Olive, who wrote that listening to my talk changed his life. It was an instant hit, because the model answered several questions at once, but it was not at all apparent then that it had anything to do with strings, not to mention quantum gravity.

When was the link to “string theory” made?

The first hints that a physical model for hadrons could underlie my mathematical proposal came after the latter had been properly generalised (to processes involving an arbitrary number of colliding particles) and the whole spectrum of hadrons it implied was unraveled (by Fubini and myself and, independently, by Korkut Bardakci and Stanley Mandelstam). It came out, surprisingly, to closely resemble the exponentially growing (with mass) spectrum postulated almost a decade earlier by CERN theorist Rolf Hagedorn and, at least naively, it implied an absolute upper limit on temperature (the so-called Hagedorn temperature).
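Why an exponentially growing spectrum suggests a limiting temperature can be seen in a schematic estimate. The symbols below are assumptions introduced here for illustration: ρ(m) is the number of states per unit mass, a is a model-dependent exponent, T_H is the Hagedorn temperature, and units are chosen so that Boltzmann’s constant equals one.

```latex
\rho(m) \;\sim\; m^{-a}\, e^{\,m/T_H},
\qquad
Z(T) \;\sim\; \int^{\infty} \! dm \; \rho(m)\, e^{-m/T}
      \;=\; \int^{\infty} \! dm \; m^{-a}\,
            \exp\!\left[\, m \left( \frac{1}{T_H} - \frac{1}{T} \right) \right].
```

For T > T_H the exponent is positive and the integral diverges, which is the naive origin of the upper limit on temperature mentioned above.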

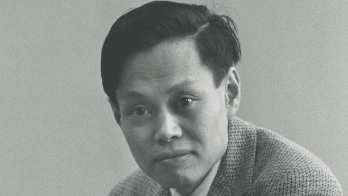

The spectrum coincides with that of an infinite set of harmonic oscillators and thus resembles the spectrum of a quantised vibrating string with its infinite number of higher harmonics. Holger Nielsen and Lenny Susskind independently suggested a string (or a rubber-band) picture. But, as usual, the devil was in the details. Around the end of the decade Yoichiro Nambu (and independently Goto) gave the first correct definition of a classical relativistic string, but it took until 1973 for Goddard, Goldstone, Rebbi and Thorn to prove that the correct application of quantum mechanics to the Nambu–Goto string reproduced exactly the above-mentioned generalisation of my original work. This also included certain consistency conditions that had already been found, most notably the existence of a massless spin-1 state (by Virasoro) and the need for extra spatial dimensions (from Lovelace’s work). At that point it became clear that the original model had a clear physical interpretation of hadrons being quantised strings. Some details were obviously wrong: one of the most striking features of strong interactions is their short-range nature, while a massless state produces long-range interactions. The model being inconsistent for three spatial dimensions (our world!) was also embarrassing, but people kept hoping.

So string theory was discovered by accident?

Not really. Qualitatively speaking, however, having found that hadrons are strings was no small achievement for those days. It was not precisely the string we now associate with quark confinement in QCD. Indeed the latter is so complicated that only the most powerful computers could shed some light on it many decades later. A posteriori, the fact that by looking at hadronic phenomena we were driven into discovering string theory was neither a coincidence nor an accident.

When was it clear that strings offer a consistent quantum-gravity theory?

This very bold idea came as early as 1974 from a paper by Joel Scherk and John Schwarz. Confronted with the fact that the massless spin-1 string state refused to become massive (there is no Brout–Englert–Higgs mechanism at hand in string theory!) and that even a massless spin-2 state had to be part of the string spectrum, they argued that those states should be identified with the photon and the graviton, i.e. with the carriers of electromagnetic and gravitational interactions, respectively. Other spin-1 particles could be associated with the gluons of QCD or with the W and Z bosons of the weak interaction. String theory would then become a theory of all interactions, at a deeper, more microscopic level. The characteristic scale of the hadronic string (~10⁻¹³ cm) had to be reduced by 20 orders of magnitude (~10⁻³³ cm, the famous Planck length) to describe the quarks themselves, the electron, the muon and the neutrinos, in fact every elementary particle, as a string.
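The 20 orders of magnitude can be checked with a few lines of arithmetic. The sketch below is an illustration added here: it assumes the standard definition of the Planck length, √(ħG/c³), uses rounded CODATA values for the constants, and takes the hadronic scale quoted in the answer above.

```python
import math

# Physical constants in SI units (rounded CODATA values).
HBAR = 1.054571817e-34  # reduced Planck constant, J s
G = 6.67430e-11         # Newton's constant, m^3 kg^-1 s^-2
C = 2.99792458e8        # speed of light, m/s

# Planck length sqrt(hbar * G / c^3), converted from metres to centimetres.
planck_length_cm = math.sqrt(HBAR * G / C**3) * 100.0

# Characteristic hadronic string scale quoted in the interview.
hadronic_scale_cm = 1e-13

# Number of orders of magnitude separating the two scales.
orders = math.log10(hadronic_scale_cm / planck_length_cm)

if __name__ == "__main__":
    print(f"Planck length: {planck_length_cm:.3e} cm")
    print(f"Orders of magnitude between the scales: {orders:.1f}")
```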

In addition, it turned out that a serious shortcoming of the old string (namely its “softness”, meaning that string–string collisions cannot produce events with large deflection angles) was a big plus for the Scherk–Schwarz proposal. While the data were showing that hard hadron collisions were occurring at substantial rates, in agreement with QCD predictions, the softness of string theory could free quantum gravity from its problematic ultraviolet divergences – the main obstacle to formulating a consistent quantum-gravity theory.

Did you then divert your attention to string theory?

Not immediately. I was still interested in understanding the strong interactions and worked on several aspects of perturbative and non-perturbative QCD and their supersymmetric generalisations. Most people stayed away from string theory during the 1974–1984 decade. Remember that the Standard Model had just come to life and there was so much to do in order to extract its predictions and test it. I returned to string theory after the Green–Schwarz revolution in 1984. They had discovered a way to reconcile string theory with another fact of nature: the parity violation of weak interactions. This breakthrough put string theory in the spotlight again and since then the number of string-theory aficionados has been steadily growing, particularly within the younger part of the theory community. Several revolutions have followed since then, associated with the names of Witten, Polchinski, Maldacena and many others. It would take too long to do justice to all these beautiful developments. Personally, and very early on, I got interested in applying the new string theory to primordial cosmology.

Was your 1991 paper the first to link string theory with cosmology?

I think there was at least one already, a model by Brandenberger and Vafa trying to explain why our universe has only three large spatial dimensions, but it was certainly among the very first. In 1991, I (and independently Arkadi Tseytlin) realised that the string-cosmology equations, unlike Einstein’s, admit a symmetry (also called, alas, duality!) that connects a decelerating expansion to an accelerating one. That, I thought, could be a natural way to obtain in string theory an inflationary cosmology, an idea already known since the 1980s, without invoking an ad-hoc “inflaton” particle.

The problem was that the decelerating solution had, superficially, a Big Bang singularity in its past, while the (dual) accelerating solution had a singularity in the future. But this was only the case if one neglected effects related to the finite size of the string. Many hints, including the already mentioned upper limit on temperature, suggested that Big Bang-like singularities are not really there in string theory. If so, the two duality-related solutions could be smoothly connected to provide what I dubbed a “pre-Big Bang scenario” characterised by the lack of a beginning of time. I think that the model (further developed with Maurizio Gasperini and by many others) is still alive, at least as long as a primordial B-mode polarisation is not discovered in the cosmic microwave background, since it is predicted to be insignificant in this cosmology.

Did you study other aspects of the new incarnation of string theory?

A second line of string-related research, which I have followed since 1987, concerns the study of thought experiments to understand what string theory can teach us about quantum gravity in the spirit of what people did in the early days of quantum mechanics. In particular, with Daniele Amati and Marcello Ciafaloni first, and then also with many others, I have studied string collisions at trans-Planckian energies (> 10¹⁹ GeV) that cannot be reached in human-made accelerators but could have existed in the early universe. I am still working on it. One outcome of that study, which became quite popular, is a generalisation of Heisenberg’s uncertainty principle implying a minimal value of Δx of the order of the string size.
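A schematic version of such a generalised uncertainty relation shows where the minimal length comes from. The form below is a sketch under stated assumptions: ℓ_s denotes the string length, and numerical coefficients of order one are dropped, so only the scaling should be trusted.

```latex
\Delta x \;\gtrsim\; \frac{\hbar}{\Delta p} \;+\; \frac{\ell_s^{2}}{\hbar}\,\Delta p ,
\qquad
\frac{d(\Delta x)}{d(\Delta p)} = 0
\;\;\Rightarrow\;\;
\Delta p = \frac{\hbar}{\ell_s},
\qquad
\Delta x_{\min} \;\sim\; 2\,\ell_s .
```

The first term is the usual Heisenberg contribution, which shrinks as the momentum spread grows. The second term grows with the momentum spread, so probing with ever higher energies stops improving the resolution once Δx is of the order of the string size.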

50 years on, is the theory any closer to describing reality?

People say that string theory doesn’t make predictions, but that’s simply not true. It predicts the dimensionality of space (it is the only theory so far to do so), and it also predicts, at tree level (the lowest level of approximation for a quantum-relativistic theory), a whole lot of massless scalars that threaten the equivalence principle (the universality of free-fall), which is by now very well tested. If we could trust this tree-level prediction, string theory would be already falsified. But the same would be true of QCD, since at tree level it implies the existence of free quarks. In other words: the new string theory, just like the old one, can be falsified by large-distance experiments provided we can trust the level of approximation at which it is solved. On the other hand, in order to test string theory at short distance, the best way is through cosmology. Around (i.e. at, before, or soon after) the Big Bang, string theory may have left its imprint on the early universe, and the subsequent expansion can bring that imprint to macroscopic scales today.

What do you make of the ongoing debate on the scientific viability of the landscape, or “swamp”, of string-theory solutions?

I am not an expert on this subject but I recently heard (at the Strings 2018 conference in Okinawa, Japan) a talk on the subject by Cumrun Vafa claiming that the KKLT solution [which seeks to account for the anomalously small value of the vacuum energy, as proposed in 2003 by Kachru, Kallosh, Linde and Trivedi] is in the swampland, meaning it’s not viable at a fundamental quantum-gravity level. It was followed by a heated discussion and I cannot judge who is right. I can only add that the absence of a metastable de Sitter vacuum would favour quintessence models of the kind I investigated with Thibault Damour several years ago and that could imply interestingly small (but perhaps detectable) violations of the equivalence principle.

What’s the perception of strings from outside the community?

Some of the popular coverage of string theory in recent years has been rather superficial. When people say string theory can’t be proved, it is unfair. The usual argument is that you need inconceivably high energies. But, as I have already said, the new incarnation of string theory can be falsified just like its predecessor was; it soon became very clear that QCD was a better theory. Perhaps the same will happen to today’s string theory, but I don’t think there are serious alternatives at the moment. Clearly the enthusiasm of young people is still there. The field is atypically young – the average age of attendees of a string-theory conference is much lower than that for, say, a QCD or electroweak physics conference. What is motivating young theorists? Perhaps the mathematical beauty of string theory, or perhaps the possibility of carrying out many different calculations, publishing them and getting lots of citations.

What advice do you offer young theorists entering the field?

I myself regret that most young string theorists do not address the outstanding physics questions in quantum gravity, such as the fate of the initial singularity of classical cosmology in string theory. These are very hard problems and young people these days cannot afford to spend a couple of years on one such problem without getting out a few papers. When I was young I didn’t care about fashions; I just followed my nose and took risks that eventually paid off. Today it is much harder to do so.

How has theoretical particle physics changed since 1968?

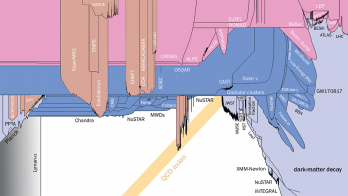

In 1968 we had a lot of data to explain and no good theory for the weak and strong interactions. There was a lot to do and within a few years the Standard Model was built. Today we still have essentially the same Standard Model and we are still waiting for some crisis to come out of the beautiful experiments at CERN and elsewhere. Steven Weinberg used to say that physics thrives on crises. The crises today are more in the domain of cosmology (dark matter, dark energy), the quantum mechanics of black holes and really unifying our understanding of physics at all scales, from the Planck length to our cosmological horizon, two scales that are 60 orders of magnitude apart. Understanding such a hierarchy (together with the much smaller one of the Standard Model) represents, in my opinion, the biggest theoretical challenge for 21st century physics.