How the collaboration responded to 21st-century challenges.

The ALICE software environment (AliRoot) first saw light in 1998, at a time when computing in high-energy physics was facing a challenging task. A community of several thousand users and developers had to be converted from a procedural language (FORTRAN) that had been in use for 40 years to a comparatively new object-oriented language (C++) with which there was no previous experience. Coupled to this was the transition from loosely connected computer centres to a highly integrated Grid system. Again, this involved a risky but unavoidable evolution away from a well-known model in which, for experiments at CERN for example, most of the computing was done at CERN and analysis was performed at regional computer centres, towards a highly integrated system based on the Grid “vision”, for which neither experience nor tools were available.

In the ALICE experiment, we had a small offline team that was concentrated at CERN. The effect of having this small, localized team was to favour pragmatic solutions that did not require a long planning and development phase and that would, at the same time, give maximum attention to automation of the operations. So, on the one hand we concentrated on “taking what is there and works”, so as to provide the physicists quickly with the tools they needed, while on the other we devoted attention to ensuring that the solutions we adopted would lend themselves to resilient hands-off operation and would evolve with time. We could not afford to develop “temporary” solutions, yet we still had to deliver quickly and develop the software incrementally in ways that would involve no major rewrites.

The rise of AliRoot

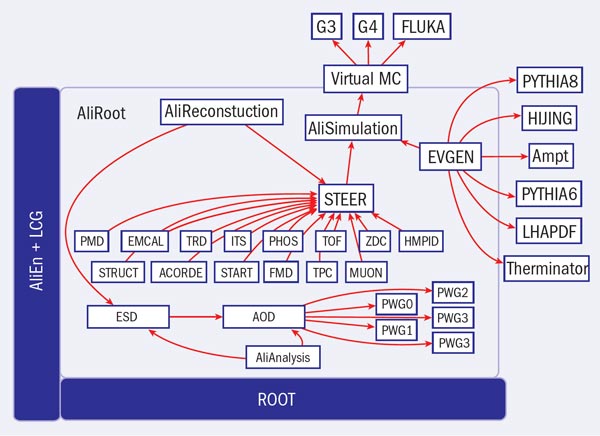

When development of the current ALICE computing infrastructure started, the collaboration decided to make an immediate transition to C++ for its production environment. This meant the use of existing and proven elements. For the detector simulation package, the choice fell on GEANT3, appropriately “wrapped” into a C++ “class”, together with ROOT, the C++ framework for data manipulation and analysis that René Brun and his team developed for the LHC experiments. This led to a complete, albeit embryonic, framework that could be used for the experiment’s detector-performance reports. AliRoot was born.

The initial design was exceedingly simple. There was no insulation layer between AliRoot and ROOT; no software-management layer beyond a software repository accessible to the whole ALICE collaboration; and only a single executable for simulation, calibration, reconstruction and analysis. The software was delivered in a single package, which just needed GEANT3 and ROOT to be operational.

To allow the code to evolve, we relied heavily on virtual interfaces that insulated the steering part of the framework from the code of the 18 ALICE subdetectors and the event generators. This proved to be a useful choice because it made the addition of new event generators, and even of new detectors, easy and seamless.
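The insulation provided by virtual interfaces can be illustrated with a minimal sketch. AliRoot itself is written in C++; the Python version below uses abstract base classes as a stand-in for C++ virtual classes, and every name in it (`Module`, `ToyTracker`, `ToyCalorimeter`, `Steering`) is hypothetical and not taken from the AliRoot code.

```python
from abc import ABC, abstractmethod


class Module(ABC):
    """Hypothetical abstract interface that every subdetector implements."""

    @abstractmethod
    def name(self):
        """Return a short identifier for the subdetector."""

    @abstractmethod
    def process(self, event):
        """Return this subdetector's contribution for one event."""


class ToyTracker(Module):
    def name(self):
        return "tracker"

    def process(self, event):
        # Illustrative only: count the particles in the event.
        return {"n_hits": len(event["particles"])}


class ToyCalorimeter(Module):
    def name(self):
        return "calorimeter"

    def process(self, event):
        # Illustrative only: sum the particle energies.
        return {"energy": sum(p["energy"] for p in event["particles"])}


class Steering:
    """Steering code that knows only the abstract Module interface."""

    def __init__(self):
        self._modules = []

    def add_module(self, module):
        self._modules.append(module)

    def run(self, event):
        # The loop never refers to a concrete subdetector class.
        return {m.name(): m.process(event) for m in self._modules}
```

In this sketch, adding a new detector means writing one more `Module` subclass and registering it with `add_module`; the `Steering` class is left untouched.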

To protect the users’ simulation code (geometry description, scoring and signal generation) and to ease the transition from GEANT3 to GEANT4, we also developed a “virtual interface” to the Monte Carlo simulator, which allowed us to reuse the ALICE simulation code with other detector-simulation packages. The pressure from the users, who relied on AliRoot as their only working tool, prompted us to adopt an “agile” working style, with frequent releases and “merciless” refactoring of the code whenever needed. In open-source jargon we were working in a “bazaar style”, guided by the users’ feedback and requirements, as opposed to the “cathedral style” process where the code is restricted to an elite group of developers between major releases. The difficulty of working with a rapidly evolving system, while balancing a rapid response to the users’ needs against long-term evolution and stability, was largely offset by the flexibility and robustness of a simple design, as well as by the consistency of a single development line in which the users’ investment in code and algorithms has been preserved over more than a decade.

The design of the analysis framework also relied directly on the facilities provided by the ROOT framework. We used ROOT tasks to implement the so-called “analysis train”, where one event is read into memory and then passed to the different analysis tasks, which are linked like the wagons of a train. Virtuality with respect to the data is achieved via “readers” that can accept different kinds of input and take care of the format conversion. In ALICE we have two analysis objects: the event summary data (ESD) that result from the reconstruction and the analysis object data (AOD), which hold compact event information derived from the ESD. AODs can be customized with additional files that add information to each event without the need to rewrite them (the delta-AOD). Figure 1 gives a schematic representation that attempts to capture the essence of AliRoot.
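The train idea can be sketched in a few lines. The example below is only an illustration in Python, not the ROOT task API or the ALICE implementation: `AnalysisTask`, `CountEvents`, `MeanMultiplicity`, `list_reader` and `AnalysisTrain` are hypothetical names, and the "event" is a plain dictionary rather than an ESD or AOD object.

```python
class AnalysisTask:
    """Hypothetical base class for one wagon of the train."""

    def process(self, event):
        raise NotImplementedError

    def result(self):
        raise NotImplementedError


class CountEvents(AnalysisTask):
    def __init__(self):
        self.n_events = 0

    def process(self, event):
        self.n_events += 1

    def result(self):
        return self.n_events


class MeanMultiplicity(AnalysisTask):
    def __init__(self):
        self.total_tracks = 0
        self.n_events = 0

    def process(self, event):
        self.total_tracks += len(event["tracks"])
        self.n_events += 1

    def result(self):
        return self.total_tracks / self.n_events if self.n_events else 0.0


def list_reader(raw_events):
    """Hypothetical reader: converts raw track lists to a common event format."""
    for tracks in raw_events:
        yield {"tracks": list(tracks)}


class AnalysisTrain:
    def __init__(self, reader):
        self._reader = reader
        self._wagons = []

    def add_task(self, task):
        self._wagons.append(task)

    def run(self):
        for event in self._reader:      # each event is read once ...
            for task in self._wagons:   # ... and handed to every wagon in turn
                task.process(event)
        return [task.result() for task in self._wagons]
```

The point of the design is that the cost of reading an event is paid once, however many tasks are attached; supporting a different input format would only require a different reader that yields events in the same common format.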

The framework is such that the same code can be run on a local workstation, on a parallel system enabled by ROOT’s PROOF facility, where different events are dispatched to different cores, or on the Grid. A plug-in mechanism takes care of hiding the differences from the user.

The early transition to C++ and the “burn the bridge” approach encouraged (or rather compelled) several senior physicists to jump the fence and move to the new language. That the framework was there more than 10 years before data-taking began and that its principles of operation did not change during its evolution allowed several of them to become seasoned C++ programmers and AliRoot experts by the time that the detector started producing data.

AliRoot today

Today’s AliRoot retains most of the features of the original even if the code provides much more functionality and is correspondingly more complex. Comprising contributions from more than 400 authors, it is the framework within which all ALICE data are processed and analysed. The release cycle has been kept nimble. We have one update a week and one full new release of AliRoot every six months. Thanks to an efficient software-distribution scheme, the deployment of a full new version on the Grid takes as little as half a day. This has proved useful for “emergency fixes” during critical productions. A farm of “virtual” AliRoot builders is in continuous operation building the code on different combinations of operating system and compiler. Nightly builds and tests are automatically performed to assess the quality of the code and the performance parameters (memory and CPU).

The next challenge will be to adapt the code to new parallel and concurrent architectures to make the most of the performance of modern hardware, of which we are currently exploiting only a small fraction of the potential. This will probably require a profound rethinking of the class and data structures, as well as of the algorithms. It will be the major subject of the offline upgrade that will take place in 2013 and 2014 during the LHC’s long shutdown. This challenge is made more interesting because new (and not quite compatible) architectures are continuously being produced.

An AliEn runs the Grid

Work on the Grid implementation for ALICE had to follow a different path. The effort required to develop a complete Grid system from scratch would have been prohibitive and in the Grid world there was no equivalent to ROOT that would provide a solid foundation. There was, however, plenty of open-source software with the elements necessary for building a distributed computing system that would embody major portions of the Grid “vision”.

Following the same philosophy used in the development of AliRoot, but with a different technique, we built a lightweight framework written in the Perl programming language, which linked together several tens of individual open-source components. This system used web services to create a “grid in a box”, a “shrink-wrapped” environment called ALICE Environment or AliEn, to implement a functional Grid system, which already allowed us to run large Monte Carlo productions as early as 2002. From the beginning, the core of this system consisted of a distributed file catalogue and a workload-management system based on the “pull” mechanism, where computer centres fetch appropriate workloads from a central queue.
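The “pull” mechanism can be illustrated with a small sketch. AliEn itself is written in Perl and its real matching logic is not described here; the Python code below is an assumption-laden toy in which `CentralQueue`, `Site`, the single "required package" per job and the package labels are all hypothetical.

```python
class CentralQueue:
    """Toy central task queue; jobs wait here until a site asks for work."""

    def __init__(self):
        self._jobs = []

    def submit(self, job_id, required_package):
        self._jobs.append({"id": job_id, "package": required_package})

    def pull(self, site_packages):
        """Hand out the oldest waiting job that the calling site can run."""
        for index, job in enumerate(self._jobs):
            if job["package"] in site_packages:
                return self._jobs.pop(index)
        return None  # nothing suitable is waiting


class Site:
    """Toy computer centre that fetches work when it has free slots."""

    def __init__(self, name, packages, slots):
        self.name = name
        self.packages = set(packages)
        self.slots = slots
        self.running = []

    def fetch_work(self, queue):
        # The site, not the queue, decides when to ask for more work.
        while len(self.running) < self.slots:
            job = queue.pull(self.packages)
            if job is None:
                break
            self.running.append(job["id"])
        return self.running
```

In this toy model the central queue never needs to know the state of any site: a site with free capacity asks for work and receives only jobs whose requirements it can satisfy, so a slow or unavailable site simply stops pulling.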

AliEn was built as a metasystem from the start, with the aim of presenting the user with a seamless interface while joining together the different Grid systems that harness the various resources (a so-called overlay Grid). As AliEn could offer the complete set of services that ALICE needed from the Grid, interfacing with the different systems consisted of replacing the AliEn services, as far as possible, with those of the native Grids.

This has proved to be a good principle: the Advanced Resource Connector (ARC) services of the NorduGrid collaboration are now integrated with AliEn, and ALICE users transparently access three Grids (EGEE, OSG and ARC), as well as the few remaining native AliEn sites. One important step was achieved with the tight integration of AliEn with the MonALISA monitoring system, which allows large quantities of dynamic parameters related to the Grid operation to be stored and processed. This integration will continue in the direction of provisioning and scheduling Grid resources based on past and current performance and load, as recorded by MonALISA.

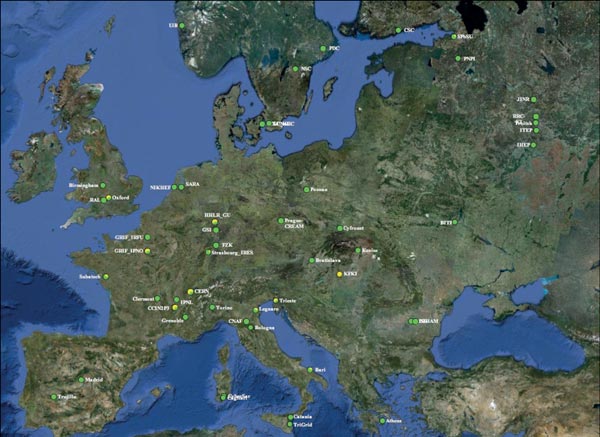

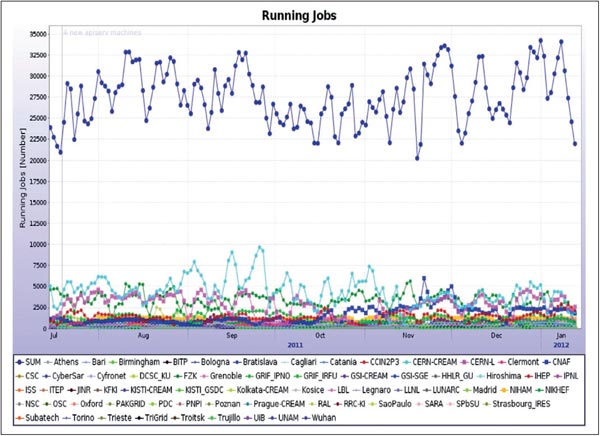

The AliEn Grid has also seen substantial evolution, its core components having been upgraded and replaced several times. However, the user interface has changed little. Thanks to AliEn and MonALISA, the central operation of the entire ALICE Grid takes the equivalent of only three or four full-time operators. It routinely runs complicated, fully automated job chains at all times, totalling an average of 28,000 jobs in continuous execution at 80 computer centres on four continents (figure 3).

The next step

Despite the generous efforts of the funding agencies, computing resources in ALICE remain tight. To alleviate the problem and ensure that resources are used at maximum efficiency, all ALICE computing resources are pooled into AliEn. The corollary is that the Grid is the most natural place for all ALICE users to run any job that exceeds the capacity of a laptop. This has put considerable pressure on the ALICE Grid developers to provide a friendly environment, where even running short test jobs on the Grid should be as simple and fast as running them on a personal computer. This remains the goal, but much ground has been covered in making Grid usage as transparent and efficient as possible; indeed, all ALICE analysis is performed on the Grid. Before a major conference, it is not uncommon to see more than half of the total Grid resources being used by private-analysis jobs.

The challenges ahead for the ALICE Grid are to improve the optimization tools for workload scheduling and data access, thereby increasing the capabilities to exploit opportunistic computing resources. The comprehensive and highly optimized monitoring tools and data provided by MonALISA are assets that have not yet been completely exploited to provide predictive provisioning of resources for optimized usage. This is an example of a “boundary pushing” research subject in computer science, which promises to yield urgently needed improvements to the everyday life of ALICE physicists.

It will also be important to exploit interactivity and parallelism at the level of the Grid, to improve the “time-to-solution” and to come a step closer to the original Grid vision of making a geographically distributed, heterogeneous system appear similar to a desktop computer. In particular, the evolution of AliRoot to exploit parallel computing architectures should be extended as seamlessly as possible from multicore and multi-CPU machines, first to different machines and then to Grid nodes. This implies an evolution of both the Grid environment and the ALICE software, which will have to be transformed to expose the intrinsic parallelism of the problem in question (event processing) at its different levels of granularity.

Although it is difficult to define success for a computing project in high-energy physics, and while ALICE computing certainly offers much room for improvement, it cannot be denied that it has fulfilled its mandate of allowing the processing and analysis of the initial ALICE data. However, this should not be considered as a result acquired once and for all, or subject only to incremental improvements. Requirements from physicists are always evolving – or rather, growing qualitatively and quantitatively. While technology offers the possibilities to satisfy these requirements, this will entail major reshaping of ALICE’s code and Grid tools to ride the technology wave while preserving as much as possible of the users’ investment. This will be a challenging task for the ALICE computing people for years to come.