CERN’s demanding data processing requirements provide the testbed for a new range of semiconductor chips and associated software, developed jointly by industrial giants Hewlett-Packard and Intel and aimed at new generations of computers.

In the long run, technology always benefits from new scientific insights. Modern semiconductor technology, for example, is one of the ultimate applications of quantum mechanics.

But there are other ways in which science can drive technology. For science to advance, researchers look at the world around us in new ways and under special conditions. The further science advances, the more unusual these conditions become. Irrespective of the ultimate scientific breakthrough, these increasingly stringent demands frequently catalyse new technological developments. Cryogenics, high vacuum and data acquisition and processing are examples of areas where the special requirements of particle physics have fostered industrial progress.

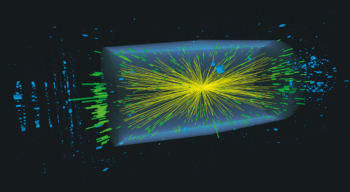

At CERN’s LHC collider, now under construction, the very high event rates call for a major push in data acquisition and processing power for the experiments. Each LHC experiment will generate a torrent of information, about 100 Mbyte per second, equivalent to a small library. With raw events happening every 25 nanoseconds, the data volumes will grow at an alarming rate, and a major upgrade in data handling capability is needed so that precious physics information is not lost.

In addition, to minimize the requirement for expensive computer power downstream, as much processing as possible has to be done by hardware strategically distributed upstream.

Moore’s Law

At the same time, the highly competitive computer industry is continually at work developing its next generation of products. The industry accepts a law first put forward by Intel co-founder Gordon Moore, which states that the number of transistors that can be harnessed for a particular task doubles every 18 months. With processing speed thus also increasing at an annual rate of about 60%, new approaches are continually sought to take maximum advantage of this incredible rate of advance.
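The two figures quoted above are consistent with each other: a quantity that doubles every 18 months grows by a factor of 2^(12/18) per year. A minimal sketch of that arithmetic follows; the function name `annual_growth` is only illustrative and assumes smooth exponential growth.

```python
def annual_growth(doubling_months: float) -> float:
    """Fractional growth per year for a quantity that doubles
    every `doubling_months` months (assumes smooth exponential growth)."""
    return 2 ** (12 / doubling_months) - 1


# Doubling every 18 months corresponds to roughly 59% per year,
# which the article rounds to 60%.
rate = annual_growth(18)
print(f"{rate:.1%}")  # prints 58.7%
```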

It is extremely expensive for a computer manufacturer to embark on a major development alone. Computer supplier Hewlett-Packard and semiconductor giant Intel announced a joint research and development project in 1994, aimed at systems that would appear on the market at the end of the 1990s. At the time, it was already clear that 32-bit technology would soon have yielded all it could, and future developments would have to turn to a more flexible approach.

The outcome, the new Explicitly Parallel Instruction Computing (EPIC) technology, is a milestone in processor development. EPIC is the foundation for a new generation of 64-bit instruction set architecture driving the flow of operations through the microprocessor.

The main advance is the chip’s capacity for parallel processing: handling different operations at the same time rather than one after another, as in the traditional sequential approach. A good example of sequential processing is a traditional airline check-in, where, although there are normally many parallel counters, each customer can use only one. At each counter a single clerk handles a long sequence of operations: ticket, seat allocation, baggage, boarding pass, etc.

Throughput could be increased with more clerks behind each counter, each clerk being responsible for a specific operation, but this is not true parallelism. Even in the traditional check-in approach, sequential operations eventually become parallel: baggage is accepted item by item before being assembled into parallel loads for different aircraft.

However, in a fully parallel processing environment, all check-in tasks would be handled at separate counters coordinated by a central processor. Customers would be tagged as they entered the airport building, and the whole check-in operation would be scheduled to occur in parallel.

The new EPIC design advances on Intel’s current x86 architecture by allowing the software to tell the processor when parallel operations are needed. This reduces the number of branches and optimizes the links between processing and memory. Under the codename Merced, the first 64-bit processor is scheduled for production next year.
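The idea of software marking out parallel work in advance can be illustrated with a toy sketch built on the check-in analogy. This is not how Merced or any real compiler works; the `Op` type, the `group_independent` function and the resource names are all hypothetical, and the grouping rule is deliberately conservative. Operations that do not touch each other's data are placed in the same group and could run side by side, while a dependency starts a new group.

```python
from typing import NamedTuple


class Op(NamedTuple):
    name: str
    reads: frozenset   # data the operation needs
    writes: frozenset  # data the operation produces


def group_independent(ops):
    """Greedily split an ordered list of operations into groups whose
    members do not depend on one another (toy illustration only)."""
    groups, current = [], []
    written, read = set(), set()
    for op in ops:
        # Conservative check: any overlap with data already read or
        # written in the current group counts as a dependency.
        conflict = (op.reads & written) or (op.writes & written) or (op.writes & read)
        if current and conflict:
            groups.append(current)
            current, written, read = [], set(), set()
        current.append(op.name)
        written |= op.writes
        read |= op.reads
    if current:
        groups.append(current)
    return groups


check_in = [
    Op("check_ticket", frozenset({"booking"}), frozenset({"passenger"})),
    Op("allocate_seat", frozenset({"passenger"}), frozenset({"seat"})),
    Op("accept_baggage", frozenset({"passenger"}), frozenset({"bag_tag"})),
    Op("print_boarding_pass", frozenset({"seat", "bag_tag"}), frozenset({"boarding_pass"})),
]

for group in group_independent(check_in):
    print(group)
# ['check_ticket']
# ['allocate_seat', 'accept_baggage']
# ['print_boarding_pass']
```

In this sketch, seat allocation and baggage acceptance both need only the passenger record, so they land in the same group, while the boarding pass has to wait for both.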