Deep learning is bringing new levels of performance to the analysis of growing datasets in high-energy physics.

It is 1965 and workers at CERN are busy analysing photographs of trajectories of particles travelling through a bubble chamber. These and other scanning workers were employed by CERN and laboratories across the world to manually scan countless such photographs, seeking to identify specific patterns contained in them. It was their painstaking work – which required significant skill and a lot of visual effort – that put particle physics in high gear. Researchers used the photographs (see figures 1 and 3) to make discoveries that would form a cornerstone of the Standard Model of particle physics, such as the observation of weak neutral currents with the Gargamelle bubble chamber in 1973.

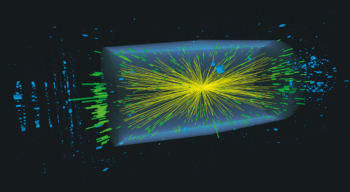

In the subsequent decades the field moved away from photographs to collision data collected with electronic detectors. Not only had data volumes become unmanageable, but Moore’s law had begun to take hold and a revolution in computing power was under way. The marriage between high-energy physics and computing was to become one of the most fruitful in science. Today, the Large Hadron Collider (LHC), with its hundreds of millions of proton–proton collisions per second, generates data at a rate of 25 GB/s – leading the CERN data centre to pass the milestone of 200 PB of permanently archived data last summer. Modelling, filtering and analysing such datasets would be impossible had the high-energy-physics community not invested heavily in computing and a distributed-computing network called the Grid.

Learning revolution

The next paradigm change in computing, now under way, is based on artificial intelligence. The so-called deep learning revolution of the late 2000s has significantly changed how scientific data analysis is performed, and has brought machine-learning techniques to the forefront of particle-physics analysis. Such techniques offer advances in areas ranging from event selection to particle identification to event simulation, accelerating progress in the field while offering considerable savings in resources. In many cases, images of particle tracks are making a comeback – although in a slightly different form from their 1960s counterparts.

Artificial neural networks are at the centre of the deep learning revolution. These algorithms are loosely based on the structure of biological brains, which consist of networks of neurons interconnected by signal-carrying synapses. In artificial neural networks these two entities – neurons and synapses – are represented by mathematical equivalents. During the algorithm’s “training” stage, the values of parameters such as the weights representing the synapses are modified to lower the overall error rate and improve the performance of the network for a particular task. Possible tasks vary from identifying images of people’s faces to isolating the particles into which the Higgs boson decays from a background of identical particles produced by other Standard Model processes.
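To make the idea of training concrete, here is a minimal sketch in Python with NumPy of a single artificial neuron (a logistic unit). Gradient descent adjusts its weights, playing the role of synapses, to lower the error when separating two classes. The toy "signal" and "background" samples, the learning rate and the number of steps are hypothetical choices for illustration. Real networks stack many such units in layers and are usually trained with a dedicated framework.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bce_loss(p, y):
    # Binary cross-entropy: the "error" the training tries to lower.
    p = np.clip(p, 1e-12, 1 - 1e-12)   # avoid log(0)
    return -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))

def train_neuron(x, y, lr=0.1, steps=500):
    """Train one logistic neuron with full-batch gradient descent.

    Returns the learned weights, the bias and the loss recorded at each step.
    """
    w = np.zeros(x.shape[1])   # one "synapse" weight per input feature
    b = 0.0                    # bias term
    history = []
    for _ in range(steps):
        p = sigmoid(x @ w + b)          # neuron output in (0, 1)
        history.append(bce_loss(p, y))
        grad = p - y                    # dLoss/dz for sigmoid + cross-entropy
        w -= lr * x.T @ grad / len(y)   # nudge weights downhill in the loss
        b -= lr * grad.mean()
    return w, b, history

# Hypothetical toy data: "signal" and "background" drawn from two 2D Gaussians.
rng = np.random.default_rng(0)
signal = rng.normal(loc=[1.0, 1.0], scale=1.0, size=(500, 2))
background = rng.normal(loc=[-1.0, -1.0], scale=1.0, size=(500, 2))
x = np.vstack([signal, background])
y = np.concatenate([np.ones(500), np.zeros(500)])

w, b, history = train_neuron(x, y)
accuracy = np.mean((sigmoid(x @ w + b) > 0.5) == y)
```

The recorded loss starts at ln 2, the value for a neuron that cannot yet tell the classes apart, and falls as the weights are updated.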

Artificial neural networks have been around since the 1960s. But it took several decades of theoretical and computational development for these and other artificial-intelligence algorithms to outperform humans in some specific tasks. For example: in 1996, IBM’s chess-playing computer Deep Blue won its first game against the then world chess champion Garry Kasparov; in 2016 Google DeepMind’s AlphaGo deep neural-network algorithm defeated the best human players in the game of Go; modern self-driving cars are powered by deep neural networks; and in December 2017 the latest DeepMind algorithm, called AlphaZero, learned how to play chess in just four hours and defeated the world’s best chess-playing computer program. So important is artificial intelligence in potentially addressing intractable challenges that the world’s leading economies are establishing dedicated investment programmes to better harness its power.

Computer vision

The immense computing and data challenges of high-energy physics are ideally suited to modern machine-learning algorithms. Because the signals measured by particle detectors are stored digitally, it is possible to recreate an image from the outcome of particle collisions. This is most easily seen where detectors provide discrete, pixelised position information, as in some neutrino experiments, but it also applies, in a more complex way, to collider experiments. Soon after computer-vision techniques, which are based on so-called convolutional neural networks (figure 2), began to be used to analyse images, particle physicists applied them to detector images – first of jets and then of photons, muons and neutrinos – making the task of understanding ever-larger and more abstract datasets simpler and more intuitive.
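The two steps described above, turning detector signals into an image and applying a convolution to it, can be sketched in a few lines of Python with NumPy. The sketch assumes hypothetical energy deposits at (η, φ) positions binned on an 8 × 8 grid. A fixed 3 × 3 kernel stands in for the weights that a convolutional network would learn during training.

```python
import numpy as np

def make_image(eta, phi, energy, n_bins=8, extent=1.0):
    """Bin energy deposits at (eta, phi) into an n_bins x n_bins image.

    Each pixel holds the summed energy falling inside it, much like a calorimeter cell.
    """
    image, _, _ = np.histogram2d(
        eta, phi, bins=n_bins,
        range=[[-extent, extent], [-extent, extent]],
        weights=energy,
    )
    return image

def conv2d(image, kernel):
    """'Valid' 2D convolution (strictly a cross-correlation, as in common deep-learning libraries)."""
    kh, kw = kernel.shape
    out = np.zeros((image.shape[0] - kh + 1, image.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            # The same kernel weights are reused at every position ("weight sharing").
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    # Non-linearity applied after the convolution in a typical CNN layer.
    return np.maximum(x, 0.0)

# Hypothetical event: a two-deposit "jet" near the centre plus one stray deposit.
eta = np.array([0.05, 0.10, -0.90])
phi = np.array([0.05, 0.10, 0.90])
energy = np.array([10.0, 5.0, 2.0])

image = make_image(eta, phi, energy)
kernel = np.ones((3, 3))          # stand-in for learned weights
feature_map = relu(conv2d(image, kernel))
```

With this all-ones kernel, each entry of the feature map is the energy summed over a 3 × 3 neighbourhood, so the jet core shows up as the brightest region. A trained network learns many such kernels, each sensitive to a different pattern.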

Particle physicists were among the first to use artificial-intelligence techniques in software development, data analysis and theoretical calculations. The first of a series of workshops on this topic, titled Artificial Intelligence in High-Energy and Nuclear Physics (AIHENP), was held in 1990. At the time, several changes were taking effect. For example, neural networks were being evaluated for event-selection and analysis purposes, and theorists were calling on algebraic or symbolic artificial-intelligence tools to cope with a dramatic increase in the number of terms in perturbation-theory calculations.

Over the years, the AIHENP series was renamed ACAT (Advanced Computing and Analysis Techniques) and expanded to span a broader range of topics. However, following a new wave of adoption of machine learning in particle physics, the focus of the 18th edition of the workshop, ACAT 2017, was again machine learning – featuring its role in event reconstruction and classification, fast simulation of detector response, measurements of particle properties, and AlphaGo-inspired calculations of Feynman loop integrals, to name a few examples.

Learning challenge

For these advances to happen, machine-learning algorithms had to improve and a physics community dedicated to machine learning needed to be built. In 2014 a machine-learning challenge set up by the ATLAS experiment to identify the Higgs boson garnered close to 2000 participants on the machine-learning competition platform Kaggle. To the surprise of many, the challenge was won by a computer scientist armed with an ensemble of artificial neural networks. In 2015 the Inter-experimental LHC Machine Learning working group was born at CERN because physicists from across the LHC wanted a platform for machine-learning work and discussions. The group quickly grew to include all the LHC experiments and to involve others outside CERN, such as the Belle II experiment in Japan and neutrino experiments worldwide. More dedicated training efforts in machine learning are now emerging, including the Yandex machine learning school for high-energy physics and the INSIGHTS and AMVA4NewPhysics Marie Skłodowska-Curie Innovative Training Networks (see Learning machine learning).

Event selection, reconstruction and classification are arguably the most important particle-physics tasks to which machine learning has been applied. As in the days of manual scanning, when photographs of particle trajectories were analysed to select events of potential physics interest, many particle-physics experiments, including those at the LHC, use modern trigger systems to select events for further analysis (figure 3). The decision of whether to save or discard an event has to be made within a few microseconds and requires specialised hardware located directly on the trigger systems’ logic boards. In 2010 the CMS experiment introduced machine-learning algorithms to its trigger system to better estimate the momentum of muons, which may help identify physics beyond the Standard Model. At around the same time, the LHCb experiment also began to use such algorithms in its trigger system for event selection.
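One way to fit a learned model into a time budget of microseconds, assumed here purely for illustration, is to evaluate it offline for every possible quantised input and store the answers in a lookup table. The online decision then becomes a single memory read. In the Python sketch below, the model, its two inputs (hypothetical angular differences `dphi` and `deta`) and the 6-bit precision are all hypothetical stand-ins rather than any experiment's actual trigger logic.

```python
import numpy as np

N_BITS = 6                     # hypothetical input precision per variable
N_LEVELS = 2 ** N_BITS

def model_score(dphi, deta):
    """Hypothetical stand-in for a trained classifier: higher score for less bending."""
    return 1.0 / (1.0 + np.exp(8.0 * (np.abs(dphi) + 0.5 * np.abs(deta)) - 4.0))

def quantise(value, lo=-1.0, hi=1.0):
    """Map a float in [lo, hi] to an integer code in [0, N_LEVELS - 1]."""
    code = int((value - lo) / (hi - lo) * (N_LEVELS - 1) + 0.5)
    return min(max(code, 0), N_LEVELS - 1)

def dequantise(code, lo=-1.0, hi=1.0):
    """Value represented by an integer code (works on scalars and arrays)."""
    return lo + code * (hi - lo) / (N_LEVELS - 1)

# Offline: evaluate the model once for every possible pair of input codes
# and keep only the accept/reject bit.
grid = dequantise(np.arange(N_LEVELS))
LUT = model_score(grid[:, None], grid[None, :]) > 0.5

def trigger_decision(dphi, deta):
    """Online: quantise the inputs and read one table entry."""
    return bool(LUT[quantise(dphi), quantise(deta)])
```

The price of this speed is precision: the table only knows the model's answer at the quantised grid points, and its size grows exponentially with the number of inputs and bits.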

Neutrino experiments such as NOvA and MicroBooNE at Fermilab in the US have also used computer-vision techniques to reconstruct and classify various types of neutrino events. In the NOvA experiment, the improvement gained by using deep learning for such tasks is equivalent to collecting 30% more data, or alternatively to building and operating more expensive detectors, potentially saving taxpayers around the world significant amounts of money. The LHC experiments observe similar efficiency gains.
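The "30% more data" comparison can be made concrete with a textbook relation, which is not necessarily how NOvA derived its figure. In a background-dominated counting experiment the significance is roughly s/√b, and since both s and b grow linearly with the amount of data, significance grows as the square root of the exposure. A better selection is therefore worth as much extra data as the square of its gain in significance. A minimal Python sketch under these assumptions:

```python
import math

def significance(s, b):
    """Simple counting-experiment significance s / sqrt(b), valid when b >> s."""
    return s / math.sqrt(b)

def equivalent_exposure(sig_eff_ratio, bkg_eff_ratio):
    """Factor by which the dataset would have to grow to match a selection gain.

    sig_eff_ratio: new / old signal efficiency
    bkg_eff_ratio: new / old background efficiency
    Because s / sqrt(b) scales as sqrt(exposure), the equivalent exposure
    is the square of the gain in significance.
    """
    gain = sig_eff_ratio / math.sqrt(bkg_eff_ratio)
    return gain ** 2
```

For example, a classifier that raises the signal efficiency by 14% at unchanged background gives `equivalent_exposure(1.14, 1.0)`, about 1.30, the same as collecting roughly 30% more data.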

Currently, about half of the computing budget of the Worldwide LHC Computing Grid is spent simulating the numerous possible outcomes of high-energy proton–proton collisions. To achieve a detailed understanding of the Standard Model and any physics beyond it, a tremendous number of such Monte Carlo events needs to be simulated. But despite the best efforts of the community worldwide to optimise these simulations, they are still a factor of 100 too slow for the needs of the High-Luminosity LHC, which is scheduled to start taking data around 2026. If a machine-learning model could learn the properties of the reconstructed particles directly, bypassing the complicated simulation of the interactions between the particles and the detector material, it could lead to simulations orders of magnitude faster than those currently available.
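The idea of learning the output of a simulation and then sampling from the learned model can be illustrated with a deliberately simple toy in Python. Here a hypothetical layer-by-layer loop plays the role of the detailed simulation, and the "machine-learning model" is just a Gaussian fitted to its output. A realistic fast-simulation model would learn a much richer, energy-dependent distribution.

```python
import numpy as np

def full_simulation(energy, rng, n_layers=100):
    """Toy stand-in for detailed simulation: follow a particle layer by layer.

    Each layer records a randomly fluctuating share of the energy (hypothetical model).
    """
    measured = 0.0
    for _ in range(n_layers):
        measured += energy / n_layers * rng.uniform(0.8, 1.2)
    return measured

def fit_fast_model(samples):
    """'Learn' the detector response by fitting a Gaussian to the simulated outputs."""
    return float(np.mean(samples)), float(np.std(samples))

def fast_simulation(params, n, rng):
    """Sample reconstructed energies directly, skipping the layer-by-layer loop."""
    mean, std = params
    return rng.normal(mean, std, size=n)

rng = np.random.default_rng(1)
full = np.array([full_simulation(50.0, rng) for _ in range(2000)])
params = fit_fast_model(full)               # trained once on the slow simulation
fast = fast_simulation(params, 100000, rng)  # then used to generate many more events
```

The expensive loop runs only to produce training data; afterwards, each new event costs a single random draw.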

Competing networks

One idea for such a model relies on algorithms called generative adversarial networks (GANs). In these algorithms, two neural networks compete with each other: a generator produces synthetic samples, while a discriminator acts as an adversary that tries to tell them apart from real ones, and the generator is trained to fool it. CERN openlab and CERN’s Software for Experiments group, along with others in the LHC community and industry partners, are starting to see the first results of using GANs for faster event and detector simulations.
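A GAN can be shown in miniature with NumPy and hand-written gradients. This sketch is an illustration, not production fast-simulation code. The generator is a hypothetical linear map x = a·z + b of Gaussian noise z, the discriminator is a logistic model with quadratic features, and the "real" data are drawn from an assumed Gaussian. The discriminator is trained to output 1 for real samples and 0 for generated ones, while the generator is trained to make the discriminator output 1 on its samples.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def features(x):
    # Quadratic features let the discriminator notice both the mean and the width.
    return np.stack([x ** 2, x, np.ones_like(x)], axis=1)

def d_loss(theta, x_real, x_fake):
    d_real = sigmoid(features(x_real) @ theta)
    d_fake = sigmoid(features(x_fake) @ theta)
    return -np.mean(np.log(d_real + 1e-12)) - np.mean(np.log(1.0 - d_fake + 1e-12))

def d_step(theta, x_real, x_fake, lr):
    """One gradient-descent step for the discriminator (real -> 1, fake -> 0)."""
    phi_r, phi_f = features(x_real), features(x_fake)
    grad = (phi_r.T @ (sigmoid(phi_r @ theta) - 1.0) / len(x_real)
            + phi_f.T @ sigmoid(phi_f @ theta) / len(x_fake))
    return theta - lr * grad

def g_loss(gen, theta, z):
    # Non-saturating generator loss: -log D(G(z)).
    x_fake = gen[0] * z + gen[1]
    return -np.mean(np.log(sigmoid(features(x_fake) @ theta) + 1e-12))

def g_step(gen, theta, z, lr):
    """One step for the generator x = a*z + b, trying to push D(x) towards 1."""
    a, b = gen
    x_fake = a * z + b
    d_fake = sigmoid(features(x_fake) @ theta)
    dlogit_dx = 2.0 * theta[0] * x_fake + theta[1]   # derivative of the discriminator logit
    dloss_dx = (d_fake - 1.0) * dlogit_dx / len(z)   # chain rule through -log D
    return np.array([a - lr * np.sum(dloss_dx * z), b - lr * np.sum(dloss_dx)])

def train(steps=2000, lr=0.05, seed=0):
    rng = np.random.default_rng(seed)
    theta = np.zeros(3)              # discriminator weights
    gen = np.array([1.0, 0.0])       # generator scale a and shift b
    for _ in range(steps):
        x_real = rng.normal(1.0, 0.5, size=256)   # hypothetical "real" data
        z = rng.normal(size=256)
        theta = d_step(theta, x_real, gen[0] * z + gen[1], lr)
        gen = g_step(gen, theta, z, lr)
    return gen, theta
```

Calling `train()` alternates one discriminator step and one generator step per batch. Plain GAN training of this kind can oscillate rather than settle smoothly, which is one reason practical GANs for simulation rely on deep networks, automatic differentiation and additional stabilisation techniques.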

Particle physics has come a long way from the heyday of manual scanners in understanding elementary particles and their interactions. But there are gaps in our understanding of the universe that need to be filled – the nature of dark matter, dark energy, matter–antimatter asymmetry, neutrinos and colour confinement, to name a few. High-energy physicists hope to find answers to these questions using the LHC and its upcoming upgrades, as well as future lepton colliders and neutrino experiments. In this endeavour, machine learning will most likely play a significant part in making data processing, data analysis and simulation, and many other tasks, more efficient.

Driven by the promise of great returns, big companies such as Google, Apple, Microsoft, IBM, Intel, Nvidia and Facebook are investing hundreds of millions of dollars in deep learning technology including dedicated software and hardware. As these technologies find their way into particle physics, together with high-performance computing, they will boost the performance of current machine-learning algorithms. Another way to increase the performance is through collaborative machine learning, which involves several machine-learning units operating in parallel. Quantum algorithms running on quantum computers might also deliver orders-of-magnitude speed-ups for certain tasks, and there are probably more advances in store that are difficult to predict today. The availability of more powerful computer systems together with deep learning will likely allow particle physicists to think bigger and perhaps come up with new types of searches for new physics or with ideas to automatically extract and learn physics from the data.
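Assuming that collaborative machine learning takes the form of data-parallel training, the core idea can be sketched in Python as follows. Each worker computes the gradient on its own shard of the batch, and the gradients are then averaged before the model is updated. In this sketch the "workers" run one after another in a single process and the linear model is a hypothetical stand-in. In a real system they would run on separate processors or GPUs.

```python
import numpy as np

def mse_gradient(w, x, y):
    """Gradient of the mean squared error of a linear model, prediction = x @ w."""
    residual = x @ w - y
    return 2.0 * x.T @ residual / len(y)

def data_parallel_gradient(w, x, y, n_workers):
    """Each 'worker' computes a gradient on its own shard; the results are averaged."""
    x_shards = np.array_split(x, n_workers)
    y_shards = np.array_split(y, n_workers)
    local_grads = [mse_gradient(w, xs, ys) for xs, ys in zip(x_shards, y_shards)]
    return np.mean(local_grads, axis=0)   # the "all-reduce" averaging step

def train_parallel(x, y, n_workers=4, lr=0.1, steps=200):
    w = np.zeros(x.shape[1])
    for _ in range(steps):
        w -= lr * data_parallel_gradient(w, x, y, n_workers)
    return w

# Hypothetical data from a known linear relation plus noise.
rng = np.random.default_rng(3)
x = rng.normal(size=(1200, 3))
true_w = np.array([1.5, -2.0, 0.5])
y = x @ true_w + 0.1 * rng.normal(size=1200)
w = train_parallel(x, y)
```

With equally sized shards, the averaged gradient equals the full-batch gradient. The workers therefore reproduce the single-machine update while each processes only part of the data.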

That said, machine learning in particle physics still faces several challenges. Among the most significant are understanding how to treat systematic uncertainties when using machine-learning models, and interpreting what the models have learned. Another challenge is how to make complex deep learning algorithms work within the tight time window of modern trigger systems, to take advantage of the deluge of data that is currently thrown away. These challenges aside, the progress we are seeing today in machine learning and in its application to particle physics is probably just the beginning of the revolution to come.
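Treating systematic uncertainties with machine-learning models is an open problem, but the simplest starting point can be sketched. Re-evaluate an already-trained model on inputs shifted by ± one standard deviation of a known uncertainty, and see how much the selection efficiency moves. In the Python sketch below, the scoring function, the 2% energy-scale uncertainty and the toy events are all hypothetical.

```python
import numpy as np

def selection_efficiency(score_fn, events, threshold):
    """Fraction of events whose model score passes the cut."""
    return float(np.mean(score_fn(events) > threshold))

def energy_scale_variation(score_fn, events, threshold, scale_unc):
    """Re-evaluate a fixed, already-trained model on inputs shifted by +/- one
    standard deviation of a (hypothetical) energy-scale uncertainty."""
    nominal = selection_efficiency(score_fn, events, threshold)
    up = selection_efficiency(score_fn, events * (1.0 + scale_unc), threshold)
    down = selection_efficiency(score_fn, events * (1.0 - scale_unc), threshold)
    return nominal, up, down

def toy_score(events):
    # Hypothetical model: score rises smoothly with the summed energy of each event.
    return 1.0 / (1.0 + np.exp(-(events.sum(axis=1) - 100.0) / 10.0))

rng = np.random.default_rng(2)
events = rng.exponential(scale=25.0, size=(10000, 4))   # 4 energy-like inputs per event
nominal, up, down = energy_scale_variation(toy_score, events, 0.5, 0.02)
```

Because the toy score increases with energy, scaling the inputs up can only raise the efficiency and scaling them down can only lower it. The spread between the shifted and nominal efficiencies gives a first estimate of the systematic uncertainty on the selection.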