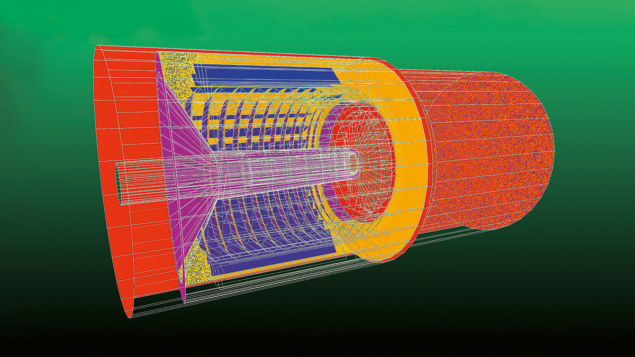

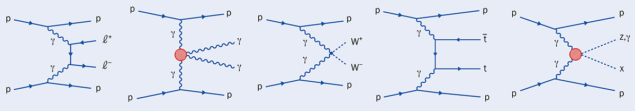

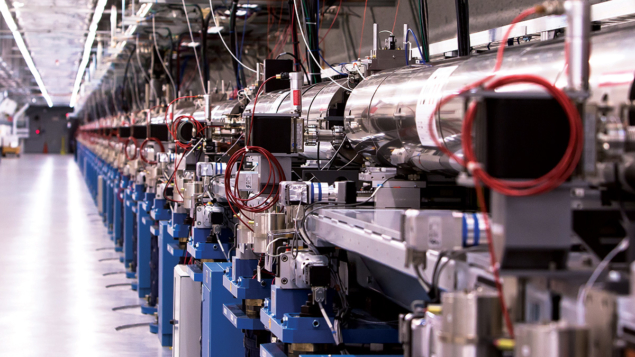

In particle accelerators, large vacuum systems guarantee that the beams travel as freely as possible. Even at one 25-trillionth of the density of Earth’s atmosphere, however, a tiny concentration of gas molecules remains. These molecules pose a problem: their collisions with accelerated particles reduce the beam lifetime and induce instabilities. It is therefore vital, from the early design stage, to plan efficient vacuum systems and predict residual pressure profiles.

Surprisingly, it is almost impossible to find commercial software that can carry out the underlying vacuum calculations. Since the background pressure in accelerators (of the order of 10⁻⁹–10⁻¹² mbar) is so low, molecules rarely collide with one another, and thus the results of codes based on computational fluid dynamics aren’t valid. Although workarounds exist (solving vacuum equations analytically, modelling a vacuum system as an electrical circuit, or taking advantage of similarities between ultra-high vacuum and thermal radiation), a CERN-developed simulator, “Molflow” (short for molecular flow), has become the de-facto industry standard for ultra-high-vacuum simulations.

Rather than trying to solve the surprisingly difficult gas behaviour analytically over a large system in one step, Molflow is based on the so-called test-particle Monte Carlo method. In a nutshell: given a known geometry, a single test particle is created at a gas source and “bounced” through the system until it reaches a pump. By repeating this millions of times, with each bounce happening in a random direction, just like in the real world, the program can calculate the hit density anywhere, from which the pressure is obtained.
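To make the test-particle idea concrete, here is a minimal sketch in Python. It is not Molflow's code: it assumes the simplest possible geometry (a straight cylindrical tube whose two open ends act as perfect pumps) and diffuse, cosine-law reflection at the wall. The function names `cosine_direction` and `transmission_probability` are hypothetical and chosen only for this illustration.

```python
import math
import random


def cosine_direction(normal, t1, t2, rng):
    """Sample a unit vector from the cosine (Lambert) law around `normal`.

    `t1` and `t2` are two unit tangents orthogonal to `normal` and to each other.
    """
    u, v = rng.random(), rng.random()
    sin_t, cos_t = math.sqrt(u), math.sqrt(1.0 - u)
    phi = 2.0 * math.pi * v
    a, b = sin_t * math.cos(phi), sin_t * math.sin(phi)
    return tuple(a * t1[i] + b * t2[i] + cos_t * normal[i] for i in range(3))


def transmission_probability(length, radius=1.0, n_particles=20000, seed=1):
    """Fraction of test particles entering at z=0 that leave through z=length."""
    rng = random.Random(seed)
    transmitted = 0
    for _ in range(n_particles):
        # Start uniformly on the entrance disc, heading into the tube.
        r = radius * math.sqrt(rng.random())
        ang = 2.0 * math.pi * rng.random()
        x, y, z = r * math.cos(ang), r * math.sin(ang), 0.0
        d = cosine_direction((0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), rng)
        while True:
            dx, dy, dz = d
            a = dx * dx + dy * dy
            if a < 1e-12:
                # Flying along the axis: leaves through one end without a wall hit.
                escaped_forward = dz > 0.0
                break
            # Intersect the ray with the cylinder x^2 + y^2 = radius^2.
            b = 2.0 * (x * dx + y * dy)
            c = x * x + y * y - radius * radius
            t = (-b + math.sqrt(max(b * b - 4.0 * a * c, 0.0))) / (2.0 * a)
            z_hit = z + t * dz
            if z_hit >= length:
                escaped_forward = True
                break
            if z_hit <= 0.0:
                escaped_forward = False
                break
            # Wall hit: move there and re-emit diffusely from the wall.
            x, y, z = x + t * dx, y + t * dy, z_hit
            rho = math.hypot(x, y)
            x, y = x * radius / rho, y * radius / rho  # snap back onto the wall
            n = (-x / radius, -y / radius, 0.0)        # inward surface normal
            d = cosine_direction(n, (0.0, 0.0, 1.0), (-n[1], n[0], 0.0), rng)
        if escaped_forward:
            transmitted += 1
    return transmitted / n_particles
```

For a tube whose length equals its diameter (`length=2.0`, `radius=1.0`), the result should land close to the tabulated transmission probability of roughly 0.51, with a statistical scatter that shrinks as more particles are traced. A real simulator does the same bookkeeping on arbitrary polygon geometries and also counts hits on every surface.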

The idea for Molflow emerged in 1988 when the author (RK) visited CERN to discuss the design of the Elettra light source with CERN vacuum experts (see “From CERN to Elettra, ESRF, ITER and back” panel). Back then, few people could have foreseen the numerous applications outside particle physics that it would have. Today, Molflow is used in applications ranging from chip manufacturing to the exploration of the Martian surface, with more than 1000 users worldwide and many more downloads from the dedicated website.

Molflow in space

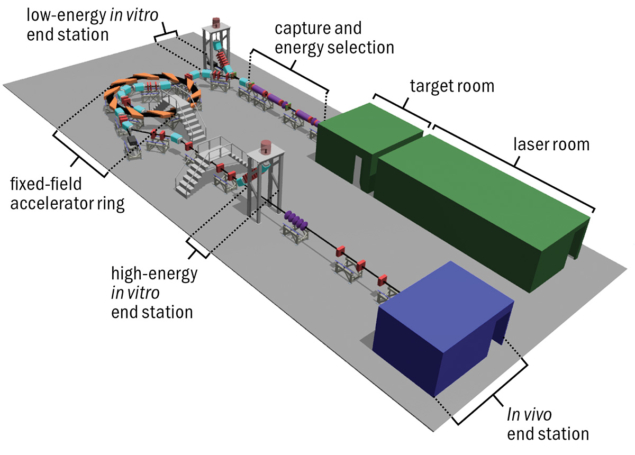

While at CERN we naturally associate ultra-high vacuum with particle accelerators, there is another domain where operating pressures are extremely low: space. In 2017, after first meeting at a conference, a group from German satellite manufacturer OHB visited the CERN vacuum group, interested to see our chemistry lab and the cleaning process applied to vacuum components. We also demoed Molflow for vacuum simulations. It turned out that they were actively looking for a modelling tool that could simulate specific molecular-contamination transport phenomena for their satellites, since the industrial code they were using had very limited capabilities and was not open-source.

A high-quality, clean mirror for a space telescope, for example, must spend up to two weeks encapsulated in the closed fairing from launch until it is deployed in orbit. During this time, without careful prediction and mitigation, certain volatile compounds (such as adhesive used on heating elements) present within the spacecraft can find their way to and become deposited on optical elements, reducing their reflectivity and performance. It is therefore necessary to calculate the probability that molecules migrate from a certain location, through several bounces, and end up on optical components. Whereas this is straightforward when all simulation parameters are static, adding chemical processes and molecule accumulation on surfaces required custom development. Even though Molflow could not handle these processes “out of the box”, the OHB team was able to use it as a basis that could be built on, saving the effort of creating the graphical user interface and the ray-tracing parts from scratch. With the help of CERN’s knowledge-transfer team, a collaboration was established with the Technical University of Munich: a “fork” in the code was created; new physical processes specific to their application were added; and the code was also adapted to run on computer clusters. The work was made publicly available in 2018, when Molflow became open source.

From CERN to Elettra, ESRF, ITER and back

Molflow emerged in 1988 during a visit to CERN from its original author (RK), who was working at the Elettra light source in Trieste at the time. CERN vacuum expert Alberto Pace showed him a computer code written in Fortran that enabled the trajectories of particles to be calculated, via a technique called ray tracing. On returning to Trieste, and realising that the CERN code couldn’t be run there due to hardware and software incompatibilities, RK decided to rewrite it from scratch. Three years later the code was formally released. Once more, credit must be given to CERN for having been the birthplace of new ideas for other laboratories to develop their own applications.

Molflow was originally written in Turbo Pascal, had (black and white) graphics, and visualised geometries in 3D – even allowing basic geometry editing and pressure plots. While today such features are found in every simulator, at the time the code stood out and was used in the design of several accelerator facilities, including the Diamond Light Source, Spallation Neutron Source, Elettra, Alba and others – as well as for the analysis of a gas-jet experiment for the PANDA experiment at GSI Darmstadt. That said, the early code had its limitations. For example, the upper limit of user memory (640 kB for MS-DOS) significantly limited the number of polygons used to describe the geometry, and it was single-processor.

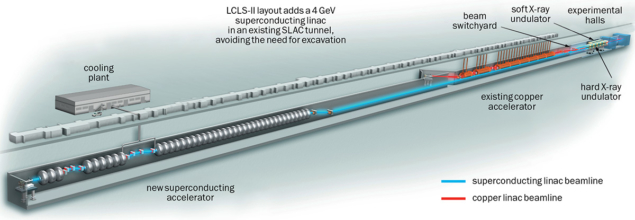

In 2007 the original code was given a new lease of life at the European Synchrotron Radiation Facility in Grenoble, where RK had moved as head of the vacuum group. The code was ported to C++ and multi-processor capability was added, which is particularly suitable for Monte Carlo calculations: if you have eight CPU cores, for example, you can trace eight molecules at the same time. OpenGL (Open Graphics Library) acceleration made the visualisation very fast even for large structures, allowing the usual camera controls of CAD editors to be added. Between 2009 and 2011 Molflow was used at ITER, again following its original author, for the design and analysis of vacuum components for the international tokamak project.

In 2012 the project was resumed at CERN, where RK had arrived the previous year. From here, the focus was on expanding the physics and applications: ray-tracing terms like “hit density” and “capture probability” were replaced with real-world quantities such as pressure and pumping speed. To publish the code within the group, a website was created with downloads, tutorial videos and a user forum. Later that year, a sister code “Synrad” for synchrotron-radiation calculations, also written in Trieste in the 1990s, was ported to the modern environment. The two codes could, for the first time, be used as a package: first, a synchrotron-radiation simulation could determine where light hits a vacuum chamber, then the results could be imported to a subsequent vacuum simulation to trace the gas desorbed from the chamber walls. This is the so-called photon-stimulated desorption effect, which is a major hindrance to many accelerators, including the LHC.
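The step from ray-tracing counts to pressure can be illustrated with textbook kinetic theory. The sketch below is an assumption-laden illustration rather than Molflow's internal formula: it assumes a gas in thermal equilibrium with the wall, for which the impingement rate is ν = n·v̄/4, the mean speed is v̄ = √(8kT/πm) and the pressure is p = nkT. All function names are hypothetical.

```python
import math

K_B = 1.380649e-23        # Boltzmann constant, J/K
AMU = 1.66053906660e-27   # atomic mass unit, kg


def mean_speed(temperature_k, mass_amu):
    """Mean thermal speed of a Maxwell-Boltzmann gas, in m/s."""
    return math.sqrt(8.0 * K_B * temperature_k / (math.pi * mass_amu * AMU))


def real_hit_rate(hits_on_facet, particles_traced, source_rate_per_s, facet_area_m2):
    """Scale simulated hit counts to a physical impingement rate (hits / m^2 / s).

    Each traced test particle stands for source_rate_per_s / particles_traced
    real molecules per second (an assumption of this sketch).
    """
    return hits_on_facet * source_rate_per_s / particles_traced / facet_area_m2


def pressure_from_hit_rate(hits_per_m2_s, temperature_k=293.15, mass_amu=28.0):
    """Pressure in Pa from the impingement rate, using nu = n * v_mean / 4."""
    density = 4.0 * hits_per_m2_s / mean_speed(temperature_k, mass_amu)
    return density * K_B * temperature_k


def pa_to_mbar(pressure_pa):
    """Convert pascal to millibar (1 mbar = 100 Pa)."""
    return pressure_pa / 100.0
```

With these relations, a facet's hit counter, the number of traced particles and the real outgassing rate of the source are enough to quote a pressure in mbar, which is the kind of quantity a vacuum engineer actually works with.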

Molflow and Synrad have been downloaded more than 1000 times in the past year alone, and anonymous user metrics hint at around 500 users who launch it at least once per month. The code is used by far the most in China, followed by the US, Germany and Japan. Switzerland, including users at CERN, places only fifth. Since 2018, the roughly 35,000-line code has been available open-source and, although originally written for Windows, it is now available for other operating systems, including the new ARM-based Macs and several versions of Linux.

In 2019, one year after Molflow became open source, the Contamination Control Engineering (CCE) team from NASA’s Jet Propulsion Laboratory (JPL) in California reached out to CERN in the context of its three-stage Mars 2020 mission. The Mars 2020 Perseverance Rover, built to search for signs of ancient microbial life, successfully landed on the Martian surface in February 2021 and has collected and cached samples in sealed tubes. A second mission plans to retrieve the cache canister and launch it into Mars orbit, while a third would locate and capture the orbital sample and return it to Earth. Each spacecraft experiences and contributes to its own contamination environment through thruster operations, material outgassing and other processes. JPL’s CCE team performs the identification, quantification and mitigation of such contaminants, from the concept-generation to the end-of-mission phase. Key to this effort is the computational physics modelling of contaminant transport from materials outgassing, venting, leakage and thruster plume effects.

Contamination consists of two types: molecular (thin-film deposition effects) and particulate (producing obscuration, optical scatter, erosion or mechanical damage). Both can lead to degradation of optical properties and spurious chemical composition measurements. As more sensitive space missions are proposed and built – particularly those that aim to detect life – understanding and controlling outgassing properties requires novel approaches to operating thermal vacuum chambers.

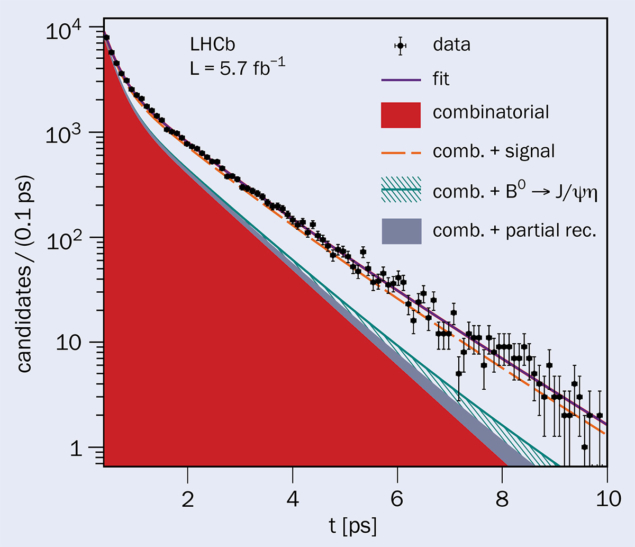

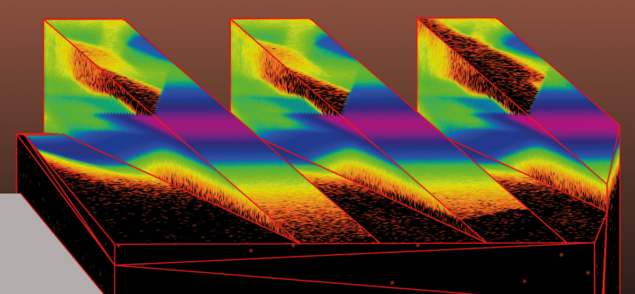

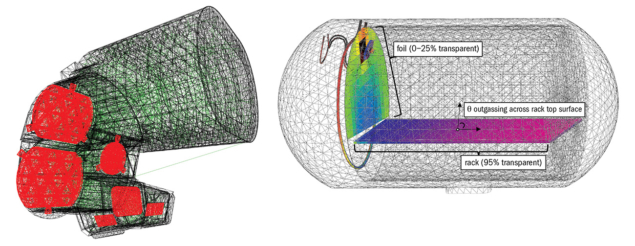

Just like accelerator components, most spacecraft hardware undergoes long-duration vacuum baking at relatively high temperatures to reduce outgassing. Outgassing rates are verified with quartz crystal microbalances (QCMs), rather than vacuum gauges as used at CERN. These probes measure the resonance frequency of oscillation, which is affected by the accumulation of adsorbed molecules, and are very sensitive: a 1 ng deposition on 1 cm² of surface de-tunes the resonance frequency by 2 Hz. By performing free-molecular transport simulations in the vacuum-chamber test environment, measurements by the QCMs can be translated to outgassing rates of the sources, which are located some distance from the probes. For these calculations, JPL currently uses both Monte Carlo schemes (via Molflow) and “view factor matrix” calculations (through in-house solvers). During one successful Molflow application (see “Molflow in space” image, top) a vacuum chamber with a heated inner shroud was simulated, and optimisation of the chamber geometry resulted in a factor-40 increase of transmission to the QCMs over the baseline configuration.
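The chain from QCM reading to source outgassing rate can be sketched as a short worked example. It takes the sensitivity quoted above (2 Hz per ng/cm²) and assumes a linear response and that every molecule reaching the crystal sticks. The `transmission` argument stands for the simulated fraction of molecules leaving the source that end up on the crystal; the function names are hypothetical and do not reflect JPL's or Molflow's actual tooling.

```python
SENSITIVITY_HZ_PER_NG_CM2 = 2.0  # from the text: 1 ng on 1 cm^2 shifts the frequency by 2 Hz


def deposited_mass_ng_per_cm2(frequency_shift_hz):
    """Areal mass on the crystal implied by a frequency shift (linear response assumed)."""
    return frequency_shift_hz / SENSITIVITY_HZ_PER_NG_CM2


def source_outgassing_rate_ng_per_s(shift_rate_hz_per_s, crystal_area_cm2, transmission):
    """Outgassing rate of a remote source inferred from the QCM drift rate.

    transmission: simulated probability that a molecule leaving the source
    is deposited on the crystal (assumes every arriving molecule sticks).
    """
    deposition_ng_per_s = deposited_mass_ng_per_cm2(shift_rate_hz_per_s) * crystal_area_cm2
    return deposition_ng_per_s / transmission
```

For instance, a drift of 0.02 Hz/s on a 1 cm² crystal with a simulated transmission of 1% would correspond to a source outgassing about 1 ng/s. The same relation shows why a 40-fold gain in transmission matters: the same source produces a 40 times larger, and therefore more easily measured, signal.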

From SPHEREx to LISA

Another JPL project involving free molecular-flow simulations is the future near-infrared space observatory SPHEREx (Spectro-Photometer for the History of the Universe and Ices Explorer). This instrument has cryogenically cooled optical surfaces that may condense molecules in vacuum and are thus prone to significant performance degradation from the accumulation of contaminants, including water. Even when taking as much care as possible during the design and preparation of the systems, some elements, such as water, cannot be entirely removed from a spacecraft and will desorb from materials persistently. It is therefore vital to know where and how much contamination will accumulate. For SPHEREx, water outgassing, molecular transport and adsorption were modelled using Molflow against internal thermal predictions, enabling a decontamination strategy to keep its optics free from performance-degrading accumulation (see “Molflow in space” image, left). Molflow has also complemented other NASA JPL codes to estimate the return flux (whereby gas particles desorbing from a spacecraft return to it after collisions with a planet’s atmosphere) during a series of planned fly-bys around Jupiter’s moon Europa. For such exospheric sampling missions, it is important to distinguish the actual collected sample from return-flux contaminants that originated from the spacecraft but ended up being collected due to atmospheric rebounds.

It is the ability to import large, complex geometries (through a triangulated file format called STL, used in 3D printing and supported by most CAD software) that makes Molflow usable for JPL’s molecular transport problems. In fact, the JPL team “boosted” our codes with external post-processing: instead of using the built-in visualisation, they parsed the output file format to extract pressure data on individual facets (polygons representing a surface cell), and sometimes even changed input parameters programmatically – once again working directly on Molflow’s own file format. They also made a few feature requests, such as adding histograms showing how many times molecules bounce before adsorption, or the total distance or time they travel before being adsorbed on the surfaces. These were straightforward to implement, and because JPL’s scientific interests also matched those of CERN users, such additions are now available for everyone in the public versions of the code. Similar requests have come from experiments employing short-lived radioactive beams, such as those generated at CERN’s ISOLDE beamlines. Last year, against all odds during COVID-related restrictions, the JPL team managed to visit CERN. During the visit we showed the team around the site and the chemistry laboratory, they held a seminar for our vacuum group about contamination control at JPL, and we presented the outlook for Molflow developments.

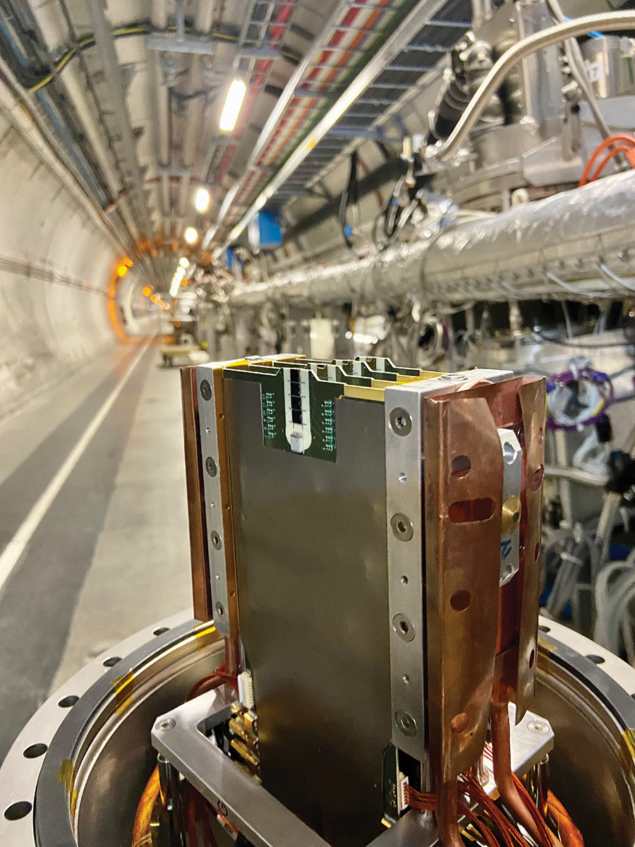

Our latest space-related collaboration, started in 2021, concerns the European Space Agency’s LISA mission, a future gravitational-wave interferometer in space (see CERN Courier September/October 2022 p51). Molflow is being used to analyse data from the recently completed LISA Pathfinder mission, which explored the feasibility of keeping two test masses in gravitational free-fall and using them as inertial sensors by measuring their motion with extreme precision. Because the satellite’s sides have different temperatures, and because the gas sources are asymmetric around the masses, there is a difference in outgassing between the two sides. Moreover, the gas molecules that reach the test mass are slightly faster on one side than on the other, resulting in a net force and torque acting on the mass, of the order of femtonewtons. When such precise inertial measurements are required, this phenomenon has to be quantified, along with other microscopic forces, such as Brownian noise resulting from the random bounces of molecules on the test mass. To this end, Molflow is currently being modified to add molecular force calculations for LISA, along with relevant physical quantities such as noise and resulting torque.

Sky’s the limit

Molflow has proven to be a versatile and effective computational physics model for the characterisation of free-molecular flow, having been adopted for use in space exploration and the aerospace sector. It promises to continue to intertwine different fields of science in unexpected ways. Thanks to the ever-growing gaming industry, which uses ray tracing to render photorealistic scenes with multiple light sources, consumer-grade graphics cards started supporting ray tracing in 2019. Although intended for gaming, they are programmable for generic purposes, including science applications. Simulating on graphics-processing units is much faster than on traditional CPUs, but it is also less precise: in the vacuum world, tiny imprecisions in the geometry can result in “leaks”, with some simulated particles crossing internal walls. If this issue can be overcome, the speedup potential is huge. In-house testing carried out recently at CERN by PhD candidate Pascal Bahr demonstrated a speedup factor of up to 300 on entry-level Nvidia graphics cards, for example.

Another planned Molflow feature is to include surface processes that change the simulation parameters dynamically. For example, some getter films gradually lose their pumping ability as they saturate with gas molecules. This saturation depends on the pumping speed itself, resulting in two parameters (pumping speed and molecular surface saturation) that depend on each other. The way around this is to perform the simulation in iterative time steps, which is straightforward to add but raises many numerical problems.
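The coupling between pumping and saturation can be illustrated with a deliberately simplified time-stepping loop. This is a sketch under stated assumptions, not the planned Molflow implementation: the sticking probability is assumed to fall linearly with surface coverage, and the incident flux is held constant, whereas in a full simulation each time step would re-run the Monte Carlo tracing with the updated sticking to obtain a new flux. The function name `saturate_getter` and its parameters are hypothetical.

```python
def saturate_getter(flux, s0, capacity, dt, n_steps):
    """Explicit time stepping of a getter surface that saturates as it pumps.

    flux      incident molecules per unit area per unit time (held constant here)
    s0        sticking probability of the fresh surface
    capacity  coverage (molecules per unit area) at which pumping stops
    Returns a list of (sticking, coverage) pairs, one per time step.
    """
    coverage = 0.0
    history = []
    for _ in range(n_steps):
        # Sticking depends on the current coverage (assumed linear law) ...
        sticking = s0 * max(0.0, 1.0 - coverage / capacity)
        # ... and the coverage grows by what was pumped during this step.
        coverage = min(capacity, coverage + sticking * flux * dt)
        history.append((sticking, coverage))
    return history
```

Even this toy version hints at the numerical issues mentioned above: the time step has to be small compared with the saturation time scale, otherwise the explicit update overshoots, and in a real simulation every step also carries the statistical noise of its Monte Carlo run.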

Finally, a much-requested feature is automation. The most recent versions of the code already allow scripting, that is, running batch jobs with physics parameters changed step-by-step between each execution. Extending these automation capabilities, and adding export formats that allow easier post-processing with common tools (Matlab, Excel and common Python libraries), would significantly increase usability. If the addition of GPU ray tracing and iterative simulations is successful, the resulting – much faster and more versatile – Molflow code will remain an important tool to predict and optimise the complex vacuum systems of future colliders.
