Pierre Charrue describes the infrastructure to ensure correct operation

The scale and complexity of the Large Hadron Collider (LHC) under construction at CERN are unprecedented in the field of particle accelerators. It has the largest number of components and the widest diversity of systems of any accelerator in the world. As many as 500 objects around the 27 km ring, from passive valves to complex experimental detectors, could in principle move into the beam path in either the LHC ring or the transfer lines. Operation of the machine will be extremely complicated for a number of reasons, including critical technical subsystems, a large parameter space, real-time feedback loops and the need for online magnetic and beam measurements. In addition, the LHC is the first superconducting accelerator built at CERN and will use four large-scale cryoplants with 1.8 K refrigeration capability.

The complexity means that repairs of any damaged equipment will take a long time. For example, it will take about 30 days to change a superconducting magnet. Then there is the question of damage if systems go wrong. The energy stored in the beams and magnets is more than twice the level in other machines. The energy accumulated in the beam alone could, for example, melt 500 kg of copper. All of this means that the LHC machine must be protected at all costs. If an incident occurs during operation, it is critical to be able to determine what has happened and trace the cause. Moreover, operation should not resume until the machine is back in a good working state.
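The copper comparison can be checked with a back-of-envelope calculation. The sketch below assumes the nominal LHC design parameters for one beam (2808 bunches of 1.15 × 10^11 protons at 7 TeV), which this article does not state, and textbook properties of copper with a constant specific heat. It is therefore only an order-of-magnitude check, not a precise figure.

```python
# Back-of-envelope check of the "melt 500 kg of copper" comparison.
# Assumed nominal LHC beam parameters (not given in the article).
E_CHARGE = 1.602176634e-19   # J per eV
BUNCHES = 2808               # bunches per beam
PROTONS_PER_BUNCH = 1.15e11  # protons per bunch
PROTON_ENERGY_EV = 7e12      # 7 TeV per proton

beam_energy_j = BUNCHES * PROTONS_PER_BUNCH * PROTON_ENERGY_EV * E_CHARGE

# Textbook copper properties; a constant specific heat is a simplification.
C_CU = 385.0        # J/(kg K), specific heat near room temperature
T_ROOM = 293.0      # K
T_MELT = 1358.0     # K, melting point of copper
L_FUSION = 2.05e5   # J/kg, latent heat of fusion


def energy_to_melt(mass_kg: float) -> float:
    """Heat needed to warm copper from room temperature to its melting point and melt it."""
    return mass_kg * (C_CU * (T_MELT - T_ROOM) + L_FUSION)


melt_500kg_j = energy_to_melt(500.0)
print(f"stored energy per beam:   {beam_energy_j / 1e6:.0f} MJ")
print(f"energy to melt 500 kg Cu: {melt_500kg_j / 1e6:.0f} MJ")
```

Under these assumptions one beam stores roughly 360 MJ, while heating and melting 500 kg of copper takes roughly 310 MJ, which is consistent with the comparison in the text.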

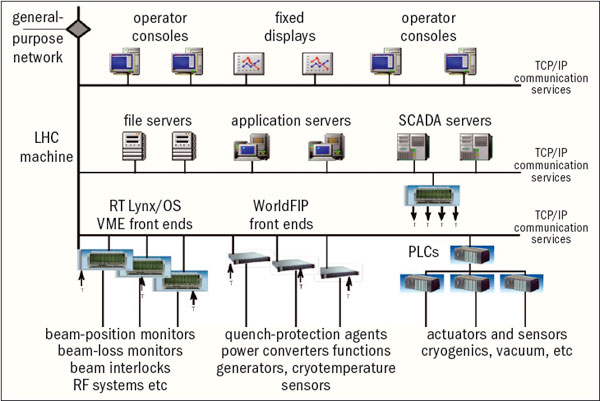

The accelerator controls group at CERN has spent the past four years developing a new software and hardware architecture for the control system, building on many years of experience in controlling CERN's particle injector chain. The resulting LHC controls infrastructure is based on a classic three-tier architecture: a basic resource tier that gathers all of the controls equipment located close to the accelerators; a middle tier of servers; and a top tier that interfaces with the operators (figure 1).

Complex architecture

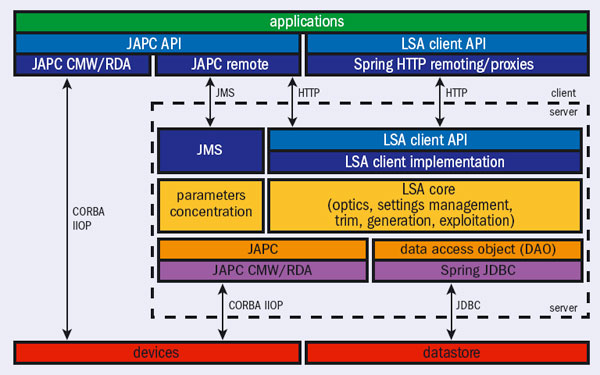

The LHC Software Application (LSA) system covers all of the most important aspects of accelerator controls: optics (Twiss parameters, machine layout), parameter space, settings generation and management (generation of functions based on optics, functions and scalar values for all parameters), trim (coherent modifications of settings, translation from physics to hardware parameters), operational exploitation, hardware exploitation (equipment control, measurements) and beam-based measurements. The software architecture is based on three main principles (figure 2). It is modular (each module has high cohesion and provides a clear application program interface to its functionality), layered (with three isolated logical layers – database and hardware access layer, business layer, user applications) and distributed (when deployed in the three-tier configuration). It provides homogeneous application software to operate the SPS accelerator, its transfer lines and the LHC, and it was already used successfully in 2005 and 2006 to operate the Low Energy Ion Ring (LEIR) accelerator, the SPS and the LHC transfer lines.
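To make the layering and the notion of a coherent trim more concrete, here is a minimal sketch with three layers: a repository standing in for the database and hardware access layer, a trim service as the business layer, and an operator function as the user application. It is not LSA's actual code or API. The class names, the parameter names "QF.K1" and "RQF.I_REF", and the linear physics-to-current calibration are all hypothetical (real magnet transfer functions are not linear).

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


# Database / hardware access layer: stores the current value of every setting.
@dataclass
class SettingsRepository:
    settings: Dict[str, float] = field(default_factory=dict)

    def get(self, name: str) -> float:
        return self.settings[name]

    def put(self, name: str, value: float) -> None:
        self.settings[name] = value


# Business layer: a trim changes a physics parameter and derives the matching
# hardware setting. The linear calibration is an assumption made for brevity.
@dataclass
class TrimService:
    repo: SettingsRepository
    # physics parameter -> (hardware parameter, hardware units per physics unit)
    calibration: Dict[str, Tuple[str, float]]

    def trim(self, physics_param: str, delta: float) -> float:
        new_value = self.repo.get(physics_param) + delta
        hw_param, factor = self.calibration[physics_param]
        # Write both values together so physics and hardware settings stay coherent.
        self.repo.put(physics_param, new_value)
        self.repo.put(hw_param, new_value * factor)
        return self.repo.get(hw_param)


# User application layer: calls the business layer only, never the repository.
def operator_trim(service: TrimService, param: str, delta: float) -> None:
    current = service.trim(param, delta)
    print(f"{param} trimmed by {delta}; converter reference now {current:.2f} A")


# Wiring the layers together (hypothetical parameter names and values).
repo = SettingsRepository({"QF.K1": 0.010, "RQF.I_REF": 100.0})
service = TrimService(repo, {"QF.K1": ("RQF.I_REF", 10_000.0)})
operator_trim(service, "QF.K1", 0.001)
```

Keeping the translation in the business layer means every user application gets the same physics-to-hardware conversion, which is one way of reading the "homogeneous application software" goal described above.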

The front-end hardware of the resource tier consists of 250 VMEbus64x sub-racks and 120 industrial PCs distributed in the surface buildings around the 27 km ring of the LHC. The mission of these systems is to perform direct real-time measurements and data acquisition close to the machine, and to deliver this information to the application software running in the upper levels of the control system. These embedded systems use in-house hardware and commercial off-the-shelf technology modules, and they serve as managers for various types of fieldbus such as WorldFIP, a deterministic bus used for the real-time control of the LHC power converters and the quench-protection system. All front ends in the LHC have a built-in timing receiver that guarantees synchronization to within 1 μs, as required for the time tagging of post-mortem data. The tier also covers programmable logic controllers, which drive various kinds of industrial actuator and sensor for systems such as the LHC cryogenics and vacuum systems.
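Why the common time base matters for post-mortem analysis can be sketched as follows: buffers recorded independently by different front ends are merged into a single timeline by time tag, and events closer together than the synchronization tolerance are grouped, because their recorded order cannot be trusted. The event format, source names and timestamps below are hypothetical; only the 1 μs tolerance comes from the text.

```python
import heapq
from typing import List, NamedTuple

SYNC_TOLERANCE_NS = 1_000  # front ends are synchronized to within 1 µs


class PMEvent(NamedTuple):
    t_ns: int    # time tag from the front end's timing receiver (assumed format)
    source: str  # front end that recorded the event
    what: str


def merge_post_mortem(*buffers: List[PMEvent]) -> List[PMEvent]:
    """Merge per-front-end buffers, each already in time order, into one timeline."""
    return list(heapq.merge(*buffers, key=lambda e: e.t_ns))


def group_coincident(timeline: List[PMEvent]) -> List[List[PMEvent]]:
    """Group events that occur less than 1 µs after the first event of the current
    group: within that window the recorded order cannot be trusted."""
    groups: List[List[PMEvent]] = []
    for event in timeline:
        if groups and event.t_ns - groups[-1][0].t_ns < SYNC_TOLERANCE_NS:
            groups[-1].append(event)
        else:
            groups.append([event])
    return groups


# Hypothetical buffers read back from three front ends after a beam dump.
blm = [PMEvent(999_800, "BLM-FE", "beam loss above threshold")]
qps = [PMEvent(1_000_000, "QPS-FE", "quench detected")]
pc = [PMEvent(1_000_600, "PC-FE", "converter tripped"),
      PMEvent(5_000_000, "PC-FE", "current at zero")]

timeline = merge_post_mortem(qps, pc, blm)
for group in group_coincident(timeline):
    print([e.source for e in group])
```

Here the loss, quench and converter trip fall within one microsecond and are reported as a single group, so an analyst would not infer causality from their order alone.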

The middle tier of the LHC controls system is mostly located in the Central Computer Room, close to the CERN Control Centre (CCC). This tier consists of various servers: application servers, which host the software required to operate the LHC beams and run the supervisory control and data acquisition (SCADA) systems; data servers that contain the LHC layout and the controls configuration, as well as all of the machine settings needed to operate the machine or to diagnose machine behaviours; and file servers containing the operational applications. More than 100 servers provide all of these services. The middle tier also includes the central timing that provides the information for cycling the whole complex of machines involved in the production of the LHC beam, from the linacs onwards.

At the top level – the presentation tier – consoles in the CCC run GUIs that will allow machine operators to control and optimize the LHC beams and supervise the state of key systems. Dedicated displays provide real-time summaries of key machine parameters. The CCC is divided into four "islands", each devoted to a specific task: CERN's PS complex; the SPS; technical services; and the LHC. Each island is made up of five operational consoles. A typical LHC console is composed of five computers (figure 3): PCs running interactive applications, fixed displays and video displays, including a dedicated PC connected only to the public network. This PC can be used for general office activities such as e-mail and web browsing, leaving the LHC control system isolated from exterior networks.

Failsafe mechanisms

In building the infrastructure for the LHC controls, the controls groups developed a number of technical solutions to the many challenges facing them. Security was of paramount concern: the LHC control system must be protected not only from external hackers, but also from inadvertent errors by operators and from failures in the system. The Computing and Network Infrastructure for Controls is a CERN-wide working group set up in 2004 to define a security policy for all of CERN, including networking aspects, operating-system configuration (Windows and Linux), services and support (Lüders 2007). One of the group's major outcomes is the formal separation of the general-purpose network and the technical network, where connection to the latter requires the appropriate authorization.
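The separation rule can be pictured as a default-deny check on traffic heading into the technical network. The sketch below is illustrative only: the address ranges are documentation placeholders standing in for real subnets, and the allow-list of authorized general-purpose hosts is a hypothetical mechanism, not a description of CERN's actual implementation.

```python
import ipaddress

# Hypothetical address plan: documentation ranges stand in for real subnets.
TECHNICAL_NETWORK = ipaddress.ip_network("192.0.2.0/24")
# Hosts on the general-purpose network explicitly authorized to reach the technical network.
AUTHORIZED_GPN_HOSTS = {ipaddress.ip_address("198.51.100.17")}


def may_connect(src: str, dst: str) -> bool:
    """Default deny for traffic into the technical network: only hosts inside it,
    or explicitly authorized outside hosts, may connect."""
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)
    if dst_ip not in TECHNICAL_NETWORK:
        return True  # this sketch only polices traffic towards the technical network
    return src_ip in TECHNICAL_NETWORK or src_ip in AUTHORIZED_GPN_HOSTS


print(may_connect("198.51.100.17", "192.0.2.10"))  # authorized office host
print(may_connect("198.51.100.99", "192.0.2.10"))  # unauthorized office host
```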

Another solution has been to deploy, in close collaboration with Fermilab, role-based access control (RBAC) to equipment in the communication infrastructure. The main motivation for having RBAC in a control system is to prevent unauthorized access and to provide an inexpensive way to protect the accelerator. A user without the appropriate role can be prevented from entering the wrong settings, or even from logging into the application at all. RBAC works by assigning roles to people and granting those roles permission to make specific settings. An RBAC token, containing information about the user, the application, the location, the role and so on, is obtained during the authentication phase (figure 4). This token is then attached to every subsequent access to equipment and is used to grant or deny the action. Depending on the action requested, who is making the call, from where and when it is executed, access is either granted or denied. This allows filtering, control and traceability of modifications to the equipment.
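The token-and-rules mechanism described above might look roughly like this in outline. The token fields follow the list in the text, but the rule table, role and console names, and the audit-log format are hypothetical; the real RBAC implementation developed with Fermilab is not reproduced here.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RbacToken:
    # Fields mirror those listed in the text; names and types are assumptions.
    user: str
    application: str
    location: str
    roles: frozenset
    expires_at: float  # epoch seconds; the token is only valid until then


@dataclass(frozen=True)
class Rule:
    device: str
    action: str           # "get" or "set"
    role: str
    locations: frozenset  # consoles from which the action is permitted


# Hypothetical rule table: two LHC consoles may read, only one may write.
RULES = [
    Rule("RQF.I_REF", "get", "LHC-Operator", frozenset({"CCC-LHC-1", "CCC-LHC-2"})),
    Rule("RQF.I_REF", "set", "LHC-Operator", frozenset({"CCC-LHC-1"})),
]

AUDIT_LOG: list = []  # every decision is recorded for traceability


def is_allowed(token: RbacToken, device: str, action: str,
               now: Optional[float] = None) -> bool:
    """Grant the action only if the token is still valid and some rule matches
    the device, the action, one of the token's roles and the token's location."""
    now = time.time() if now is None else now
    allowed = now < token.expires_at and any(
        rule.device == device
        and rule.action == action
        and rule.role in token.roles
        and token.location in rule.locations
        for rule in RULES
    )
    AUDIT_LOG.append((now, token.user, token.application, token.location,
                      device, action, allowed))
    return allowed


token = RbacToken("alice", "lhc-trim-gui", "CCC-LHC-2",
                  frozenset({"LHC-Operator"}), expires_at=time.time() + 3600)
print(is_allowed(token, "RQF.I_REF", "get"))  # permitted from this console
print(is_allowed(token, "RQF.I_REF", "set"))  # denied: settings need CCC-LHC-1
```

Recording every decision, granted or not, is what provides the traceability mentioned in the text; the expiry check covers the "when" part of the decision.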

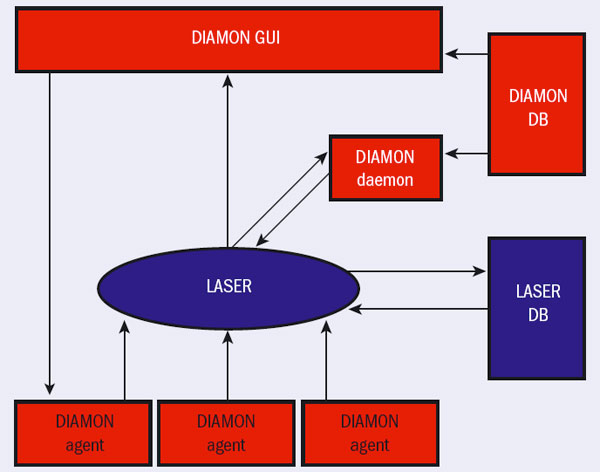

An alarm service for the operation of all of the CERN accelerator chain and technical infrastructure exists in the form of the LHC Alarm SERvice (LASER). This is used operationally for the transfer lines, the SPS, the CERN Neutrinos to Gran Sasso (CNGS) project, the experiments and the LHC, and it has recently been adapted for the PS Complex (Sigerud et al. 2005). LASER provides the collection, analysis, distribution, definition and archiving of information about abnormal situations – fault states – either for dedicated alarm consoles, running mainly in the control rooms, or for specialized applications.

LASER does not actually detect the fault states. This is done by user surveillance programs, which run either on distributed front-end computers or on central servers. The service processes about 180,000 alarm events each day and currently has more than 120,000 definitions. It is relatively simple for equipment specialists to define and send alarms, so one challenge has been to keep the number of events and definitions to a practical limit for human operations, according to recommended best practice.
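The division of labour described above, with surveillance programs detecting faults and the alarm service collecting, distributing and archiving them, can be sketched as a small publish/subscribe service. This is not LASER's API. The (family, member, code) key, the priority convention and the choice to redistribute only state changes are assumptions, the last one illustrating one way of keeping the event load manageable for operators.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

AlarmKey = Tuple[str, str, int]  # hypothetical (family, member, code) identifier


@dataclass(frozen=True)
class AlarmDefinition:
    key: AlarmKey
    priority: int  # assumed convention: 1 is the most urgent
    problem: str   # text shown to the operator


class AlarmService:
    """Receives fault states from surveillance programs; it does not detect faults itself."""

    def __init__(self, definitions: List[AlarmDefinition]):
        self.definitions: Dict[AlarmKey, AlarmDefinition] = {d.key: d for d in definitions}
        self.active: set = set()
        self.archive: list = []  # (time, key, active) for every event received
        self.subscribers: Dict[str, List[Callable[[AlarmDefinition, bool], None]]] = defaultdict(list)

    def subscribe(self, family: str, callback: Callable[[AlarmDefinition, bool], None]) -> None:
        """Alarm consoles or specialized applications register for one alarm family."""
        self.subscribers[family].append(callback)

    def push(self, key: AlarmKey, active: bool, t: float) -> None:
        """Called by a surveillance program when a fault state is raised or cleared."""
        if key not in self.definitions:
            raise KeyError(f"no definition for alarm {key}")
        self.archive.append((t, key, active))
        changed = (key in self.active) != active
        if active:
            self.active.add(key)
        else:
            self.active.discard(key)
        if changed:  # repeated identical events are archived but not redistributed
            for callback in self.subscribers[key[0]]:
                callback(self.definitions[key], active)


key = ("VACUUM", "VGPB.123", 1)
svc = AlarmService([AlarmDefinition(key, 2, "pressure above threshold")])
console: list = []
svc.subscribe("VACUUM", lambda d, a: console.append((d.problem, a)))
svc.push(key, True, t=0.0)
svc.push(key, True, t=1.0)   # duplicate: archived only
svc.push(key, False, t=2.0)  # fault cleared
print(console)
```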

The controls infrastructure of the LHC and its whole injector chain spans large distances and is based on a diversity of equipment, all of which needs to be constantly monitored. When a problem is detected, the CCC is notified and an appropriate repair has to be proposed. The purpose of the diagnostics and monitoring (DIAMON) project is to provide the operators and equipment groups with easy-to-use, first-line diagnostic tools to monitor the accelerator and beam controls infrastructure, to help solve problems and to help decide who is responsible for the first line of intervention.

The scope of DIAMON covers some 3000 "agents". These are pieces of code, each of which monitors a part of the infrastructure, from the fieldbuses and front ends to the hardware of the control-room consoles. DIAMON uses LASER and works in two main parts: the monitoring part constantly checks all items of the controls infrastructure and reports on problems, while the diagnostic part displays the overall status of the controls infrastructure and proposes support for repairs.
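The two-part structure of DIAMON can be illustrated with a toy version in which each agent wraps a check of one item, the monitoring part runs all checks, and the diagnostic part derives an overall status plus proposed first-line actions. The agent names, status levels and repair hints are hypothetical, and the reporting of problems through LASER is left out for brevity.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Callable, Dict, List, Tuple


class Status(IntEnum):
    OK = 0
    WARNING = 1
    ERROR = 2


@dataclass
class Agent:
    name: str                    # monitored item (hypothetical naming)
    check: Callable[[], Status]  # probe of that item, e.g. a ping or a fieldbus status read
    repair_hint: str             # first-line action proposed to the operator


def monitor(agents: List[Agent]) -> Dict[str, Status]:
    """Monitoring part: run every agent's check and collect the results."""
    return {agent.name: agent.check() for agent in agents}


def diagnose(agents: List[Agent], results: Dict[str, Status]) -> Tuple[Status, List[str]]:
    """Diagnostic part: worst status overall, plus proposed actions for faulty items."""
    overall = max(results.values(), default=Status.OK)
    hints = [f"{a.name}: {a.repair_hint}" for a in agents if results[a.name] != Status.OK]
    return overall, hints


agents = [
    Agent("fe-sr1-01", lambda: Status.OK, "reboot the front end"),
    Agent("worldfip-segment-12", lambda: Status.ERROR, "call the on-call fieldbus expert"),
]
overall, hints = diagnose(agents, monitor(agents))
print(overall.name, hints)
```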

The front end of the controls system has its own dedicated real-time front-end software architecture (FESA). This framework offers a complete environment for equipment specialists to design, develop, deploy and test equipment software. Despite the diversity of devices, such as beam-loss monitors, power converters, kickers, cryogenic systems and pick-ups, FESA has successfully standardized a high-level language and an object-oriented framework for describing and developing portable equipment software, at least across CERN's accelerators. This reduces the time spent developing and maintaining equipment software and brings consistency to the equipment software deployed on all of CERN's accelerators.
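The benefit of an object-oriented equipment framework can be shown in miniature: the framework class owns the generic get/set/subscribe plumbing, and the equipment specialist writes only the hardware-specific methods for each device type. This is a hypothetical illustration of the pattern, not FESA's actual interface; the class names, the property name and the dictionary standing in for hardware registers are all assumptions.

```python
from abc import ABC, abstractmethod
from typing import Any, Callable, Dict, List

Listener = Callable[[str, Any], None]


class EquipmentDevice(ABC):
    """Framework side (hypothetical): generic get/set/subscribe plumbing shared by all devices."""

    def __init__(self, name: str):
        self.name = name
        self._listeners: Dict[str, List[Listener]] = {}

    def get(self, prop: str) -> Any:
        return self.read_hardware(prop)

    def set(self, prop: str, value: Any) -> None:
        self.write_hardware(prop, value)
        for listener in self._listeners.get(prop, []):
            listener(self.name, self.get(prop))  # publish the value actually applied

    def subscribe(self, prop: str, listener: Listener) -> None:
        self._listeners.setdefault(prop, []).append(listener)

    # Equipment side: the only code a specialist writes for a new device type.
    @abstractmethod
    def read_hardware(self, prop: str) -> Any: ...

    @abstractmethod
    def write_hardware(self, prop: str, value: Any) -> None: ...


class PowerConverter(EquipmentDevice):
    """Example device type; a dict stands in for the real hardware registers."""

    def __init__(self, name: str):
        super().__init__(name)
        self._registers: Dict[str, float] = {"current": 0.0}

    def read_hardware(self, prop: str) -> Any:
        return self._registers[prop]

    def write_hardware(self, prop: str, value: Any) -> None:
        if prop not in self._registers:
            raise KeyError(f"{self.name} has no property {prop!r}")
        self._registers[prop] = float(value)


pc = PowerConverter("PC.DEMO.1")
received: list = []
pc.subscribe("current", lambda device, value: received.append((device, value)))
pc.set("current", 120)
print(pc.get("current"), received)
```

Adding a beam-loss monitor or a kicker in this pattern would mean writing another small subclass, while clients keep using the same get, set and subscribe calls.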

This article illustrates only some of the technical solutions that have been studied, developed and deployed in the controls infrastructure to cope with the stringent challenges of the LHC. This infrastructure has now been tested almost completely on machines and facilities that are already operational, from LEIR to the SPS and CNGS, and in LHC hardware commissioning. The estimated collective effort amounts to some 300 person-years and a cost of SFr21 m. Part of this large effort comes from international collaborations, whose valuable contributions are greatly appreciated. The accelerator controls group is now confident that it can meet the challenges of the LHC.

• This article is based on the author’s presentation at ICALEPCS ’07 (Control systems for big physics reach maturity).