In the quest for new, higher-precision measurements, the statistical treatment of experimental results is an essential and increasingly important part of modern research, but it is rarely discussed openly. A recent workshop at CERN remedied this.

The first conference for high-energy physicists devoted entirely to statistical data analysis was the Workshop on Confidence Limits, held at CERN on 17–18 January. The idea was to bring together a small group of specialists, but interest proved so great that attendance had to be capped at 136 participants, the capacity of the CERN Council Room. Others followed the sessions by video link from a nearby room. A second workshop on the same topic was held at Fermilab at the end of March.

Confidence limits are what scientists use to express the uncertainty in their measurements. They generalize the more familiar "experimental error" marked by a plus-or-minus sign, as in x = 2.5 ± 0.3, where 0.3 is the expected error (the standard deviation) of the value 2.5. In more complicated real-life situations, errors may be asymmetric or even completely one-sided; the latter is the case when one sets an upper limit on a phenomenon that was looked for but not seen. When more than one parameter is estimated simultaneously, the confidence limits become a confidence region, which may take on bizarre shapes, as happens in searches for neutrino oscillations.
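The one-sided case is easiest to see in a counting experiment that observes few or no events. The following is a minimal sketch, assuming a Poisson-distributed count with no background; the helper names `poisson_cdf` and `classical_upper_limit` are hypothetical, chosen only for illustration. The classical upper limit μ_up at confidence level CL is the mean for which observing n_obs or fewer events would have probability 1 − CL.

```python
import math

# Illustrative sketch only: hypothetical helpers, Poisson count, no background assumed.


def poisson_cdf(n: int, mu: float) -> float:
    """P(N <= n) for a Poisson variable with mean mu."""
    term = math.exp(-mu)          # P(N = 0)
    total = term
    for k in range(1, n + 1):
        term *= mu / k            # P(N = k) obtained from P(N = k - 1)
        total += term
    return total


def classical_upper_limit(n_obs: int, cl: float = 0.90, tol: float = 1e-10) -> float:
    """Classical one-sided upper limit on a Poisson mean (no background).

    Solves P(N <= n_obs | mu_up) = 1 - cl by bisection; the CDF falls
    monotonically as mu grows, so the root is unique.
    """
    lo, hi = 0.0, 10.0 + 10.0 * n_obs   # generous bracket for modest n_obs
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if poisson_cdf(n_obs, mid) > 1.0 - cl:
            lo = mid                     # mid is not yet excluded: the limit lies higher
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    for n in range(4):
        print(n, round(classical_upper_limit(n), 2))
```

For n_obs = 0 the condition reduces to e^(−μ_up) = 0.1, so the 90% limit is ln 10 ≈ 2.30 events.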

Analysis methods

Why the sudden interest in confidence limits? As co-convenors Louis Lyons (Oxford) and Fred James (CERN) had realized, the power and sophistication of modern experiments, probing rare phenomena and searching for ever-more exotic particles, require the most advanced techniques, not only in accelerator and detector design, but also in statistical analysis, to bring out all of the information contained in the data (but not more!).

As invited speaker Bob Cousins (UCLA) wrote several years ago in the American Journal of Physics: “Physicists embarking on seemingly routine error analyses are finding themselves grappling with major conceptual issues which have divided the statistics community for years. While the philosophical aspects of the debate may be endless, a practising experimenter must choose a way to report results.”

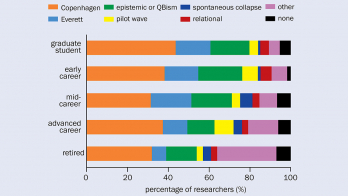

One of these “major conceptual issues” is the decades-old debate between two methodologies: frequentist (or classical) methods, which have been the basis of almost all scientific statistics since they were developed in the early 20th century, and Bayesian methods, which are much older but have been making a comeback and are considered by some to have distinct advantages.

With this in mind, the invited speakers included prominent proponents of both approaches. In the week leading up to the workshop, Fred James gave a series of lectures on statistics in the CERN Academic Training programme, which provided useful background for a number of the participants. The workshop benefited from a dedicated Web site, designed by Yves Perrin, which now contains write-ups of most of the talks.

One of the items that caused some amusement was the list of required reading material to be studied beforehand. The rumour that participants would be required to pass a written examination was untrue. Also unfounded was the suggestion that the convenors had something to do with the headline of the CERN weekly Bulletin that appeared on the opening day of the workshop: “CERN confronts the New Millennium with Confidence”.

In his introductory talk, Fred James proposed some ground rules. All methods should be based on recognized statistical principles and should therefore be labelled either classical or Bayesian. For classical methods, the frequentist properties should be known, especially the coverage: a method is said to have coverage if its 90% confidence limits are guaranteed to contain the true value of the parameter in 90% of cases, were the experiment to be repeated many times, no matter what the true value is.
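Coverage can be checked directly by simulating the repeated experiments that the definition refers to. A minimal sketch, assuming the simplest possible case of a single Gaussian measurement with known standard deviation and a 90% central interval x ± 1.645σ; the names `central_interval` and `coverage` are hypothetical:

```python
import random
from statistics import NormalDist

# Illustrative sketch: Gaussian measurement with known sigma (an assumption).
Z90 = NormalDist().inv_cdf(0.95)   # about 1.645: a central 90% interval leaves 5% in each tail


def central_interval(x: float, sigma: float) -> tuple[float, float]:
    """90% central confidence interval for one Gaussian measurement x."""
    return x - Z90 * sigma, x + Z90 * sigma


def coverage(mu_true: float, sigma: float = 1.0, n_trials: int = 100_000, seed: int = 1) -> float:
    """Fraction of simulated repetitions whose interval contains mu_true."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_trials):
        x = rng.gauss(mu_true, sigma)            # one virtual repetition of the experiment
        lo, hi = central_interval(x, sigma)
        hits += lo <= mu_true <= hi
    return hits / n_trials


if __name__ == "__main__":
    for mu in (0.0, 2.5, 1000.0):
        print(f"mu_true = {mu:7.1f}: coverage = {coverage(mu):.3f}")
```

Because the interval is built the same way whatever the true value, the estimated coverage comes out close to 0.90 for every `mu_true`, up to Monte Carlo fluctuations of about 0.001 for 10^5 trials. A method that failed such a check for some true values would undercover there.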

Bayesian methods, on the other hand, require the specification of a prior distribution of “degrees of belief” in the values of the parameter being measured, so proponents of Bayesian methods were invited to explain what prior distribution was assumed and how they justified it.
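To illustrate what specifying a prior involves, the sketch below assumes a Poisson count n_obs with known mean background b and a prior that is flat in the signal mean s for s ≥ 0 (a common choice, but by no means the only one). The posterior is then proportional to e^(−(s+b)) (s+b)^n_obs, and its integral can be written with Poisson cumulative probabilities, so the upper limit s_up satisfies P(N ≤ n_obs | s_up + b) = (1 − CL) · P(N ≤ n_obs | b). Function names are hypothetical.

```python
import math

# Illustrative sketch: flat prior on s >= 0 and known background b are assumptions.


def poisson_cdf(n: int, mu: float) -> float:
    """P(N <= n) for a Poisson variable with mean mu."""
    term = math.exp(-mu)
    total = term
    for k in range(1, n + 1):
        term *= mu / k
        total += term
    return total


def bayesian_upper_limit(n_obs: int, b: float, cl: float = 0.90, tol: float = 1e-10) -> float:
    """Posterior upper limit on the signal mean s.

    With a flat prior the posterior CDF is 1 - P(N <= n_obs | s + b) / P(N <= n_obs | b);
    we find the s at which it reaches cl by bisection.
    """
    target = (1.0 - cl) * poisson_cdf(n_obs, b)
    lo, hi = 0.0, 10.0 + 10.0 * n_obs    # generous bracket for modest n_obs and b
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if poisson_cdf(n_obs, mid + b) > target:
            lo = mid                      # posterior CDF at mid is still below cl
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    for b in (0.0, 1.0, 3.0):
        print(b, round(bayesian_upper_limit(2, b), 3))
```

For n_obs = 0 the formula gives s_up = ln 10 ≈ 2.30 at 90% CL whatever the value of b, which follows directly from the condition above.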

Bayesians were also asked to explain how they define probability and how they reconcile their definition with the probability of quantum mechanics, which is a frequentist probability. James then offered the view that, in the final analysis, a method would be judged on how well its confidence limits fulfilled three criteria:

* Do they give a good measure of the experiment’s sensitivity?

* Can they be combined with limits given by other experiments?

* Can they be used as input to a personal judgement about the validity of a hypothesis?

Provocative views

Bayesian methods were illustrated with several examples by Harrison Prosper (Florida) and Giulio D’Agostini (Rome). D’Agostini expressed some very provocative views, rejecting orthodox (classical) statistics completely. He said: “Coverage means nothing to me.” Prosper presented a more moderate Bayesian viewpoint, explaining that he was led to Bayesian methods because the frequentist approach failed to answer the questions that he wanted to answer. He acknowledged the arbitrariness inherent in the choice of the Bayesian prior, but felt that it was no worse than the arbitrariness in choosing the ensemble of virtual experiments needed for the frequentist concept of coverage. He also found that the practical problem of nuisance parameters (such as an incompletely known background or efficiency) is easier to handle in the Bayesian approach.
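As a rough illustration of what "easier to handle" can mean, here is a minimal sketch (not Prosper's own method) in which the signal efficiency `eff` is a nuisance parameter: the expected count is eff·s + b, the prior on `eff` is assumed Gaussian truncated at zero, and the prior on s is assumed flat. The nuisance parameter is simply integrated out numerically on a grid before the upper limit is read off the cumulative posterior. All names and numbers are hypothetical.

```python
import math

# Illustrative sketch: Gaussian efficiency prior and flat signal prior are assumptions.


def poisson_pmf(n: int, m: float) -> float:
    """P(N = n) for a Poisson mean m (m = 0 handled explicitly)."""
    if m <= 0.0:
        return 1.0 if n == 0 else 0.0
    return math.exp(n * math.log(m) - m - math.lgamma(n + 1))


def marginal_likelihood(s, n_obs, b, eff_mean, eff_sigma, n_eff=101):
    """Average P(n_obs | eff*s + b) over a Gaussian prior on eff, truncated to eff > 0."""
    lo = max(1e-6, eff_mean - 5.0 * eff_sigma)
    hi = eff_mean + 5.0 * eff_sigma
    total = norm = 0.0
    for i in range(n_eff):
        eff = lo + (hi - lo) * i / (n_eff - 1)
        weight = math.exp(-0.5 * ((eff - eff_mean) / eff_sigma) ** 2)
        total += weight * poisson_pmf(n_obs, eff * s + b)
        norm += weight
    return total / norm


def upper_limit(n_obs, b, eff_mean, eff_sigma, cl=0.90, s_max=20.0, ds=0.01):
    """Return the s at which the grid posterior CDF first reaches cl.

    s_max must be large enough that the posterior is negligible beyond it.
    """
    grid = [i * ds for i in range(int(round(s_max / ds)) + 1)]
    posterior = [marginal_likelihood(s, n_obs, b, eff_mean, eff_sigma) for s in grid]
    total = sum(posterior)
    running = 0.0
    for s, p in zip(grid, posterior):
        running += p
        if running >= cl * total:
            return s
    return s_max


if __name__ == "__main__":
    print(upper_limit(0, 0.0, 1.0, 1e-4))   # efficiency essentially known
    print(upper_limit(0, 0.0, 1.0, 0.2))    # 20% efficiency uncertainty
```

With the efficiency essentially fixed the result approaches ln 10 ≈ 2.30; allowing a 20% efficiency uncertainty loosens the limit, as one would expect.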

Bob Cousins (UCLA) summarized the main problems besetting the two approaches, contrasting the questions addressed by the various methods and the different kinds of input they require. The influential 1998 article that he wrote with Gary Feldman (Harvard) on a “unified approach” to classical confidence limits was quickly adopted by the neutrino community and already had an important place in the 1998 edition of the widely used statistics section of the Review of Particle Physics, published by the Particle Data Group. He set out his hopes for this workshop as a list of 10 points “we might agree on”.
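The core of the unified approach can be sketched for the textbook case of a Poisson count n with known background b and unknown signal mean μ ≥ 0. For each μ, values of n are added to the acceptance region in decreasing order of the likelihood ratio R = P(n | μ + b) / P(n | μ_best + b), where μ_best = max(0, n − b) is the best physically allowed value, until the region holds at least 90% probability; the confidence interval is the set of μ whose region contains the observed count. This is a simplified grid-based sketch, not the authors' code, and the function names are hypothetical.

```python
import math

# Illustrative sketch of likelihood-ratio ordering on a grid of mu values.


def poisson_pmf(n: int, m: float) -> float:
    """P(N = n) for a Poisson mean m (m = 0 handled explicitly)."""
    if m <= 0.0:
        return 1.0 if n == 0 else 0.0
    return math.exp(n * math.log(m) - m - math.lgamma(n + 1))


def acceptance_region(mu: float, b: float, cl: float = 0.90, n_max: int = 100) -> tuple[int, int]:
    """Acceptance region [n1, n2] for signal mean mu and background b."""
    ranked = []
    for n in range(n_max + 1):
        mu_best = max(0.0, n - b)                      # physically allowed best fit
        ratio = poisson_pmf(n, mu + b) / poisson_pmf(n, mu_best + b)
        ranked.append((ratio, n))
    ranked.sort(reverse=True)                          # highest likelihood ratio first
    accepted, prob = [], 0.0
    for _, n in ranked:
        accepted.append(n)
        prob += poisson_pmf(n, mu + b)
        if prob >= cl:
            break
    return min(accepted), max(accepted)


def fc_interval(n_obs: int, b: float, cl: float = 0.90,
                mu_max: float = 15.0, step: float = 0.01) -> tuple[float, float]:
    """Scan mu on a grid and keep the values whose acceptance region contains n_obs."""
    inside = []
    for i in range(int(round(mu_max / step)) + 1):
        mu = i * step
        n1, n2 = acceptance_region(mu, b, cl)
        if n1 <= n_obs <= n2:
            inside.append(mu)
    # Assumes the accepted mu values form a single interval.
    return min(inside), max(inside)


if __name__ == "__main__":
    print(fc_interval(0, 0.0))
```

For n_obs = 0 and b = 0 the upper end should agree, within the 0.01 grid spacing, with the value 2.44 that Feldman and Cousins tabulate for this case (slightly above the 2.30 of the purely one-sided construction).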

Different approaches

The rest of the workshop largely bore out Cousins’ hopes: there was little serious opposition to any of his points, and in the final session the most important practical suggestion, that experiments should report their likelihood functions, was actually put to a vote, with no votes against.
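Part of the appeal of that suggestion is that independent experiments can then be combined simply by adding their log-likelihood functions. The sketch below assumes, purely for illustration, counting experiments that measure the same signal rate μ with different sensitivities a and backgrounds b; all numbers and names are hypothetical. The range over which the combined ln L lies within 0.5 of its maximum is used as the usual approximate 68% interval.

```python
import math
from typing import Callable

# Illustrative sketch: hypothetical experiments, each summarized by ln L(mu).


def poisson_log_likelihood(n: int, a: float, b: float) -> Callable[[float], float]:
    """ln L(mu), up to a constant, for n events observed with expectation a*mu + b."""
    def ln_l(mu: float) -> float:
        m = a * mu + b
        if m <= 0.0:
            return 0.0 if n == 0 else -math.inf
        return n * math.log(m) - m
    return ln_l


def combine(log_likelihoods, mu_max: float = 50.0, step: float = 0.01):
    """Add independent ln L curves on a grid; return (best mu, lower, upper) for Delta ln L = 0.5."""
    grid = [i * step for i in range(int(round(mu_max / step)) + 1)]
    total = [sum(f(mu) for f in log_likelihoods) for mu in grid]
    i_best = max(range(len(grid)), key=total.__getitem__)
    inside = [mu for mu, t in zip(grid, total) if total[i_best] - t <= 0.5]
    return grid[i_best], min(inside), max(inside)


if __name__ == "__main__":
    exp_a = poisson_log_likelihood(n=12, a=1.0, b=4.0)   # hypothetical experiment A
    exp_b = poisson_log_likelihood(n=7, a=0.5, b=2.5)    # hypothetical experiment B
    print(combine([exp_a, exp_b]))
```

No information is lost in the combination as long as each experiment publishes its full ln L curve rather than only a limit derived from it.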

Variations of the method of Feldman and Cousins were proposed by Carlo Giunti (Turin), Giovanni Punzi (Pisa), and Byron Roe and Michael Woodroofe (Michigan). Woodroofe and Peter Clifford (Oxford) were the two statisticians invited to the workshop. They played an important role as arbiters of the degree to which various methods were in agreement with accepted statistical theory and practice. From time to time they gently reminded the physicists that they were reinventing the wheel, and that books had been written by statisticians since the famous tome by Kendall and Stuart.

Searches for the Higgs boson are being conducted at CERN’s LEP Collider and at Fermilab’s Tevatron. At LEP, Higgs events are expected to have a relatively clear signature, so selection cuts to reduce unwanted backgrounds can be fairly tight, and the number of candidate events is relatively small.

To make optimum use of the small event samples, a modified frequentist approach, the CLs method described by Alex Read, has been found to provide, on average, more stringent limits than a Bayesian method. The required computing time is significant, so techniques to reduce it were discussed.
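The idea behind CLs can be sketched for a few independent counting channels, using the likelihood ratio Q = L(s+b)/L(b) as test statistic. CLs+b and CLb are the probabilities, under the signal-plus-background and background-only hypotheses, of a result at least as background-like as the one observed, and CLs = CLs+b / CLb; a signal is conventionally regarded as excluded at 95% CL when CLs < 0.05. This is a simplified sketch, not the LEP code: systematic uncertainties are ignored and the channel contents are hypothetical. The toy Monte Carlo loop shows where the computing time goes.

```python
import math
import random

# Illustrative sketch: independent counting channels, no systematics (assumptions).


def poisson_sample(rng: random.Random, mean: float) -> int:
    """Knuth's multiplication method; adequate for the small means used here."""
    limit = math.exp(-mean)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def minus_2_ln_q(counts, s, b) -> float:
    """-2 ln Q with Q = L(s+b) / L(b), summed over channels."""
    return 2.0 * sum(si - n * math.log(1.0 + si / bi) for n, si, bi in zip(counts, s, b))


def cls(observed, s, b, n_toys: int = 20_000, seed: int = 7) -> float:
    """CLs = CLs+b / CLb, each estimated from toy experiments.

    Larger -2 ln Q is more background-like, so both confidence levels are the
    probability of a toy result with -2 ln Q at least as large as observed.
    """
    rng = random.Random(seed)
    x_obs = minus_2_ln_q(observed, s, b)

    def prob_as_background_like(means) -> float:
        hits = 0
        for _ in range(n_toys):
            toy = [poisson_sample(rng, m) for m in means]
            hits += minus_2_ln_q(toy, s, b) >= x_obs
        return hits / n_toys

    cl_sb = prob_as_background_like([si + bi for si, bi in zip(s, b)])
    cl_b = prob_as_background_like(b)
    return cl_sb / cl_b


if __name__ == "__main__":
    # Hypothetical three-channel search.
    print(cls(observed=[3, 1, 8], s=[2.0, 1.0, 3.0], b=[2.5, 0.8, 7.0]))
```

For a single channel with no events observed, CLs reduces to e^(−s), independent of b, which makes a convenient check of the toy machinery.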

In contrast, Higgs searches in proton-antiproton collisions at the Tevatron are far more complicated and must contend with larger backgrounds. Because the numbers of events are larger, a Bayesian approach is quite reasonable there. These and other searches in the CDF experiment at the Tevatron were described by John Conway (Rutgers).

The final session of the workshop was devoted to a panel discussion led by Glen Cowan (Royal Holloway), Gary Feldman, Don Groom (Berkeley), Tom Junk (Carleton) and Harrison Prosper.

Workshop participants had been encouraged to submit topics or questions to be discussed by the panel. As was fitting for a workshop devoted to small signals, the number of submitted questions was nil. This did not prevent an active discussion, with Bayesian versus frequentist views again being apparent. The overwhelming view was that the workshop had been very useful. It brought together many of the leading experts in the field, who appreciated the opportunity to hear other points of view, and to have detailed discussions about their methods. For most of the audience it provided a unique chance to learn about the advantages and limitations of the various methods available, and to hear about the underlying philosophy. Animated discussions spilled over from the sessions into the coffee breaks, lunch and dinner.

New challenges

Several problems clearly remain. Producing a single method that incorporates only the good features of each approach still looks somewhat Utopian. More accessible challenges may include combining different experiments in a relatively straightforward manner (this will be particularly important for Higgs searches in the Tevatron run due to start in 2001), dealing with “nuisance parameters” (such as uncertainties in detection efficiencies or backgrounds) in classical methods, and reducing computation time. It was also apparent that simply quoting a limit will not be enough: to be useful, a limit must be accompanied by a reasonably detailed description of the assumptions and methods used.

The organizers are now preparing the proceedings, which will include all of the discussions and, it is hoped, summaries of all of the talks. They should appear as a CERN Yellow Report. The audience at this workshop came predominantly from European laboratories. A similar meeting was held at Fermilab on 27–28 March.