Extracting neutrino properties from long-baseline neutrino experiments relies on modelling neutrino-nucleus interactions.

A major focus of experiments at the Large Hadron Collider (LHC) is to search for new phenomena that cannot be explained by the Standard Model of particle physics. In addition to sophisticated analysis routines, this requires detailed measurements of particle tracks and energy deposits produced in large detectors by the LHC’s proton–proton collisions and, in particular, precise knowledge of the collision energy. The LHC’s counter-rotating proton beams each carry an energy of 6.5 TeV and this quantity is known to a precision of about 0.1 per cent – a feat that requires enormous technical expertise and equipment.

So far, no clear signs of physics beyond the Standard Model (BSM) have been detected at the LHC or at other colliders where a precise knowledge of the beam energy is needed. Indeed, the only evidence for BSM physics has come from experiments in which the beam energy is known very poorly. In 1998, in work that would lead to the 2015 Nobel Prize in Physics, researchers discovered that neutrinos have mass and that, therefore, these elementary particles cannot be purely left-handed, as had been assumed by the Standard Model. The discovery came from the observation of oscillations of atmospheric and solar neutrinos. The energies of the latter are determined by various elementary processes in the Sun and cover a wide range from a few keV up to about 20 MeV.

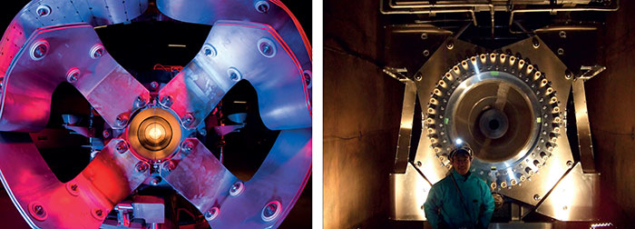

Since then, dedicated “long-baseline” experiments have started to explore neutrino oscillations under controlled conditions by sending neutrino beams produced in accelerator labs to detectors located hundreds of kilometres away. The T2K experiment in Japan shoots a beam from J-PARC into the Super-Kamiokande underground detector about 300 km away, while the NOvA experiment in the US aims a beam produced at Fermilab at an above-ground detector near the Canadian border about 800 km away. Finally, the international Deep Underground Neutrino Experiment (DUNE), for which prototype detectors are being assembled at CERN (see “Viewpoint: CERN’s recipe for knowledge transfer”), will send a neutrino beam from Fermilab over a distance of 1300 km to a detector in the old Homestake gold mine in South Dakota. The targets in these experiments are all nuclei, rather than single protons, and the neutrino-beam energies range from a few hundred MeV to about 30 GeV.

Image credits: Fermilab; J-PARC.

Such poor knowledge of neutrino-beam energies is no longer acceptable for the science that awaits us. All long-baseline experiments aim to determine crucial neutrino properties, namely the neutrino mixing angles, the value of a CP-violating phase in the neutrino sector, and the so far unknown ordering of the three neutrino masses. Extracting these parameters is possible only if the incoming neutrino energies are known, and these have to be reconstructed from observations of the final state of a neutrino–nucleus reaction. This calls for Monte Carlo generators that, unlike those used in high-energy physics, describe not only elementary particle reactions and decays but also the reactions of the produced particles with the nuclear environment.
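The role of the energy can be seen in the textbook two-flavour, vacuum approximation of the muon-neutrino survival probability, P = 1 − sin²2θ · sin²(1.267 Δm² L/E), with Δm² in eV², L in km and E in GeV. The sketch below is a minimal illustration, not how the experiments actually fit their data: it assumes Δm² ≈ 2.5 × 10⁻³ eV² and maximal mixing, ignores matter effects and the CP-violating phase, and its function names are hypothetical. Under these assumptions the first oscillation maximum for the 1300 km DUNE baseline falls near 2.6 GeV, and for a 300 km baseline near 0.6 GeV.

```python
import math

# Numerical factor in the oscillation phase for dm2 in eV^2, L in km, E in GeV.
PHASE_CONST = 1.267

def survival_probability(e_gev, baseline_km, dm2_ev2=2.5e-3, sin2_2theta=1.0):
    """Two-flavour vacuum P(nu_mu -> nu_mu).

    Assumed, illustrative parameters: dm2 = 2.5e-3 eV^2 and maximal mixing.
    No matter effects, no CP phase.
    """
    phase = PHASE_CONST * dm2_ev2 * baseline_km / e_gev
    return 1.0 - sin2_2theta * math.sin(phase) ** 2

def first_maximum_energy(baseline_km, dm2_ev2=2.5e-3):
    """Energy (GeV) at which the oscillation phase equals pi/2."""
    return PHASE_CONST * dm2_ev2 * baseline_km / (math.pi / 2.0)

if __name__ == "__main__":
    # Baselines as quoted in the text (approximate).
    for name, baseline in (("T2K", 300.0), ("NOvA", 800.0), ("DUNE", 1300.0)):
        e1 = first_maximum_energy(baseline)
        p_at_max = survival_probability(e1, baseline)
        p_shifted = survival_probability(e1 + 0.1, baseline)  # 100 MeV misreconstruction
        print(f"{name}: E1 = {e1:.2f} GeV, P(E1) = {p_at_max:.3f}, "
              f"P(E1 + 0.1 GeV) = {p_shifted:.3f}")
```

Comparing `P(E1)` with `P(E1 + 0.1 GeV)` at each baseline shows how far a 100 MeV error in the reconstructed energy moves an event along the oscillation curve.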

Beam-energy problem

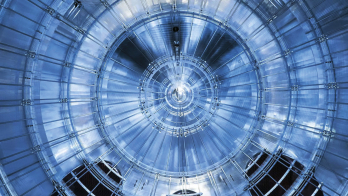

Neutrino beams have been produced for more than 50 years, famously allowing the discovery of the muon neutrino at Brookhaven National Laboratory in 1962. The difficulty in knowing the energy of a neutrino beam, compared with the situation at colliders such as the LHC, stems from the way the beams are produced. First, a high-current proton beam is fired into a thick target to produce many secondary particles such as charged pions and kaons, which are emitted in the forward direction. A device called a magnetic horn, invented by Simon van der Meer at CERN in 1961, then focuses these charged particles into a given direction, in which they decay into neutrinos and their corresponding charged leptons. Once the charged leptons have been removed by suitable absorber materials, a neutrino beam emerges.
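The energy a neutrino inherits from its parent follows from the two-body kinematics of π → μν. For a massless neutrino the lab energy is E_ν = E*/[γ(1 − β cos θ)], where E* = (m_π² − m_μ²)/(2m_π) ≈ 29.8 MeV is the neutrino energy in the pion rest frame and θ is the lab angle between neutrino and pion. A minimal Python sketch, considering only charged pions (kaon decays are left out) and using hypothetical function names:

```python
import math

M_PI = 0.13957   # charged-pion mass, GeV
M_MU = 0.105658  # muon mass, GeV

# Neutrino energy in the pion rest frame for pi -> mu nu (neutrino mass neglected).
E_STAR = (M_PI**2 - M_MU**2) / (2.0 * M_PI)

def neutrino_energy(e_pion, theta_lab=0.0):
    """Lab-frame neutrino energy (GeV) for a pion of energy e_pion (GeV)
    whose neutrino is emitted at lab angle theta_lab (rad) to the pion direction."""
    if e_pion < M_PI:
        raise ValueError("pion energy below the pion mass")
    gamma = e_pion / M_PI
    beta = math.sqrt(1.0 - 1.0 / gamma**2)
    # Exact two-body result: E_nu = E* / (gamma * (1 - beta * cos(theta))).
    return E_STAR / (gamma * (1.0 - beta * math.cos(theta_lab)))

if __name__ == "__main__":
    # Illustrative pion energies; forward neutrinos carry about 43% of E_pi.
    for e_pi in (2.0, 5.0, 10.0, 20.0):
        fwd = neutrino_energy(e_pi)
        off = neutrino_energy(e_pi, 0.010)  # 10 mrad from the pion direction
        print(f"E_pi = {e_pi:5.1f} GeV: E_nu = {fwd:.2f} GeV forward, "
              f"{off:.2f} GeV at 10 mrad")
```

For forward emission and γ ≫ 1 this reduces to E_ν ≈ (1 − m_μ²/m_π²) E_π ≈ 0.43 E_π, so any spread in the pion energies maps directly onto a spread in neutrino energies, and the angular dependence broadens it further.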

Whereas a particle beam in a high-energy collider has a diameter of about 10 μm at the collision point, the width of a neutrino beam at its origin is set by the dimensions of the horn, which are typically of the order of 1 m. Since the pions and kaons are produced with their own energy spectra, which have been measured by experiments such as HARP and NA61/SHINE at CERN, their two-body decays into a charged lepton and a neutrino lead to a broad neutrino-energy distribution. By the time a neutrino beam reaches a long-baseline detector, it may be as wide as a few kilometres, and its energy is known only within broad ranges. For example, the beam envisaged for DUNE will have an energy distribution peaking at about 2.5 GeV, with tails extending all the way down to 0 GeV on one side and up to 30 GeV on the other. Although the high-energy tail is small, the neutrino–nucleon cross-section in this region rises roughly linearly with the neutrino energy, so even a small tail contributes noticeably to the interactions in the detector (figure 1).
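Why a small high-energy tail still matters can be checked numerically: the event rate in each energy bin is the flux times the cross-section. The sketch below uses an invented flux shape (maximum near 2.5 GeV, with a weak tail out to 30 GeV) and a cross-section taken to be exactly proportional to energy. Both are toy assumptions, not DUNE design inputs, and the function names are hypothetical.

```python
import math

def toy_flux(e):
    """Invented flux shape (arbitrary units): peak near 2.5 GeV plus a weak hard tail."""
    return e**2 * math.exp(-e / 1.25) + 0.05 * math.exp(-e / 6.0)

def toy_cross_section(e):
    """Toy cross-section, assumed exactly proportional to energy (arbitrary units)."""
    return e

def fraction_above(weights, energies, cut):
    """Share of the summed weights carried by bins with energy above cut."""
    total = sum(weights)
    return sum(w for w, e in zip(weights, energies) if e > cut) / total

if __name__ == "__main__":
    n_bins, e_max = 600, 30.0
    de = e_max / n_bins
    energies = [de * (i + 0.5) for i in range(n_bins)]  # bin centres, 0-30 GeV
    flux = [toy_flux(e) for e in energies]
    rate = [f * toy_cross_section(e) for f, e in zip(flux, energies)]  # flux x sigma
    for label, w in (("flux", flux), ("events", rate)):
        print(f"{label:6s} above 5 GeV: {fraction_above(w, energies, 5.0):.1%}")
```

Because each bin is weighted by E, the share of events above 5 GeV always exceeds the share of flux above 5 GeV when the cross-section rises linearly.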

The neutrino energy is a key parameter in the formula governing neutrino-oscillation probabilities and must be reconstructed event by event. In a “clean” two-body reaction such as νμ + n → μ− + p, where a neutrino undergoes quasi-elastic (QE) scattering off a neutron at rest, the neutrino energy can be determined from the kinematics (energy and angle) of the outgoing muon alone. This kinematical, or QE-based, approach requires a detector good enough to establish that no inelastic excitation of the nucleon has taken place. Alternatively, the so-called calorimetric method measures the energies of all the outgoing particles and sums them to obtain the incoming neutrino energy. Because both methods rely on imperfect detectors with limited acceptance and efficiency, the reconstructed energy may differ from the true energy, and detector simulations are therefore essential.
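For the two-body case the kinematical formula follows from four-momentum conservation alone. Writing νμ + n → μ− + X with the neutron at rest and X a single hadron of mass m_X, E_ν = [2M_n E_μ − (M_n² + m_μ² − m_X²)] / [2(M_n − E_μ + p_μ cos θ_μ)], where θ_μ is the muon angle relative to the beam. The sketch implements this under the stated free-nucleon assumption (no binding energy, no Fermi motion); the names are hypothetical. Setting m_X to the Δ(1232) mass shows what happens if an inelastic excitation goes unnoticed:

```python
import math

M_N = 0.939565    # neutron mass, GeV
M_P = 0.938272    # proton mass, GeV
M_MU = 0.105658   # muon mass, GeV
M_DELTA = 1.232   # Delta(1232) pole mass, GeV (width ignored)

def e_nu_kinematic(e_mu, cos_theta, m_x=M_P):
    """Neutrino energy from the muon alone for nu_mu + n -> mu- + X,
    assuming a free neutron at rest and a single final hadron of mass m_x.

    E_nu = (2 M_n E_mu - (M_n^2 + m_mu^2 - m_x^2)) / (2 (M_n - E_mu + p_mu cos_theta))
    """
    p_mu = math.sqrt(e_mu**2 - M_MU**2)
    numerator = 2.0 * M_N * e_mu - (M_N**2 + M_MU**2 - m_x**2)
    denominator = 2.0 * (M_N - e_mu + p_mu * cos_theta)
    return numerator / denominator

if __name__ == "__main__":
    e_mu, cos_theta = 0.8, 0.9   # illustrative muon energy (GeV) and direction
    e_qe = e_nu_kinematic(e_mu, cos_theta)              # QE hypothesis
    e_delta = e_nu_kinematic(e_mu, cos_theta, M_DELTA)  # if a Delta was produced
    print(f"QE hypothesis: {e_qe:.3f} GeV; Delta hypothesis: {e_delta:.3f} GeV")
```

For the same muon, the QE hypothesis (m_X = M_p) returns a lower energy than the Δ hypothesis by (m_Δ² − M_p²)/[2(M_n − E_μ + p_μ cos θ_μ)], which amounts to a few hundred MeV for forward-going muons.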

A major additional complication arises because all modern neutrino experiments use nuclear targets, such as water in T2K and argon in DUNE. Even if the neutrino–nucleus interaction can be described as a superposition of quasi-free interactions of the neutrino with individual nucleons, those nucleons are bound and undergo Fermi motion. The kinematical method therefore suffers because the initial-state neutron is no longer at rest but moves with a momentum of up to about 225 MeV, which smears the reconstructed neutrino energy around its true value by a few tens of MeV. Furthermore, final-state interactions of the produced hadrons, both among themselves and with the rest of the target nucleus, significantly complicate the energy reconstruction. Events that were genuinely QE at the primary vertex cannot be distinguished from events in which a pion is first produced and then absorbed in the nuclear medium (figure 2); for the latter, the kinematical approach necessarily yields the wrong neutrino energy.
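The Fermi-motion smearing can be explored with a small Monte Carlo. Each event takes a neutron from a toy Fermi gas with p_F = 225 MeV, generates the two-body final state μ− + p for a fixed neutrino energy, and then reconstructs E_ν with the at-rest formula. This is a sketch under strong simplifying assumptions, all of them ours rather than the text's: on-shell neutrons with no binding energy, no Pauli blocking, isotropic emission in the centre-of-mass frame instead of the real QE angular distribution, and no final-state interactions. The function names are hypothetical.

```python
import math
import random

M_N = 0.939565   # neutron mass, GeV
M_P = 0.938272   # proton mass, GeV
M_MU = 0.105658  # muon mass, GeV

def e_nu_qe(e_mu, cos_theta):
    """QE energy reconstruction assuming a free neutron at rest."""
    p_mu = math.sqrt(e_mu**2 - M_MU**2)
    num = 2.0 * M_N * e_mu - (M_N**2 + M_MU**2 - M_P**2)
    return num / (2.0 * (M_N - e_mu + p_mu * cos_theta))

def random_unit_vector(rng):
    cos_t = rng.uniform(-1.0, 1.0)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    sin_t = math.sqrt(1.0 - cos_t**2)
    return (sin_t * math.cos(phi), sin_t * math.sin(phi), cos_t)

def boost(e, p, beta):
    """Lorentz-boost the four-vector (e, p) by the velocity vector beta."""
    b2 = sum(b * b for b in beta)
    if b2 == 0.0:
        return e, p
    gamma = 1.0 / math.sqrt(1.0 - b2)
    bp = sum(b * q for b, q in zip(beta, p))
    k = (gamma - 1.0) * bp / b2 + gamma * e
    return gamma * (e + bp), tuple(q + k * b for q, b in zip(p, beta))

def simulate_qe(e_nu, p_fermi, n_events, seed=1):
    """Reconstructed energies for toy QE events (neutrino along +z)."""
    rng = random.Random(seed)
    out = []
    for _ in range(n_events):
        # Toy Fermi gas: neutron momentum uniform inside a sphere of radius p_fermi.
        pn_mag = p_fermi * rng.random() ** (1.0 / 3.0)
        pn = tuple(pn_mag * u for u in random_unit_vector(rng))
        en = math.sqrt(M_N**2 + pn_mag**2)  # on-shell, binding ignored
        e_tot = e_nu + en
        p_tot = (pn[0], pn[1], pn[2] + e_nu)
        s = e_tot**2 - sum(q * q for q in p_tot)
        # Muon momentum in the centre-of-mass frame of the mu + p system.
        p_star = math.sqrt((s - (M_MU + M_P)**2) * (s - (M_MU - M_P)**2)) / (2.0 * math.sqrt(s))
        e_star = math.sqrt(p_star**2 + M_MU**2)
        # Toy assumption: isotropic emission in the CM frame.
        p_cm = tuple(p_star * u for u in random_unit_vector(rng))
        e_mu, p_mu = boost(e_star, p_cm, tuple(q / e_tot for q in p_tot))
        cos_theta = p_mu[2] / math.sqrt(sum(q * q for q in p_mu))
        out.append(e_nu_qe(e_mu, cos_theta))
    return out

if __name__ == "__main__":
    rec = sorted(simulate_qe(e_nu=1.0, p_fermi=0.225, n_events=20000))
    n = len(rec)
    print(f"true 1.000 GeV -> median {rec[n // 2]:.3f} GeV, "
          f"central 68% [{rec[int(0.16 * n)]:.3f}, {rec[int(0.84 * n)]:.3f}] GeV")
```

Setting `p_fermi` to zero recovers the true energy for every event; with 225 MeV the reconstructed values spread around the true one. Binding, nuclear potentials and final-state interactions, which real generators include, would come on top of this.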

Image credit: T Leitner.

The calorimetric method, on the other hand, suffers because detectors often do not see all particles at all energies. Here, the challenge is to “calculate backwards” from the final state, which is only partly known because of detector imperfections, to the initial state of the reaction. Figure 3 gives an impression of how good this backwards calculation has to be. It shows the sensitivity of the oscillation signal to changes in the CP-violating phase: distinguishing between the curves for different assumptions about the phase and the neutrino mass ordering requires an energy accuracy of about 100 MeV for DUNE and about 50 MeV for T2K. At the same time, the figure shows that the oscillation maxima have to be determined to within about 20% to measure the phase and the mass ordering.
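The calorimetric bookkeeping, and how detector thresholds bias it, can be sketched in a few lines. In this simplified energy balance the neutrino energy equals the muon energy plus the kinetic energies of the outgoing nucleons, the total energies of the produced mesons, and a nuclear removal energy that no detector records. The particle list, the 50 MeV proton threshold, the invisible neutron and the 30 MeV removal energy are hypothetical illustration values, not properties of any real detector.

```python
from dataclasses import dataclass

@dataclass
class Particle:
    kind: str      # e.g. "mu", "p", "n", "pi+"
    energy: float  # GeV: kinetic energy for nucleons, total energy otherwise

def true_neutrino_energy(particles, removal_energy):
    """Simplified energy balance: everything in the final state plus the
    (hypothetical) nuclear removal energy that no detector sees."""
    return sum(p.energy for p in particles) + removal_energy

def calorimetric_energy(particles, thresholds):
    """Sum only what a hypothetical detector sees: particle kinds listed in
    thresholds whose energy exceeds the corresponding threshold."""
    return sum(p.energy for p in particles
               if p.kind in thresholds and p.energy > thresholds[p.kind])

if __name__ == "__main__":
    event = [Particle("mu", 0.80), Particle("p", 0.12), Particle("p", 0.03),
             Particle("n", 0.10), Particle("pi+", 0.30)]
    # Hypothetical detector: neutrons invisible, protons need T > 50 MeV.
    thresholds = {"mu": 0.0, "p": 0.05, "pi+": 0.0}
    e_true = true_neutrino_energy(event, removal_energy=0.03)
    e_cal = calorimetric_energy(event, thresholds)
    print(f"true {e_true:.2f} GeV, calorimetric {e_cal:.2f} GeV")
```

In this made-up event the invisible neutron, the sub-threshold proton and the removal energy together account for 160 MeV of the 1.38 GeV, so the calorimetric estimate comes out low. A generator is needed to estimate how much energy typically goes missing in each class of events.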

Detectors near and far

Neutrino physicists working on long-baseline experiments have long been aware of the problems in extracting the oscillation signal. The standard remedy is to build one detector at the oscillation distance (the far detector, FD) and another close to the neutrino production target (the near detector, ND). In principle, dividing the event rates seen in the FD by those in the ND then yields the oscillation probability directly. The division also raises the hope that uncertainties in our knowledge of cross-sections and reaction mechanisms cancel out, making the resulting probability less sensitive to uncertainties in the energy reconstruction. In practice, however, there are obstacles to such an approach. For instance, the ND often contains a different active material and has a different geometry from the FD, the latter simply because of the significant broadening of the neutrino beam between the ND and the FD. Furthermore, because of the oscillation, the neutrino energy spectrum at the ND differs from that at the FD. It is therefore vital to have a good understanding of the interactions on different target nuclei and in different energy regimes, because the energy reconstruction has to be done separately at the ND and at the FD.
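A toy model makes the last point concrete. If the ND and FD had identical targets and identical response, flux and cross-section would cancel in the ratio of true-energy spectra, leaving the survival probability. Once both spectra are smeared by the same energy resolution, however, the ratio in reconstructed energy no longer equals the oscillation probability, because the oscillation has reshaped the FD spectrum before the smearing. Everything below is hypothetical: the spectrum shape, the 1300 km two-flavour oscillation with Δm² = 2.5 × 10⁻³ eV² and maximal mixing, the Gaussian resolution and the function names; overall normalisation factors such as the different detector masses are dropped.

```python
import math

# Assumed oscillation parameters: dm2 (eV^2), mixing, far-detector baseline (km).
DM2, SIN2_2THETA, L_FD = 2.5e-3, 1.0, 1300.0

def p_survival(e):
    """Two-flavour vacuum survival probability at energy e (GeV)."""
    return 1.0 - SIN2_2THETA * math.sin(1.267 * DM2 * L_FD / e) ** 2

def smearing_matrix(centres, width):
    """Row-normalised Gaussian migration matrix: m[i][j] = P(reco bin j | true bin i)."""
    m = []
    for e_true in centres:
        row = [math.exp(-0.5 * ((e_reco - e_true) / width) ** 2) for e_reco in centres]
        norm = sum(row)
        m.append([x / norm for x in row])
    return m

def reco_spectrum(true_counts, m):
    n = len(true_counts)
    return [sum(true_counts[i] * m[i][j] for i in range(n)) for j in range(n)]

def fd_nd_ratio(width, n_bins=50, e_max=5.0):
    """FD/ND ratio in reconstructed-energy bins for a given resolution (GeV)."""
    de = e_max / n_bins
    centres = [de * (k + 0.5) for k in range(n_bins)]
    # Hypothetical flux x cross-section, identical for ND and FD targets.
    nd_true = [e**3 * math.exp(-e / 1.25) for e in centres]
    fd_true = [n * p_survival(e) for n, e in zip(nd_true, centres)]
    m = smearing_matrix(centres, width)
    nd_reco, fd_reco = reco_spectrum(nd_true, m), reco_spectrum(fd_true, m)
    return centres, [f / n for f, n in zip(fd_reco, nd_reco)]

if __name__ == "__main__":
    centres, ratio = fd_nd_ratio(width=0.3)
    for e, r in list(zip(centres, ratio))[5::5]:
        print(f"E_reco = {e:.2f} GeV: FD/ND = {r:.3f}, P(E) = {p_survival(e):.3f}")
```

With a negligible resolution (`fd_nd_ratio(1e-3)`) the ratio reproduces `p_survival` bin by bin; with a 0.3 GeV resolution the oscillation pattern is washed out, most strongly at low energies where the probability varies fastest.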

To place neutrino–nucleus reactions on a more solid empirical footing, neutrino researchers have started to measure the relevant cross-sections to a much higher accuracy than was possible at previous experiments such as CERN’s NOMAD. For example, MiniBooNE at Fermilab has provided the world’s largest sample of charged-current events (QE-like reactions and pion production) on a mineral-oil target, and it is now being followed by the nearby MicroBooNE experiment, which uses an argon target. At higher energies, the MINERvA experiment (also at Fermilab) is dedicated to determining neutrino–nucleus cross-sections with a beam whose energy distribution peaks at about 3.5 GeV, close to that expected for DUNE. Cross-section measurements are also taking place in the NDs of T2K and NOvA; they are crucial to our understanding of neutrino–nucleus interactions and for benchmarking new neutrino generators.

Image credit: Ann. Rev. Nucl. Part. Sci. 66 47.

Neutrino generators simulate the entire neutrino–nucleus interaction, from the initial neutrino–nucleon interaction to the final state of many outgoing and interacting hadrons, and are needed to perform the backwards computation from the final state to the initial state. Such generators are also needed to estimate the effects of detector acceptance and efficiency, similar to the role of GEANT in other nuclear and high-energy experiments. They should be able to describe all of the interactions over the full energy range of interest in a given experiment, as well as neutrino–nucleus and hadron–nucleus interactions involving different nuclei. The generators should therefore be based on state-of-the-art nuclear physics, both for the nuclear structure and for the reaction process itself.

Presently used neutrino generators, such as NEUT or GENIE, deal with the final-state interactions by employing Monte Carlo cascade codes in which the nucleons move freely between collisions and nuclear-structure information is kept at a minimum. The challenge here is to deal correctly with questions such as relativity in many-body systems, nuclear potentials and possible in-medium changes of the hadronic interactions. Significant progress has been made recently in describing the structure of target nuclei and their excitations in so-called Green’s function Monte Carlo theory. A similarly sophisticated approach to the hadronic final-state interactions is provided by the non-equilibrium Green’s function method. This method, the foundations of which were written down half a century ago, is the only known way to describe high-multiplicity events while taking care of possible in-medium effects on the interaction rates and the off-shell transport between collisions. Only during the last two decades have numerical implementations of this quantum-kinetic transport theory become possible. A neutrino generator built on this method (GiBUU) has recently been used to explore the uncertainties that are inherent in the kinematical-energy reconstruction method for the very same process shown in figure 3, and the result of that study (figure 4) gives an idea of the errors inherent in such an energy reconstruction.
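The basic cascade idea, free streaming between collisions, can be illustrated with a deliberately crude single-particle transport toy. It leaves out exactly the physics the text identifies as important (nuclear potentials, in-medium modifications, off-shell transport, Pauli blocking, secondary particles, a realistic density profile), so it illustrates only the classical cascade picture, not GiBUU or the Green's function methods. The uniform sphere, the density of 0.16 fm⁻³, the cross-sections, the absorption probability and the function names are all assumptions made for illustration.

```python
import math
import random

def random_direction(rng):
    """Isotropic unit vector."""
    cos_t = rng.uniform(-1.0, 1.0)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    sin_t = math.sqrt(1.0 - cos_t * cos_t)
    return [sin_t * math.cos(phi), sin_t * math.sin(phi), cos_t]

def distance_to_surface(pos, direction, radius):
    """Straight-line path length from a point inside a sphere to its surface."""
    b = sum(x * u for x, u in zip(pos, direction))
    c = sum(x * x for x in pos) - radius**2
    return -b + math.sqrt(max(0.0, b * b - c))

def escape_fraction(radius_fm, density_fm3, sigma_fm2, p_absorb, n=20000, seed=7):
    """Toy single-particle cascade in a uniform sphere (hypothetical model).

    A hadron starts at a uniformly distributed point, streams freely on a
    straight line, and collides after an exponentially distributed path with
    mean 1/(density * sigma). At each collision it is absorbed with
    probability p_absorb; otherwise it scatters isotropically and continues.
    Returns the fraction of hadrons that leave the nucleus.
    """
    rng = random.Random(seed)
    inv_lambda = density_fm3 * sigma_fm2   # inverse mean free path, 1/fm
    escaped = 0
    for _ in range(n):
        r = radius_fm * rng.random() ** (1.0 / 3.0)
        pos = [r * u for u in random_direction(rng)]
        direction = random_direction(rng)
        while True:
            if inv_lambda == 0.0:          # no interactions: always escapes
                escaped += 1
                break
            free_path = -math.log(1.0 - rng.random()) / inv_lambda
            if free_path >= distance_to_surface(pos, direction, radius_fm):
                escaped += 1
                break
            pos = [x + free_path * u for x, u in zip(pos, direction)]
            if rng.random() < p_absorb:    # absorbed in the nucleus
                break
            direction = random_direction(rng)
    return escaped / n

if __name__ == "__main__":
    radius = 1.2 * 40 ** (1.0 / 3.0)   # rough argon radius in fm, assuming r0 = 1.2 fm
    for sigma in (1.0, 3.0, 5.0):      # hypothetical cross-sections, fm^2 (1 fm^2 = 10 mb)
        f = escape_fraction(radius, 0.16, sigma, p_absorb=0.3)
        print(f"sigma = {sigma:.0f} fm^2: escape fraction {f:.2f}")
```

Even this toy shows why final-state interactions cannot be neglected: for nucleon-scale cross-sections a sizeable fraction of the hadrons produced at the primary vertex never leaves the nucleus unchanged.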

Generators that contain all the physics of neutrino–nucleus interactions are absolutely essential to get at the neutrino’s intriguing properties in long-baseline experiments. The situation is comparable to that in experiments at the LHC and at the Relativistic Heavy Ion Collider at Brookhaven that study the quark–gluon plasma (QGP). In those experiments, the existence and properties of the QGP can be inferred only by calculating backwards from the final-state observations of “normal” hadrons to the hot and dense collision zone of quarks and gluons. Likewise, without a full knowledge of the neutrino–nucleus interactions, the neutrino energy in current and future long-baseline neutrino experiments cannot be reliably reconstructed. Generators for neutrino experiments should therefore be of the same quality as the corresponding experimental apparatus, and this is where the expertise and methods of nuclear physicists are needed in experiments with neutrino beams.

A natural test bed for these generators is provided by data from electron–nucleus reactions. These test the vector part of the neutrino–nucleus interaction and thus constitute a mandatory test for any generator. Such experiments with electrons at JLAB are presently in the planning stage. Since the energy reconstruction has to start from the final state of the reaction, the four-vectors of all final-state particles are needed for the backwards calculation to the initial state. Inclusive lepton–nucleus cross-sections, which carry no information on the final state, are therefore not sufficient.

Call to action

All of this has been realised only recently, and there is now a growing community that brings together experimental high-energy physicists working on long-baseline experiments and nuclear theorists. There is a dedicated conference series, NUINT, and meetings such as WIN and NUFACT now all have sessions on neutrino–nucleus interactions.

We face a challenge that is completely new to high-energy physics experiments: the reconstruction of the incoming energy from the final state requires a good description of the nuclear ground state, control of neutrino–nucleus interactions and, on top of all this, control of the final-state interactions of the hadrons when they cascade through the nucleus after the primary neutrino–nucleon interaction. Neutrino generators that fulfil all of these requirements can minimise the uncertainties in the energy reconstruction. They should therefore attract the same attention and support as the development of new equipment for long-baseline neutrino experiments, since their quality ultimately determines the precision of the extracted neutrino properties.