In 1991, the year that the CERN Council voted unanimously that the Large Hadron Collider (LHC) is “the right machine for the advance of the subject and for the future of CERN”, the Courier took a look at the challenges facing detectors in dealing with the LHC’s unprecedented collision rate. The same challenges faced the Superconducting Super Collider (SSC) in the US. Alas, while the LHC was approved three years later, the SSC never had the chance to put these ideas into practice.

The new generation of big proton–proton colliders now being planned in Europe and the US aims to open up the collision physics of the constituent quarks and gluons hidden deep inside the proton. Locked inside nuclear particles, quarks and gluons cannot be liberated as free particles, at least under current laboratory conditions. Studying them requires microscopes the size of the LHC foreseen for CERN’s 27-kilometre LEP tunnel and the 87-kilometre Superconducting Super Collider (SSC) planned in the US. But the researchers using these gigantic new microscopes must have good eyesight – they need the right detectors.

Seeing things this small needs collision energies of some 1 TeV (1000 GeV) per constituent quark/gluon, or at least 15 TeV viewed at the proton–proton level. Most of the time, the collisions would be ‘soft’, involving big pieces of proton, rather than quarks and gluons. To see enough ‘hard’ collisions, when the innermost proton constituents clash against each other, physicists need very high proton–proton collision rates.

These rates are measured by luminosity. (The luminosity of a two-beam collider is the number of particles per second in one beam multiplied by the number of particles per unit area of the other beam.) For the LHC, luminosities of up to a few times 10³⁴ cm⁻² s⁻¹ are needed.

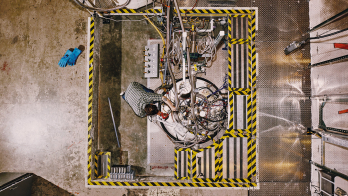

Quite apart from the challenge of delivering this number of high-energy protons, having such intense beams continually smashing through physics apparatus creates problems for detector designers.

As well as quickly wearing out detector components, these conditions imply new dimensions of data handling. Proton bunches would sweep past each other some 60 million times per second, each time producing about 20 interactions of one kind or another. Only one in a billion of these interactions would be of the hard kind which interests the physicists, and the instrumentation and data systems would have to filter out interesting physics fast enough to avoid being swamped by the subsequent tide of raw data.

It is as though a passenger in a train, watching haystacks flash past the window at high speed during a thunderstorm, had to locate a single needle hidden in one haystack, without stopping the train.
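The needle-in-a-haystack arithmetic can be made concrete with a short sketch. It uses only the round figures quoted above (60 million bunch crossings per second, about 20 interactions per crossing, one hard interaction in a billion); the variable names are illustrative and the results are order-of-magnitude estimates, not design values.

```python
# Round figures quoted in the text; names are illustrative only.
CROSSINGS_PER_SECOND = 60_000_000   # bunch crossings per second
INTERACTIONS_PER_CROSSING = 20      # interactions of any kind per crossing
HARD_FRACTION = 1e-9                # one interaction in a billion is "hard"

# Total interaction rate seen by the detector.
interactions_per_second = CROSSINGS_PER_SECOND * INTERACTIONS_PER_CROSSING

# Rate of the hard interactions that interest the physicists.
hard_per_second = interactions_per_second * HARD_FRACTION

# Time between successive bunch crossings, in nanoseconds.
ns_between_crossings = 1e9 / CROSSINGS_PER_SECOND

print(f"interactions per second:   {interactions_per_second:.2e}")
print(f"hard interactions per sec: {hard_per_second:.1f}")
print(f"time between crossings:    {ns_between_crossings:.1f} ns")
```

On these numbers, roughly one interesting interaction per second has to be picked out of more than a billion, with a new crossing arriving about every 17 nanoseconds.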

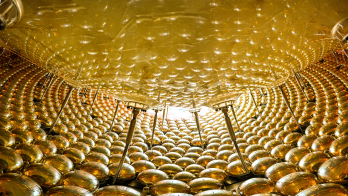

To look for these needles, physicists use detectors built like a series of boxes packed one inside the other, each box doing a special job before the particles pass through to the next. The innermost box is the tracker which takes a snapshot of the collision, tracing the path of the emerging particles.

Quark/gluon collisions, each expected to give a few hundred tracks, would be superimposed on many soft proton collisions, each producing about 25 tracks.
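A rough sketch of what this superposition means for the tracker is given below. The 20 interactions per crossing and 25 tracks per soft collision are the figures quoted in the text; the value of 300 tracks for a hard collision is an assumption standing in for "a few hundred".

```python
# Figures quoted in the text.
SOFT_INTERACTIONS_PER_CROSSING = 20
TRACKS_PER_SOFT_INTERACTION = 25

# Assumption: "a few hundred" tracks is taken as 300 for illustration.
TRACKS_PER_HARD_COLLISION = 300

# Tracks from soft collisions overlaid on a single bunch crossing.
soft_tracks = SOFT_INTERACTIONS_PER_CROSSING * TRACKS_PER_SOFT_INTERACTION

# Total tracks in a crossing that also contains one hard collision.
total_tracks = soft_tracks + TRACKS_PER_HARD_COLLISION

# Share of the tracks that belong to the interesting collision.
hard_share = TRACKS_PER_HARD_COLLISION / total_tracks

print(f"soft tracks per crossing: {soft_tracks}")
print(f"total tracks per crossing: {total_tracks}")
print(f"share from hard collision: {hard_share:.1%}")
```

Under these assumptions, the tracks of interest are outnumbered by background tracks in the same snapshot.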

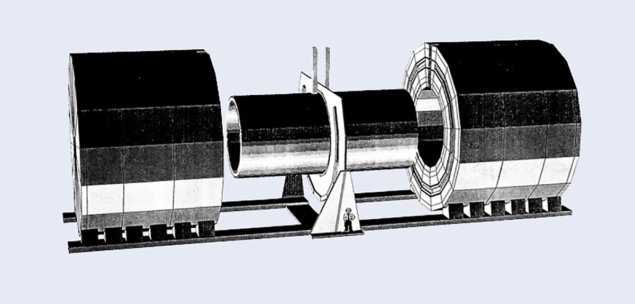

LHC proton collider. To give an idea of the scale, some people have suggested that the ‘standard man’ could be usefully replaced by a ‘standard dinosaur’.

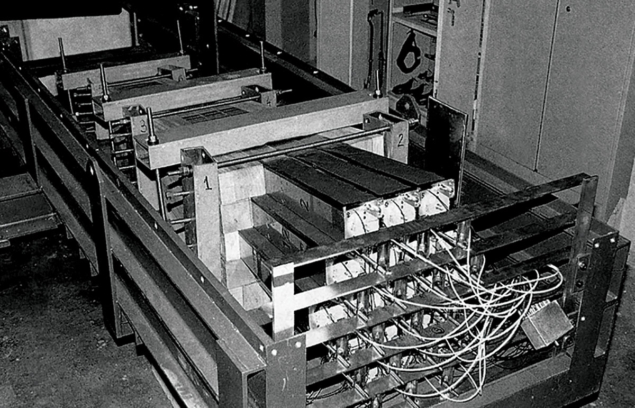

Traditional tracking, with a full ‘picture’ of emerging charged particle tracks bent by a magnetic field (to measure momentum), looks feasible at luminosities up to about 10³³ cm⁻² s⁻¹. Physics would certainly need higher rates, but this would blind the innermost tracker, and for these runs it would be best removed. However, a certain amount of tracking has to be retained, even at higher rates, to pick up the isolated electrons accompanying special processes. Promising tracking technologies include semiconductor microstrips and scintillating fibres.

Traditionally, the tracker is followed by the calorimeter, which measures the energy deposited by the emerging particles. As well as measuring the energies of special particles, like photons and electrons, the calorimeter has to be ‘watertight’. Any mismatch in energy flow between two sides of the detector (‘missing energy’) can then be attributed to invisible particles, like neutrinos, escaping the detector, and not to otherwise visible particles disappearing through cracks.
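The idea of an energy-flow mismatch can be illustrated with a minimal sketch. It assumes, beyond what the text states, that the balance is checked in the plane transverse to the beams and that each calorimeter cell reports a transverse energy and an azimuthal angle. The function name and the `(et, phi)` cell format are hypothetical.

```python
import math

def missing_transverse_energy(cells):
    """Return (magnitude, azimuth) of the transverse energy imbalance.

    `cells` is an iterable of (et, phi) pairs: transverse energy in GeV
    and azimuthal angle in radians for each calorimeter cell.
    The cell format is an assumption made for this illustration.
    """
    # Vector sum of the visible energy flow in the transverse plane.
    sum_x = sum(et * math.cos(phi) for et, phi in cells)
    sum_y = sum(et * math.sin(phi) for et, phi in cells)
    # The imbalance points opposite to the visible energy flow.
    miss_x, miss_y = -sum_x, -sum_y
    return math.hypot(miss_x, miss_y), math.atan2(miss_y, miss_x)

# Two back-to-back deposits that do not balance: 50 GeV against 30 GeV.
cells = [(50.0, 0.0), (30.0, math.pi)]
met, met_phi = missing_transverse_energy(cells)
print(f"missing ET = {met:.1f} GeV")
```

In a ‘watertight’ calorimeter, the 20 GeV imbalance in this toy example would be attributed to an invisible particle on the side of the weaker deposit; with cracks, the same number could simply reflect a visible particle that went unmeasured.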

The signals from hard quark/gluon interactions would necessarily be obscured by ‘pile-up’ from soft interactions. Overlap from different interactions can be minimized by having a fine-grained calorimeter, and some soft background can be allowed for. Promising general-purpose calorimeter technologies include liquid argon and scintillating fibre/lead matrices, while dedicated calorimeters for electromagnetic energy measurement could be based on special crystals or noble liquids.

Electrons and muons are very important for this kind of physics, and could be a vital part of special signatures indicative of new processes. Muons, with their ability to pass through thick sheets of absorber, remain an ‘easy’ option; however, requirements for precise momentum resolution could have a major impact on the design of these already very large detectors.

Electrons are much harder to isolate than muons, and would also tend to be masked by other signals. Accurate location with a fine-grained detector would help, but additional electron identification would still be needed. Such information could be given by correlating calorimeter measurements with upstream signals from the tracker or a dedicated track/preshower detector, or by independent electron identification (using a transition radiation detector).

Seeing anything at all depends on the initial level of event filtering by electronic triggers. These will have to select out one event in ten or even a hundred thousand within a microsecond. In addition, the information coming from different parts of the very large detectors will have to be synchronized, all this some 60 million times a second!
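The trigger figures can be turned into rough rates with a short sketch. It assumes that "one event in ten or even a hundred thousand" means one bunch crossing kept out of every 10,000 to 100,000, and that the one-microsecond decision time applies to each crossing; both readings are assumptions, and the names are illustrative.

```python
# Figures quoted in the text.
CROSSINGS_PER_SECOND = 60_000_000
DECISION_TIME_NS = 1_000            # one microsecond, in nanoseconds

# Assumption: selectivity is counted per bunch crossing.
KEEP_ONE_IN = (10_000, 100_000)

# Accepted crossings per second for each selectivity.
accepted_per_second = [CROSSINGS_PER_SECOND // n for n in KEEP_ONE_IN]

# Crossings that arrive while one trigger decision is still being made
# (integer arithmetic avoids floating-point rounding).
crossings_per_decision = CROSSINGS_PER_SECOND * DECISION_TIME_NS // 1_000_000_000

for n, rate in zip(KEEP_ONE_IN, accepted_per_second):
    print(f"keep 1 in {n:>7,}: {rate:,} accepted crossings per second")
print(f"crossings arriving per decision time: {crossings_per_decision}")
```

On this reading, dozens of new crossings arrive before a single decision is complete, so their data would have to be held somewhere while the trigger makes up its mind.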

For the SSC, ‘generic’ research and development work began in 1986, eventually overlapping with the R&D for specific detector subsystems. This overlaps in turn with development work for the two major proposed experiments (October, page 12). For the LHC at CERN, research and development work on detector components and techniques is pushing ahead on a wide front pending the appearance of initial proposals for complete detectors (October, page 25), while a magnet study group coordinates the designs for the big magnets at the heart of new detector schemes.

- This article was adapted from text in CERN Courier vol. 31, November 1991, pp2–7