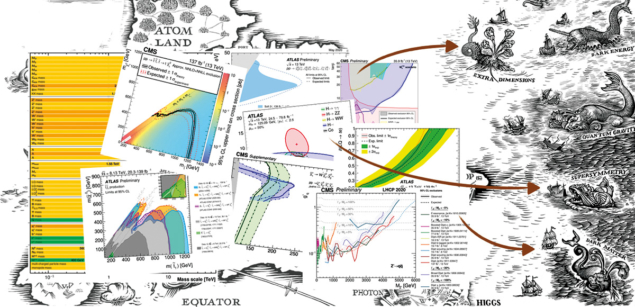

The ATLAS, CMS and LHCb collaborations perform precise measurements of Standard Model (SM) processes and direct searches for physics beyond the Standard Model (BSM) in a vast variety of channels. Despite the multitude of BSM scenarios tested this way by the experiments, these still constitute only a small subset of the possible theories and parameter combinations to which the experiments are sensitive. The (re)interpretation of LHC results, in order to fully understand their implications for new physics, has become a very active field, with close theory–experiment interaction and with new computational tools and related infrastructure being developed.

From 15 to 19 February, almost 300 theorists and experimental physicists gathered online for the week-long Reinterpretation workshop to discuss the latest developments in the field. The topics covered ranged from advances in public software packages for reinterpretation to the provision of detailed analysis information by the experiments, from phenomenological studies to global fits, and from long-term preservation to public data.

Open likelihoods

One of the leading questions throughout the workshop was that of public likelihoods. The statistical model of an experimental analysis provides its complete mathematical description; it is essential information for determining the compatibility of the observations with theoretical predictions. In his keynote talk “Open science needs open likelihoods”, Harrison Prosper (Florida State University) explained why it is in our scientific interest to make the publication of full likelihoods routine and straightforward. As presented by Eric Schanet (LMU Munich), the ATLAS collaboration has recently taken an important step in this direction by releasing full likelihoods in a JSON format, which provides background estimates, changes under systematic variations, and observed data counts at the same fidelity as used in the experiment. Matthew Feickert (University of Illinois) and colleagues gave a detailed tutorial on how to use these likelihoods with the pyhf Python package. Two public reinterpretation tools, MadAnalysis5, presented by Jack Araz (IPPP Durham), and SModelS, presented by Andre Lessa (UFABC Santo Andre), can already make use of pyhf and JSON likelihoods, and others are to follow. An alternative to plain-text JSON serialisation is to encode the experimental likelihood functions in deep neural networks, as discussed by Andrea Coccaro (INFN Genova), who presented the DNNLikelihood framework. Several further contributions from CMS, LHCb and theorists addressed how to present and use likelihood information, and this will certainly remain an active topic at future workshops.
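To make concrete what a serialised likelihood contains, here is a minimal sketch that reads a small workspace laid out like the pyhf JSON schema (channels holding samples with modifiers, plus observations and measurements) and evaluates the binned Poisson likelihood by hand. It is a toy under stated assumptions, not pyhf itself: the yields are invented, only the `normfactor` modifier (the signal strength, here called `mu`) is supported, and the constraint terms for systematic uncertainties that real workspaces carry are omitted. All function names are hypothetical.

```python
import json
import math

# Toy workspace following the layout of the pyhf JSON schema.
# The yields and observed counts are hypothetical, for illustration only.
WORKSPACE_JSON = """
{
  "channels": [
    {"name": "SR",
     "samples": [
       {"name": "signal", "data": [5.0, 10.0],
        "modifiers": [{"name": "mu", "type": "normfactor", "data": null}]},
       {"name": "background", "data": [50.0, 60.0], "modifiers": []}
     ]}
  ],
  "observations": [{"name": "SR", "data": [53.0, 65.0]}],
  "measurements": [{"name": "meas", "config": {"poi": "mu", "parameters": []}}],
  "version": "1.0.0"
}
"""


def expected_counts(channel, params):
    """Sum sample yields bin by bin, scaling each sample by its normfactors."""
    nbins = len(channel["samples"][0]["data"])
    totals = [0.0] * nbins
    for sample in channel["samples"]:
        scale = 1.0
        for mod in sample["modifiers"]:
            # Toy assumption: systematic modifiers (normsys, histosys, ...) are not handled.
            if mod["type"] != "normfactor":
                raise NotImplementedError(f"toy: modifier {mod['type']!r} not supported")
            scale *= params[mod["name"]]
        for i, yield_ in enumerate(sample["data"]):
            totals[i] += scale * yield_
    return totals


def poisson_nll(observed, expected):
    """-ln L for independent Poisson bins, including the constant ln(n!)."""
    return sum(lam - n * math.log(lam) + math.lgamma(n + 1)
               for n, lam in zip(observed, expected))


def workspace_nll(spec, params):
    """Total -ln L summed over all channels of the workspace."""
    obs = {o["name"]: o["data"] for o in spec["observations"]}
    return sum(poisson_nll(obs[ch["name"]], expected_counts(ch, params))
               for ch in spec["channels"])


def best_fit(spec, lo=0.0, hi=5.0, steps=5001):
    """Grid scan of the parameter of interest named in the measurement."""
    poi = spec["measurements"][0]["config"]["poi"]
    grid = [lo + (hi - lo) * k / (steps - 1) for k in range(steps)]
    return min(grid, key=lambda v: workspace_nll(spec, {poi: v}))


if __name__ == "__main__":
    spec = json.loads(WORKSPACE_JSON)
    mu_hat = best_fit(spec)
    # Likelihood-ratio test statistic for the background-only hypothesis mu = 0.
    q0 = 2 * (workspace_nll(spec, {"mu": 0.0}) - workspace_nll(spec, {"mu": mu_hat}))
    print(f"mu_hat = {mu_hat:.3f}, -2 ln lambda(0) = {q0:.3f}")
```

A grid scan stands in for a numerical minimiser to keep the sketch dependency-free. With pyhf installed, the same dictionary can be loaded with `pyhf.Workspace(spec)`, which also handles systematic modifiers such as `normsys`, `histosys` and `shapesys` together with their auxiliary constraint terms.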


A novelty at this Reinterpretation workshop was that the discussion was extended to experiences and best practices beyond the LHC, to see how experiments in other fields address the need for publicly released data and reusable results. This included presentations on dark-matter direct detection, the high-intensity frontier and neutrino-oscillation experiments. Supporting Prosper’s call for data reusability 40 years into the future, “for science 2061”, Eligio Lisi (INFN Bari) described the challenges of reinterpreting the 1998 Super-Kamiokande data, originally published within the then-sufficient two-flavour oscillation paradigm, in terms of contemporary three-neutrino descriptions and beyond. On the astrophysics side, the LIGO and Virgo collaborations actively pursue an open-science programme: Agata Trovato (APC Paris) presented the Gravitational Wave Open Science Center, giving details on the available data, their format and the tools to access them. An open-data policy also exists at the LHC, spearheaded by the CMS collaboration, and Edgar Carrera Jarrin (USF Quito) shared experiences from the first CMS open-data workshop.
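For orientation, the two-flavour picture summarises the data with just two parameters. In the standard vacuum approximation, quoted here only as an illustration (matter effects neglected), the muon-neutrino survival probability is commonly written as below, with the mass-squared difference in eV², the baseline L in km and the neutrino energy E in GeV; early atmospheric results were reported as allowed regions in the (sin²2θ, Δm²) plane.

$$
P(\nu_\mu \to \nu_\mu) \simeq 1 - \sin^2(2\theta)\,\sin^2\!\left(\frac{1.27\,\Delta m^2\,[\mathrm{eV}^2]\;L\,[\mathrm{km}]}{E\,[\mathrm{GeV}]}\right)
$$

A three-neutrino description instead involves three mixing angles, a CP-violating phase and two independent mass-squared differences, so contours drawn in the two-parameter plane do not map directly onto it; a reinterpretation needs information closer to the underlying event counts.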

The question of making research data findable, accessible, interoperable and reusable (“FAIR” for short) is a burning one throughout modern science. In a keynote talk, the head of the GO FAIR Foundation, Barend Mons, explained the FAIR Guiding Principles together with the technical and social aspects of FAIR data management and data reuse, using the example of COVID-19 disease modelling. There is much to be learned here for our field.

The wrap-up session revolved around how to implement the recommendations of the Reinterpretation workshop more systematically. An important aspect here is proper recognition, within the collaborations as well as the community at large, of the additional work this requires. More rigorous citation of HEPData entries by theorists may help in this regard. Moreover, a “Reinterpretation: Auxiliary Material Presentation” (RAMP) seminar series has been launched to give more visibility and explicit recognition to the efforts of preparing and providing extensive material for reinterpretation. The first RAMP meetings took place on 9 and 23 April.