Quantum gravity continues to confound theorists.

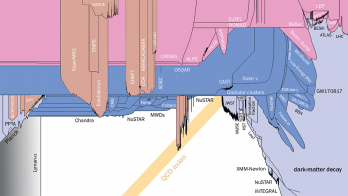

Image credit: T Nutma.

There is little doubt that, in spite of their overwhelming success in describing phenomena over a vast range of distances, general relativity (GR) and the Standard Model (SM) of particle physics are incomplete theories. Concerning the SM, the problem is often cast in terms of the remaining open issues in particle physics, such as its failure to account for the origin of the matter–antimatter asymmetry or the nature of dark matter. But the real problem with the SM is theoretical: it is not clear whether it makes sense at all as a theory beyond perturbation theory, and these doubts extend to the whole framework of quantum field theory (QFT), in which perturbation theory is the main tool to extract quantitative predictions. The occurrence of “ultraviolet” (UV) divergences in Feynman diagrams, and the need for an elaborate mathematical procedure called renormalisation to remove these infinities and make testable predictions order-by-order in perturbation theory, strongly point to the necessity of some other and more complete theory of elementary particles.

On the GR side, we are faced with a similar dilemma. Like the SM, GR works extremely well in its domain of applicability and has so far passed all experimental tests with flying colours, most recently and impressively with the direct detection of gravitational waves (see “General relativity at 100”). Nevertheless, the need for a theory beyond Einstein is plainly evident from the existence of space–time singularities such as those occurring inside black holes or at the moment of the Big Bang. Such singularities are an unavoidable consequence of Einstein’s equations, and the failure of GR to provide an answer calls into question the very conceptual foundations of the theory.

Unlike quantum theory, which is rooted in probability and uncertainty, GR is based on notions of smoothness and geometry and is therefore subject to classical determinism. Near a space–time singularity, however, the description of space–time as a continuum is expected to break down. Likewise, the assumption that elementary particles are point-like, a cornerstone of QFT and the reason for the occurrence of ultraviolet infinities in the SM, is expected to fail in such extreme circumstances. Applying conventional particle-physics wisdom to Einstein’s theory by quantising small fluctuations of the metric field (corresponding to gravitational waves) cannot help either, since it produces non-renormalisable infinities that undermine the predictive power of perturbatively quantised GR.

In the face of these problems, there is a wide consensus that the outstanding problems of both the SM and GR can only be overcome by a more complete and deeper theory: a theory of quantum gravity (QG) that possibly unifies gravity with the other fundamental interactions in nature. But how are we to approach this challenge?

Planck-scale physics

Unlike with quantum mechanics, whose development was driven by the need to explain observed phenomena such as the existence of spectral lines in atomic physics, nature gives us very few hints of where to look for QG effects. One main obstacle is the sheer smallness of the Planck length, of the order 10⁻³³ cm, which is the scale at which QG effects are expected to become visible (conversely, in terms of energy, the relevant scale is 10¹⁹ GeV, which is 15 orders of magnitude greater than the energy range accessible to the LHC). There is no hope of ever directly measuring genuine QG effects in the laboratory: with zillions of gravitons in even the weakest burst of gravitational waves, realising the gravitational analogue of the photoelectric effect will forever remain a dream.
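As a rough check of the scales quoted above, the Planck length and Planck energy can be computed from ħ, G and c. The sketch below is illustrative only: the function names are hypothetical, the constants are CODATA 2018 values, and the 13 TeV figure for the LHC collision energy is an assumption that does not come from the text.

```python
import math

# Physical constants in SI units (CODATA 2018 values).
HBAR = 1.054571817e-34           # reduced Planck constant, J s
G_NEWTON = 6.67430e-11           # Newton's constant, m^3 kg^-1 s^-2
C_LIGHT = 299792458.0            # speed of light, m/s
JOULE_PER_GEV = 1.602176634e-10  # one GeV expressed in joules

# Assumption for illustration: an LHC collision energy of 13 TeV.
LHC_ENERGY_GEV = 1.3e4


def planck_length_cm():
    """Planck length sqrt(hbar * G / c^3), converted from metres to cm."""
    return math.sqrt(HBAR * G_NEWTON / C_LIGHT**3) * 100.0


def planck_energy_gev():
    """Planck energy sqrt(hbar * c^5 / G), converted from joules to GeV."""
    return math.sqrt(HBAR * C_LIGHT**5 / G_NEWTON) / JOULE_PER_GEV


def orders_above_lhc():
    """Base-10 logarithm of the ratio of the Planck energy to the assumed LHC energy."""
    return math.log10(planck_energy_gev() / LHC_ENERGY_GEV)


if __name__ == "__main__":
    print(f"Planck length: {planck_length_cm():.2e} cm")
    print(f"Planck energy: {planck_energy_gev():.2e} GeV")
    print(f"Orders of magnitude above the LHC: {orders_above_lhc():.1f}")
```

With these inputs the Planck length comes out at roughly 1.6 × 10⁻³³ cm and the Planck energy at roughly 1.2 × 10¹⁹ GeV, about 15 orders of magnitude above the assumed LHC energy.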

One can nevertheless speculate that QG might manifest itself indirectly, for instance via measurable features in the cosmic microwave background, or cumulative effects originating from a more granular or “foamy” space–time. Alternatively, perhaps a framework will emerge that provides a compelling explanation for inflation, dark energy and the origin of the universe. Although the situation is not completely hopeless, available proposals typically do not allow one to unambiguously discriminate between very different approaches, for instance when rival schemes such as string theory and loop quantum gravity vie to explain features of the early universe. And even if evidence for new effects were found in, say, cosmic-ray physics, these might very well admit conventional explanations.

In the search for a consistent theory of QG, it therefore seems that we have no other choice but to try to emulate Einstein’s epochal feat of creating a new theory out of purely theoretical considerations.

Emulating Einstein

Yet, after more than 40 years of unprecedented collective intellectual effort, different points of view have given rise to a growing diversification of approaches to QG – with no convergence in sight. It seems that theoretical physics has arrived at a crossroads, with nature remaining tight-lipped about what comes after Einstein and the SM. There is currently no evidence whatsoever for any of the numerous QG schemes that have been proposed – no signs of low-energy supersymmetry, large extra dimensions or “stringy” excitations have been seen at the LHC so far. The situation is no better for approaches that do not even attempt to make predictions that could be tested at the LHC.

Existing approaches to QG fall roughly into two categories, reflecting a basic schism that has developed in the community. One is based on the assumption that Einstein’s theory can stand on its own feet, even when confronted with quantum mechanics. This would imply that QG is nothing more than the non-perturbative quantisation of Einstein’s theory and that GR, suitably treated and eventually complemented by the SM, correctly describes the physical degrees of freedom also at the very smallest distances. The earliest incarnation of this approach goes back to the pioneering work of John Wheeler and Bryce DeWitt in the early 1960s, who derived a GR analogue of the Schrödinger equation in which the “wave function of the universe” encodes the entire information about the universe as a quantum system. Alas, the non-renormalisable infinities resurface in a different guise: the Wheeler–DeWitt equation is so ill-defined mathematically that no one until now has been able to make sense of it beyond mere heuristics. More recent variants of this approach in the framework of loop quantum gravity (LQG), spin foams and group field theory replace the space–time metric by new variables (Ashtekar variables, or holonomies and fluxes) in a renewed attempt to overcome the mathematical difficulties.

The opposite attitude is that GR is only an effective low-energy theory arising from a more fundamental Planck-scale theory, whose basic degrees of freedom are very different from GR or quantum field theory. In this view, GR and space–time itself are assumed to be emergent, much like macroscopic physics emerges from the quantum world of atoms and molecules. The perceived need to replace Einstein’s theory by some other and more fundamental theory, having led to the development of supersymmetry and supergravity, is the basic hypothesis underlying superstring theory (see “The many lives of supergravity”). Superstring theory is the leading contender for a perturbatively finite theory of QG, and widely considered the most promising possible pathway from QG to SM physics. This approach has spawned a hugely varied set of activities and produced many important ideas. Most notable among these, the AdS/CFT correspondence posits that the physics that takes place in some volume can be fully encoded in the surface bounding that volume, as for a hologram, and consequently that QG in the bulk should be equivalent to a pure quantum field theory on its boundary.

Apart from numerous technical and conceptual issues, there remain major questions for all approaches to QG. For LQG-like or “canonical” approaches, the main unsolved problems concern the emergence of classical space–time and the Einstein field equations in the semiclassical limit, and their inability to recover standard QFT results such as anomalies. On the other side, a main shortcoming is the “background dependence” of the quantisation procedure, for which both supergravity and string theory have to rely on perturbative expansions about some given space–time background geometry. In fact, in its presently known form, string theory cannot even be formulated without reference to a specific space–time background.

These fundamentally different viewpoints also offer different perspectives on how to address the non-renormalisability of Einstein’s theory, and consequently on the need (or not) for unification. Supergravity and superstring theory try to eliminate the infinities of the perturbatively quantised theory, in particular by including fermionic matter in Einstein’s theory, thus providing a raison d’être for the existence of matter in the world. They therefore automatically arrive at some kind of unification of gravity, space–time and matter. By contrast, canonical approaches attribute the ultraviolet infinities to basic deficiencies of the perturbative treatment. However, to reconcile this view with semiclassical gravity, they will have to invoke some mechanism – a version of Weinberg’s asymptotic safety – to save the theory from the abyss of non-renormalisability.

Conceptual challenges

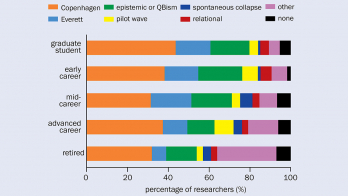

Beyond the mathematical difficulties of formulating QG, there is a host of issues of a more conceptual nature that are shared by all approaches. Perhaps the most important concerns the very ground rules of quantum mechanics: even if we could properly define and solve the Wheeler–DeWitt equation, how are we to interpret the resulting wave function of the universe? After all, the latter purports to describe the universe in its entirety, but in the absence of outside classical observers, the Copenhagen interpretation of quantum mechanics clearly becomes untenable. On a slightly less grand scale, there are also unresolved issues related to the possible loss of information in connection with the Hawking evaporation of black holes.

A further question that any theory of QG must eventually answer concerns the texture of space–time at the Planck scale: do there exist “space–time atoms” or, more specifically, web-like structures like spin networks and spin foams, as claimed by LQG-like approaches (see diagram)? Or does the space–time continuum get dissolved into a gas of strings and branes, as suggested by some variants of string theory, or emerge from holographic entanglement, as advocated by AdS/CFT aficionados? There is certainly no lack of enticing ideas, but without a firm guiding principle and the prospect of making a falsifiable prediction, such speculations may well end up in the nirvana of undecidable propositions and untestable expectations.

Why then consider unification? Perhaps the strongest argument in favour of unification is that the underlying principle of symmetry has so far guided the development of modern physics from Maxwell’s theory to GR all the way to Yang–Mills theories and the SM (see diagram). It is therefore reasonable to suppose that unification and symmetry may also point the way to a consistent theory of QG. This point of view is reinforced by the fact that the SM, although only a partially unified theory, does already afford glimpses of trans-Planckian physics, independently of whether new physics shows up at the LHC or not. This is because the requirements of renormalisability and vanishing gauge anomalies put very strong constraints on the particle content of the SM, which are indeed in perfect agreement with what we see in detectors. There would be no more convincing vindication of a theory of QG than its ability to predict the matter content of the world (see panel below).

In search of SUSY

Among the promising ideas that have emerged over the past decades, arguably the most beautiful and far reaching is supersymmetry. It represents a new type of symmetry that relates bosons and fermions, thus unifying forces (mediated by vector bosons) with matter (quarks and leptons), and which endows space–time with extra fermionic dimensions. Supersymmetry is very natural from the point of view of cancelling divergences because bosons and fermions generally contribute with opposite signs to loop diagrams. This aspect means that low-energy (N = 1) supersymmetry can stabilise the electroweak scale with regard to the Planck scale, thereby alleviating the so-called hierarchy problem via the cancellation of quadratic divergences. These models predict the existence of a mirror world of superpartners that differ from the SM particles only by their opposite statistics (and their mass), but otherwise have identical internal quantum numbers.

To the great disappointment of many, experimental searches at the LHC so far have found no evidence for the superpartners predicted by N = 1 supersymmetry. However, there is no reason to give up on the idea of supersymmetry as such, since the refutation of low-energy supersymmetry would only mean that the most simple-minded way of implementing this idea does not work. Indeed, the initial excitement about supersymmetry in the 1970s had nothing to do with the hierarchy problem, but rather because it offered a way to circumvent the so-called Coleman–Mandula no-go theorem – a beautiful possibility that is precisely not realised by the models currently being tested at the LHC.

In fact, the reduplication of internal quantum numbers predicted by N = 1 supersymmetry is avoided in theories with extended (N > 1) supersymmetry. Among all supersymmetric theories, maximal N = 8 supergravity stands out as the most symmetric. Its status with regard to perturbative finiteness is still unclear, although recent work has revealed amazing and unexpected cancellations. However, there is one very strange agreement between this theory and observation, first emphasised by Gell-Mann: the number of spin-1/2 fermions remaining after complete breaking of supersymmetry is 48 = 3 × 16, equal to the number of quarks and leptons (including right-handed neutrinos) in three generations (see “The many lives of supergravity”). To go beyond the partial matching of quantum numbers achieved so far will, however, require some completely new insights, especially concerning the emergence of chiral gauge interactions.
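The figure of 16 fermions per generation on the SM side can be checked with a simple tally. The sketch below is illustrative only: it counts two-component (Weyl) fermion states in one generation, with each colour counted separately and a right-handed neutrino included, and the dictionary labels are hypothetical names chosen for readability.

```python
# Number of spin-1/2 (Weyl) states per SM generation, counting each colour
# and each weak-isospin component separately. The labels are illustrative.
N_COLOURS = 3
N_GENERATIONS = 3

states_per_generation = {
    "left-handed quark doublet": N_COLOURS * 2,  # (u, d) in three colours
    "right-handed up quark": N_COLOURS,
    "right-handed down quark": N_COLOURS,
    "left-handed lepton doublet": 2,             # (neutrino, charged lepton)
    "right-handed charged lepton": 1,
    "right-handed neutrino": 1,                  # not part of the minimal SM
}

per_generation = sum(states_per_generation.values())
total = N_GENERATIONS * per_generation

if __name__ == "__main__":
    print(f"Fermions per generation: {per_generation}")
    print(f"Fermions in {N_GENERATIONS} generations: {total}")
```

The tally gives 16 states per generation and 48 in total, the number quoted above. It says nothing about how the corresponding 48 states arise in N = 8 supergravity.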

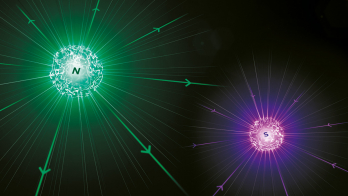

Then again, perhaps supersymmetry is not the end of the story. There is plenty of evidence that another type of symmetry may be equally important, namely duality symmetry. The first example of such a symmetry, electromagnetic duality, was discovered by Dirac in 1931. He realised that Maxwell’s equations in vacuum are invariant under rotations of the electric and magnetic fields into one another – an insight that led him to predict the existence of magnetic monopoles. While magnetic monopoles have not been seen, duality symmetries have turned out to be ubiquitous in supergravity and string theory, and they also reveal a fascinating and unsuspected link with the so-called exceptional Lie groups.
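The duality rotation mentioned above can be written out explicitly. The following is a minimal sketch in units with c = 1 and no charges or currents; the rotation angle θ and the primed fields are notation introduced here for illustration.

```latex
% Vacuum Maxwell equations (c = 1, no sources)
\nabla \cdot \mathbf{E} = 0, \qquad
\nabla \cdot \mathbf{B} = 0, \qquad
\nabla \times \mathbf{E} = -\frac{\partial \mathbf{B}}{\partial t}, \qquad
\nabla \times \mathbf{B} = \frac{\partial \mathbf{E}}{\partial t}.

% Duality rotation by a constant angle \theta
\mathbf{E}' = \mathbf{E}\cos\theta + \mathbf{B}\sin\theta, \qquad
\mathbf{B}' = -\mathbf{E}\sin\theta + \mathbf{B}\cos\theta.

% Compact form: with \mathbf{F} = \mathbf{E} + i\mathbf{B} the equations read
\nabla \cdot \mathbf{F} = 0, \qquad
\nabla \times \mathbf{F} = i\,\frac{\partial \mathbf{F}}{\partial t},
% and the rotation is a constant phase, which leaves both equations unchanged:
\mathbf{F}' = e^{-i\theta}\,\mathbf{F}.
```

Because the equations are linear in the combined field and the phase is constant, the primed fields satisfy the same equations as the original ones. Once electric charges are included, the symmetry survives only if magnetic charges are introduced as well, which is the link to monopoles.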

More recently, hints of an enormous symmetry enhancement have also appeared in a completely different place, namely the study of cosmological solutions of Einstein’s equations near a space-like singularity. This mathematical analysis has revealed tantalising evidence of a truly exceptional infinite-dimensional duality symmetry, which goes by the name of E₁₀, and which “opens up” as one gets close to the cosmological (Big Bang) singularity (see image at top). Could it be that the near-singularity limit can tell us about the underlying symmetries of QG in much the same way as the high-energy limit of gauge theories informs us about the symmetries of the SM? One can validly argue that this huge and monstrously complex symmetry knows everything about maximal supersymmetry and the finite-dimensional dualities identified so far. Equally important, and unlike conventional supersymmetry, E₁₀ may continue to make sense in the Planck regime where conventional notions of space and time are expected to break down. For this reason, duality symmetry could even supersede supersymmetry as a unifying principle.

Outstanding questions

Our summary, then, is very simple: all of the important questions in QG remain wide open, despite a great deal of effort and numerous promising ideas. In the light of this conclusion, the LHC will continue to play a crucial role in advancing our understanding of how everything fits together, no matter what the final outcome of the experiments will be. This is especially true if nature chooses not to abide by current theoretical preferences and expectations.

Over the past decades, we have learnt that the SM is a most economical and tightly knit structure, and there is now mounting evidence that minor modifications may suffice for it to survive to the highest energies. To look for such subtle deviations will therefore be a main task for the LHC in the years ahead. If our view of the Planck scale remains unobstructed by intermediate scales, the popular model-builders’ strategy of adding ever more unseen particles and couplings may come to an end. In that case, the challenge of explaining the structure of the low-energy world from a Planck-scale theory of quantum gravity looms larger than ever.

Einstein on unification

It is well known that Albert Einstein spent much of the latter part of his life vainly searching for unification, although disregarding the nuclear forces and certainly with no intention of reconciling quantum mechanics and GR. Already in 1929, he published a paper on the unified theory (pictured above right). In this paper, he states with wonderful and characteristic lucidity what the criteria should be of a “good” unified theory: to describe as far as possible all phenomena and their inherent links, and to do so on the basis of a minimal number of assumptions and logically independent basic concepts. The second of these goals (also known as the principle of Occam’s razor) refers to “logical unity”, and Einstein goes on to say: “Roughly but truthfully, one might say: we not only want to understand how nature works, but we are also after the perhaps utopian and presumptuous goal of understanding why nature is the way it is and not otherwise.”