Chris Jones takes a look back at the heyday of the computer mainframe through a selection of “memory bytes”.

In June 1996 computing staff at CERN turned off the IBM 3090 for the last time, thus marking the end of an era that had lasted 40 years. In May 1956 CERN had signed the purchasing contract for its first mainframe computer – a Ferranti Mercury with a clock cycle 200,000 times longer than that of a modern PC. Now the age of the mainframe is gone, replaced by “scalable solutions” based on Unix “boxes” and PCs, and CERN and its collaborating institutes are in the process of installing several tens of thousands of PCs to help meet the computing requirements of the Large Hadron Collider.

The Mercury was a first-generation vacuum-tube (valve) machine with a 60-microsecond clock cycle. It took five cycles – 300 microseconds – to multiply 40-bit words, and because it had no hardware division, division had to be programmed in software. The machine took two years to build and arrived at CERN in 1958, a year later than originally foreseen. Users could program it from the end of 1958 with a language called Autocode. Input and output (I/O) was by paper tape, although magnetic tape units were added in 1962. Indeed, the I/O proved something of a limitation, for example when the Mercury was used to analyse the paper tape produced by the instruments that scanned and measured bubble-chamber film: the fast and powerful central processing unit (CPU) was held up by the sluggish I/O. By 1959 it was already clear that a more powerful system was needed to deal with the streams of data coming from the experiments at CERN.

The 1960s arrived at the computing centre in the form of an IBM 709 in January 1961. Although it was still based on valves, it could be programmed in FORTRAN, read instructions punched on cards, and read and write magnetic tape. Its CPU was four to five times faster than that of the Mercury, but it came with a price tag of 10 million Swiss francs (in 1960 prices!). Only two years later it was replaced by an IBM 7090, a transistorized version of the same machine with a 2.18-microsecond clock cycle. This marked the end for the valve machines. After a period in which it was dedicated to a single experiment at CERN (the Missing Mass Spectrometer), the Mercury was given to the Academy of Mining and Metallurgy in Krakow. With the 7090 the physicists could really take advantage of all the developments that had begun on the 709, such as on-line connection to devices including the flying-spot digitizers used to measure film from bubble and spark chambers. More than 300,000 frames of spark-chamber film were automatically scanned and measured in record time with the 7090. This period also saw the first on-line connection to filmless detectors, which recorded their data on magnetic tape.

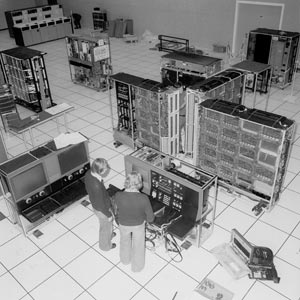

In 1965 the first CDC machine arrived at CERN: the 6600, designed by computer pioneer Seymour Cray, with a CPU clock cycle of 100 ns and 10 times the processing power of the IBM 7090. With serial number 3, it was a pre-production machine. It had disks more than 1 m in diameter, which could hold 500 million bits (64 megabytes) and later made neat coffee tables, as well as tape units and a high-speed card reader. However, as Paolo Zanella, who was division leader from 1976 until 1988, recalled, “The introduction of such a complex system was by no means trivial and CERN experienced one of the most painful periods in its computing history. The coupling of unstable hardware to shaky software resulted in a long traumatic effort to offer a reliable service.” The 6600 did eventually realise its potential, but only after less powerful machines had been brought in to cope with the users' growing demands. Then in 1972 it was joined by a still more powerful sibling, the CDC 7600. This was the most powerful computer of the time and five times faster than the 6600, but once again it suffered similar painful “teething problems”.

With a speed of just over 10 Mips (millions of instructions per second) and superb floating-point performance, the 7600 was, for its time, a veritable “Ferrari” of computing. But it was a Ferrari with a very difficult running-in period. The system software was again late and inadequate. In the first months the machine had a bad ground-loop problem that caused intermittent faults and eventually required all modules to be fitted with sheathed rubber bands. It was a magnificent engine for its time, but its reliability and tape handling fell short of the levels needed, in particular by the electronic experiments. Its superior floating-point capabilities were valuable for processing data from bubble-chamber experiments, with their relatively low data rates, but for the fast electronic experiments the “log jam” at the tape drives was a major problem.

So a second revolution occurred with the reintroduction of an IBM system, the 370/168, in 1976, which was able to meet a wider range of users’ requirements. Not only did this machine bring dependable modern tape drives, it also demonstrated that computer hardware could work reliably and it ushered in the heyday of the mainframe, with its robotic mass storage system and a laser printer operating at 19,000 lines per minute. With a CPU cycle of 80 ns, 4 megabytes (later 5) of semiconductor memory and a high-speed multiply unit, it became the “CERN unit” of physics data-processing power, corresponding to 3-4 Mips. Moreover, the advent of the laser printer, with its ability to print bitmaps rather than simple mono-spaced characters, heralded the beginning of scientific text processing and the end for the plotters with their coloured pens (to say nothing of typewriters).

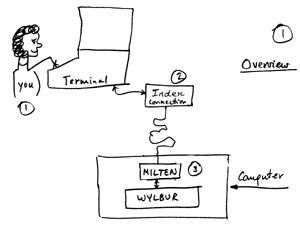

The IBM also brought with it the MVS (Multiple Virtual Storage) operating system, with its pedantic Job Control Language. It also gave CERN the opportunity to introduce WYLBUR, the well-loved, cleverly designed and friendly time-sharing system developed at SLAC, together with its beautifully handwritten and illustrated manual by John Ehrman. WYLBUR was a masterpiece of design, achieving miracles by sharing little computing power (by today's standards) among many simultaneous users. It won friends with its accommodating character, and it began the phasing out of punched-card machinery as computer terminals were introduced across the lab. It was also well interfaced with the IBM Mass Store, a unique file storage device, which made file handling and the processing of physics data samples very convenient. At its peak WYLBUR served around 1200 users per week.

The IBM 370/168 was the starting point for the IBM-based services in the computer centre and was followed by a series of more powerful machines: the 3032, the 3081, several 3090s and finally the ES/9000. In addition, a sister line of compatible machines from Siemens/Fujitsu was introduced, and together the two lines provided a single system in a manner transparent to the users. This service carried the bulk of the computer users, more than 6000 per week, and most of the data handling right up to the end of its life in 1996. At its peak around 1995 the IBM service provided central processing power of around a quarter of that of a top PC today, but its data-processing capacity was outstanding.

During this period CERN’s project for the Large Electron Positron (LEP) collider brought its own challenges, including a planning review in 1983 of the computing requirements for the LEP era. Attractive alternatives to the mainframe began to appear on the horizon, presenting computing services with some difficult choices. The DEC VAX machines, used by many physics groups and subsequently introduced as a successful central facility, were well liked for their excellent VMS operating system. On another scale, the jump in functionality offered by the new personal workstations, for example from Apollo, such as a fully bit-mapped screen and a “whole half a megabyte of memory” for a single user, was an obvious major attraction for serious developers of computer code, albeit at a cost that was not yet within the reach of many. It is perhaps worth recalling that in 1983 the PC used the DOS operating system and a character-based screen, while the Macintosh had not yet been announced, so bit-mapped screens were a major step forward. (To put that in context, another recommendation of the same planning review was that CERN should install a local-area network, and that Ethernet was the best candidate for this.)

The future clearly held exciting times, but some pragmatic decisions about finances, functionality, capacity and tape-handling capacity had to be made. It was agreed that for the LEP era the IBM-based services would move to the truly interactive VM/CMS operating system, as used at SLAC. (WYLBUR was really a clever editor that submitted jobs to batch processing.) This led to a most important development, the HEPVM collaboration. It was possible, and indeed desirable, to modify the VM/CMS operating system to suit the needs of the user community. All the high-energy physics (HEP) sites running VM/CMS were setting out to do exactly this, as they had done with many previous operating systems. To some extent each site started off as if it were its sovereign right to do this better than the others. To defend the interests of the itinerant physicist, in 1983 Norman McCubbin from the Rutherford Appleton Laboratory made a radical but irresistible proposal: “don’t do it better, do it the same!”

The HEPVM collaboration comprised most of the sites that ran VM/CMS as an operating system and had LEP physicists as clients. These ranged from large dedicated sites such as SLAC, CERN and IN2P3 to university sites where the physicists were far from being the only clients. It was of course impossible to impose anything on the diverse managements involved, so it was a matter of discussion, explanation and working through the issues. Two important products resulted from this collaboration. A HEPVM tape, containing all the code necessary to produce a unified HEP environment, was distributed to more than 30 sites, and the “concept of collaboration between sites” was established as a normal way to proceed. The subsequent offspring, HEPiX and HEPNT, have continued the tradition of collaboration, and it goes without saying that such collaboration will have to reach a higher level still to make Grid computing successful.

The era of the supercomputer

The 1980s also saw the advent of the supercomputer. The CRAY X-MP, which arrived at CERN in January 1988, was the logical successor at CERN to Seymour Cray’s CDC 7600, and a triumph of price negotiation. The combined scalar performance of its four processors was about a quarter of that of the largest IBM installed at CERN, but it had strong vector floating-point performance. Its colourful presence settled the question of whether the physics codes could really profit from vector capabilities, and probably the greatest benefit to CERN from the CRAY went to the engineers, whose applications, for example in finite-element analysis and accelerator design, excelled on this machine. The decision was also taken to work with CRAY to pioneer Unix as the operating system, and this work was no doubt useful to later generations of machines running Unix at CERN.

Throughout most of the mainframe period the power delivered to users doubled approximately every 3.5 years; the CDC 7600 lasted an astonishing 12 years. The arrival of the complete processor on a CMOS chip, following Moore’s law of doubling speed every 18 months, was an irresistible force that signalled the eventual replacement of mainframe systems, although a number of other issues had to be solved first, notably the provision of reliable tape-handling facilities. Thus the heyday of the mainframe came to its inevitable end.
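To make the contrast between the two doubling times concrete, the short Python sketch below compares the cumulative growth each rate implies over the same span. The 12-year span is only an illustrative choice (the service life of the CDC 7600 quoted above). The function name `growth_factor` is hypothetical, and the doubling times are the approximate figures given in the text.

```python
# Compare cumulative performance growth for two doubling times,
# using the approximate figures quoted in the text: ~3.5 years for
# mainframe-era user power versus ~18 months (1.5 years) for CMOS chips.

def growth_factor(years: float, doubling_time: float) -> float:
    """Return the factor by which capacity grows over `years`
    if it doubles every `doubling_time` years (hypothetical helper)."""
    return 2 ** (years / doubling_time)


# Illustrative span: the 12-year service life of the CDC 7600.
span = 12.0
mainframe = growth_factor(span, 3.5)
cmos = growth_factor(span, 1.5)

print(f"Mainframe trend over {span:.0f} years: x{mainframe:.1f}")
print(f"CMOS trend over {span:.0f} years: x{cmos:.0f}")
```

Over that span the mainframe trend gives roughly an elevenfold increase (about 10.8), while an 18-month doubling time gives a factor of 256. This shows why commodity processors eventually overtook the mainframes once the remaining issues, such as tape handling, were solved.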

One very positive feature of the mainframe era at CERN was the joint project teams with the major manufacturers, in particular IBM and DEC. The presence on site of around 20 engineers from such a company led to extremely good service, not only from the local staff but also through direct contacts with the development teams in America. It was not unknown for a critical bug discovered in the evening at CERN to be fixed overnight by the development team in America and installed in the CERN service the next morning, a sharp contrast to the service available in these days of commodity computing. For their part, the manufacturers saw the physicists’ use of their computers as pushing the limits of what was possible and pointing the way to the needs of other, more conventional customers several years later. Hence their willingness to install completely new products, sometimes straight from their research laboratories and often free of charge, as a way of getting them used, appraised and debugged. The physicists’ requirements made their way back into products and into the operating systems. This was one particular and successful way for particle physics to transfer its technology and expertise to the world at large. In addition, the joint projects provided a framework for excellent pricing, allowing particle physics to obtain much more computer equipment than it could normally have paid for.

Further reading

This article has been a personal look at some aspects of mainframe computing at CERN and, in the space available, could provide no more than a few snapshots. It has benefited from details in the more in-depth look at the first 30 years of computing at CERN, written by Paolo Zanella for the CERN Computing Newsletter in 1990 and available on the Web in three parts at: http://cnlart.web.cern.ch/cnlart/2001/002/cern-computing; http://cnlart.web.cern.ch/cnlart/2001/003/comp30; http://cnlart.web.cern.ch/cnlart/2001/003/comp30-last.