Work in LS1 touched almost all of the sub-detectors and online systems.

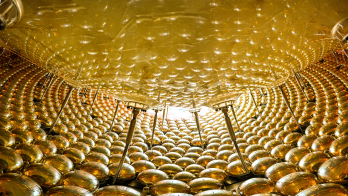

Image credit: Jeremi Niedziela.

It is nearly two years since the beams in the LHC were switched off and Long Shutdown 1 (LS1) began. Since then, a myriad of scientists and engineers have been repairing and consolidating the accelerator and the experiments for running at the unprecedented energy of 13 TeV (or 6.5 TeV/beam) – almost twice that of 2012.

In terms of installation work, ALICE is now complete. The remaining five super modules of the transition radiation detector (TRD), which were missing in Run 1, have been produced and installed. At the same time, the low-voltage distribution system for the TRD was re-worked to eliminate intermittent overheating problems that were experienced during the previous operational phase. On the read-out side, the data transmission over the optical links was upgraded to double the throughput to 4 GB/s. The TRD pre-trigger system used in Run 1 – a separate, minimum-bias trigger derived from the ALICE veto (V0) and start-counter (T0) detectors – was replaced by a new, ultrafast (425 ns) level-0 trigger featuring a complete veto and “busy” logic within the ALICE central trigger processor (CTP). This implementation required the relocation of racks hosting the V0 and T0 front-end cards to reduce cable delays to the CTP, together with optimization of the V0 front-end firmware for faster generation of time hits in minimum-bias triggers.

The ALICE electromagnetic calorimeter system was augmented with the installation of eight (six full-size and two one-third-size) super modules of the brand new dijet calorimeter (DCal). This now sits back-to-back with the existing electromagnetic calorimeter (EMCal), and brings the total azimuthal calorimeter coverage to 174° – that is, 107° (EMCal) plus 67° (DCal). One module of the photon spectrometer calorimeter (PHOS) was added to the pre-existing three modules and equipped with one charged-particle veto (CPV) detector module. The CPV is based on multiwire proportional chambers with pad read-out, and is designed to suppress the detection of charged hadrons in the PHOS calorimeter.

The overall PHOS/DCal set-up is located in the bottom part of the ALICE detector, and is now held in place by a completely new support structure. During LS1, the read-out electronics of the three calorimeters was fully upgraded from serial to parallel links, to allow operation at a 48 kHz lead–lead interaction rate with a minimum-bias trigger. The PHOS level-0 and level-1 trigger electronics was also upgraded, the latter being interfaced with the neighbouring DCal modules. This will allow the DCal/PHOS system to be used as a single calorimeter able to produce both shower and jet triggers from its full acceptance.

The gas mixture of the ALICE time-projection chamber (TPC) was changed from Ne(90):CO₂(10) to Ar(90):CO₂(10), to allow for a more stable response to the high particle fluxes generated during proton–lead and lead–lead running without significant degradation of momentum resolution at the lowest transverse momenta. The read-out electronics for the TPC chambers was fully redesigned, doubling the data lines and introducing more field-programmable gate-array (FPGA) capacity for faster processing and online noise removal. One of the 18 TPC sectors (on one side) is already instrumented with a pre-production series of the new read-out cards, to allow for commissioning before operation with the first proton beams in Run 2. The remaining boards are being produced and will be installed on the TPC during the first LHC Technical Stop (TS1). The increased read-out speed will be exploited fully during the four weeks of lead collisions foreseen for mid-November 2015. For lead running, ALICE will operate mainly with minimum-bias triggers at a collision rate of 8 kHz or higher, which will produce a track load in the TPC equivalent to operation at 700 kHz in proton running.
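To put the last comparison in perspective, the two quoted rates can be divided to estimate how many proton collisions load the TPC as much as one minimum-bias lead collision. The short Python sketch below does only that arithmetic; the function and variable names are illustrative, and it takes the 8 kHz figure at face value even though the text says "or higher".

```python
# Rates quoted in the text (kHz).
PBPB_RATE_KHZ = 8.0           # lead-lead minimum-bias collision rate
PP_EQUIVALENT_RATE_KHZ = 700.0  # proton rate giving the same TPC track load


def pp_collisions_per_pbpb(pbpb_rate_khz: float, pp_rate_khz: float) -> float:
    """Number of proton collisions with the same track load as one lead collision.

    Assumes the track load scales linearly with the collision rate,
    which is a simplification made for this illustration.
    """
    return pp_rate_khz / pbpb_rate_khz


ratio = pp_collisions_per_pbpb(PBPB_RATE_KHZ, PP_EQUIVALENT_RATE_KHZ)
print(f"One lead-lead collision ~ {ratio:.1f} proton collisions in TPC track load")
```

Under that linear-scaling assumption, an average minimum-bias lead collision corresponds to roughly 90 proton collisions in terms of TPC occupancy.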

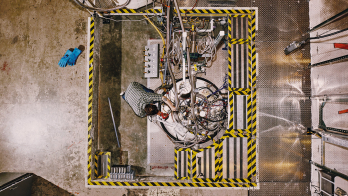

Image credit: Federico Ronchetti.

LS1 has also seen the design and installation of a new subsystem – the ALICE diffractive (AD) detector. This consists of two double layers of scintillation counters placed far from the interaction region on both sides, one in the ALICE cavern (at z = 16 m) and one in the LHC tunnel (at z = –19 m). The AD photomultiplier tubes are all accessible from the ALICE cavern, and the collected light is transported via clear optical fibres.

The ALICE muon chambers (MCH) underwent a major hardware consolidation of the low-voltage system in which the bus bars were fully re-soldered to minimize the effects of spurious chamber occupancies. The muon trigger (MTR) gas-distribution system was switched to closed-loop operation, and the gas inlet and outlet “beaks” were replaced with flexible material to avoid cracking from mechanical stress. One of the MTR resistive-plate chambers was instrumented with a pre-production front-end card being developed for the upgrade programme in LS2.

The increased read-out rates of the TPC and TRD have been matched by a complete upgrade (replacement) of both the data-acquisition (DAQ) and high-level trigger (HLT) computer clusters. In addition, the DAQ and HLT read-out/receiver cards have been redesigned, and now feature higher-density parallel optical connectivity on a PCIe-bus interface and a common FPGA design. The ALICE CTP board was also fully redesigned to double the number of trigger classes (logic combinations of primary inputs from trigger detectors) from 50 to 100, and to handle the new, faster level-0 trigger architecture developed to increase the efficiency of the TRD minimum-bias inspection.

Regarding data-taking operations, a full optimization of the DAQ and HLT sequences was performed with the aim of maximizing the running efficiency. All of the detector-initialization procedures were analysed to identify and eliminate bottlenecks, to speed up the start- and end-of-run phases. In addition, an in-run recovery protocol was implemented on both the DAQ/HLT/CTP and the detector sides to allow, in case of hiccups, on-the-fly front-end resets and reconfiguration without the need to stop the ongoing run. The ALICE HLT software framework was in turn modified to discard any possible incomplete events originating during online detector recovery. At the detector level, the leakage of “busy time” between the central barrel and muon-arm read-out detectors has been minimized by implementing multievent buffers on the shared trigger detectors. In addition, the central barrel and the muon-arm triggers can now be paused independently to allow for the execution of the in-run recovery.

Towards routine running

The ALICE control room was renovated completely during LS1, with the removal of the internal walls to create an ergonomic open space with 29 universal workstations. Desks in the front rows face 11 extra-large-format LED screens displaying the LHC and ALICE controls and status. They are reserved for the shift crew and the run-co-ordination team. Four concentric lateral rows of desks are reserved for the work of detector experts. The new ALICE Run Control Centre also includes an access ramp for personnel with reduced mobility. In addition, there are three large windows – one of which can be transformed into a semi-transparent, back-lit touchscreen – for the best visitor experience with minimal disturbance to the ALICE operators.

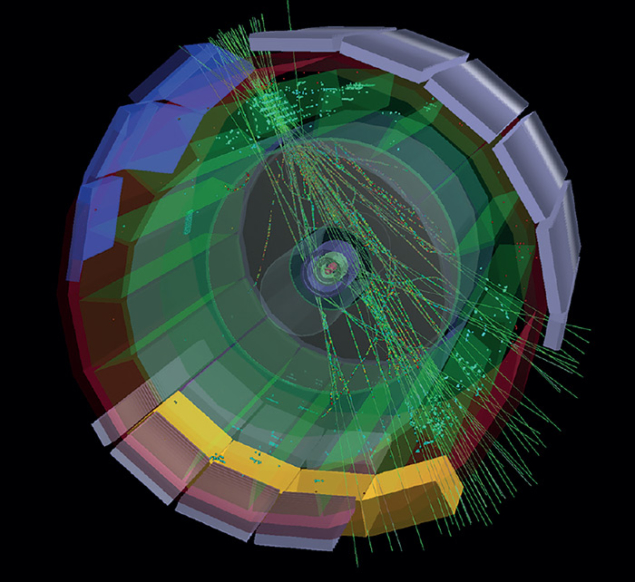

Image credit: Philippe Pillot.

Following the detector installations and interventions on almost all of the components of the hardware, electronics, and supporting systems, the ALICE teams began an early integration campaign at the end of 2014, allowing the ALICE detector to start routine cosmic running with most of the central-barrel detectors by the end of December. The first weeks of 2015 have seen intensive work on performing track alignment of the central-barrel detectors using cosmic muons under different magnetic-field settings. Hence, ALICE’s solenoid magnet has also been extensively tested – together with the dipole magnet in the muon arm – after almost two years of inactivity. Various special runs, such as TPC and TRD krypton calibrations, have been performed, producing a spectacular 5 PB of raw data in a single week, and providing a challenging stress test for the online systems.

The ALICE detector is located at point 2 of the LHC, and the end of the TI2 transfer line – which injects beam 1 (the clockwise beam) into the LHC from the Super Proton Synchrotron (SPS) – is 300 m from the interaction region. This set-up implies additional vacuum equipment and protection collimators close (80 m) to the ALICE cavern, which are a potential source of background interactions. The LHC teams have refurbished most of these components during LS1 to improve the background conditions during proton operations in Run 2.

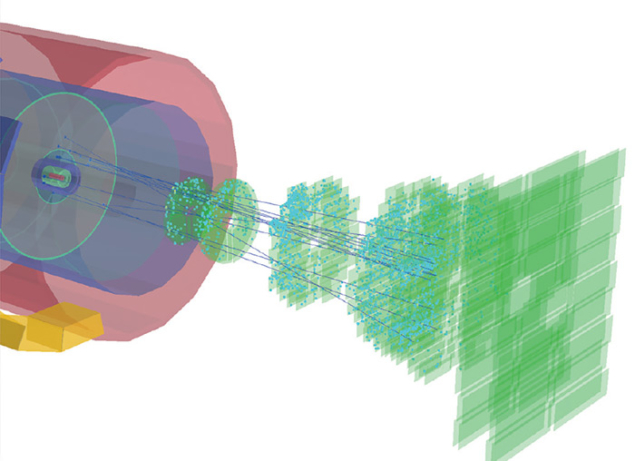

ALICE took data during the injection tests in early March when beam from the SPS was injected into the LHC and dumped half way along the ring (CERN Courier April 2015 p5). The tests also produced so-called beam-splash events on the SPS beam dump and the TI2 collimator, which were used by ALICE to perform the time alignment for the trigger detectors and to calibrate the beam-monitoring system. The splash events were recorded using all of the ALICE detectors that could be operated safely in such conditions, including the muon arm.

The LHC sector tests mark the beginning of Run 2. The ALICE collaboration plans to exploit fully the first weeks of LHC running with proton collisions at a luminosity of about 10³¹ Hz/cm². The aim will be to collect rare triggers and switch to a different trigger strategy (an optimized balance of minimum bias and rare triggers) when the LHC finally moves to operation with a proton bunch separation of 25 ns.

Control of ALICE’s operating luminosity during the 25 ns phase will be challenging, because the experiment has to operate with very intense beam currents but relatively low luminosity in the interaction region. This requires using online systems to monitor the luminous beam region continuously, to control its transverse size and ensure proper feedback to the LHC operators. At the same time, optimized trigger algorithms will be employed to reduce the fraction of pile-up events in the detector.

The higher energy of proton collisions of Run 2 will result in a significant increase in the cross-sections for hard probes, and the long-awaited first lead–lead run after LS1 will see ALICE operating at a luminosity of 10²⁷ Hz/cm². However, the ALICE collaboration is already looking into the future with its upgrade plans for LS2, focusing on physics channels that do not exhibit hardware trigger signatures in a high-multiplicity environment like that in lead–lead collisions. At the current event storage rate of 0.5 kHz, the foreseen boost of luminosity from the present 10²⁷ Hz/cm² to more than 6 × 10²⁷ Hz/cm² will increase the collected statistics by a factor of 100. This will require free-running data acquisition and storage of the full data stream to tape for offline analysis.
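The luminosities quoted above translate into interaction rates through the standard relation rate = luminosity × cross-section. The Python sketch below works through that conversion for the two lead–lead luminosities, 10²⁷ and 6 × 10²⁷ Hz/cm² (that is, ten to the power 27). The hadronic lead–lead cross-section of about 8 b is an assumption introduced here for illustration and is not a figure from this article; the function and constant names are likewise illustrative.

```python
BARN_IN_CM2 = 1e-24  # 1 barn expressed in cm^2

# ASSUMPTION: approximate hadronic lead-lead cross-section, not taken from the text.
ASSUMED_PBPB_CROSS_SECTION_BARN = 8.0


def interaction_rate_hz(luminosity_hz_per_cm2: float, cross_section_barn: float) -> float:
    """Interaction rate in Hz for a given luminosity (Hz/cm^2) and cross-section (barn)."""
    return luminosity_hz_per_cm2 * cross_section_barn * BARN_IN_CM2


# Luminosities quoted in the text.
run2_rate_hz = interaction_rate_hz(1e27, ASSUMED_PBPB_CROSS_SECTION_BARN)
ls2_rate_hz = interaction_rate_hz(6e27, ASSUMED_PBPB_CROSS_SECTION_BARN)

print(f"Run 2 lead-lead rate:     {run2_rate_hz / 1e3:.0f} kHz")
print(f"After LS2 lead-lead rate: {ls2_rate_hz / 1e3:.0f} kHz")
```

With this assumed cross-section, the Run 2 luminosity gives about 8 kHz, in line with the minimum-bias collision rate quoted earlier for lead running, and the post-LS2 luminosity gives about 48 kHz, the same figure quoted earlier for the upgraded calorimeter read-out. Both are far above the 0.5 kHz event storage rate, which is why a free-running read-out is needed to record the full stream.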

In this way, the LS2 upgrades will allow ALICE to exploit the full potential of the LHC for a complete characterization of quark–gluon plasma through measurements of unprecedented precision.