CERN’s Graeme Stewart surveys six decades of computing milestones in high-energy physics and describes the immense challenges in taming the data volumes from future experiments in a rapidly changing technological landscape.

It is impossible to envisage high-energy physics without its foundation of microprocessor technology, software and distributed computing. Almost as soon as CERN was founded the first contract to provide a computer was signed, but it took manufacturer Ferranti more than two years to deliver “Mercury”, our first valve-based behemoth, in 1958. So early did this machine arrive that the venerable FORTRAN language had yet to be invented! A team of about 10 people was required for operations and the I/O system was already a bottleneck. It was not long before faster and more capable machines were available at the lab. By 1963, an IBM 7090 based on transistor technology was available with a FORTRAN compiler and tape storage. This machine could analyse 300,000 frames of spark-chamber data – a big early success. By the 1970s, computers were important enough that CERN hosted its first Computing and Data Handling School. It was clear that computers were here to stay.

By the time of the LEP era in the late 1980s, CERN hosted multiple large mainframes. Workstations, to be used by individuals or small teams, had become feasible. DEC VAX systems were a big step forward in power, reliability and usability and their operating system, VMS, is still talked of warmly by older colleagues in the field. Even more economical machines, personal computers (PCs), were also reaching a threshold of having enough computing power to be useful to physicists. Moore’s law, which predicted the doubling of transistor densities every two years, was well established and PCs were riding this technological wave. More transistors meant more capable computers, and every time transistors got smaller, clock speeds could be ramped up. It was a golden age where more advanced machines, running ever faster, gave us an exponential increase in computing power.

Key also to the computing revolution, alongside the hardware, was the growth of open-source software. The GNU project had produced many utilities that could be used by hackers and coders on which to base their own software. With the start of the Linux project to provide a kernel, humble PCs became increasingly capable machines for scientific computing. Around the same time, Tim Berners-Lee’s proposal for the World Wide Web, which began as a tool for connecting information for CERN scientists, started to take off. CERN realised the value in releasing the web as an open standard and in doing so enabled a success that today connects almost the entire planet.

LHC computing

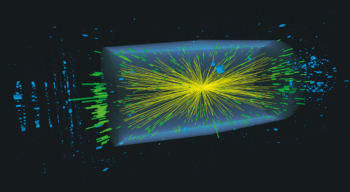

This interconnected world was one of the cornerstones of the computing that was envisaged for the Large Hadron Collider (LHC). Mainframes were not enough, nor were local clusters. What the LHC needed was a worldwide system of interconnected computing systems: the Worldwide LHC Computing Grid (WLCG). Not only would information need to be transferred, but huge amounts of data and millions of computer jobs would need to be moved and executed, all with a reliability that would support the LHC’s physics programme. A large investment in brand new grid technologies was undertaken, and software engineers and physicists in the experiments had to develop, deploy and operate a new grid system utterly unlike anything that had gone before. Despite rapid progress in computing power, storage space and networking, it was extremely hard to make a reliable, working distributed system for particle physics out of these pieces. Yet we achieved this incredible task. During the past decade, thousands of physics results from the four LHC experiments, including the Higgs-boson discovery, were enabled by the billions of jobs executed and the petabytes of data shipped around the world.

The software that was developed to support the LHC is equally impressive. The community had made a wholesale migration from the LEP FORTRAN era to C++ and millions of lines of code were developed. Huge software efforts in every experiment produced frameworks that managed data taking and reconstruction of raw events to analysis data. In simulation, the Geant4 toolkit enabled the experiments to begin data-taking at the LHC with a fantastic level of understanding of the extraordinarily complex detectors, enabling commissioning to take place at a remarkable rate. The common ROOT foundational libraries and analysis environment allowed physicists to process the billions of events that the LHC supplied and extract the physics from them successfully at previously unheard of scales.

Changes in the wider world

While physicists were busy preparing for the LHC, the web became a pervasive part of people’s lives. Internet superpowers like Google, Amazon and Facebook grew up as the LHC was being readied and this changed the position of particle physics in the computing landscape. Where particle physics had once been a leading player in software and hardware, enjoying good terms and some generous discounts, we found ourselves increasingly dwarfed by these other players. Our data volumes, while the biggest in science, didn’t look so large next to Google; the processing power we needed, more than we had ever used before, was small beside Amazon; and our data centres, though growing, were easily outstripped by Facebook.

Technology, too, started to shift. Since around 2005, Moore’s law, while still largely holding, has no longer been accompanied by increases in CPU clock speeds. Programs that ran in a serial mode on a single CPU core therefore started to become constrained in their performance. Instead, performance gains would come from concurrent execution on multiple threads or from using vectorised maths, rather than from faster cores. Experiments adapted by executing more tasks in parallel – from simply running more jobs at the same time to adopting multi-process and multi-threaded processing models. This post hoc parallelism was often extremely difficult because the code and frameworks written for the LHC had assumed a serial execution model.
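The simplest form of this parallelism, processing independent events concurrently, can be sketched as follows. This is a minimal illustration and not any experiment's framework: the `reconstruct` function, the toy event data and the worker count are all hypothetical, and a thread pool stands in for whatever multi-process or multi-threaded model a real framework would use.

```python
from concurrent.futures import ThreadPoolExecutor


def reconstruct(event):
    """Toy stand-in for per-event reconstruction: sum the hit energies."""
    return sum(event)


def process_serial(events):
    """Serial model: one event after another on a single core."""
    return [reconstruct(event) for event in events]


def process_concurrent(events, workers=4):
    """Event-level parallelism: independent events are handed to a pool of workers.

    Executor.map returns results in input order, so the output matches the serial loop.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(reconstruct, events))


# Hypothetical toy data: each event is a list of hit energies.
events = [[float(i + j) for j in range(5)] for i in range(8)]
serial_results = process_serial(events)
concurrent_results = process_concurrent(events)
```

The two results agree only because `reconstruct` touches no shared state. Code written with global caches or shared buffers, as serial code often is, would need locking or restructuring first, which is where much of the difficulty of retrofitting parallelism lies. Note also that in CPython a thread pool does not speed up CPU-bound pure-Python work; swapping in `ProcessPoolExecutor` would correspond more closely to a multi-process model.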

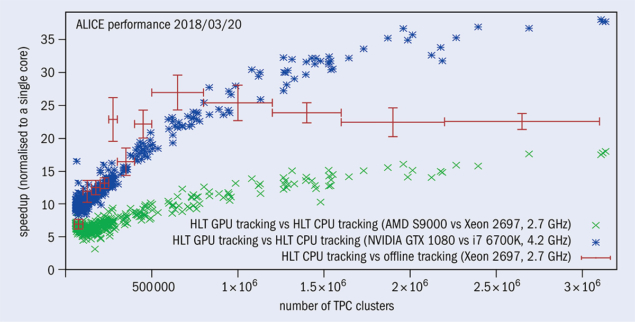

The barriers being discovered for CPUs also caused hardware engineers to rethink how to exploit CMOS technology for processors. The past decade has witnessed the rise of the graphics processing unit (GPU) as an alternative way to exploit transistors on silicon. GPUs run with a different execution model: much more of the silicon is devoted to floating-point calculations, and there are many more processing cores, but each core is smaller and less powerful than a CPU core. To utilise such devices effectively, algorithms often have to be entirely rethought and data layouts have to be redesigned. Much of the convenient, but slow, abstraction power of C++ has to be given up in favour of more explicit code and simpler layouts. This rapid evolution also poses long-term problems for the code: there is no single way to program a GPU, and vendors’ toolkits are usually quite specific to their hardware.
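One common example of such a data-layout redesign is moving from an "array of structures" to a "structure of arrays". The sketch below is illustrative only: the hit fields (`x`, `y`, `energy`) and the helper names are hypothetical, and plain Python stands in for the C++ or GPU code where the layout actually affects performance.

```python
from array import array

# Array of structures: one object per hit, convenient to write
# but with each field scattered across many small objects.
hits_aos = [
    {"x": 1.0, "y": 2.0, "energy": 0.5},
    {"x": 3.0, "y": 4.0, "energy": 1.5},
    {"x": 5.0, "y": 6.0, "energy": 2.0},
]


def to_soa(hits):
    """Convert to structure of arrays: one contiguous array of doubles per field."""
    return {
        field: array("d", (hit[field] for hit in hits))
        for field in ("x", "y", "energy")
    }


def total_energy_aos(hits):
    """Walk every hit object to pick out a single field."""
    return sum(hit["energy"] for hit in hits)


def total_energy_soa(columns):
    """Read only the 'energy' column; 'x' and 'y' are never touched."""
    return sum(columns["energy"])


hits_soa = to_soa(hits_aos)
```

Both functions give the same total, but the second reads a single contiguous block of numbers, which is the kind of access pattern that vector units and GPU cores handle well. The price is the loss of the per-hit object that made the original code convenient to write.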


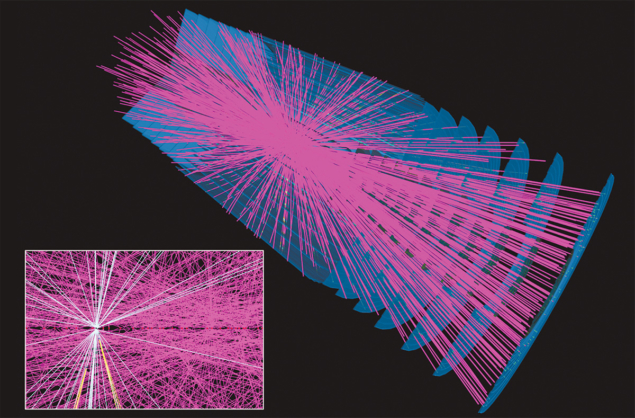

All of this would be less important were it the case that the LHC experiments were standing still, but nothing could be further from the truth. For Run 3 of the LHC, scheduled to start in 2021, the ALICE and LHCb collaborations are installing new detectors and preparing to take massively more data than they have up to now. Hardware triggers are being dropped in favour of full software processing systems and continuous data processing. The high-luminosity upgrade of the LHC for Run 4, from 2026, will be accompanied by new detector systems for ATLAS and CMS, much higher trigger rates and greatly increased event complexity. All of this physics needs to be supported by a radical evolution of software and computing systems, and in a more challenging sociological and technological environment. Nor will the LHC be the only big scientific player in the future. Facilities such as DUNE, FAIR, SKA and LSST will come online and have to handle as much data as CERN and the WLCG, if not more. That is both a challenge and an opportunity to work with new scientific partners in the era of exascale science.

There is one solution that we know will not work: simply scaling up the money spent on software and computing. We will need to live with flat budgets, so if the event rate of an experiment increases by a factor of 10 then the budget per event shrinks by the same factor! Recognising this, the HEP Software Foundation (HSF) was invited by the WLCG in 2016 to produce a roadmap for how to evolve software and computing in the 2020s – resulting in a community white paper supported by hundreds of experts in many institutions worldwide (CERN Courier April 2018 p38). In parallel, CERN openlab – a public–private partnership through which CERN collaborates with leading ICT companies and other research organisations – published a white paper setting out specific challenges that are ripe for tackling through collaborative R&D projects with leading commercial partners.
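The flat-budget arithmetic can be made explicit with a short sketch. The function names and the numbers used with them are purely illustrative and are not taken from any experiment's planning.

```python
def budget_per_event(total_budget, n_events):
    """Money available to process one event, in the same units as total_budget."""
    return total_budget / n_events


def required_cost_reduction(rate_increase, budget_increase=1.0):
    """Factor by which the cost per event must fall to stay within budget.

    With a flat budget (budget_increase = 1) this is simply the rate increase.
    """
    return rate_increase / budget_increase


# Hypothetical numbers: the same budget spread over 10 times as many events.
before = budget_per_event(1000.0, 100)
after = budget_per_event(1000.0, 100 * 10)
```

With a flat budget and 10 times the events, `after` is one tenth of `before`, so software and computing must become 10 times cheaper per event just to keep pace.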

Facing the data onslaught

Since the white paper was published, the HSF and the LHC-experiment collaborations have worked hard to tackle the challenges it lays out. Understanding how event generators can be best configured to get good physics at minimum cost is a major focus, while efforts to get simulation speed-ups from classical fast techniques, as well as new machine-learning approaches, have intensified. Reconstruction algorithms have been reworked to take advantage of GPUs and other accelerators, which are being seriously considered for Run 3 by CMS and LHCb (ALICE, meanwhile, is making even more use of GPUs following their successful deployment in Run 2). In the analysis domain, the core of ROOT is being reworked to be faster and also easier for analysts to work with. Much inspiration is taken from the Python ecosystem, using Jupyter notebooks and services like SWAN.

These developments are firmly rooted in the new distributed models of software development based on GitHub or GitLab and with worldwide development communities, hackathons and social coding. Open source is also vital, and all of the LHC experiments have now opened up their software. In the computing domain there is intense R&D into improving data management and access, and the ATLAS-developed Rucio data management system is being adopted by a wide range of other HEP experiments and many astronomy communities. Many of these developments got a shot in the arm from the IRIS–HEP project in the US; other European initiatives, such as IRIS in the UK and the German IDT-UM project, are helping, though much more remains to be done.

All this sets us on a good path for the future, but the problems remain significant, the implementation of solutions is difficult and the level of uncertainty is high. Looking back to the first computers at CERN and then imagining the same stretch of time into the future, predictions are next to impossible. Disruptive technology, like quantum computing, might even entirely revolutionise the field. However, if there is one thing that we can be sure of, it’s that the next decades of software and computing at CERN will very likely be as interesting and surprising as the ones already past.