Gerard ’t Hooft reflects on how renormalisation elevated the Brout–Englert–Higgs mechanism to a consistent theory capable of making testable predictions.

Often in physics, experimentalists observe phenomena that theorists had not been able to predict. When the muon was discovered, theoreticians were confused; a particle had been predicted, but not this one. Isidor Rabi responded with his famous outcry: “who ordered that?” The J/ψ is another special case. A particle was discovered with properties so different from those of the particles that were expected that the first guesses as to what it was were largely mistaken. Soon it became evident that it was a predicted particle after all, but its features happened to be more exotic than had been foreseen. This was an experimental discovery requiring new twists in the theory, which we now understand very well. The Higgs particle also has a long and interesting history, but from my perspective, it was to become a triumph for theory.

From the 1940s, long before any indications were seen in experiments, there were fundamental problems in all theories of the weak interaction. Then we learned from very detailed and beautiful measurements that the weak force seemed to have a vector-minus axial-vector (V-A) structure. This implied that, just as in Yukawa’s theory for the strong nuclear force, the weak force can also be seen as resulting from an exchange of particles. But here, these particles had to be the energy quanta of vector and axial-vector fields, so they must have spin one, with positive and negative parities mixed up. They also must be very heavy. This implied that, certainly in the 1960s, experiments would not be able to detect these intermediate particles directly. But in theory, we should be able to calculate accurately the effects of the weak interaction in terms of just a few parameters, as could be done with the electromagnetic force.

Electromagnetism was known to be renormalisable – that is, by carefully redefining and rearranging the mass and interaction parameters, all observable effects would become calculable and predictable, avoiding meaningless infinities. But now we had a difficulty: the weak exchange particles differed from the electromagnetic ones (the photons) because they had mass. The mass was standing in the way when you tried to do what was well understood in electromagnetism. How exactly a correct formalism should be set up was not known, and the relationship between renormalisability and gauge invariance was not understood at all. Indeed, today we can say that the first hints were already there by 1954, when C N Yang and Robert Mills wrote a beautiful paper in which they generalised the principle of local gauge invariance to include gauge transformations that affect the nature of the particles involved. In its most basic form, their theory described photons with electric charge.

Thesis topic

In 1969 I began my graduate studies under the guidance of Martinus J G Veltman. He explained to me the problem he was working on: if photons were to have mass, then renormalisation would not work the same way. Specifically, the theory would fail to obey unitarity, a quantum mechanical rule that guarantees probabilities are conserved. I was given various options for my thesis topic, but they were not as fundamental as the issues he was investigating. “I want to work with you on the problem you are looking at now,” I said. Veltman replied that he had been working on his problem for almost a decade; I would need lots of time to learn about his results. “First, read this,” he said, and he gave me the Yang–Mills paper. “Why?” I asked. He said, “I don’t know, but it looks important.”

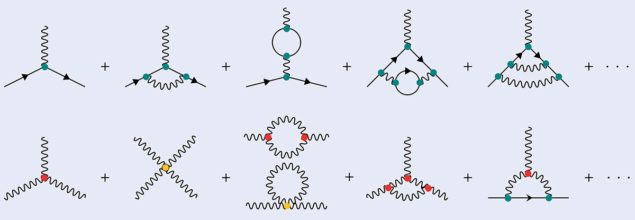

That, I could agree with. This was a splendid idea. Why can’t you renormalise this? I had convinced myself that it should be possible, in principle. The Yang–Mills theory was a relativistic quantised field theory. But Veltman explained that, in such a theory, you must first learn what the Feynman rules are. These are the prescriptions that you have to follow to get the amplitudes generated by the theory. From them you can read off whether the amplitudes are unitary and obey dispersion relations, and check that everything works out as expected.

Many people thought that renormalisation – even quantum field theory – was suspect. They had difficulties following Veltman’s manipulations with Feynman diagrams, which required integrations that do not converge. To many investigators, he seemed to be sweeping the difficulties with the infinities under the rug. Nature must be more clever than this! Yang–Mills seemed to be a divine theory with little to do with reality, so physicists were trying all sorts of totally different approaches, such as S-matrix theory and Regge trajectories. Veltman decided to ignore all that.

Solid-state inspiration

Earlier in the decade, some investigators had been inspired by results from solid-state physics. Inside solids, vibrating atoms and electrons were described by nonrelativistic quantum field theories, and those were conceptually easier to understand. Philip Anderson had learned to understand the phenomenon of superconductivity as a process of spontaneous symmetry breaking; photons would obtain a mass, and this would lead to a remarkable rearrangement of the electrons as charge carriers that would no longer generate any resistance to electric currents. Several authors realised that this procedure might apply to the weak force. In the summer of 1964, Peter Higgs submitted a manuscript to Physical Review Letters, where he noted that the mechanism of making photons massive should also apply to relativistic particle systems. But there was a problem. Jeffrey Goldstone had sound mathematical arguments to expect the emergence of massless scalar particles as soon as a continuous symmetry breaks down spontaneously. Higgs argued that this theorem should not apply to spontaneously broken local symmetries, but critics were unconvinced.

The journal sent Higgs’s manuscript out to be peer reviewed. The reviewer did not see what the paper would add to our understanding. “If this idea has anything to do with the real world, would there be any possibility to check it experimentally?” The correct question would have been what the paper would imply for the renormalisation procedure, but this question was in nobody’s mind. Anyway, Higgs gave a clear and accurate answer: “Yes, there is a consequence: this theory not only explains where the photon mass comes from, but it also predicts a new particle, a scalar particle (a particle with spin zero), which unlike all other particles, forms an incomplete representation of the local gauge symmetry.” In the meantime, other papers appeared about the photon mass-generation process, not only by François Englert and Robert Brout in Brussels, but also by Tom Kibble, Gerald Guralnik and Carl Hagen in London. And Sheldon Glashow, Abdus Salam and Steven Weinberg were formulating their first ideas (all independently) about using local gauge invariance to create models for the weak interaction.


At the time spontaneous symmetry breaking was being incorporated into quantum field theory, the significance of renormalisation and the predicted scalar particles were hardly mentioned. Certainly, researchers were not able to predict the mass of such particles. Personally, although I had heard about these ideas, I also wasn’t sure I understood what they were saying. I had my own ways of learning how to understand things, so I started to study everything from the ground up.

If you work with quantum mechanics, and you start from a relativistic classical field theory, to which you add the Copenhagen procedure to turn that into quantum mechanics, then you should get a unitary theory. The renormalisation procedure amounts to rearranging all expressions that threaten to become infinite, because the integrals diverge, so that the infinities affect only unobservable properties of particles and fields, such as their “bare mass” and “bare charge”. If you understand how to get such things under control, then your theory should become a renormalised description of massive particles. But there were complications.

The infinities that require a renormalisation procedure to tame them originate from uncontrolled behaviour at very tiny distances, where the effective energies are large and consequently the effects of mass terms for the particles should become insignificant. This revealed that you first have to renormalise the theory without any masses in it, where the spontaneous breakdown of the local symmetry also becomes insignificant. You had to get the particle book-keeping right. A massless photon has only two observable field components (they can be left- or right-rotating), whereas a massive particle with the same spin can rotate in three different ways. One degree of freedom did not match. This was why an extra field was needed. If you wanted massive photons with electric charges +, 0 or –, you would need a scalar field with four components; one of these would represent the total field strength, and would behave as an extra, neutral, spin-0 particle – the observable particle that Higgs had talked about – but the other three would raise the number of spinning degrees of freedom of the three vector bosons from two to three each (see “Dynamical” figure).
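The book-keeping in the previous paragraph is simple arithmetic, and a few lines make the balance explicit. This is only a minimal sketch of the counting for the case described above (three vector bosons with charges +, 0 and –, plus a four-component scalar field); the constant names are hypothetical labels chosen for this illustration.

```python
# Degree-of-freedom book-keeping for the example in the text:
# three vector bosons (charges +, 0, -) plus a four-component scalar field.
N_VECTORS = 3             # the three spin-one bosons
MASSLESS_VECTOR_DOF = 2   # left- or right-rotating only
MASSIVE_VECTOR_DOF = 3    # a massive spin-one particle rotates in three ways
SCALAR_COMPONENTS = 4     # components of the extra scalar field

# Before symmetry breaking: massless vectors plus all scalar components.
before = N_VECTORS * MASSLESS_VECTOR_DOF + SCALAR_COMPONENTS

# Each vector boson absorbs one scalar component to gain its third state.
absorbed = N_VECTORS * (MASSIVE_VECTOR_DOF - MASSLESS_VECTOR_DOF)

# Whatever is left over survives as neutral spin-0 particles.
higgs = SCALAR_COMPONENTS - absorbed

# After symmetry breaking: massive vectors plus the leftover scalar(s).
after = N_VECTORS * MASSIVE_VECTOR_DOF + higgs

print(before, after, higgs)  # 10 10 1
```

The total of ten field components is the same on both sides; the only thing that changes is how they are distributed, which leaves exactly one neutral spin-0 particle over.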

One question

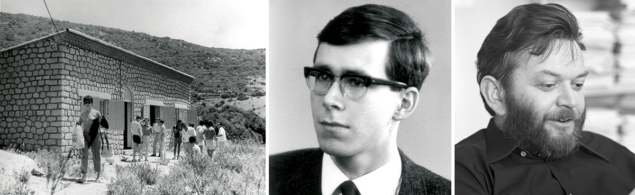

In 1970 Veltman sent me to a summer school organised by Maurice Lévy in a new science institute at Cargèse on the French island of Corsica. The subject would be the study of the Gell-Mann–Lévy model for pions and nucleons, in particular its renormalisation and the role of spontaneous symmetry breaking. Would renormalisation be possible in this model, and would it affect its symmetry? The model was very different from what I had just started to study: Yang–Mills theory with spontaneous breaking of its symmetry. There were quite a few reputable lecturers besides Lévy himself: Benjamin Lee and Kurt Symanzik had specialised in renormalisation. Shy as I was, I asked Lee only one question, and put the same one to Symanzik: does your analysis apply to the Yang–Mills case?

Both gave me the same answer: if you are Veltman’s student, ask him. But I had, and Veltman did not believe that these topics were related. I thought that I had a better answer, and I fantasised that I was the only person on the planet who knew how to do it right. It was not obvious at all; I had two German roommates at the hotel where I had been put, who tried to convince me that renormalisation of Feynman graphs where lines cross each other would be unfathomably complicated.

Veltman had not only set up detailed, fully running machinery to handle the renormalisation of all sorts of models, but he had also designed a futuristic computer program to do the enormous amount of algebra required to handle the numerous Feynman diagrams that appear to be relevant for even the most basic computations. I knew he had those programs ready and running. He was now busy with some final checks: if his present attempts to check the unitarity of his renormalised model still failed, we should seriously consider giving this up. Yang–Mills theories for the weak interactions would not work as required.

But Veltman had not thought of putting a spin-zero, neutral particle in his model, certainly not if it wasn’t even in a complete representation of the gauge symmetry. Why should anyone add that? After returning from Cargèse I went to lunch with Veltman, during which I tried to persuade him. Walking back to our institute, he finally said, “Now look, what I need is not an abstract mathematical idea, what I want is a model, with a Lagrangian, from which I can read off the Feynman diagrams to check it with my program…”. “But that Lagrangian I can give you,” I said. Next, he walked straight into a tree! A few days after I had given him the Lagrangian, he came to me, quite excited. “Something strange,” he said, “your theory isn’t right because it still isn’t unitary, but I see that at several places, if the numbers had been a trifle different, it could have worked out.” Had he copied those factors ¼ and ½ that I had in my Lagrangian, I wondered? I knew they looked odd, but they originated from the fact that the Higgs field has isospin ½ while all other fields have isospin one.

No, Veltman had thought that those factors came from a sloppy notation I must have been using. “Try again,” I asked. He did, and everything fell into place. Most of all, we had discovered something important. This was the beginning of an intensive but short collaboration. My first publication “Renormalization of massless Yang–Mills fields”, published in October 1971, concerned the renormalisation of the Yang–Mills theory without the mass terms. The second publication that year, “Renormalizable Lagrangians for massive Yang–Mills fields,” where it was explained how the masses had to be added, had a substantial impact.

There was an important problem left wide open, however: even if you had the correct Feynman diagrams, the process of cancelling out the infinities could still leave finite, non-vanishing terms that ruin the whole idea. These so-called “anomalies” must also cancel out. We found a trick called dimensional regularisation, which would guarantee that anomalies cancel except in the case where particles spin preferentially in one direction. Fortunately, as charged leptons tend to rotate in opposite directions compared to quarks, it was discovered that the effects of the quarks would cancel those of the leptons.
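The quark–lepton cancellation can be checked with exact fractions. The following is a minimal sketch, assuming the textbook hypercharge assignments for one Standard Model generation (convention Q = T3 + Y, every fermion written as a left-handed field, right-handed ones entering as conjugates); the table names and the `anomalies` helper are hypothetical, introduced only for this illustration, and the four sums are the standard anomaly coefficients involving hypercharge.

```python
from fractions import Fraction as F

# One Standard Model generation, every fermion written as a left-handed field.
# Each entry: (name, colour multiplicity, SU(2) multiplicity, hypercharge Y),
# with Q = T3 + Y. These are textbook assignments, assumed for this sketch.
QUARKS = [
    ("Q_L", 3, 2, F(1, 6)),   # quark doublet (u, d)
    ("u^c", 3, 1, F(-2, 3)),  # conjugate of right-handed up quark
    ("d^c", 3, 1, F(1, 3)),   # conjugate of right-handed down quark
]
LEPTONS = [
    ("L_L", 1, 2, F(-1, 2)),  # lepton doublet (neutrino, electron)
    ("e^c", 1, 1, F(1)),      # conjugate of right-handed electron
]


def anomalies(fields):
    """Sum the four hypercharge anomaly coefficients over the given fields."""
    return {
        # colour triplets, weighted by SU(2) multiplicity
        "SU3^2 Y": sum(nw * y for _, nc, nw, y in fields if nc == 3),
        # SU(2) doublets, weighted by colour multiplicity
        "SU2^2 Y": sum(nc * y for _, nc, nw, y in fields if nw == 2),
        # cubic hypercharge anomaly over every field component
        "Y^3": sum(nc * nw * y**3 for _, nc, nw, y in fields),
        # mixed gravitational anomaly over every field component
        "grav^2 Y": sum(nc * nw * y for _, nc, nw, y in fields),
    }


for label, fields in [("quarks", QUARKS), ("leptons", LEPTONS),
                      ("total", QUARKS + LEPTONS)]:
    print(label, {k: str(v) for k, v in anomalies(fields).items()})
```

With these assignments, the quarks alone leave SU2^2 Y = 1/2 and Y^3 = -3/4, the leptons alone leave -1/2 and 3/4, and only the complete generation sums to zero in every entry, which is the sense in which the quarks cancel the leptons.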

The fourth component

Within only a few years, a complete picture of the fundamental interactions became visible, where experiment and theory showed a remarkable agreement. It was a fully renormalisable model where all quarks and all leptons were represented as “families” that were only complete if each quark species had a leptonic counterpart. There was an “electroweak force”, where electromagnetism and the weak force interfere to generate the force patterns observed in experiments, and the strong force was tamed at almost the same time. Thus the electroweak theory and quantum chromodynamics were joined into what is now known as the Standard Model.


This theory agreed beautifully with observations, but it did not predict the mass of the neutral, spin-0 Higgs particle. Much later, when the W and the Z bosons were well established, the Higgs was still not detected. I tried to reassure my colleagues: be patient, we are almost there, we have three of the four components of this particle’s field. The fourth will come soon.

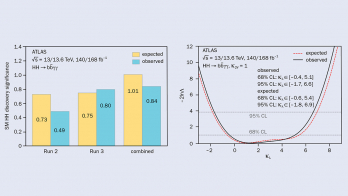

As the theoretical calculations and the experimental measurements became more accurate during the 1990s and 2000s, it became possible to derive the most likely mass value from indirect Higgs-particle effects that had been observed, such as those concerning the top-quark mass. On 4 July 2012 a new boson was directly detected close to where the Standard Model said the Higgs would be. After these first experimental successes, it was of utmost importance to check whether this was really the object we had been expecting. This has kept experimentalists busy for the past 10 years, and will continue to do so for the foreseeable future.

The discovery of the Higgs particle is a triumph for high technology and basic science, as well as accurate theoretical analyses. Efforts spanning more than half a century paid off in the summer of 2012, and a new era of understanding the particles, their masses and interactions began.