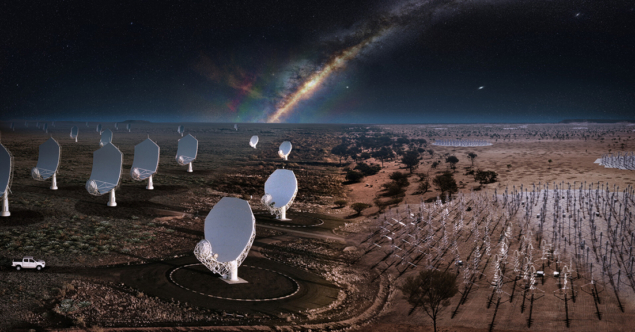

CERN has welcomed more than 120 delegates to an online kick-off workshop for a new collaboration on high-performance computing (HPC). CERN, SKAO (the organisation leading the development of the Square Kilometre Array), GÉANT (the pan-European network and services provider for research and education) and PRACE (the Partnership for Advanced Computing in Europe) will work together to realise the full potential of the coming generation of HPC technology for data-intensive science.

“It is an exascale project for an exascale problem,” said Maria Girone, CERN coordinator of the collaboration and CERN openlab CTO, in opening remarks at the workshop. “HPC is at the intersection of several important R&D activities: the expansion of computing resources for important data-intensive science projects like the HL-LHC and the SKA, the adoption of new techniques such as artificial intelligence and machine learning, and the evolution of software to maximise the potential of heterogeneous hardware architectures.”

The 29 September workshop, which was organised with the support of CERN openlab, saw participants establish the collaboration’s foundations, outline initial challenges and begin to define the technical programme. Four main initial areas of work were discussed at the event: training and centres of expertise, benchmarking, data access, and authorisation and authentication.

One of the largest challenges in using new HPC technology is the need to adapt to heterogeneous hardware. Meeting it requires developing and disseminating new programming skills, a task at the core of the new HPC collaboration's plan. Several examples showing the potential of heterogeneous systems were discussed. One is the EU-funded DEEP-EST project, which is developing a modular supercomputing prototype for exascale computing. DEEP-EST has already contributed to the re-engineering of high-energy physics algorithms for accelerated architectures, highlighting the significant mutual benefits of collaboration across fields when it comes to HPC. PRACE's strong record of providing support and training will also be critical to the success of the collaboration.

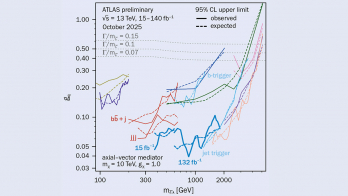

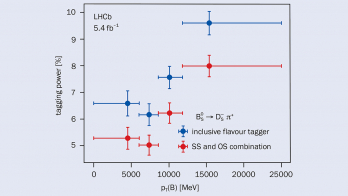

Establishing a common benchmark suite will help the organisations measure and compare the performance of different types of computing resources for data-analysis workflows from astronomy and particle physics. The suite will include applications representative of the high-energy physics (HEP) and astrophysics communities, reflecting today's needs as well as those of the future, and will augment the existing Unified European Applications Benchmark Suite.

Access is another challenge when using HPC resources. Data from the HL-LHC and the SKA will be globally distributed and will be moved over high-capacity networks, staged and cached to reduce latency, and eventually processed, analysed and redistributed. Accessing the HPC resources themselves involves adherence to strict cyber-security protocols. A technical area devoted to authorisation and authentication infrastructure is defining demonstrators to enable large scientific communities to securely access protected resources.

The collaboration will now move forward with its ambitious technical programme. Working groups are forming around specific challenges, with the partner organisations providing access to appropriate testbed resources. Important activities are already taking place in all four areas of work, and a second collaboration workshop will soon be organised.