Summary

ALICE: on the trail of a new state of matter

After two periods of lead–lead collisions at the LHC, complemented by proton–lead runs, new perspectives are opening up for understanding matter at high temperature and density, conditions in which quantum chromodynamics predicts the existence of a quark–gluon plasma. Designed to cope with the high particle densities generated in heavy-ion collisions, the ALICE experiment has provided many measurements of the medium produced at the LHC, which are summarized here. A new element is the large cross-section for so-called hard processes, such as the production of jets and heavy flavour, which can be used to “see” inside the medium.

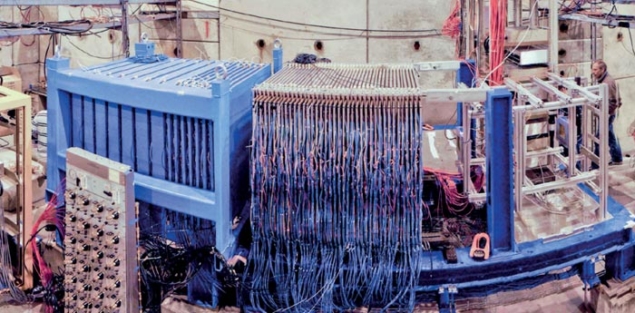

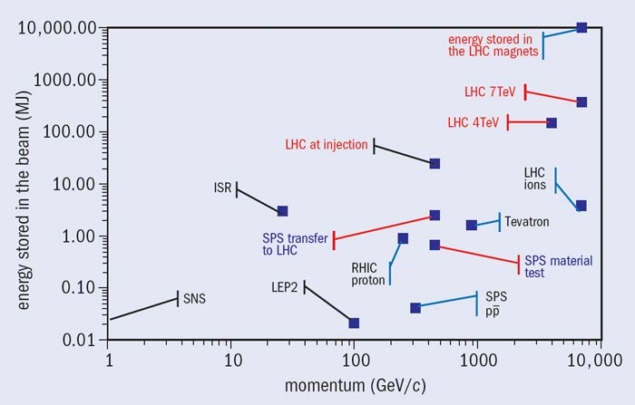

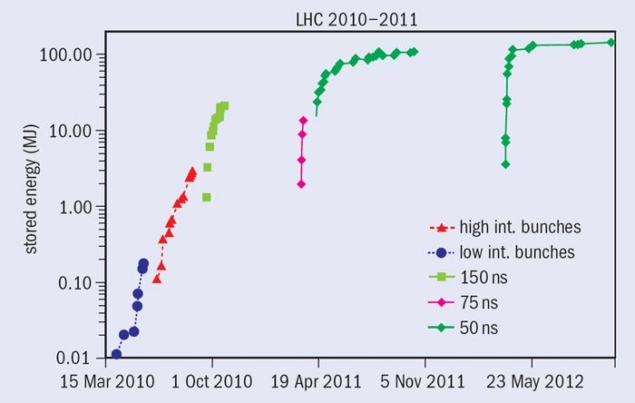

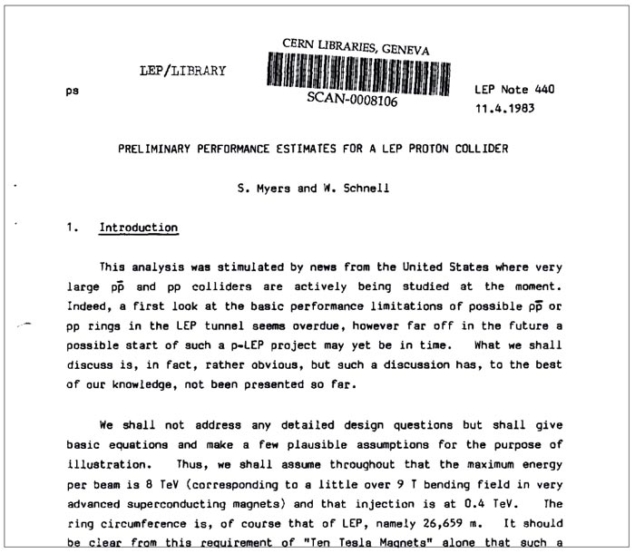

The dump of the lead beam in the early morning of 10 February this year marked the end of a successful and exciting first LHC running phase with heavy-ion beams. It started in November 2010 with the first lead–lead (PbPb) collisions at √sNN = 2.76 TeV per nucleon pair, when in one month of running the machine delivered an integrated luminosity of about 10 μb⁻¹ for each experiment. In the second period a year later, the LHC’s heavy-ion performance exceeded expectations because the instantaneous luminosity reached more than 10²⁶ cm⁻² s⁻¹ and the experiments collected about 10 times more integrated luminosity. A pilot proton–lead (pPb) run at √sNN = 5.02 TeV took place in September 2012, providing enough events for first surprises and publications. A full run followed in February, delivering 30 nb⁻¹ of pPb collisions – precious reference data for the PbPb studies.

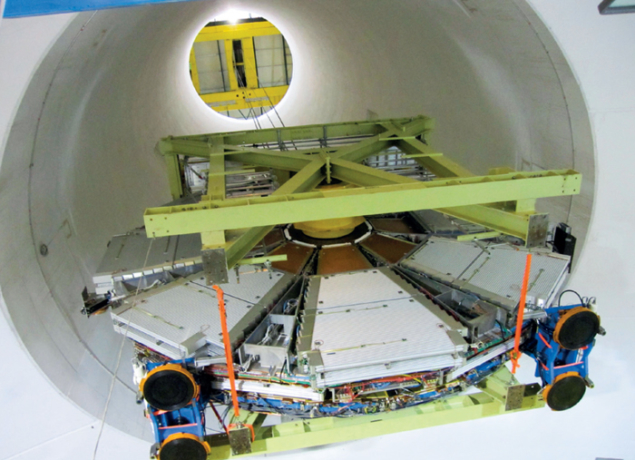

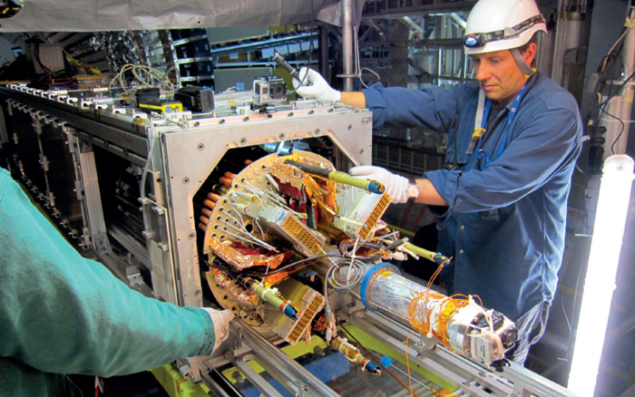

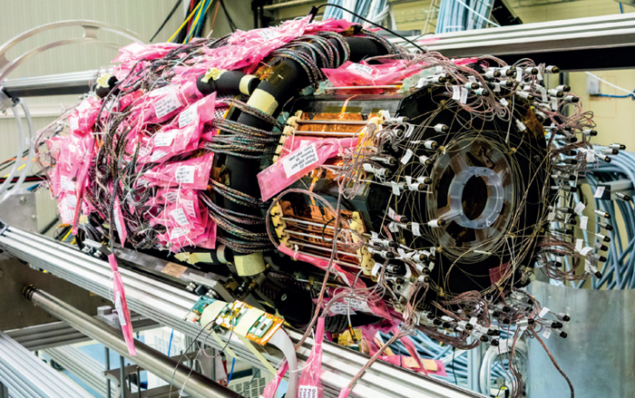

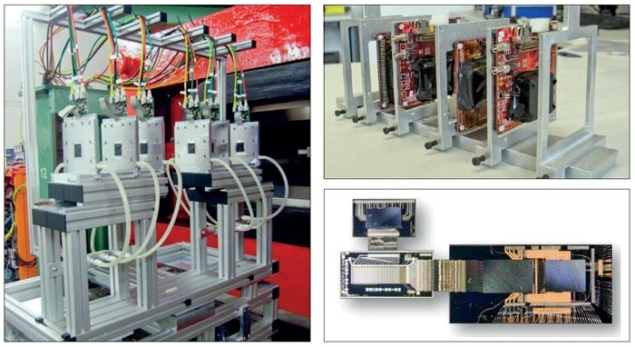

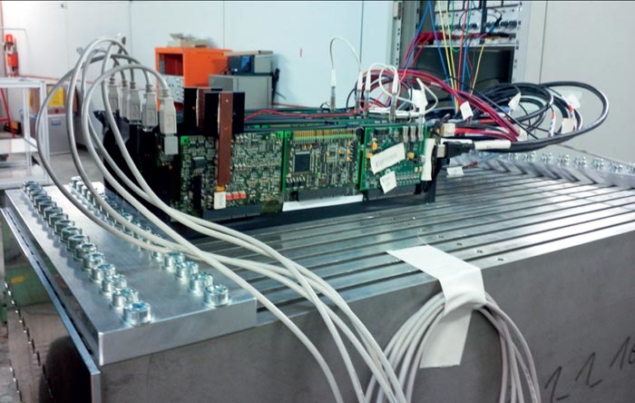

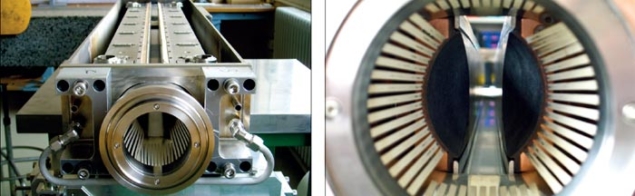

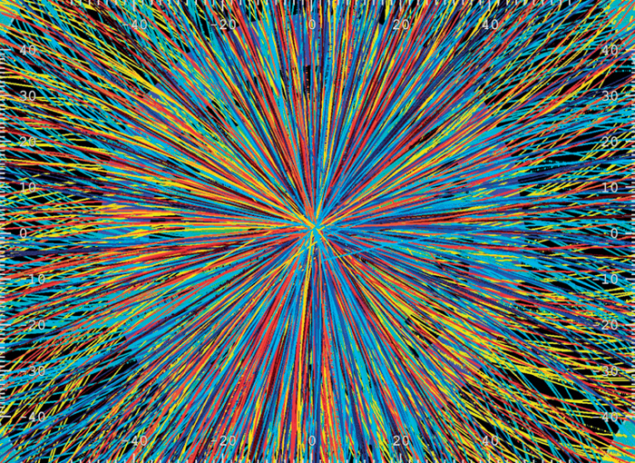

The ALICE experiment is optimized to cope with the large particle-densities produced in PbPb collisions and nothing was left unprepared for the first heavy-ion run in 2010. Nevertheless, immediately before the first collisions the tension was palpable until the first event displays appeared (figure 1). The image of the star-like burst with thousands of particles recorded by the time-projection chamber became an emblem for the accomplishment of a collaboration that had worked for 20 years on developing, building and operating the ALICE detector. With the arrival of a wealth of data, a new era for comprehension of the nature of matter at high temperature and density began, where QCD predicts that quark–gluon plasma (QGP) – a de-confined medium of quarks and gluons – exists.

Before the LHC started up, the Relativistic Heavy-Ion Collider (RHIC) at Brookhaven was the most powerful machine of this kind, producing collisions between gold (Au) ions with a maximum centre-of-mass energy of 200 GeV per nucleon pair. After 10 years of analysis – just before the first PbPb data-taking at the LHC – the experimental collaborations at RHIC came to the surprising conclusion that central AuAu collisions create droplets of an almost perfect, dense fluid of partonic matter that is 250,000 times hotter than the core of the Sun. Their results indicate that, because of the strength of the colour forces, the plasma of partons (quarks and gluons) produced in these collisions has not yet reached its asymptotically gas-like state and, therefore, it has been dubbed strongly interacting QGP (sQGP). This finding raised several questions. What would the newly created medium look like at the LHC? How much denser and hotter would it be? Would it still be a perfect liquid or would it be closer to a weakly coupled gas-like state? How would the abundantly produced hard probes be modified by the medium?

Denser, hotter, bigger

In the most central PbPb collisions at the LHC, the charged-particle density at mid-rapidity amounts to dN/dη ≈ 1600, which is about 2.1 times more per nucleon pair participating in the collision than at RHIC. Since the particles are also, on average, more energetic at the LHC, the transverse-energy density is about 2.5 times higher. This allows a rough estimate of the energy density of the medium that is produced. Assuming the same equilibration time of the plasma at RHIC and the LHC, the energy density has increased at the LHC by at least a factor of three, corresponding to an increase in temperature of more than 30% (CERN Courier June 2011 p17).
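The step from energy density to temperature can be checked with a few lines of arithmetic. The sketch below assumes the Stefan–Boltzmann scaling ε ∝ T⁴ of an ideal relativistic gas with a fixed number of degrees of freedom, an assumption that the text does not spell out; the function name is purely illustrative.

```python
def temperature_ratio(energy_density_ratio: float) -> float:
    """Temperature ratio implied by an energy-density ratio, assuming eps ∝ T^4."""
    if energy_density_ratio <= 0:
        raise ValueError("energy-density ratio must be positive")
    return energy_density_ratio ** 0.25


# Factor of three in energy density, as quoted in the text.
ratio = temperature_ratio(3.0)
print(f"T_LHC / T_RHIC ≈ {ratio:.2f}")
```

Under this assumption a threefold increase in energy density corresponds to a temperature higher by a little over 30%, in line with the estimate above.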

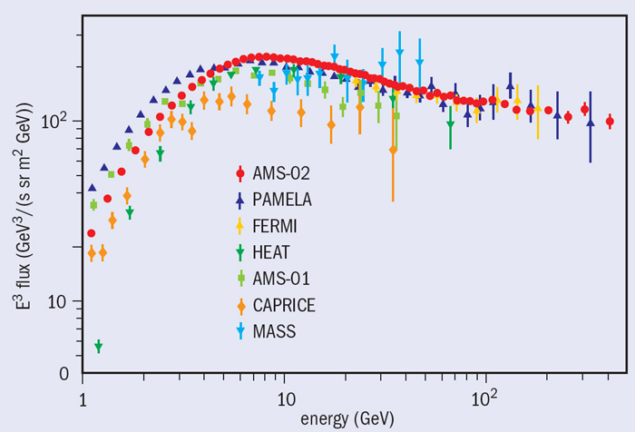

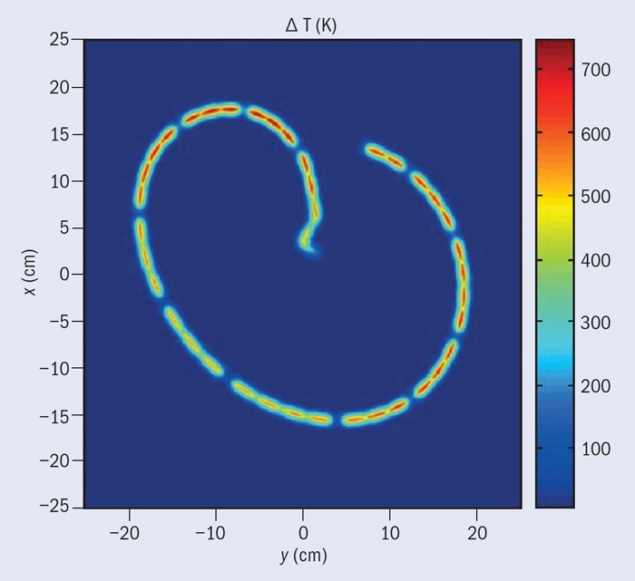

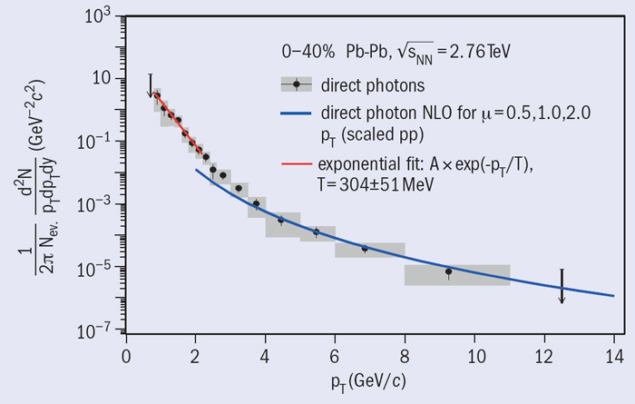

A more accurate thermometer is provided by the spectrum of the thermal photons emitted by the plasma that reach the detector unscathed. Whereas counting inclusive charged particles is a relatively easy task, the thermal photons have to be arduously separated from a large background of photons from meson decays and photons produced by QCD processes in collisions with large momentum-transfer, pT. The thermal photons appear in the low-energy region of the direct-photon spectrum (pT^γ < 2 GeV/c) as an excess above the yield expected from next-to-leading order QCD and have an exponential shape (figure 2). The inverse slope of this exponential measured by ALICE gives a value for the temperature, T = 304 ± 51 MeV, about 40% higher than at RHIC. In hydrodynamic models, this parameter corresponds to an effective temperature averaged over the time evolution of the reaction. The measured values suggest initial temperatures that are well above the critical temperature of 150–160 MeV.
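The inverse-slope extraction can be illustrated with a straight-line fit to the logarithm of the yield. This is a minimal sketch on synthetic, noise-free points generated with T = 0.304 GeV; it is not ALICE data or the collaboration's fitting procedure, and the function name, normalization and pT grid are assumptions made for the example.

```python
import numpy as np


def inverse_slope(pt, yields):
    """Fit ln(yield) = a - pt / T with a straight line and return T."""
    pt = np.asarray(pt, dtype=float)
    log_yield = np.log(np.asarray(yields, dtype=float))
    slope, _intercept = np.polyfit(pt, log_yield, 1)
    return -1.0 / slope


# Synthetic spectrum (NOT measured data), generated with T = 0.304 GeV.
pt = np.linspace(0.9, 2.0, 8)          # GeV/c
yields = 5.0 * np.exp(-pt / 0.304)     # arbitrary units
t_fit = inverse_slope(pt, yields)
print(f"T = {t_fit * 1000:.0f} MeV")
```

A real analysis would first subtract the expected QCD contribution and would propagate statistical and systematic uncertainties, which is where an error such as ±51 MeV comes from.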

In the same way that astronomers determine the space–time structure of extended sources using Hanbury-Brown–Twiss optical intensity interferometry, heavy-ion physicists use 3D momentum correlation-functions of identical bosons to determine the size of the medium produced (the freeze-out volume) and its lifetime. In line with predictions from hydrodynamics, the volume increases between RHIC and the LHC by a factor of two and the system lifetime increases by 30% (CERN Courier May 2011 p6).

Perfect quantum liquids are characterized by a low shear-viscosity to entropy ratio, η/s, for which a lower limit of ħ/4πkB is postulated. This property is directly related to the ability of the medium to transform spatial anisotropy of the initial energy density into momentum-space anisotropy. Experimentally, the momentum-space anisotropy is quantified by the Fourier decomposition of the distribution in azimuthal angle of the produced particles with respect to the reaction plane. The second Fourier coefficient, v2, is commonly denoted as elliptic flow. With respect to RHIC, v2 is found to increase in mid-central collisions by 30% (CERN Courier April 2011 p7). Calculations based on hydrodynamical models show that the v2 measured at the LHC is consistent with a low η/s, close to or challenging the postulated limit.
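The Fourier coefficients can be written as vn = ⟨cos n(φ − Ψ)⟩, where φ is the azimuthal angle of a particle and Ψ the reaction-plane angle. The sketch below evaluates this average for a toy distribution dN/dφ ∝ 1 + 2 v2 cos 2(φ − Ψ) with v2 = 0.1; the function name, the known plane angle and the toy distribution are assumptions for illustration, whereas a real measurement has to estimate the plane from the data and correct for its resolution.

```python
import numpy as np


def flow_coefficient(phi, psi, n, weights=None):
    """Return v_n = <cos(n (phi - psi))>, optionally weighted."""
    phi = np.asarray(phi, dtype=float)
    return float(np.average(np.cos(n * (phi - psi)), weights=weights))


# Toy azimuthal distribution with v2 = 0.1 and a known plane angle psi.
phi = np.linspace(0.0, 2.0 * np.pi, 360, endpoint=False)
psi = 0.3
density = 1.0 + 2.0 * 0.1 * np.cos(2.0 * (phi - psi))

v2 = flow_coefficient(phi, psi, 2, weights=density)
v3 = flow_coefficient(phi, psi, 3, weights=density)
print(f"v2 = {v2:.3f}, v3 = {v3:.3f}")
```

The same function with n = 3, 4, … gives the higher-order coefficients; here v3 vanishes because the toy distribution contains only a second harmonic.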

Further knowledge on the collective behaviour of the medium has been obtained from the spectral analysis of pions, kaons and protons. The so-called blast-wave fit can be used to determine the common radial expansion velocity, ⟨βr⟩. The velocity measured by ALICE comes to about 0.65 (in units of the speed of light) and has increased by 10% with respect to RHIC.

In di-hadron azimuthal correlations, elliptic flow manifests as cosine-shaped modulations that extend over large rapidity ranges. At the LHC, for central collisions, more complicated structures become prominent. These can be quantified by higher-order Fourier coefficients. Hydrodynamical models can then relate them to fluctuations of the initial density distribution of the interacting nucleons. Wavy structures that were formerly discussed at RHIC, such as Mach-cones and soft ridges, have now found a simpler explanation. By selecting events with larger than average higher-order Fourier coefficients, it is possible to select and study certain initial-state configurations. “Event shape engineering” has therefore been born.

As discussed above, using hydrodynamical models, the basic parameters of the medium can be extrapolated in a continuous manner between the energies of RHIC and the LHC and they turn out to show a moderate increase. Although this might not seem to be a spectacular discovery, its importance for the field should not be underestimated: it marks a transition from data-driven discoveries to precision measurements that constrain model parameters.

Hard probes

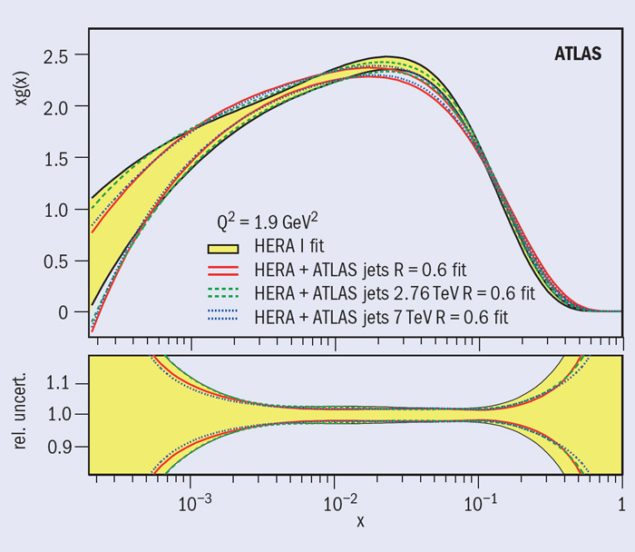

What is new at the LHC is the large cross-section (several orders of magnitude higher with respect to RHIC) for so-called hard processes, e.g. the production of jets and heavy flavour. In these cases, the production is decoupled from the formation of the medium and, therefore, as quasi-external probes traversing the medium they can be used for tomography measurements – in effect, to see inside the medium. Furthermore, they are well calibrated probes because their production rates in the absence of the medium can be calculated using perturbative QCD. Hard probes open a new window for the study of the QGP through high-pT parton and heavy-quark transport coefficients, as well as the possible thermalization and recombination of heavy quarks.

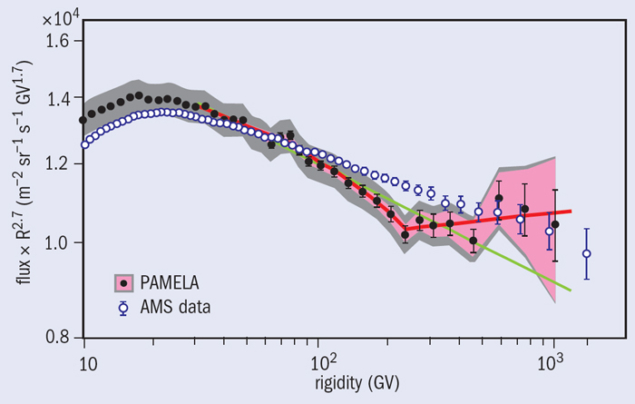

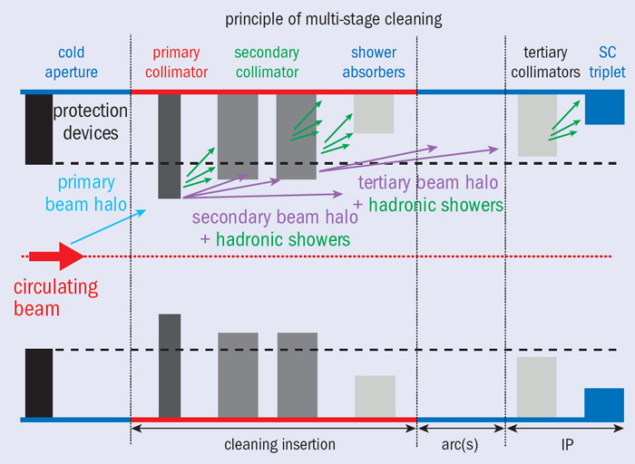

High-pT partons are produced in hard interactions at the early stage of heavy-ion collisions. They are ideal probes because they traverse the medium and their yield and kinematics are influenced by its presence. The ability of a parton to transfer momentum to the medium is particularly interesting. Described by a transport parameter, it is related to the density of colour charges and the coupling of the medium: the stronger the coupling, the larger the transport coefficient and, therefore, the modification of the probe. Energy loss of partons in the medium is caused by multiple elastic-scattering and gluon radiation (jet quenching). This was first observed at RHIC in the suppression of high-pT particles with respect to the appropriately scaled proton–proton (pp) and proton–nucleus (pA) references (the nuclear-modification factor RAA) and from the disappearance of back-to-back particle correlations.
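The nuclear-modification factor compares the yield per nucleus–nucleus event with the pp yield scaled by the average number of binary nucleon–nucleon collisions, ⟨Ncoll⟩: RAA = (dN_AA/dpT) / (⟨Ncoll⟩ dN_pp/dpT). A value of one means no modification and values below one mean suppression. The sketch below evaluates it for a single pT bin; the function name and all numbers are hypothetical and chosen only to illustrate the arithmetic.

```python
def nuclear_modification_factor(yield_aa, n_coll, yield_pp):
    """R_AA = per-event AA yield / (<N_coll> * per-event pp yield) in one pT bin."""
    if n_coll <= 0 or yield_pp <= 0:
        raise ValueError("n_coll and yield_pp must be positive")
    return yield_aa / (n_coll * yield_pp)


# Hypothetical inputs for one pT bin (illustration only, not measured values).
r_aa = nuclear_modification_factor(yield_aa=0.45, n_coll=1500.0, yield_pp=1.0e-3)
print(f"R_AA = {r_aa:.2f}")
```

In practice ⟨Ncoll⟩ is usually taken from a Glauber-model calculation for each centrality class, and its uncertainty enters the systematic error on RAA.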

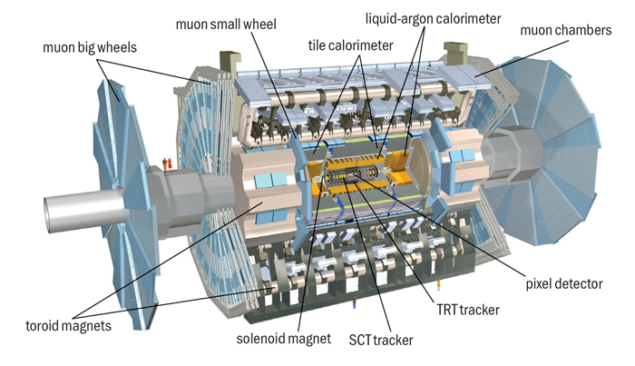

At the LHC, rates are high at transverse energies where jets can be reconstructed above the fluctuations of the background-energy contribution from the underlying event. In particular, for jet transverse energies ET > 100 GeV, the influence of the underlying event is relatively small, allowing robust jet-measurements. The ATLAS and CMS collaborations – whose detectors have almost complete calorimetric coverage – were the first to report direct observation of jet-quenching via the di-jet energy imbalance at the LHC (CERN Courier January/February 2011 p6 and March 2011 p6). However, the measurements of the suppression of inclusive single-particle production show that quenching effects are strongest for intermediate transverse momenta, 4 < pT < 20 GeV/c, corresponding to parton pT values in the range around 6–30 GeV/c (CERN Courier June 2011 p17).

ALICE can approach this region – while introducing the smallest possible bias on the jet fragmentation – by measuring jet fragments down to low pT (pT > 150 MeV/c). Although in jet reconstruction more of the original parton energy is recovered than with single particles, for the most central collisions the observed single inclusive jet suppression is similar to the one for single hadrons, with RAA^jet = 0.2–0.4 in the range 30 < pT^jet < 100 GeV/c. Furthermore, no indication of energy redistribution within experimental uncertainties is observed from the ratios of jet yields with different cone sizes (CERN Courier June 2013 p8).

The suppression patterns are qualitatively and – to some extent – quantitatively similar for single hadrons and jets. This can be best explained by partonic energy loss through radiation mainly outside the jet cone that is used by the jet reconstruction algorithm and in-vacuum (pp-like) fragmentation of the remnant parton. Before the LHC start-up, it was widely believed that jets are more robust objects, i.e. jet quenching would soften their fragmentation without changing the total energy inside the cone. The study of jet fragmentation would have allowed insight into the details of the energy-loss mechanism. The latter is still true, but the energy lost by the partons has to be searched for at large distances from the jet axis, where the background from the underlying event is large. Detailed studies of the momentum and angular distribution of the radiated energy – which require future higher-statistics jet samples – will provide more detailed information on the nature of the energy-loss mechanisms.

Heavy versus light

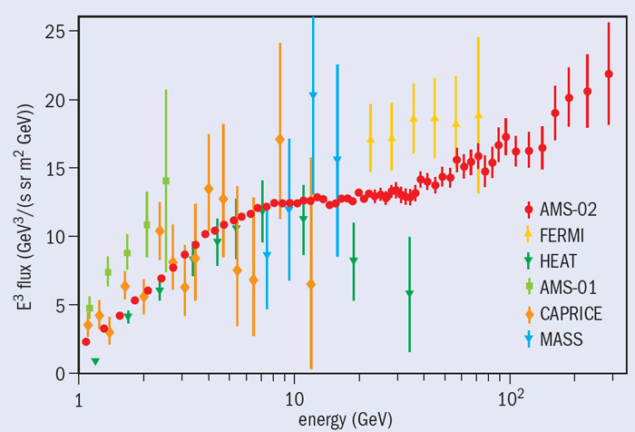

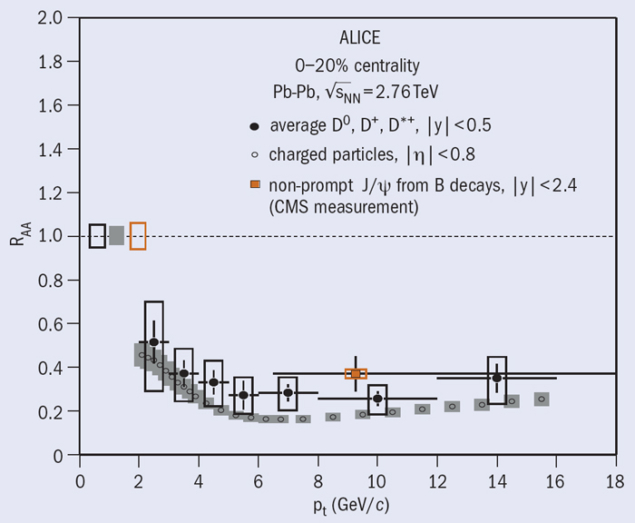

At the LHC, high-pT hadron-production is dominated by gluon fragmentation. In QCD, quarks have a smaller colour-coupling factor with respect to gluons, so the energy loss for quarks is expected to be smaller than for gluons. In addition, for heavy quarks with pT < mq, small-angle gluon radiation is reduced by the so-called “dead-cone effect”. This will reduce further the effect of the medium. ALICE has measured the nuclear-modification factor for the charm mesons D⁰, D⁺ and D*⁺ for 2 < pT < 16 GeV/c (figure 3). For central PbPb collisions, a strong suppression attributed to in-medium energy loss is observed, with a nuclear-modification factor of 0.2–0.34 in the range pT > 5 GeV/c. For lower transverse momenta there is a tendency for the suppression of D⁰ mesons to decrease (CERN Courier June 2012 p15, January/February 2013 p7).
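Both expectations can be put into numbers. In QCD with three colours the colour factors are CF = 4/3 for quarks and CA = 3 for gluons, and in the dead-cone picture gluon radiation from a heavy quark of mass m and energy E is suppressed at angles below θ0 = m/E. The sketch below evaluates both; the quark masses and the 10 GeV energy are approximate values assumed for illustration, and the function name is hypothetical.

```python
from fractions import Fraction

# SU(3) quadratic Casimir factors: C_F for quarks, C_A for gluons.
N_COLOURS = 3
C_F = Fraction(N_COLOURS**2 - 1, 2 * N_COLOURS)   # 4/3
C_A = Fraction(N_COLOURS)                          # 3


def dead_cone_angle(quark_mass, quark_energy):
    """Angle (rad) below which gluon radiation is suppressed: theta_0 = m / E."""
    if quark_energy <= 0:
        raise ValueError("quark energy must be positive")
    return quark_mass / quark_energy


print(f"C_A / C_F = {C_A / C_F}")   # gluon-to-quark colour-factor ratio
# Approximate quark masses in GeV, assumed for illustration.
for name, mass in (("charm", 1.3), ("beauty", 4.8)):
    print(name, round(dead_cone_angle(mass, 10.0), 3))
```

On colour factors alone, a gluon would be expected to lose roughly 9/4 times as much energy by radiation as a quark, and the dead cone is wider for beauty than for charm at the same energy.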

The suppression is almost as large as that observed for charged particles that are dominated by pions from gluon fragmentation. This observation favours models that explain heavy-quark energy loss by additional mechanisms, such as in-medium hadron formation and dissociation or partial thermalization of heavy quarks through re-scatterings and in-medium resonant interactions. Such a scenario is further corroborated by measurement of the D-meson elliptic-flow coefficient, v2. For semi-central PbPb collisions, a positive flow is observed in the range 2 < pT < 6 GeV/c, indicating that the interactions with the medium transfer information on the azimuthal anisotropy of the system to charm quarks.

The suppression of the J/ψ and other charmonia states, as a result of short-range screening of the strong interaction, was one of the first signals predicted for QGP formation and has been observed both at CERN’s Super Proton Synchrotron and at RHIC. At the LHC, heavy quarks are abundantly produced – about 100 charm–anticharm (cc̄) pairs per event in central PbPb collisions. If these charm quarks roam freely in the medium and the charm density is high enough, they can recombine to form quarkonia states, competing with the suppression mechanism.

Indeed, in the most central collisions a lower J/ψ suppression than at RHIC is observed. Also, a smaller suppression is observed at low pT compared with high pT and it is lower at mid-rapidity than in the forward direction (CERN Courier March 2012 p14). In line with regeneration models, suppression is reduced in regions where the charm-quark density is highest. In semi-central PbPb collisions, ALICE sees a hint of nonzero elliptic flow of the J/ψ. This also favours a scenario in which a significant fraction of J/ψ particles are produced by regeneration. The significance of these results will be improved with future heavy-ion data-taking.

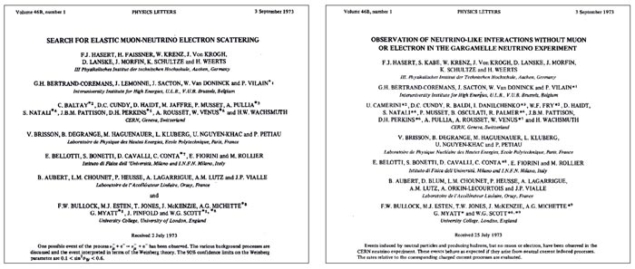

Surprises from pPb reference data

The analysis of pPb collisions allows the ALICE collaboration to study initial and final state effects in cold nuclear matter, to establish a baseline for the interpretation of the heavy-ion results. However, the results from the data taken in the pilot run have already shown that pPb collisions are also good for surprises. First, the CMS collaboration observed from the analysis of two-particle angular correlations in high-multiplicity pPb collisions the presence of a ridge structure that is elongated in the pseudo-rapidity direction (CERN Courier January/February 2013 p9). Using low-multiplicity events as a reference, the ALICE and ATLAS collaborations found that this ridge-structure actually has a perfectly symmetrical counterpart, back-to-back in azimuth (CERN Courier March 2013 p7). The amplitude and shape of the observed double-ridge structure are similar to the modulations that are caused by the elliptic flow that is observed in PbPb collisions, therefore indicating collective behaviour in pPb. Other models attribute the effect to gluon saturation in the lead nucleus or to parton-induced final-state effects. These effects and their similarity to PbPb phenomena are intriguing. Their further investigation and theoretical interpretation will shed new light on the properties of matter at high temperatures and densities.

If pPb collisions do produce a QGP-like medium, its extension is expected to be much smaller than the one produced in PbPb collisions. However, the relevant quantity is not size but the ratio of the system size to the mean-free path of partons. If it is high enough, hydrodynamic models can explain the observed phenomena. If the observations can be explained by coherent effects between strings formed in different proton–nucleon scatterings, we must understand to what extent these effects contribute also to PbPb collisions. While the LHC takes a pause, the ALICE collaboration is looking forward to more exciting results from the existing data.