Image credit: Sophia Bennett/CERN.

When I meet Jack in his office in Building 2, he has just returned from a “splendid” birthday celebration – a classical-music concert “with a lady conductor”, he is quick to add. It had been organised by people from the town of his birth, Bad Kissingen in southern Germany, and was held at the local gymnasium that bears his name. Steinberger’s memories of the town are those of a 13-year-old child in pre-war Germany during the Nazi election propaganda. “Hitler was psychopathic when it came to Jews,” he says. “In making me leave, however, he did me a great favour because I had a wonderful education in America.”

Talking to this extraordinary man and physicist – who is too modest to dwell on the 1962 discovery of the muon neutrino that won him, Leon Lederman and Melvin Schwartz the 1988 Nobel Prize in Physics – is like taking a trip back in the history of particle physics. With the help of a scholarship from the University of Chicago, Steinberger completed a first degree in chemistry in 1942. He owes his first contact with physics to Ed Purcell and Julian Schwinger, with whom he worked at the MIT radiation laboratory where he had been assigned a military role in 1941 – the year that Japan attacked the US at Pearl Harbour.

“We were making bombsights for bombers, something that could be mounted on airplanes and could see the ground with radar and so you could find military targets,” he explains. “The bombsight we succeeded in developing had a very limited accuracy and you couldn’t see a military target, but you could see cities.” With a heavy heart, Steinberger adds that the radar system was used in the infamous Dresden bombing. “That was my contribution during the war,” he states flatly.

The Fermi years

When the war ended, Steinberger went back to Chicago with the intention of completing a thesis in theoretical physics. Then he met Enrico Fermi. “Fermi was the biggest luck I had in my life!” he exclaims, with a spark in his striking blue eyes. “He asked me to look into a problem raised by an experiment by Rossi and Sands on stopping cosmic-ray muons, and suggested that I do an experiment instead of waiting for a theoretical topic to surface,” recalls Steinberger. At the time, most experiments required just a handful of Geiger counters and a detector about 20 cm long, he says. “The experiment I wanted to do required 80 of those and was 50 cm long, so it was not trivial to build it.”

It was the time before computers, when vacuum tubes were the height of technology, and Fermi had identified the resources required in the physics department of the University of Chicago. Once the experiment was up and running, however, Fermi suggested it would produce results more quickly if it were located on top of a mountain, where there would be more mesons from cosmic rays. “He found a young driver – I didn’t know how to drive, it was the beginning of cars – who took me to the only mountain in the US with a road to the top,” says Steinberger. “It was almost as high as Mt Blanc, and I could do the experiment faster by being on top of that thing.”

The experiment showed that the energy spectrum of the electron in certain meson decays is continuous. It suggested that the muon undergoes a three-body decay, probably into an electron and two neutrinos, and helped to lay the experimental foundation for the concept of a universal weak interaction. What followed is history, leading to the discovery of the muon neutrino (see “DUMAND and the origins of large neutrino detectors”). “It is likely that we had no prejudice on the question of whether the neutrino in muon decay is the same as the one in beta decay.”

Apart from the discovery of the muon neutrino, Steinberger’s pioneering work in physics spans 40 years of the history of electroweak theory and experiment. At the turn of each decade, Steinberger was the first user of the latest device available to experimentalists, starting with McMillan’s electron synchrotron when it had just been completed in 1949, and Columbia’s 380 MeV cyclotron in 1950. In 1954, he published the first bubble-chamber paper with Leitner, Samios and Schwartz, making a substantial contribution to the technique itself and achieving important results on the properties of the new unstable (strange) particles.

Lasting legacy

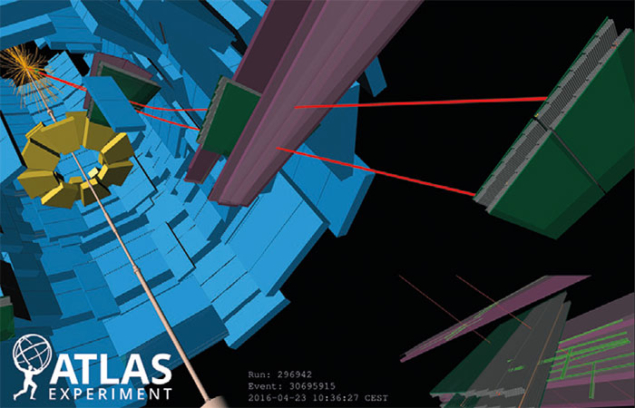

What brought Steinberger to CERN in 1968 was the availability of Charpak’s wire chamber, which he realised was a much more powerful way to study K0 decays – to which he says he had “become addicted”. Then he conceived and led the ALEPH experiment at the Large Electron–Positron (LEP) collider. The results of this and the other LEP experiments, he says, “dominated CERN physics, perhaps the world’s, for a dozen or more years, with crucial precise measurements that confirmed the Standard Model of the unified electroweak and strong interactions”.

These days, Jack still comes to CERN with the same curiosity for the field that he always had. He says he is “trying to learn astrophysics, in spite of my mental deficiencies”, and thinks that the most interesting question today is dark matter. “You have a Standard Model which does not predict everything and it does not predict dark matter, but you can conceive of mechanisms for making dark matter in the Standard Model,” he says. “You don’t know if you really understand it, but you can imagine it. And I am not the only one who doesn’t know.”