By Luca Lista

Springer

Also available at the CERN bookshop

Particle-physics experiments are very expensive, not only in terms of the cost of building accelerators and detectors, but also in the time that physicists and engineers spend designing, building and running them. Because the statistical analysis of the resulting data is relatively inexpensive, it is worth using it optimally to extract the maximum information about the topic of interest, whilst avoiding claiming more than is justified. Thus, lectures on statistics have become regular in graduate courses, and workshops have been devoted to statistical issues in high-energy physics analysis. This also explains the number of books written by particle physicists on the practical applications of statistics to their field.

This latest book by Lista is based on the lectures that he has given at his home university in Naples, and elsewhere. As part of the Springer series of “Lecture Notes in Particle Physics”, it has the attractive feature of being short – a mere 172 pages. The disadvantage of this is that some of the explanations of statistical concepts would have benefited from a somewhat fuller treatment.

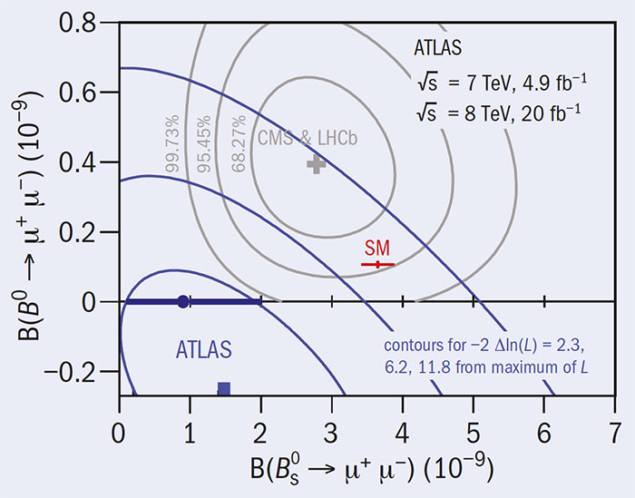

The range of topics covered is remarkably wide. The book starts with definitions of probability, while the final chapter is about discovery criteria and upper limits in searches for new phenomena, and benefits from Lista’s direct involvement in one of the large experiments at CERN’s LHC. It mentions such topics as the Feldman–Cousins method for confidence intervals, the CLs approach for upper limits, and the “look elsewhere effect”, which is relevant for discovery claims. However, there seems to be no mention of the fact that a motivation for the Feldman–Cousins method was to avoid empty intervals; the CLs method was introduced to protect against the possibility of excluding the signal-plus-background hypothesis when the analysis had little or no sensitivity to the presence or absence of the signal.

The book has no index, nor problems for readers to solve. The latter is unfortunate. In common with learning to swim, play the violin and many other activities, it is virtually impossible to become proficient at statistics by merely reading about it: some practical exercise is also required. However, many worked examples are included.

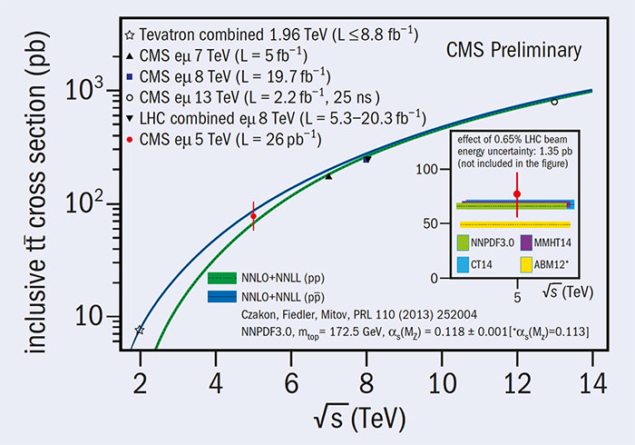

There are several minor typos that the editorial system failed to notice. In addition, figure 2.17, which compares the uncertainty region for a pair of parameters with the uncertainties in each of them separately, is confusing.

There are places where I disagree with Lista’s emphasis (although statistics is a subject that often does produce interesting discussions). For example, Lista claims it is counter-intuitive that, for a given observed number of events, an experiment that has a larger than expected number of background events (b) provides a tighter upper limit than one with a smaller background (i.e. a better experiment). However, if there are 10 observed events, it is reasonable that the upper limit on any possible signal is better if b = 10 than if b = 0. What is true is that the expected limit is better for the experiment with smaller backgrounds.
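The arithmetic behind this point can be sketched numerically. The snippet below is only an illustration under stated assumptions, not the procedure used in the book: it assumes a simple counting experiment, a background b known exactly, a 90% confidence level, and the classical construction in which the limit on the total mean is found from the Poisson probability of observing at most n events and b is then subtracted. The function names are hypothetical.

```python
import math


def poisson_cdf(n, mu):
    """P(N <= n) for a Poisson variable with mean mu."""
    return sum(math.exp(-mu) * mu**k / math.factorial(k) for k in range(n + 1))


def classical_upper_limit(n_obs, b, cl=0.90):
    """Upper limit on the signal s, with the background b assumed known exactly.

    Solves P(N <= n_obs | s + b) = 1 - cl for the total mean by bisection,
    then subtracts b. The result can be negative when b is large.
    """
    alpha = 1.0 - cl
    lo, hi = 0.0, n_obs + 10.0 * math.sqrt(n_obs + 1.0) + 10.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if poisson_cdf(n_obs, mid) > alpha:
            lo = mid  # total mean still too small: move the lower bracket up
        else:
            hi = mid
    return 0.5 * (lo + hi) - b


def expected_upper_limit(b, cl=0.90, n_max=60):
    """Average limit over background-only outcomes n ~ Poisson(b)."""
    return sum(
        math.exp(-b) * b**n / math.factorial(n) * classical_upper_limit(n, b, cl)
        for n in range(n_max + 1)
    )


for b in (0.0, 10.0):
    print(f"b = {b:4.1f}: observed limit for n = 10 is "
          f"{classical_upper_limit(10, b):.2f}, "
          f"expected limit is {expected_upper_limit(b):.2f}")
```

With 10 observed events, the limit on the signal for b = 10 is lower than for b = 0 by exactly 10 events in this construction, whereas the limit averaged over background-only outcomes is lower for b = 0. The possibility of a negative result for large b is the empty-interval problem that the Feldman–Cousins method was designed to avoid.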

Finally, the last three chapters could be useful to graduate students and postdocs entering the exciting field of searching for signs of new physics in high-energy or non-accelerator experiments, provided that they have other resources to expand on some of Lista’s shorter explanations.