Faced with the difficulty of doing exact calculations, theorists are turning to approximation techniques to understand and predict what happens at the quark level.

It follows from the underlying principles of quantum mechanics that the investigation of the structure of matter at progressively smaller scales demands ever-increasing effort and ingenuity in constructing new accelerators.

As these more powerful machines come into operation, it becomes increasingly important to ascertain whether any deviation from theoretical predictions is the result of new physics or is due to extra (non-perturbative) effects within our current understanding – the Standard Model. Confronted with the difficulty of doing precise calculations, the lattice approach to quantum field theory attempts to provide a decisive test by replacing the space-time continuum of nature with a discrete lattice of points.

While this is necessarily an approximation, it is less drastic than perturbation theory, which keeps only the first few terms of a series expansion in the coupling strength. Moreover, the lattice approximation can often be removed at the end in a controlled manner, by repeating the calculation at successively smaller lattice spacings and extrapolating to the continuum. However, even with space-time reduced to a finite grid of points, the lattice approach still needs the power of the world's largest supercomputers to solve the complicated equations describing elementary particle interactions.
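The idea of removing the lattice approximation in a controlled way can be illustrated with a toy case far simpler than QCD. The sketch below is a minimal illustration that assumes a free field: on a lattice of spacing a, a plane wave of momentum p sees the nearest-neighbour lattice Laplacian as (2/a)²sin²(pa/2) instead of the continuum p², a discretization error of order a². Computing this at several spacings and fitting c0 + c1·a² recovers the continuum value. The function names and the chosen spacings are illustrative assumptions, not taken from any collaboration's code.

```python
import numpy as np


def lattice_momentum_squared(p: float, a: float) -> float:
    """Eigenvalue of the (negative) nearest-neighbour lattice Laplacian
    for a plane wave of momentum p on a 1D lattice of spacing a.
    Tends to the continuum value p**2 as a -> 0, with O(a**2) corrections."""
    return float((2.0 / a * np.sin(p * a / 2.0)) ** 2)


def continuum_extrapolate(spacings, values) -> float:
    """Least-squares fit of values = c0 + c1 * a**2 and return c0,
    the estimate of the a -> 0 (continuum) limit.
    Toy model only: real lattice QCD extrapolations also handle
    statistical errors and possibly higher-order terms."""
    a_squared = np.asarray(spacings, dtype=float) ** 2
    slope, intercept = np.polyfit(a_squared, np.asarray(values, dtype=float), 1)
    return float(intercept)


if __name__ == "__main__":
    p = 1.0                              # continuum answer is p**2 = 1.0
    spacings = [0.4, 0.3, 0.2, 0.1]      # hypothetical lattice spacings
    values = [lattice_momentum_squared(p, a) for a in spacings]
    for a, v in zip(spacings, values):
        print(f"a = {a:.1f}: lattice value = {v:.6f}")
    print("extrapolated to a = 0:", continuum_extrapolate(spacings, values))
```

The extrapolated value lies much closer to 1.0 than the result at the finest spacing alone, which is the sense in which the lattice error can be controlled rather than merely tolerated.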

Berlin workshop

A recent workshop on High Performance Computing in Lattice Field Theory held at DESY Zeuthen, near Berlin, looked at the future of high-performance computing within the European lattice community. The workshop was organized by DESY and the John von Neumann Institute for Computing (NIC).

NIC is a joint enterprise between DESY and the Jülich research centre. Its elementary particle research group moved to Zeuthen on 1 October 2000 and will boost the already existing lattice gauge theory effort in Zeuthen. Although the lattice physics community in Europe is split into several groups, this arrangement fortunately does not prevent subsets of these groups from working together on particular problems.

Physics potential

The workshop originated from a recommendation by a working panel set up by the European Committee for Future Accelerators (ECFA) to examine the high-performance computing needs of lattice quantum chromodynamics (QCD, the field theory of quarks and gluons; see Where did the ‘No-go’ theorems go?). The panel found that the physics potential of lattice field theory is within the reach of multiTeraflop machines and recommended that such machines be developed. It also recommended that European activities be coordinated wherever possible.

Organized locally at Zeuthen by K Jansen (chair), F Jegerlehner, G Schierholz, H Simma and R Sommer, the workshop provided ample time to discuss this report. All members of the panel were present. The ECFA panel’s chairman, C Sachrajda of Southampton, gave an overview of the report, emphasizing again the main results and recommendations. The members of the ECFA panel then presented updated reports on the topics discussed in the ECFA report. These presentations laid the ground for discussions (led by K Jansen and C Sachrajda) that were lively and to some extent controversial. However, the emerging sentiment was a broad overall agreement with the ECFA panel’s conclusions.

Interpreting all of the data that result from experiments is an increasing challenge for the physics community, but lattice methods can make this process considerably easier. The presentations made by the major European lattice groups at the workshop showed that the lattice community is meeting this challenge head-on.

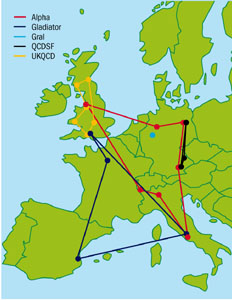

On behalf of the UK QCD group, R Kenway of Edinburgh dealt with a variety of aspects of QCD, which ranged from the particle spectrum to decay form factors.

Similar questions were addressed by G Schierholz of the QCDSF (QCD structure functions) group, located mainly in Zeuthen, who added a touch of colour by looking at structure functions on the lattice. R Sommer of the ALPHA collaboration, also based at Zeuthen, concentrated on the variation ("running") with the energy scale of the quark-gluon coupling strength, αs (hence the collaboration's name), and of the quark masses.

The chosen topic of the APE group (named after its computer) was weak decay amplitudes, presented by F Rapuano of INFN/Rome. This difficult problem has gained fresh impetus from recent proposals and developments. T Lippert of the GRAL (going realistic and light) collaboration from the University of Wuppertal described the group's attempts to explore the limit of small quark masses.

The activities of these collaborations are to a large extent coordinated by the recently launched European Network on Hadron Phenomenology from Lattice QCD.

New states of matter

Another interesting subject was explored by the EU Network for Finite Temperature Phase Transitions in Particle Physics, which is now tackling questions concerning new states of matter. These calculations are key to interpreting and guiding present and future experiments at Brookhaven’s RHIC heavy ion collider and at CERN. F Karsch and B Petersson, both from Bielefeld, presented the prospects.

The various presentations had one thing in common: all of the groups are starting to work with fully dynamical quarks and are thus going beyond the popular "quenched" approximation, which neglects the effects of virtual quark-antiquark pairs (sea quarks).

Although this approximation works well in general, there are small differences between experiment and theory. To clarify whether these differences are signs of new physics or simply an artefact of the quenched approximation, lattice physicists now have to find additional computer power to simulate dynamical quarks. This is a quantum jump for the lattice community, as simulations with dynamical quarks are at least an order of magnitude more computationally demanding.

This means that computers with multiTeraflop capacity will be required. All groups expressed their need for such computer resources in the coming years – only then can the European lattice community remain competitive with groups in Japan and the US.

Two projects that aim to realize this ambitious goal were presented at the workshop: the apeNEXT project (presented by R Tripiccione, Pisa), which is a joint collaboration of INFN in Italy with DESY and NIC in Germany and the Université Paris-Sud in France; and the US-based QCDOC (QCD on a chip) project.

Ambitious computer projects

QCDOC and apeNEXT rely to a significant extent on custom-designed chips and networks, with QCDOC drawing on a link with industry (IBM), to build machines with a performance of about 10 Tflop/s. Both projects are based on massively parallel architectures in which thousands of processors are linked via a fast network. Both are well under way, and there is strong optimism that 10 Tflop/s machines will be built by 2003. Apart from these big machines, the capabilities of lattice gauge theory machines based on PC clusters were discussed by K Schilling of Wuppertal and Z Fodor of Eötvös University, Budapest.

The calculations done using lattice techniques not only provide results that are interesting from a phenomenological point of view, but are also of great importance for our understanding of quantum field theories in general. This aspect of lattice field theory was covered by a discussion on lattice chiral symmetry involving L Lellouch of Marseille, T Blum of Brookhaven and F Niedermayer of Bern. The structure of the QCD vacuum was covered by A Di Giacomo of Pisa.

There is great excitement in the lattice community at the prospect that the coming years, with the advent of the next generation of massively parallel systems, will bring new and fruitful results.

However, the proposed machines in the multiTeraflop range can only be an interim step. They will not be sufficient for generating higher-precision data for many observables. It is therefore not difficult to predict a future workshop in which lattice physicists will call for the subsequent generation of machines to reach the 100 Tflop range – a truly ambitious enterprise.