Celebrating anniversaries of three Nobel prizes for work that established a key element of the Standard Model.

Electromagnetism and the weak force might appear to have little to do with each other. Electromagnetism is our everyday world – it holds atoms together and produces light, while the weak force was for a long time known only for the relatively obscure phenomenon of beta-decay radioactivity.

The successful unification of these two apparently highly dissimilar forces is a significant milestone in the constant quest to describe as much as possible of the world around us from a minimal set of initial ideas.

“At first sight there may be little or no similarity between electromagnetic effects and the phenomena associated with weak interactions,” wrote Sheldon Glashow in 1960. “Yet remarkable parallels emerge…”

Both kinds of interactions affect leptons and hadrons; both appear to be “vector” interactions brought about by the exchange of particles carrying unit spin and negative parity; both have their own universal coupling constant, which governs the strength of the interactions.

These vital clues led Glashow to propose an ambitious theory that attempted to unify the two forces. However, there was one big difficulty, which Glashow admitted had to be put to one side. While electromagnetic effects were due to the exchange of massless photons (electromagnetic radiation), the carrier of weak interactions had to be fairly heavy to account for the very short range of the weak force. The initial version of the theory could find no neat way of giving the weak carrier enough mass.

Then came the development of theories using “spontaneous symmetry breaking”, in which the underlying equations possess a symmetry that the lowest-energy state of the system does not share. An example of such a symmetry breaking is the imposition of traffic rules (drive on the right, overtake on the left) on a road network where in principle anyone could go anywhere. Another example is the formation of crystals in a freezing liquid.

These symmetry-breaking theories at first introduced massless particles (now known as Goldstone bosons) which were no use to anybody, but soon the so-called “Higgs mechanism” was discovered, which gives the carrier particles mass. This was the vital development that enabled Steven Weinberg and Abdus Salam, working independently, to formulate their unified “electroweak” theory. One problem was that nobody knew how to handle calculations in a consistent way…

…It was the work of Gerardus ’t Hooft and Martinus Veltman that put this unification on the map, by showing that it was a viable theory capable of making predictions.

Field theories have a habit of throwing up infinities that at first sight make sensible calculations difficult. This had been a problem with the early forms of quantum electrodynamics and was the despair of a whole generation of physicists. However, its reformulation by Richard Feynman, Julian Schwinger and Sin-Itiro Tomonaga (Nobel prizewinners in 1965) showed how these infinities could be wiped clean by redefining quantities like electric charge.

Each infinity had a clear origin, a specific Feynman diagram, the skeletal legs of which denote the particles involved. However, the new form of quantum electrodynamics showed that the infinities can be made to disappear by including other Feynman diagrams, so that two infinities cancel each other out. This trick, difficult to accept at first, works very well, and renormalization then became a way of life in field theory. Quantum electrodynamics became a powerful calculator.

For such a field theory to be viable, it has to be “renormalizable”. The synthesis of weak interactions and electromagnetism, developed by Glashow, Weinberg and Salam – and incorporating the now famous “Higgs” symmetry-breaking mechanism – at first sight did not appear to be renormalizable. With no assurance that meaningful calculations were possible, physicists attached little importance to the development. It had not yet warranted its “electroweak” unification label.

The model was an example of the then unusual “non-Abelian” theory, in which the end result of two symmetry operations depends on the order in which they are applied. Until then, the field theories in practical use, such as quantum electrodynamics, had been Abelian, where this order does not matter.
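To make “order matters” concrete, here is a toy sketch (an illustration, not part of the original article) that compares two phase rotations of the kind found in electromagnetism, the Abelian group U(1), with two rotations from SU(2), the non-Abelian group that acts on weak isospin in the electroweak model. It assumes the standard Pauli-matrix representation of SU(2); the helper names and the angles 0.7 and 1.3 are arbitrary choices made for illustration.

```python
import cmath
import math

def matmul(a, b):
    """Multiply two 2x2 complex matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def close(a, b, tol=1e-12):
    """True if two 2x2 matrices agree entry by entry within tol."""
    return all(abs(a[i][j] - b[i][j]) < tol for i in range(2) for j in range(2))

def u1(theta):
    """Abelian U(1) phase e^{i theta}, applied equally to both components."""
    p = cmath.exp(1j * theta)
    return [[p, 0], [0, p]]

def su2_x(theta):
    """Non-Abelian SU(2) rotation exp(-i theta sigma_x / 2)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -1j * s], [-1j * s, c]]

def su2_z(theta):
    """Non-Abelian SU(2) rotation exp(-i theta sigma_z / 2)."""
    return [[cmath.exp(-1j * theta / 2), 0], [0, cmath.exp(1j * theta / 2)]]

# Arbitrary illustrative angles.
a, b = 0.7, 1.3

# Apply the two operations in both orders and compare the results.
abelian_commutes = close(matmul(u1(a), u1(b)), matmul(u1(b), u1(a)))
nonabelian_commutes = close(matmul(su2_x(a), su2_z(b)),
                            matmul(su2_z(b), su2_x(a)))

print(abelian_commutes)     # True: the order of U(1) phases is irrelevant
print(nonabelian_commutes)  # False: the order of SU(2) rotations matters
```

The two SU(2) products differ only in their off-diagonal entries, which is exactly the order dependence that made non-Abelian theories harder to renormalize.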

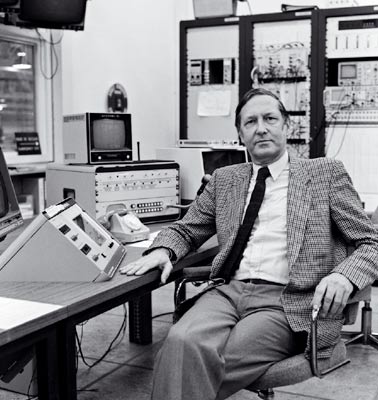

In the summer of 1970, ’t Hooft, at the time a student of Veltman in Utrecht, went to a physics meeting on the island of Corsica, where specialists were discussing the latest developments in renormalization theory. ’t Hooft asked them how these ideas should be applied to the new non-Abelian theories. The answer was: “If you are a student of Veltman, ask him!” The specialists knew that Veltman understood renormalization better than most other mortals, and had even developed a special computer program – Schoonschip – to evaluate all of the necessary complex field-theory contributions.

At first, ’t Hooft’s ambition was to develop a renormalized version of non-Abelian gauge theory that would work for the strong interactions that hold subnuclear particles together in the nucleus. However, Veltman believed that the weak interaction, which makes subnuclear particles decay, was more fertile ground. The result is physics history. The unified picture based on the Higgs mechanism is renormalizable. Physicists sat up and took notice.

One immediate prediction of the newly viable theory was the “neutral current”. Normally, the weak interactions involve a shuffling of electric charge, as in nuclear beta decay, where a neutron decays into a proton. With the neutral current, the weak force could also act without switching electric charges. Such a mechanism has to exist to ensure the renormalizability of the new theory. In 1973 the neutral current was discovered in the Gargamelle bubble chamber at CERN and the theory took another step forward.

The next milestone on the electroweak route was the discovery, at CERN’s proton–antiproton collider, of the W and Z particles, which carry the charged and neutral components of the weak force respectively. For this, Carlo Rubbia and Simon van der Meer were awarded the 1984 Nobel Prize for Physics…

…At CERN, the story began in 1968 when Simon van der Meer, inventor of the “magnetic horn” used in producing neutrino beams, had another brainwave. It was not until four years later that the idea (which van der Meer himself described as “far-fetched”) was demonstrated at the Intersecting Storage Rings. Tests continued at the ISR, but the idea – “stochastic beam cooling” – remained a curiosity of machine physics.

In the United States, Carlo Rubbia, together with David Cline of Wisconsin and Peter McIntyre, then at Harvard, put forward a bold idea to collide beams of matter and antimatter in existing large machines. At first, the proposal met with disfavour, and it was only when Rubbia brought the idea to CERN that he found sympathetic ears.

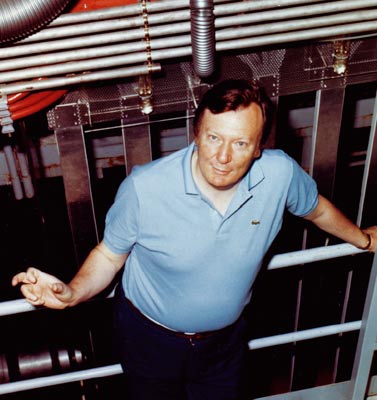

Stochastic cooling was the key, and experiments soon showed that antimatter beams could be made sufficiently intense for the scheme to work. With unprecedented boldness, CERN, led at the time by Leon Van Hove as research director-general and the late Sir John Adams as executive director-general, gave the green light.

At breathtaking speed, the ambitious project became a magnificently executed scheme for colliding beams of protons and antiprotons in the Super Proton Synchrotron, with the collisions monitored by sophisticated large detectors. The saga was chronicled in the special November 1983 issue of the CERN Courier, with articles describing the development of the electroweak theory, the accelerator physics that made the project possible and the big experiments that made the discoveries.

• Extracts from CERN Courier December 1979 pp395–397, December 1984 pp419–421 and November 1999 p5.