Astronomers, cosmologists, particle physicists and statisticians came together at SLAC to learn from each other about different aspects of statistical analysis techniques. Glen Cowan reports.

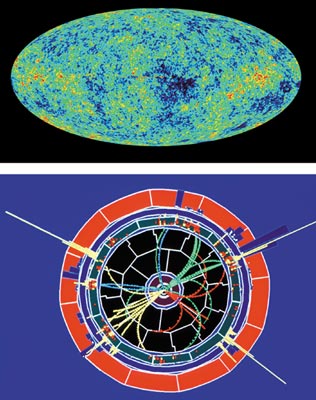

Given the impressive price tags of particle accelerators and telescopes, scientists are keen to extract the most from their hard-won data. In response to this, statistical analysis techniques have continued to grow in sophistication. To discuss recent developments in the field, 124 particle physicists, cosmologists, astronomers and statisticians met last September at SLAC for PHYSTAT 2003, the International Conference on Statistical Problems in Particle Physics, Astrophysics and Cosmology.

PHYSTAT 2003 was the most recent of several fruitful meetings devoted to data analysis, including the workshops on confidence limits at CERN in 2000 and Fermilab in 2001, and the Durham Conference on Advanced Statistical Techniques in Particle Physics in 2002. Unlike these, however, PHYSTAT 2003 brought high-energy physicists together with astronomers and cosmologists to share insights into what has become a crucial aspect of science for all of them. Conference organizer Louis Lyons of Oxford explained: “In particle physics, astrophysics and cosmology, people work with different tools – accelerators versus telescopes – but nonetheless a lot of the data analysis techniques are very similar.”

Another difference from previous meetings was the strong presence of the professional statistics community. Half of the invited talks were by statisticians and the keynote address, “Bayesians, frequentists and physicists”, was given by Brad Efron of Stanford, the president of the American Statistical Association.

Old and new directions

Driven by the need to understand advanced data analysis methods, many experimental collaborations have recently established statistics working groups to advise and guide their colleagues. Frank Porter of Caltech gave the view from the BaBar collaboration, which studies the decays of more than 100 million B mesons produced at the PEP-II B-factory at SLAC. Porter observed that “statistical sophistication in particle physics has grown significantly, not so much in the choice of methods, which are often long-established, but in the understanding attached to them.”

Multivariate methods emerged as one of the most widely discussed topics at the meeting. In a high-energy particle collision, for example, one measures a large number of quantities, such as the energies of all the particle tracks. In recent years physicists have become accustomed to using multivariate tools such as neural networks to reduce these inputs to a single usable number. It appears, however, that the statistics community has moved on: neural networks are no longer considered as “cutting edge” as other techniques, such as kernel density methods, decision trees and support vector machines. Statistician and physicist Jerome Friedman of Stanford reviewed recent advances in these areas, noting: “As with any endeavour, one must match the tool to the problem.”
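The sketch below makes “reducing many inputs to a single number” concrete. It uses Fisher’s linear discriminant, which is much older and simpler than the neural networks, kernel methods, decision trees and support vector machines discussed at the meeting. It was chosen only because it fits in a few lines. The sketch assumes two measured variables per event and labelled signal and background training samples. The function names and the toy data are hypothetical.

```python
from statistics import fmean

Event = tuple[float, float]  # two measured quantities per event (illustrative)


def fisher_weights(signal: list[Event], background: list[Event]) -> tuple[float, float]:
    """Fisher weights w = S_W^-1 (mu_s - mu_b) for events with two variables."""
    mu_s = [fmean(col) for col in zip(*signal)]
    mu_b = [fmean(col) for col in zip(*background)]
    # Within-class scatter matrix [[sxx, sxy], [sxy, syy]], summed over both classes.
    sxx = sxy = syy = 0.0
    for events, mu in ((signal, mu_s), (background, mu_b)):
        for x, y in events:
            dx, dy = x - mu[0], y - mu[1]
            sxx += dx * dx
            sxy += dx * dy
            syy += dy * dy
    det = sxx * syy - sxy * sxy
    if det == 0.0:
        raise ValueError("within-class scatter matrix is singular")
    # Apply the explicit 2x2 inverse to the difference of the class means.
    ddx, ddy = mu_s[0] - mu_b[0], mu_s[1] - mu_b[1]
    return ((syy * ddx - sxy * ddy) / det, (sxx * ddy - sxy * ddx) / det)


def fisher_score(event: Event, w: tuple[float, float]) -> float:
    """Project one event onto the discriminant axis: a single number per event."""
    return w[0] * event[0] + w[1] * event[1]


# Toy training samples (made-up numbers for illustration only).
signal = [(2.0, 1.0), (3.0, 1.5), (2.5, 2.0), (3.5, 2.5)]
background = [(0.0, 0.0), (1.0, 0.5), (0.5, 1.0), (1.5, 1.5)]
w = fisher_weights(signal, background)
print(f"w = ({w[0]:.3f}, {w[1]:.3f})")  # → w = (1.333, -0.667)
```

Events with larger scores are more signal-like. An analysis would then select events by placing a threshold on this single variable instead of on every input separately.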

The Reverend Bayes reloaded

The long-standing controversy between frequentist and Bayesian approaches continued to provoke debate. The main difference boils down to whether a probability is meant to reflect the frequency of an outcome in a repeatable experiment, or rather a subjective “degree of belief”. For example, a physicist could ask: “What is the probability that I will produce a Higgs boson if I collide two protons?” Theory can predict a frequentist answer, which can be tested by colliding many protons and counting the fraction of collisions in which a Higgs boson is produced. The same physicist could also ask: “What is the probability that the Higgs mechanism is true?” It either is or it isn’t, and this won’t change no matter how many protons we collide, so a frequentist probability here could only be 0 or 1. Nonetheless, a Bayesian or subjective probability can reflect our state of knowledge about nature.
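The two readings of probability can be contrasted in a few lines. This sketch is illustrative only.

- The relative-frequency function simulates a repeatable experiment with an assumed true probability.
- The Bayesian function applies Bayes’ theorem to update a degree of belief in a hypothesis H after seeing data D.

All function names and numbers are hypothetical and are not taken from any Higgs analysis.

```python
import random


def relative_frequency(p_true: float, n_trials: int, seed: int = 2003) -> float:
    """Frequentist view: probability as the long-run fraction of successes
    in repeated, identical trials (simulated here with a known p_true)."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n_trials) if rng.random() < p_true)
    return hits / n_trials


def posterior(prior_h: float, like_h: float, like_not_h: float) -> float:
    """Bayesian view: updated degree of belief P(H | D) via Bayes' theorem.

    prior_h: P(H), belief in hypothesis H before seeing the data
    like_h: P(D | H), probability of the observed data if H is true
    like_not_h: P(D | not H), probability of the data if H is false
    """
    evidence = like_h * prior_h + like_not_h * (1.0 - prior_h)
    return like_h * prior_h / evidence


# The frequency estimate approaches p_true as the number of trials grows.
print(relative_frequency(0.3, 100_000))
# A 50:50 prior, with data four times likelier under H, gives a posterior of 0.8.
print(posterior(0.5, 0.8, 0.2))
```

The first quantity is only meaningful for something that can be repeated. The second can be assigned to a single hypothesis that is either true or false.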

Physicist Fred James from CERN noted that one of the most widely used frequentist techniques, Pearson’s chi-square test, is used more than a million times per second in high-energy physics experiments, making it one of the most successful statistical devices in human history. On the other hand, Bayesian decision making is used implicitly by everyone every day. “Both of these elements must be part of every statistical philosophy,” said James, who teaches statistics using a “unified approach”. “If your principles restrict you to only one formalism, you won’t get the right answer to all your problems.” Members of the statistics community appeared to agree with this sentiment. Statistician Persi Diaconis from Stanford observed that in data analysis, as in other endeavours, it’s not as if you are restricted to using only a hammer or a saw – you’re allowed to use both.
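For readers outside particle physics: Pearson’s statistic compares the observed counts n_i in each histogram bin with the expected counts ν_i predicted by a model. It is defined as χ² = Σ (n_i − ν_i)²/ν_i. The minimal sketch below assumes the expected counts are fixed in advance rather than fitted. The function name and the numbers are hypothetical.

```python
def pearson_chi2(observed: list[float], expected: list[float]) -> float:
    """Pearson's chi-square statistic: sum over bins of (n_i - nu_i)^2 / nu_i."""
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same number of bins")
    if any(nu <= 0 for nu in expected):
        raise ValueError("expected counts must be positive")
    return sum((n - nu) ** 2 / nu for n, nu in zip(observed, expected))


# Hypothetical histogram: observed event counts and model predictions per bin.
observed = [12, 18, 25, 20, 15]
expected = [15.0, 20.0, 20.0, 20.0, 15.0]
chi2 = pearson_chi2(observed, expected)
print(f"chi2 = {chi2:.2f} for {len(observed)} bins")
```

Suppose the model is correct and the counts are not too small. Then χ² is expected to lie near the number of degrees of freedom, which here is the number of bins because nothing was fitted. A value far above it suggests the model describes the data poorly.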

Blinding bias

Many experimental groups have embraced “blind analyses”, whereby the physicists do not see the final result of a complicated measurement until the technique has been adequately optimized and checked. The idea is to avoid any bias in the final result from prior expectations. Aaron Roodman of SLAC, working with the BaBar experiment, explained that the collaboration had initial reservations about the blind analysis technique, but that it has become a standard method. “Results are presented and reviewed before they are unblinded, and changes are made while the analysis is still blind. Then when an internal review committee is satisfied, the result is unblinded, ultimately to be published.” Roodman noted that a similar practice in the medical community dates from the 17th century, when John Baptista van Helmont proposed a double-blind trial to determine the efficacy of bloodletting!
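One scheme often described for blind analyses hides the result behind a secret offset. Analysts can still study uncertainties and cross-checks, which do not depend on the offset, while the central value stays hidden until unblinding. The article does not say which scheme BaBar used, so the class below is a hypothetical sketch, not the collaboration’s software. The seed acts as the secret, and the offset range is an assumed parameter.

```python
import random


class HiddenOffsetBlinder:
    """Hypothetical blinding helper: adds a secret, reproducible offset."""

    def __init__(self, secret_seed: int, max_offset: float) -> None:
        # The offset is drawn once from the secret seed and never printed.
        self._offset = random.Random(secret_seed).uniform(-max_offset, max_offset)

    def blind(self, value: float) -> float:
        """Return the value the analysts are allowed to see while blind."""
        return value + self._offset

    def unblind(self, blinded_value: float) -> float:
        """Recover the true value once the review is complete."""
        return blinded_value - self._offset


blinder = HiddenOffsetBlinder(secret_seed=12345, max_offset=1.0)
seen = blinder.blind(0.42)  # central value shifted by an unknown amount
# Differences between blinded values (e.g. from systematic variations) are unaffected.
shift = blinder.blind(0.45) - blinder.blind(0.42)
print(round(shift, 6))
print(round(blinder.unblind(seen), 6))
```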

For the particle physicists, finding an appropriate recipe for the treatment of systematic errors emerged as one of those problems that will not go away. Because many measurements are now based on very large data samples, the “random” or “statistical” error in the result may become smaller than the uncertainty from systematic bias. Pekka Sinervo of Toronto noted that many of the procedures in use are handed down as a kind of “oral tradition”. He also pointed out that some systematic effects reflect a “paradigm uncertainty”, for which frequentist statistics offers no relevant interpretation.
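Simple arithmetic shows why large data samples bring systematics to the fore. The statistical error of an average over N events falls roughly as σ/√N, while a systematic bias does not shrink as more data are added. The sketch below assumes:

- independent events with a per-event spread σ;
- a fixed systematic uncertainty;
- combination of the two errors in quadrature, a common but not universal convention.

All names and numbers are hypothetical.

```python
import math


def statistical_error(sigma_per_event: float, n_events: int) -> float:
    """Standard error of a mean over n independent events."""
    return sigma_per_event / math.sqrt(n_events)


def total_error(sigma_per_event: float, n_events: int, systematic: float) -> float:
    """Statistical and systematic errors combined in quadrature (assumed independent)."""
    return math.hypot(statistical_error(sigma_per_event, n_events), systematic)


def crossover_events(sigma_per_event: float, systematic: float) -> float:
    """Sample size beyond which the statistical error is smaller than the systematic one."""
    return (sigma_per_event / systematic) ** 2


sigma, syst = 1.0, 0.001  # hypothetical per-event spread and systematic uncertainty
print(f"crossover at about {crossover_events(sigma, syst):.0f} events")
for n in (10_000, 1_000_000, 100_000_000):
    print(n, statistical_error(sigma, n), total_error(sigma, n, syst))
```

Beyond the crossover, collecting more data barely reduces the total error. That is why the treatment of systematics becomes the limiting factor.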

Systematic errors do not seem to receive comparable attention within the professional statistics community. John Rice of Berkeley noted in his conference summary: “Physicists are deeply concerned with systematic bias, much more so than is usual in statistics. In more typical statistical applications, bias is often swamped by variance.”
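Rice’s remark can be made precise with the standard decomposition of the mean squared error of an estimator θ̂ of a parameter θ into a squared bias and a variance. In the physicists’ language, the bias plays the role of a systematic error and the variance that of the statistical error. This mapping is an informal analogy rather than a definition.

```latex
\mathrm{MSE}(\hat\theta) = E\big[(\hat\theta - \theta)^2\big]
  = \underbrace{\big(E[\hat\theta] - \theta\big)^2}_{\text{bias}^2}
  + \underbrace{E\big[(\hat\theta - E[\hat\theta])^2\big]}_{\text{variance}}
```

For an average over N independent events the variance term typically falls as 1/N, while the bias term does not depend on N. With the very large samples of modern experiments the bias can therefore dominate. In many smaller-sample applications, by contrast, it is swamped by the variance.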

A PHYSTAT phrase book

A panel discussion led by Diaconis, Friedman, James and Tom Loredo of Cornell provided insight into how well the physicists, statisticians and astronomers succeeded in sharing their knowledge. Roger Barlow of Manchester gave an introductory talk on statistics in high-energy physics, and Eric Feigelson of Pennsylvania State gave one on statistics in astronomy. Helped by these talks, the participants largely overcame the language barriers. Nevertheless, many astronomers and statisticians puzzled over “cuts”, while physicists pondered “iid random variables” (independent and identically distributed).

Although the astronomers and physicists found much common ground, in a number of ways the approaches used by the two groups seemed to remain quite separate. John Rice noted in his summary, “High-energy physics relies on carefully designed experiments, and astronomy is necessarily observational. Formal inference thus plays a larger role in the former, and exploratory data analysis a larger role in the latter.” With respect to the development of new techniques, Rice voiced some concern. “The explosion of computing power, coupled with unfettered imagination, is leading to the degeneration of the stern discipline of statistics established by our forebears to a highly esoteric form of performance art.” However, he noted that “evolutionary pressures will lead to the extinction of the frail and the survival of the fittest techniques.”

The local organizing committee at SLAC was chaired by Richard Mount and included Arla LeCount, Joseph Perl and David Lee; the scientific committee was led by Louis Lyons. To the dismay of the organizers, four of the invited speakers were unable to attend owing to recent difficulties in obtaining US visas (CERN Courier November 2003 p50). A future meeting is planned for 2005, by which time it is hoped that the situation will have improved. The conference proceedings are now in preparation and will appear as a SLAC publication. More information and links to presentations can be found on the conference website at: www-conf.slac.stanford.edu/phystat2003.