Cosmologist and theoretical physicist Licia Verde discusses the current tension between early- and late-time measurements of the expansion rate of the universe.

Did you always want to be a cosmologist?

One day, around the time I started properly reading, somebody gave me a book about the sky, and I found it fascinating to think about what’s beyond the clouds and beyond where the planes and the birds fly. I didn’t know that you could actually make a living doing this kind of thing. At that age, you don’t know what a cosmologist is, unless you happen to meet one and ask what they do. You are just fascinated by questions like “how does it work?” and “how do you know?”.

Was there a point at which you decided to focus on theory?

Not really, and I still think I’m somewhat in-between, in the sense that I like to interpret data and am plugged in to observational collaborations. I try to make connections to what the data mean in light of theory. You could say that I am a theoretical experimentalist. I made a point of actually going to serve at a telescope a couple of times, but you wouldn’t want to trust me to handle all of the nitty-gritty detail, or to move the instrument around.

What are your research interests?

I have several different research projects, spanning large-scale structure, dark energy, inflation and the cosmic microwave background. But there is a common philosophy: I like to ask how much can we learn about the universe in a way that is as robust as possible, where robust means as close as possible to the truth, even if we have to accept large error bars. In cosmology, everything we interpret is always in light of a theory, and theories are always at some level “spherical cows” – they are approximations. So, imagine we are missing something: how do I know I am missing it? It sounds vague, but I think the field of cosmology is ready to ask these questions because we are swimming in data, drowning in data, or soon will be, and the statistical error bars are shrinking.

This explains your current interest in the Hubble constant. What do you define as the Hubble tension?

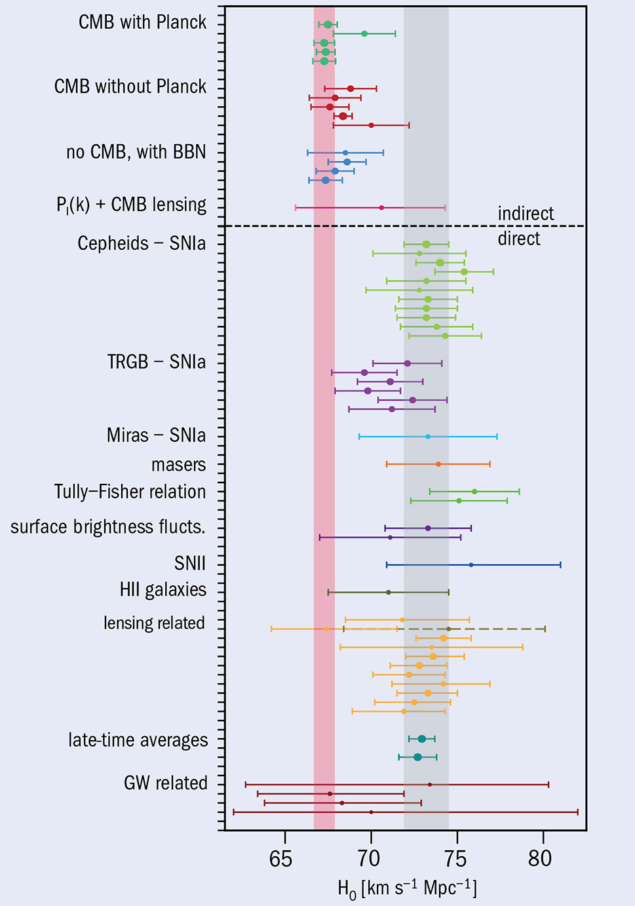

Yes, indeed. When I was a PhD student, knowing the Hubble constant at the 40–50% level was great. Now, we are declaring a crisis in cosmology because there is a discrepancy at the very-few-percent level. The Hubble tension is certainly one of the most intriguing problems in cosmology today. Local measurements of the current expansion rate of the universe, for example based on supernovae as standard candles, which do not rely heavily on assumptions about cosmological models, give values that cluster around 73 km s⁻¹ Mpc⁻¹. Then there is another, indirect route to measuring what we believe is the same quantity but only within a model, the lambda-cold-dark-matter (ΛCDM) model, which is looking at the baby universe via the cosmic microwave background (CMB). When we look at the CMB, we don’t measure recession velocities, but we interpret a parameter within the model as the expansion rate of the universe. The ΛCDM model is extremely successful, but the value of the Hubble constant using this method comes out at around 67 km s⁻¹ Mpc⁻¹, and the discrepancy with local measurements is now 4σ or more.
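As a rough illustration of what a "4σ" discrepancy means, the sketch below divides the difference between two measurements by their combined uncertainty. It assumes the two results are independent and Gaussian. The central values are the ones quoted above, but the error bars (±1.4 and ±0.5 km s⁻¹ Mpc⁻¹) and the function name `tension_sigma` are assumptions chosen for illustration and are not given in the interview.

```python
import math


def tension_sigma(h0_a, err_a, h0_b, err_b):
    """Gap between two independent Gaussian measurements,
    expressed in units of their combined standard deviation."""
    combined_err = math.hypot(err_a, err_b)  # errors added in quadrature
    return abs(h0_a - h0_b) / combined_err


# Central values as quoted in the text (km/s/Mpc).
# The error bars are ASSUMED for illustration only.
local_h0, local_err = 73.0, 1.4
cmb_h0, cmb_err = 67.0, 0.5

sigma = tension_sigma(local_h0, local_err, cmb_h0, cmb_err)
print(f"Tension: {sigma:.1f} sigma")
```

With these assumed error bars the gap comes out at about 4σ. Halving either error bar while the central values stay put would push the figure higher, which is why shrinking uncertainties have turned a few-percent difference into a headline problem.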

What are the implications if this tension cannot be explained by systematic errors or some other misunderstanding of the data?

The Hubble constant is the only cosmological parameter in the ΛCDM universe that can be measured both directly locally and from classical cosmological observations such as the CMB, baryon acoustic oscillations, supernovae and big-bang nucleosynthesis. It’s also easy to understand what it is, and the error bars are becoming small enough that it is really becoming make-or-break for the ΛCDM model. The Hubble tension made everybody wake up. But before we throw the model out of the window, we need something more.

How much faith do you put in the ΛCDM model compared to, say, the Standard Model of particle physics?

It is a model that has only six parameters, most constrained at the percent level, which explains most of the observations that we have of the universe. In the case of Λ, which quantifies what we call dark energy, we have many orders of magnitude between theory and experiment to understand, and for dark matter we are yet to find a candidate particle. Otherwise, it does connect to fundamental physics and has been extremely successful. For 20 years we have been riding a wave of confirmation of the ΛCDM model, so we need to ask ourselves: if we are going to throw it out, what do we substitute it with? The first thing is to take small steps away from the model, say by adding one parameter. For a while, you could say that maybe there is something like an effective neutrino species that might fix it, but a solution like this doesn’t quite fit the CMB data any more. I think the community may be split 50/50 between being almost ready to throw the model out and keeping working with it, because we have nothing better to use.

Could it be that general relativity (GR) needs to be modified?

Perhaps, but where do we modify it? People have tried to tweak GR at early times, but it messes around with the observations and creates a bigger problem than we already have. So, let’s say we modify in middle times – we still need it to describe the shape of the expansion history of the universe, which is close to ΛCDM. Or we could modify it locally. We’ve tested GR at the solar-system scale, and the accuracy of GPS is a vivid illustration of its effectiveness at a planetary scale. So, we’d need to modify it very close to where we are, and I don’t know if there are modifications on the market that pass all of the observational tests. It could also be that the cosmological constant changes value as the universe evolves, in which case the form of the expansion history would not be the one of ΛCDM. There is some wiggle room here, but changing Λ within the error bars is not enough to fix the mismatch. Basically, there is such a good agreement between the ΛCDM model and the observations that you can only tinker so much. We’ve tried to put “epicycles” everywhere we could, and so far we haven’t found anything that actually fixes it.

What about possible sources of experimental error?

Systematics are always unknowns that may be there, but the level of sophistication of the analyses suggests that if there were something major then it would have come up. People do a lot of internal consistency checks; therefore, it is becoming increasingly unlikely that it is only due to dumb systematics. The big change over the past two years or so is that you typically now have different data sets that give you the same answer. It doesn’t mean that both can’t be wrong, but it becomes increasingly unlikely. For a while people were saying maybe there is a problem with the CMB data, but now we have taken those data out of the equation completely and there are different lines of evidence that give a local value hovering around 73 km s⁻¹ Mpc⁻¹, although it’s true that the truly independent ones are in the range 70–73 km s⁻¹ Mpc⁻¹. A lot of the data for local measurements have been made public, and although it’s not a very glamorous job to take someone else’s data and re-do the analysis, it’s very important.

Is there a way to categorise the very large number of models vying to explain the Hubble tension?

Until very recently, there was an interpretation of early versus late models. But if this is really the case, then the tension should show up in other observables, specifically the matter density and age of the universe, because it’s a very constrained system. Perhaps there is some global solution, so a little change here and a little in the middle, and a little there … and everything would come together. But that would be rather unsatisfactory because you can’t point your finger at what the problem was. Or maybe it’s something very, very local – then it is not a question of cosmology, but of whether the value of the Hubble constant we measure here is a global value. I don’t know how to choose between these possibilities, but the way the observations are going makes me wonder if I should start thinking in that direction. I am trying to be as model agnostic as possible. Firstly, there are many other people who are thinking in terms of models and they are doing a wonderful job. Secondly, I don’t want to be biased. Instead I am trying to see if I can think one step removed from a particular model or parameterisation, which is very difficult.

What are the prospects for more precise measurements?

For the CMB, we have the CMB-S4 proposal and the Simons Array. These experiments won’t make a huge difference to the precision of the primary temperature-fluctuation measurements, but will be useful to disentangle possible solutions that have been proposed because they will focus on the polarisation of the CMB photons. As for the local measurements, the Dark Energy Spectroscopic Instrument, which started observations in May, will measure baryon acoustic oscillations at the level of galaxies to further nail down the expansion history of the low-redshift universe. However, it will not help at the level of local measurements, which are being pursued instead by the SH0ES collaboration. There is also another programme in Chicago focusing on the so-called tip of the red-giant-branch technique, with more results to come out. Observations of multiple images from strong gravitational lensing are another promising avenue that is being very actively pursued, and, if we are lucky, gravitational waves with optical counterparts will bring in another important piece of the puzzle.

How do we measure the Hubble constant from gravitational waves?

It’s a beautiful measurement, as you can get a distance measurement without having to build a cosmic distance ladder, which is the case with the other local measurements that build distances via Cepheids, supernovae, etc. The recession velocity of the GW source comes from the optical counterpart and its redshift. The detection of the GW170817 event enabled researchers to estimate the Hubble constant to be 70 km s⁻¹ Mpc⁻¹, for example, but the uncertainties using this novel method are still very large, in the region of 10%. A particular source of uncertainty comes from the orientation of the gravitational-wave source with respect to Earth, but this will come down as the number of events increases. So this route provides a completely different window on the Hubble tension. Gravitational waves have been dubbed, rather poetically, “standard sirens”. When these determinations of the Hubble constant become competitive with existing measurements really depends on how many events are out there. Upgrades to LIGO and Virgo, plus next-generation gravitational-wave observatories, will help in this regard, but what if the measurements end up clustering between or beyond the late- and early-time measurements? Then we really have to scratch our heads!
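The logic of the standard-siren measurement can be sketched in a few lines: the gravitational-wave signal supplies the distance, the optical counterpart supplies the recession velocity, and their ratio is the Hubble constant. The velocity and distance below are round illustrative numbers of the order expected for a nearby event, not values taken from the interview or from any published analysis. The function names are hypothetical, and the 1/√N scaling assumes independent events of equal precision.

```python
import math


def hubble_constant(velocity_km_s, distance_mpc):
    """Hubble's law for a nearby source: H0 = v / d, in km/s/Mpc."""
    return velocity_km_s / distance_mpc


def events_needed(single_event_err_pct, target_err_pct):
    """Number of independent, equally precise events needed to reach
    a target uncertainty, assuming errors average down as 1/sqrt(N)."""
    return math.ceil((single_event_err_pct / target_err_pct) ** 2)


# ILLUSTRATIVE round numbers (assumptions, not measured values):
velocity = 3000.0  # km/s, recession velocity from the optical counterpart
distance = 43.0    # Mpc, distance inferred from the gravitational-wave signal

h0 = hubble_constant(velocity, distance)
print(f"H0 is roughly {h0:.1f} km/s/Mpc")

# Starting from the ~10% single-event uncertainty mentioned above,
# how many comparable events would bring it down to an assumed 2% target?
print(events_needed(10.0, 2.0), "events")
```

Under these assumptions, a 10% single-event uncertainty needs a few dozen comparable events to reach the few-percent level at which the method could weigh in on the tension.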

How can results from particle physics help?

Principally, if we learn something about dark matter it could force us to reconsider our entire way of fitting the observations, perhaps in a way that we haven’t thought of because dark matter may be hot rather than cold, or something else that interacts in completely different ways. Neutrinos are another possibility. There are models where neutrinos don’t behave as they do in the Standard Model yet still fit the CMB observations. Before the Hubble tension came along, the hope was to say that we have this wonderful model of cosmology that fits really well and implies that we live in a maximally boring universe. Then we could have used that to eventually make the connection to particle physics, say, by constraining neutrino masses or the temperature of dark matter. But if we don’t live in a maximally boring universe, we have to be careful about playing this game because the universe could be much, much more interesting than we assumed.