The first experience with data from the LHC beats out the rhythm for CHEP 2010.

Image credit: ASGC Taipei.

The conferences on Computing in High Energy and Nuclear Physics (CHEP), which are held approximately every 18 months, reached their silver jubilee with CHEP 2010, held at the Academia Sinica Grid Computing Centre (ASGC) in Taipei in October. ASGC is the LHC Computing Grid (LCG) Tier 1 site for Asia and the organizers are experienced in hosting large conferences. Their expertise was demonstrated again throughout the week-long meeting, drawing almost 500 participants from more than 30 countries, including 25 students sponsored by CERN’s Marie Curie Initial Training Network for Data Acquisition, Electronics and Optoelectronics for LHC Experiments (ACEOLE).

Appropriately, given the subsequent preponderance of LHC-related talks, the LCG project leader, Ian Bird of CERN, gave the opening plenary talk. He described the status of the LCG, how it got there and where it may go next, and presented some measures of its success. The CERN Tier 0 centre moves some 1 PB of data a day, in- and out-flows combined; it writes around 70 tapes a day; the worldwide grid supports some 1 million jobs a day; and it is used by more than 2000 physicists for analysis. Bird was particularly proud of the growth in service reliability, which he attributed to many years of preparation and testing. For the future, he believes that the LCG community needs to be concerned with sustainability, data issues and changing technologies.

The status of the LHC experiments’ offline systems was summarized by Roger Jones of Lancaster University. He stated that the first year of operations had been a great success, as presentations at the International Conference on High Energy Physics in Paris had indicated. He paid tribute to CERN’s support of Tier 0 and remarked that data distribution has been smooth.

In the clouds

As expected, there were many talks about cloud computing, including several plenary talks on general aspects, as well as technical presentations on practical experiences and tests or evaluations of the possible use of cloud computing in high-energy physics. It is sometimes difficult to separate hype from initiatives with definite potential, but it is clear that clouds will find a place in high-energy physics computing, probably based more on private clouds than on the well-known commercial offerings.

Harvey Newman of Caltech described a new generation of high-energy physics networking and computing models. As the available bandwidth continues to grow exponentially, LHC experiments are increasingly benefiting from it – to the extent that experiment models are being modified to make more use of pulling data to a job rather than pushing jobs towards the data. A recently formed working group is gathering new network requirements for LCG sites.

Lucas Taylor of Fermilab addressed the issue of public communications in high-energy physics. Recent LHC milestones have attracted massive media interest and Taylor stated that the LHC community simply has no choice other than to be open, and welcome the attention. The community therefore needs a coherent policy, clear messages and open engagement with traditional media (TV, radio, press) as well as with new media (Web 2.0, Twitter, Facebook, etc.). He noted major video-production efforts undertaken by the experiments, for example ATLAS-Live and CMS TV, and encouraged the audience to contribute where possible – write a blog or an article for publication, offer a tour or a public lecture and help build relationships with the media.

There was an interesting presentation of the Facility for Antiproton and Ion Research (FAIR) being built at GSI, Darmstadt. Construction will start next year and switch-on is scheduled for 2018. Two of the planned experiments are the size of ALICE or LHCb, with similar data rates expected. Triggering is a particular problem and data acquisition will have to rely on event filtering, so online farms will have to be several orders of magnitude larger than at the LHC (10,000 to 100,000 cores). This is a major area of current research.

David South of DESY, speaking on behalf of the Study Group for Data Preservation and Long-term Analysis in High-Energy Physics set up by the International Committee for Future Accelerators, presented what is probably the most serious effort yet for data preservation in high-energy physics. The question is: what to do with data after the end of an experiment? With few exceptions, data from an experiment are stored somewhere until eventually they are lost or destroyed. He presented some reasons why preservation is desirable, while stressing that it needs to be properly planned. Some important aspects include the technology used for storage (should it follow storage trends, migrating from one medium to the next?), as well as the choice of which data to store. Going beyond the raw data, this must also include software, documentation and publications, metadata (logbooks, wikis, messages, etc.) and – the most difficult aspect – people’s expertise.

Although some traditional plenary time had been scheduled for additional parallel sessions, there were still far too many submissions to be given as oral presentations. So, almost 200 submissions were scheduled as posters, which were displayed in two batches of 100 each over two days. The morning coffee breaks were extended to permit attendees to view them and interact with authors. There were also two so-called Birds of a Feather sessions on LCG Operations and LCG Service Co-ordination, which allowed the audience to discuss aspects of the LCG service in an informal manner.

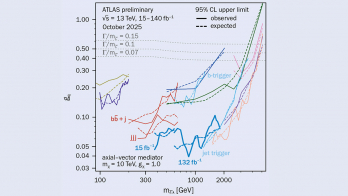

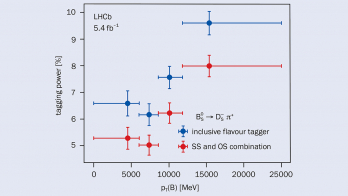

The parallel stream on Online Computing was, of course, dominated by LHC data acquisition (DAQ). The DAQ systems for all experiments are working well, leading to fast production of physics results. Talks on event processing provided evidence of the benefits of solid preparation and testing; simulation studies have proved to describe LHC data with remarkable accuracy. Both the ATLAS and CMS collaborations reported success with prompt processing at the LCG Tier 0 at CERN. New experiments, for example at FAIR, should take advantage of the experiment frameworks currently used by all of the LHC experiments, although the analysis challenges of the FAIR experiments exceed those of the LHC. There was also a word of caution – reconstruction works well today but how will it cope with increasing event pile-up in the future?

Presentations in the software engineering, data storage and databases stream covered a heterogeneous range of subjects, from quality assurance and performance monitoring to databases, software recycling and data preservation. Once again, the conclusion was that the software frameworks for the LHC are in good shape and that other experiments should be able to benefit from this.

The most popular parallel stream of talks was dedicated to distributed processing and analysis. A main theme was the successful processing and analysis of data in a distributed environment, dominated, of course, by the LHC. The message here is positive: the computing models are mainly performing as expected. The success of the experiments relies on the success of the Grid services and the sites, but the hardest problems take far longer to solve than foreseen in the targeted service levels. The other two main themes were architecture for future facilities, such as FAIR, the Belle II experiment at the SuperKEKB upgrade in Japan and the SuperB project in Italy; and improvements in infrastructure and services for distributed computing. The new projects are using a tier structure, but apparently with one layer fewer than in the LCG. Two new, non-high-energy-physics projects – the Fermi gamma-ray telescope and the Joint Dark Energy Mission – seem not to use Grid-like schemes.

Tools that work

The message from the computing fabrics and networking stream was that “hardware is not reliable, commodity or otherwise”; this statement from Bird’s opening plenary was illustrated in several talks. Deployments of upgrades, patches and new services are slow – another quote from Bird. Several talks showed that the community has the mechanism, so perhaps the problem is in communications and not in the technology? Yes, storage is an issue and there is a great deal of work going on in this area, as shown in several talks and posters. However, the various tools available today have proved that they work: via the LCG, the experiments have stored and made accessible the first months of LHC data. This stream included many talks and posters on different aspects and uses of virtualization. It was also shown that 40 Gbit/s and 100 Gbit/s networks are a reality: network bandwidth is there but the community must expect to have to pay for it.

Compared with previous CHEP conferences, there was a shift in the Grid and cloud middleware sessions. These showed that pilot jobs are fully established, virtualization is entering serious large-scale production use and there are more cloud models than before. A number of monitoring and information system tools were presented, as well as work on data management. Various aspects of security were also covered. Regarding clouds, although the STAR collaboration at the Relativistic Heavy Ion Collider at Brookhaven reported impressive production experience and there were a few examples of successful uses of Amazon EC2 clouds, other initiatives are still at the starting gate and some may not get much further. There was a particularly interesting example linking CernVM and BOINC. It was in this stream that one of the more memorable quotes of the week occurred, from Rob Quick of Fermilab: “There is no substitute for experience.”

The final parallel stream covered collaborative tools, with two sessions. The first was dedicated to outreach (Web 2.0, ATLAS Live and CMS Worldwide) and new initiatives (Inspire); the second to tools (ATLAS Glance information system, EVO, Lecture archival scheme).

• The next CHEP will be held on 21–25 May 2012, hosted by Brookhaven National Laboratory, at the NYU campus in Greenwich Village, New York; see www.chep2012.org/.