The Courier matched photos from CERN’s first 25 years to current experts and asked them to reflect on how much has changed across 70 years of science.

The past seven decades have seen remarkable cultural and technological changes. And CERN has been no passive observer. From modelling European cooperation in the aftermath of World War II to democratising information via the web and discovering a field that pervades the universe, CERN has nudged the zeitgeist more than once since its foundation in 1954.

It’s undeniable, though, that much has stayed the same. A high-energy physics lab still needs to be fast, cool, collaborative, precise, practically useful, deep, diplomatic, creative and crystal clear. Plus ça change, plus c’est la même chose.

This selection of (lightly colourised) snapshots from CERN’s first 25 years, accompanied by expert reflections from across the lab, shows how things have changed in the intervening years – and what has stayed the same.

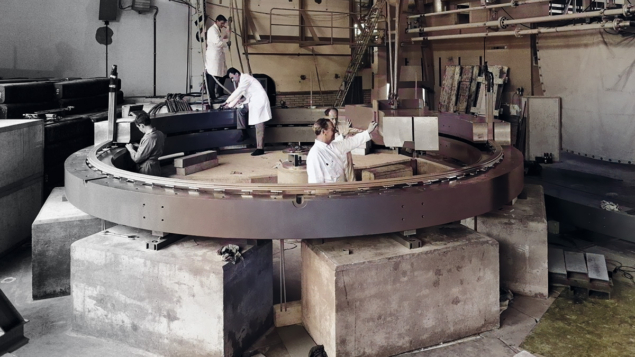

1960

The discovery that electrons and muons possess spin that precesses in a magnetic field has inspired generations of experimentalists and theorists to push the boundaries of precision. The key insight is that quantum effects modify the magnetic moment associated with the particles’ spins, making their gyromagnetic ratios (g) slightly larger than two, the value predicted by Dirac’s equation. For electrons, these quantum effects are primarily due to the electromagnetic force. For muons, the weak and strong forces also contribute measurably – as well, perhaps, as unknown forces. These measurements stand with the most beautiful and precise of all time, and their history is deeply intertwined with that of the Standard Model.

CERN physicists Francis Farley and Emilio Picasso were pioneers and driving forces behind the muon g–2 experimental programme. The second CERN experiment introduced the use of a 5 m diameter magnetic storage ring. Positive muons with 1.3 GeV momentum travelled around the ring until they decayed into positrons whose directions were correlated with the spin of the parent muons. The experiment tested the muon’s anomalous magnetic moment (g–2) with a precision of 270 parts per million. A brilliant concept, the “magic gamma”, was then introduced in the third CERN experiment in the late 1970s: by using muons at a momentum of 3.1 GeV, the effect of electric fields on the precession frequency cancelled out, eliminating a major source of systematic error. All subsequent experiments have relied on this principle, with the exception of an experiment using ultra-cold muons that is currently under construction in Japan. A friendly rivalry for precision between experimentalists and theorists continues today (see “Lattice calculations start to clarify muon g–2”), with the latest measurement at Fermilab achieving a precision of 190 parts per billion.
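The “magic” momentum quoted above can be checked with a few lines of arithmetic. The sketch below assumes the standard textbook form of the cancellation condition, in which the electric-field term in the spin precession is proportional to a − 1/(γ² − 1) and therefore vanishes at γ = √(1 + 1/a), where a = (g–2)/2. The muon mass and the value of a are approximate reference values supplied for illustration; they are not taken from the text.

```python
import math

# Assumed reference values (not from the article):
MUON_MASS_GEV = 0.1056584   # muon mass in GeV/c^2, approximate
A_MU = 1.16592e-3           # muon anomaly a = (g - 2) / 2, approximate


def magic_gamma(a):
    """Lorentz factor at which the term a - 1/(gamma^2 - 1) vanishes."""
    return math.sqrt(1.0 + 1.0 / a)


def magic_momentum(mass_gev, a):
    """Momentum p = m * beta * gamma in GeV/c at the magic gamma."""
    gamma = magic_gamma(a)
    # beta * gamma = sqrt(gamma^2 - 1), which equals 1 / sqrt(a) here
    return mass_gev * math.sqrt(gamma**2 - 1.0)


gamma = magic_gamma(A_MU)
p_magic = magic_momentum(MUON_MASS_GEV, A_MU)
print(f"magic gamma    = {gamma:.2f}")
print(f"magic momentum = {p_magic:.3f} GeV/c")
```

Under these assumptions the result lands close to the 3.1 GeV quoted for the third CERN experiment, with a Lorentz factor of roughly 29.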

Andreas Hoecker is spokesperson for the ATLAS collaboration.

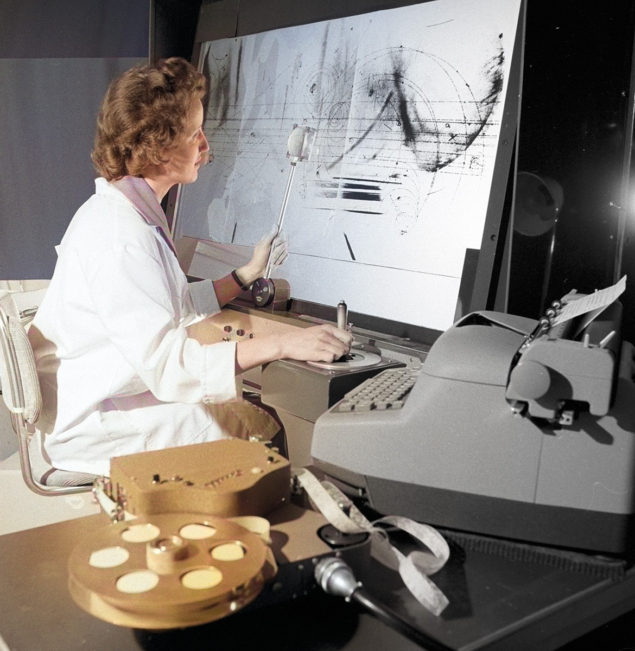

1961

The excitement of discovering new fundamental particles and forces made the 1950s and 1960s a golden era for particle physicists. A lot of creative energy was channelled into making new particle detectors, such as the liquid hydrogen (or heavier liquid) bubble chambers that paved the way to discoveries such as neutral currents, and seminal studies of neutrinos and strange and charmed baryons. As particles pass through, they make the liquid boil, producing bubbles that are captured to form images. In 1961, each had to be painstakingly inspected by hand, as depicted here, to determine the properties of each particle. Fortunately, in the decades since, physicists have found ways to preserve the level of detail they offer and build on this inspiration to prepare new technologies. Liquid–argon time-projection chambers such as CERN’s DUNE prototypes, which are currently the largest of their kind in the world, effectively give us access to bubble-chamber images in full colour, with the colour representing energy deposition (CERN Courier July/August 2024 p41). Millions of these images are now analysed algorithmically – essential, as DUNE is expected to generate one of the highest data rates in the world.

Laura Munteanu is a CERN staff scientist working on the T2K and DUNE experiments.

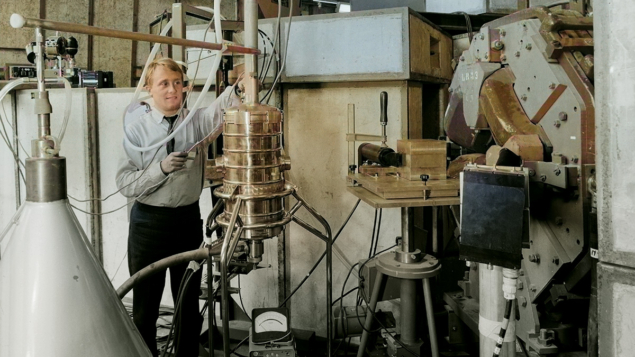

1965

This photograph shows the first experiment at CERN to use a superconducting magnet. The pictured physicist is adjusting a cryostat containing a stack of nuclear emulsions surrounded by a liquid–helium-cooled superconducting niobium–zirconium electromagnet. A pion beam from CERN’s synchrocyclotron passes through the quadrupole magnet at the right, collimated by the pile of lead bricks and detected by a small plastic scintillation counter before entering the cryostat. In this study of double charge exchange from π+ to π– in nuclear emulsions, the experiment consumed between one and two litres of liquid helium per hour from the container in the left foreground, with the vapour being collected for reuse (CERN Courier August 1965 p116).

Today, the LHC is the world’s largest scientific instrument, with more than 24 km of the machine operating at 1.9 K – and yet only one project among many at CERN requiring advanced cryogenics. As presented at the latest international cryogenic engineering conference organised here in July, there have never been so many cryogenics projects either implemented or foreseen. They include accelerators for basic research, light sources, medical accelerators, detectors, energy production and transmission, trains, planes, rockets and ships. The need for energy efficiency and long-term sustainability will necessitate cryogenic technology with an enlarged temperature range for decades to come. CERN’s experience provides a solid foundation for a new generation of engineers to contribute to society.

Serge Claudet is a former deputy group leader of CERN’s cryogenics group.

1966

Polishing a mirror at CERN in 1966. Are physicists that narcissistic? Perhaps some are, but not in this case. Ultra-polished mirrors are still a crucial part of a class of particle detectors based on the Cherenkov effect. Just as a shock wave of sound is created when an object flies through the sky at a speed greater than the speed of sound in air, so charged particles create a shock wave of light when they pass through a medium at a speed greater than the speed of light in that medium. This effect is extremely useful for measuring the velocity of a charged particle, because the emission angle of light packets relative to the trajectory of the particle is related to the velocity of the particle itself. By measuring the emission angle of Cherenkov light for an ultra-relativistic charged particle travelling through a transparent medium, such as a gas, the velocity of the particle can be determined. Together with the measurement of the particle’s momentum, it is then possible to obtain its identity card, i.e. its mass. Mirrors are used to reflect Cherenkov light to the photosensors. The LHCb experiment at CERN has the most advanced Cherenkov detector ever built. Years go by and technology evolves, but fundamental physics is about reality, and that’s unchangeable!
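The chain from emission angle to velocity to mass can be sketched numerically. The example below assumes the standard Cherenkov relation cos θ = 1/(nβ) and natural units (momentum in GeV/c, mass in GeV/c²). The refractive index and particle masses are illustrative values typical of a gas radiator and of pions and kaons; they are not figures from the text, and the function names are hypothetical.

```python
import math


def cherenkov_angle(p, m, n):
    """Emission angle (rad) for momentum p, mass m in a medium of index n."""
    beta = p / math.hypot(p, m)          # beta = p / E
    cos_theta = 1.0 / (n * beta)
    if cos_theta > 1.0:
        raise ValueError("particle is below the Cherenkov threshold")
    return math.acos(cos_theta)


def mass_from_angle(p, theta, n):
    """Invert the relation: velocity from the angle, then mass from p and beta."""
    beta = 1.0 / (n * math.cos(theta))
    return p * math.sqrt(1.0 / beta**2 - 1.0)


# Illustrative assumptions: a gas radiator and a 10 GeV/c track.
N_GAS = 1.0014
P_TRACK = 10.0
MASSES = {"pion": 0.13957, "kaon": 0.49368}

for name, mass in MASSES.items():
    theta = cherenkov_angle(P_TRACK, mass, N_GAS)
    recovered = mass_from_angle(P_TRACK, theta, N_GAS)
    print(f"{name}: angle = {theta * 1e3:.2f} mrad, recovered mass = {recovered:.4f} GeV")
```

The two particles emit light at measurably different angles at the same momentum, which is what lets a detector of this kind tell them apart.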

Vincenzo Vagnoni is spokesperson of the LHCb collaboration.

1970

In 1911, Heike Kamerlingh Onnes made a groundbreaking discovery by measuring zero resistance in a mercury wire at 4.2 K, revealing the phenomenon of superconductivity. This earned him the 1913 Nobel Prize, decades in advance of Bardeen, Cooper and Schrieffer’s full theoretical explanation of 1957. It wasn’t until the 1960s that the first superconducting magnets exceeding 1 T were built. This delay stemmed from the difficulty in enabling bulk superconductors to carry large currents in strong magnetic fields – a challenge requiring significant research.

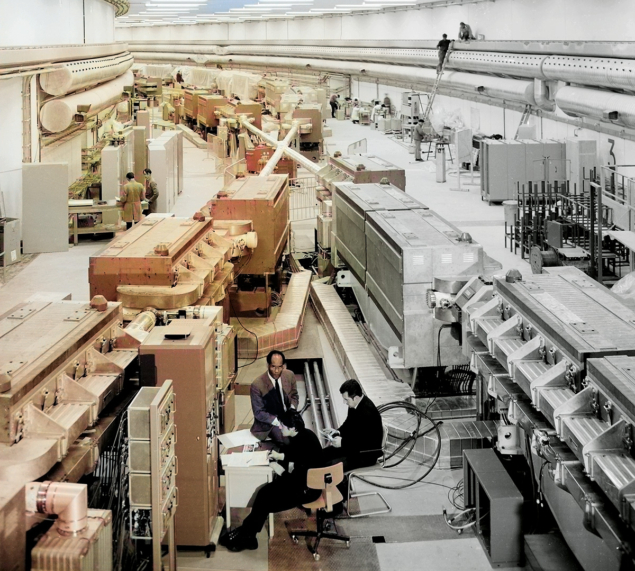

The world’s first proton–proton collider, CERN’s pioneering Intersecting Storage Rings (ISR, pictured below left), began operation in 1971, a year after this photograph was taken. One of its characteristic “X”-shaped vacuum chambers is visible, flanked by combined-function bending magnets on either side. In 1980, to boost its luminosity, eight superconducting quadrupole magnets based on niobium-titanium alloy were installed, each with a 173 mm bore and a peak field of 5.8 T, making the ISR the first collider to use superconducting magnets. Today, we continue to advance superconductivity. For the LHC’s high-luminosity upgrade, we are preparing to install the first magnets based on niobium-tin technology: 24 quadrupoles with a 150 mm aperture and a peak field of 11.3 T.

Susana Izquierdo Bermudez leads CERN’s Large Magnet Facility.

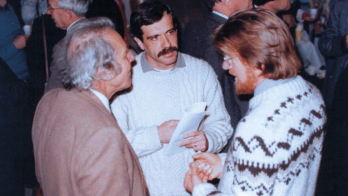

1972

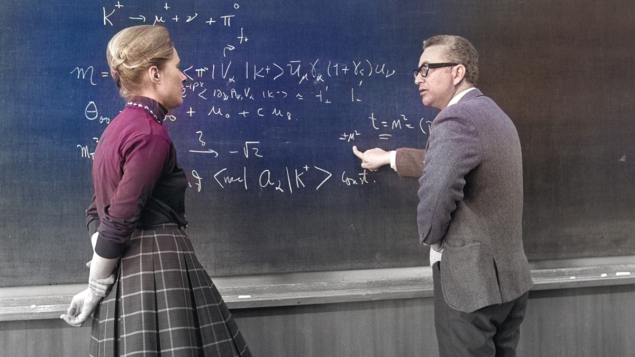

The Theoretical Physics Department, or Theory Division as it used to be known, dates back to the foundation of CERN, when it was first established in Copenhagen under the direction of Niels Bohr, before moving to Geneva in 1957. Theory flourished at CERN in the 1960s, hosting many scientists from CERN’s member states and beyond, working side-by-side with experimentalists with a particular focus on strong interactions.

In 1972, when Murray Gell-Mann visited CERN and had this discussion with Mary Gaillard, the world of particle physics was at a turning point. The quark model had been proposed by Gell-Mann in 1964 (similar ideas had been proposed by George Zweig and André Peterman) and the first experimental evidence that quarks are real had been discovered in deep-inelastic electron scattering at SLAC in 1968. However, the dynamics of quarks was a puzzle. The weak interactions being discussed by Gaillard and Gell-Mann in this picture were also puzzling, though Gerard ’t Hooft and Martinus Veltman had just shown that the unified theory of weak and electromagnetic interactions proposed earlier by Shelly Glashow, Abdus Salam and Steven Weinberg was a calculable theory.

The first evidence for this theory came in 1973 with the discovery of neutral currents by the Gargamelle neutrino experiment at CERN, and 1974 brought the discovery of the charm quark, a key ingredient in what came to be known as the Standard Model. This quark had been postulated to explain properties of K mesons, whose decays are being discussed by Gaillard and Gell-Mann in this picture, and Gaillard, together with Benjamin Lee, went on to play a key role in predicting its properties. The discoveries of neutral currents and charm ushered in the Standard Model, and CERN theorists were active in exploring its implications – notably in sketching out the phenomenology of the Brout–Englert–Higgs mechanism. We worked with experimentalists particularly closely during the 1990s, making precise calculations and interpreting the results emerging from LEP that established the Standard Model.

CERN Theory in the 21st century has largely been focused on the LHC experimental programme and pursuing new ideas for physics beyond the Standard Model, often in relation to cosmology and astrophysics. These are likely to be the principal themes of theoretical research at CERN during its eighth decade.

John Ellis served as head of CERN’s Theoretical Physics Department from 1988 to 1994.

1974

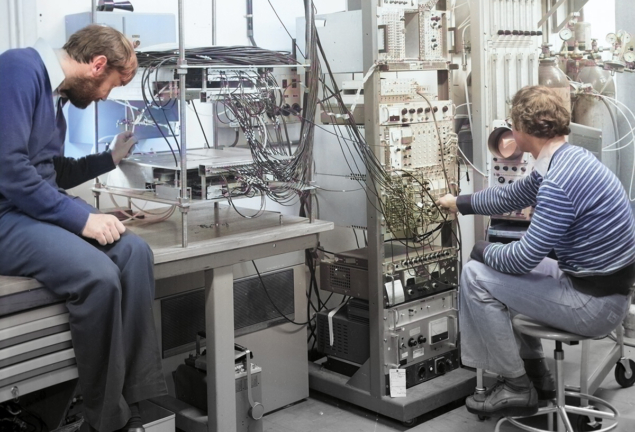

From 1959 to 1992, Linac1 accelerated protons to 50 MeV, for injection into the Proton Synchrotron, and from 1972 into the Proton Synchrotron Booster. In 1974, their journey started in this ion source. High voltage was used to achieve the first acceleration to a few percent of the speed of light. It wasn’t only the source itself that had to be at high voltage, but also the power supplies that feed magnets, the controllers for gas injection, the diagnostics and the controls. This platform was the laboratory for the ion source. When operational, the cubicle and everything in it were at 520 kV, meaning all external surfaces had to be smooth to avoid sparks. As pictured, hydraulic jacks could lift the lid to allow access for maintenance and testing, at which point a drawbridge would be lowered from the adjacent wall to allow the engineers and technicians to take a seat in front of the instruments.
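As a rough consistency check on “a few percent of the speed of light”, the sketch below computes the speed of a proton that has gained 520 keV of kinetic energy. It assumes the proton falls through the full 520 kV platform potential in a single step and uses an approximate proton rest energy; both are illustrative assumptions rather than details given in the text.

```python
import math

# Assumed reference value (not from the article):
PROTON_REST_ENERGY_MEV = 938.272   # proton rest energy, approximate


def beta_from_kinetic_energy(kinetic_mev, rest_mev):
    """Speed as a fraction of c for a particle with the given kinetic energy."""
    gamma = 1.0 + kinetic_mev / rest_mev
    return math.sqrt(1.0 - 1.0 / gamma**2)


# Assumption: a singly charged proton accelerated through the full 520 kV.
kinetic_energy_mev = 0.520
beta = beta_from_kinetic_energy(kinetic_energy_mev, PROTON_REST_ENERGY_MEV)
print(f"beta = {beta:.4f} ({beta * 100:.1f}% of the speed of light)")
```

Under these assumptions the proton leaves the platform at a little over 3% of the speed of light.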

Thanks to the invention of radio-frequency quadrupoles by Kapchinsky and Teplyakov, radio-frequency acceleration can now start from lower proton energies. Today, ion sources use much lower voltages, in the range of tens of kilovolts, allowing the source installations to shrink dramatically in size compared to the 1970s.

Richard Scrivens is CERN’s deputy head of accelerator and beam physics.

1974

CERN’s labyrinth of tunnels has been almost continuously expanding since the lab was founded 70 years ago. When CERN was first conceived, who would have thought that the 7 km-long Super Proton Synchrotron tunnel shown in this photograph would have been constructed, let alone the 27 km LEP/LHC tunnel? Questions similar to those being posed today about the proposed Future Circular Collider (FCC) tunnel were once raised about the feasibility of the LEP tunnel. But if you take a step back and look at the history of CERN’s expanding tunnel network, it seems like the next logical step for the organisation.

This vintage SPS photograph from the 1970s shows the tunnel’s secondary lining being constructed. The concrete was transported from the surface down the 50 m-deep shafts and then pumped behind the metal formwork to create the tunnel walls. This technology is still used today, most recently for the HL-LHC tunnels. However, for a mega-project like the FCC, a much quicker and more sophisticated methodology is envisaged. The tunnels would be excavated using tunnel boring machines, which would install a pre-cast concrete segmental lining using robotics immediately after the excavation of the rock, allowing 20 m of tunnel to be excavated and lined with concrete per day.

John Osborne is a senior civil engineer at CERN.

1977

Detector development for fundamental physics always advances in symbiosis with detector development for societal applications. Here, Alan Jeavons (left) and David Townsend prepare the first positron-emission tomography (PET) scan of a mouse to be performed at CERN. A pair of high-density avalanche chambers (HIDACs) can be seen above and below Jeavons’ left hand. As in PET scans in hospitals today, a radioactive isotope introduced into the biological tissue of the mouse decays by emitting a positron that travels a few millimetres before annihilating with an electron. The resulting pair of coincident and back-to-back 511 keV photons was then converted into electron avalanches which were reconstructed in multiwire proportional chambers – a technology invented by CERN physicist Georges Charpak less than a decade earlier to improve upon bubble chambers and cloud chambers in high-energy physics experiments. The HIDAC detector later contributed to the development of three-dimensional PET image reconstruction. Such testing now takes place at dedicated pre-clinical facilities.

Today, PET detectors are based on inorganic scintillating crystals coupled to photodetectors – a technology that is also used in the CMS and ALICE experiments at the LHC. CERN’s Crystal Clear collaboration has been continuously developing this technology since 1991, yielding benefits for both fundamental physics and medicine.

One of the current challenges in PET is to improve time resolution in time-of-flight PET (TOF-PET) below 100 ps, and towards 10 ps. This will eventually enable positron annihilations to be pinpointed at the millimetre level, improving image quality, speeding up scans and reducing the dose injected into patients. Improvements in time resolution are also important for detectors in future high-energy experiments, and the future barrel timing layer of the CMS detector upgrade for the High-Luminosity LHC was inspired by TOF-PET R&D.
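The link between timing and spatial precision follows from simple kinematics. The two photons travel in opposite directions at the speed of light, so an uncertainty Δt on the difference of their arrival times corresponds to an uncertainty of cΔt/2 on the annihilation point along the line joining the two detectors. The sketch below evaluates this standard relation for the two resolutions mentioned; the function name is hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def position_uncertainty_m(time_resolution_s):
    """Uncertainty along the line of response: dx = c * dt / 2."""
    return SPEED_OF_LIGHT_M_PER_S * time_resolution_s / 2.0


for dt_ps in (100.0, 10.0):
    dx_mm = position_uncertainty_m(dt_ps * 1e-12) * 1e3
    print(f"{dt_ps:5.0f} ps -> {dx_mm:.1f} mm")
```

A resolution of 100 ps localises the annihilation to about 15 mm, while 10 ps brings this down to about 1.5 mm, consistent with the millimetre-level goal described above.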

Etiennette Auffray Hillemanns is spokesperson for the Crystal Clear collaboration and technical coordinator for the CMS electromagnetic calorimeter.

1979

In this photo, we see Rafel Carreras, a remarkable science educator and communicator, sharing his passion for science with an eager audience of young learners. Known for his creativity and enthusiasm, Carreras makes the complex world of particle physics accessible and fun. His particle-physics textbook When Energy Becomes Matter includes memorable visualisations that we still use in our education activities today. One such visualisation is the “fruity strawberry collision”, wherein two strawberries collide and transform into a multitude of new fruits, illustrating how particle collisions produce a shower of new particles that didn’t exist before.

Today, we find fewer chalk boards at CERN and more casual clothing, but one thing remains the same: CERN’s dedication to education and communication. Over the years, CERN has trained more than 10,000 science teachers, significantly impacting science education globally. CERN Science Gateway, our new education and outreach centre, allows us to welcome about 400,000 visitors annually. It offers a wide range of activities, such as interactive exhibitions, science shows, guided tours and hands-on lab experiences, making science exciting and accessible for everyone. Thanks to hundreds of passionate and motivated guides, visitors leave inspired and curious to find out more about the fascinating scientific endeavours and extraordinary technologies at CERN.

Julia Woithe coordinates educational activities at CERN’s new Science Gateway.

- These photographs are part of a collection curated by Renilde Vanden Broeck, which will be exhibited at CERN in September.