How can high-energy physics data best be saved for the future?

High-energy-physics experiments collect data over long time periods, while the associated collaborations of experimentalists exploit these data to produce their physics publications. The scientific potential of an experiment is in principle defined and exhausted within the lifetime of such collaborations. However, the continuous improvement in areas of theory, experiment and simulation – as well as the advent of new ideas or unexpected discoveries – may reveal the need to re-analyse old data. Examples of such analyses already exist and they are likely to become more frequent in the future. As experimental complexity and the associated costs continue to increase, many present-day experiments, especially those based at colliders, will provide unique data sets that are unlikely to be improved upon in the short term. The close of the current decade will see the end of data-taking at several large experiments and scientists are now confronted with the question of how to preserve the scientific heritage of this valuable pool of acquired data.

To address this specific issue in a systematic way, the Study Group on Data Preservation and Long Term Analysis in High Energy Physics was formed at the end of 2008. Its aim is to clarify the objectives and the means of preserving data in high-energy physics. The collider experiments BaBar, Belle, BES-III, CLEO, CDF, D0, H1 and ZEUS, as well as the associated computing centres at SLAC, KEK, the Institute of High Energy Physics in Beijing, Fermilab and DESY, are all represented, together with CERN, in the group’s steering committee.

Digital gold mine

The group’s inaugural workshop took place on 26–28 January at DESY, Hamburg. To form a quantitative view of the data landscape in high-energy physics, each of the participating experimental collaborations presented their computing models to the workshop, including the applicability and adaptability of the models to long-term analysis. Not surprisingly, the data models are similar – reflecting the nature of colliding-beam experiments.

The data are organized by events, with increasing levels of abstraction from raw detector-level quantities to N-tuple-like data for physics analysis. They are supported by large samples of simulated Monte Carlo events. The software is organized in a similar manner, with a more conservative part for reconstruction to reflect the complexity of the hardware and a more dynamic part closer to the analysis level. Data analysis is in most cases done in C++ using the ROOT analysis environment and is mainly performed on local computing farms. Monte Carlo simulation also uses a farm-based approach but it is striking to see how popular the Grid is for the mass-production of simulated events. The amount of data that should be preserved for analysis varies between 0.5 PB and 10 PB for each experiment, which is not huge by today’s standards but is nonetheless a large amount. The degree of preparation for long-term data preservation varies between experiments but it is obvious that no preparation was foreseen at an early stage of the programs; any conservation initiatives will take place in parallel with the end of the data analysis.
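As a rough illustration of this layered, event-based organization, the following minimal Python sketch passes a few toy events through three tiers: raw detector-level records, reconstructed quantities and a flat N-tuple-like summary. All class names, fields and the `gain` calibration factor are hypothetical and chosen only for illustration; they do not correspond to the data model of any of the experiments mentioned.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RawEvent:
    """Detector-level record: (channel, adc_count) hits for one event (hypothetical layout)."""
    event_id: int
    hits: List[Tuple[int, int]]


@dataclass
class RecoEvent:
    """Reconstructed record: hits converted to calibrated energies (arbitrary units)."""
    event_id: int
    energies: List[float]


@dataclass
class NtupleRow:
    """Flat, analysis-level record holding a few summary quantities per event."""
    event_id: int
    n_deposits: int
    total_energy: float


def reconstruct(raw: RawEvent, gain: float = 0.5) -> RecoEvent:
    # Toy "reconstruction": scale each ADC count by an assumed calibration gain.
    return RecoEvent(raw.event_id, [adc * gain for _, adc in raw.hits])


def to_ntuple(reco: RecoEvent) -> NtupleRow:
    # Toy "N-tuple production": reduce the event to a few flat numbers.
    return NtupleRow(reco.event_id, len(reco.energies), sum(reco.energies))


raw_events = [RawEvent(1, [(10, 4), (11, 8)]), RawEvent(2, [(3, 2)])]
ntuple = [to_ntuple(reconstruct(ev)) for ev in raw_events]
```

Each step discards detail in exchange for convenience, which is why a re-analysis that needs improved calibration or reconstruction must go back to an earlier tier, together with the software that produced the later ones.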

From a long-term perspective, digital data are widely recognized as fragile objects. Speakers from a few notable computing centres – including Fabio Hernandez of the Centre de Calcul de l’Institut National de Physique Nucléaire et de Physique des Particules, Stephen Wolbers of Fermilab, Martin Gasthuber of DESY and Erik Mattias Wadenstein of the Nordic DataGrid Facility – showed that storage technology should not pose problems with respect to the amount of data under discussion. Instead, the main issue will be the communication between the experimental collaborations and the computing centres once the final analyses are complete and/or the collaborations have come to an end, a phase for which roles have not been clearly defined in the past. The current preservation model, where the data are simply saved on tapes, runs the risk that the data will disappear into cupboards while the read-out hardware is lost or becomes impractical or obsolete. It is important to define a clear protocol for data preservation, the items of which should be transparent enough to ensure that the digital content of an experiment (data and software) remains accessible.

On the software side, the most popular analysis framework is ROOT, the object-oriented software and library that was originally developed at CERN. This offers many possibilities for storing and documenting high-energy-physics data and has the advantage of a large existing user community and a long-term commitment for support, as CERN’s René Brun explained at the workshop. One example of software dependence is the use of inherited libraries (e.g. CERNLIB or GEANT3), and of commercial software and/or packages that are no longer officially maintained but remain crucial to most running experiments. It would be an advantageous first step towards long-term stability of any analysis framework if such vulnerabilities could be removed from the software model of the experiments. Modern techniques of software emulation, such as virtualization, may also offer promising features, as Yves Kemp of DESY explained. Exploring such solutions should be part of future investigations.

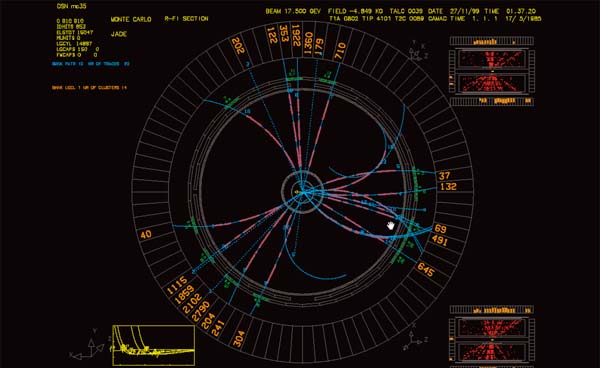

Examples of previous experience with data from old experiments show clearly that a complete re-analysis has only been possible when all of the ingredients could be accounted for. Siggi Bethke of the Max Planck Institute of Physics in Munich showed how a re-analysis of data from the JADE experiment (1979–1986), using refined theoretical input and a better simulation, led to a significant improvement in the determination of the strong coupling constant as a function of energy. While the usual statement is that higher-energy experiments replace older, low-energy ones, this example shows that measurements at lower energies can play a unique role in a global physical picture.

The experience at the Large Electron-Positron (LEP) collider, which Peter Igo-Kemenes, André Holzner and Matthias Schroeder of CERN described, suggested once more that the definition of the preserved data should include all of the tools necessary to retrieve and understand the information, so that it can be used for future analyses. The general status of the LEP data is of concern, and the recovery of the information – to cross-check a signal of new physics, for example – may become impossible within a few years if no effort is made to define a consistent and clear stewardship of the data. This demonstrates that both early preparation and sufficient resources are vital in maintaining the capability to reinvestigate older data samples.

The modus operandi in high-energy physics can also profit from the rich experience accumulated in other fields. Fabio Pasian of Trieste told the workshop how the European Virtual Observatory project has developed a framework for common data storage of astrophysical measurements. More general initiatives to investigate the persistency of digital data also exist and provide useful hints as to the critical points in the organization of such projects.

There is also an increasing awareness in funding agencies regarding the preservation of scientific data, as David Corney of the UK’s Science and Technology Facilities Council, Salvatore Mele of CERN and Amber Boehnlein of the US Department of Energy described. In particular, the Alliance for Permanent Access and the EU-funded project in Framework Programme 7 on the Permanent Access to the Records of Science in Europe recently conducted a survey of the high-energy-physics community, which found that the majority of scientists strongly support the preservation of high-energy-physics data. Respondents to the survey also reacted positively to the question of open access to the data, an issue that deserves careful consideration alongside the organizational and technical matters. The next-generation publications database, INSPIRE, offers extended data-storage capabilities that could be used immediately to enhance public or private information related to scientific articles, including tables, macros, explanatory notes and potentially even analysis software and data, as Travis Brooks of SLAC explained.

While this first workshop compiled a great deal of information, the work to synthesize it remains to be completed and further input in many areas is still needed. In addition, the raison d’être for data preservation should be clearly and convincingly formulated, together with a viable economic model. All high-energy-physics experiments are in a position to take concrete action now by proposing models for data preservation. A survey of technology is also important, because one of the crucial factors may indeed be the evolution of hardware. Moreover, the whole process must be supervised by well-defined structures and steered by clear specifications that are endorsed by the major laboratories and computing centres. A second workshop is planned to take place at SLAC in summer 2009 with the aim of producing a preliminary report for further reference, so that the “future of the past” will become clearer in high-energy physics.