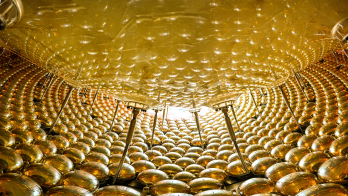

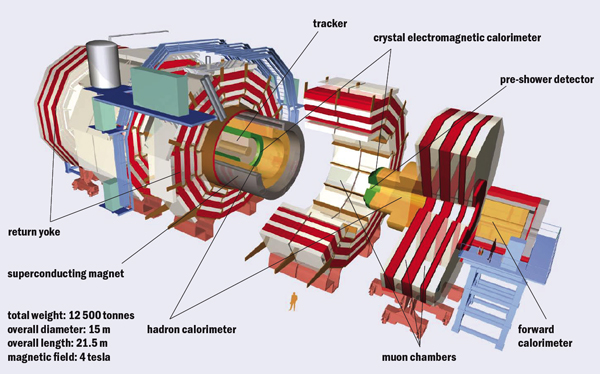

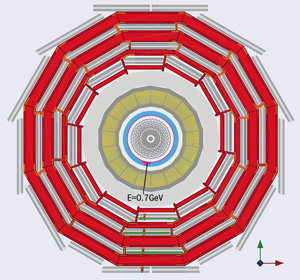

In July 2006, the huge segments of the CMS detector came together for the first time for the Magnet Test and Cosmic Challenge at the experiment’s site near Cessy in France. Within days the commissioning teams were recording data from cosmic rays bending in the 4 T magnetic field as they passed through a “slice” of the overall detector. This contained elements of all four main subdetectors: the particle tracker, the electromagnetic and hadron calorimeters and the muon system (figure 1). Vital steps remained, however, before CMS would be ready for particle collisions in the LHC. These tests in 2006 took place on the surface, 100 m or so above the LHC ring, using temporary cabling and a temporary electronics barrack.

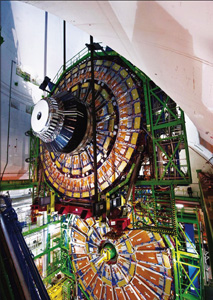

To prepare for the LHC start-up, the segments had to be lowered into the cavern one at a time, where the complete system – from services to data delivery – was to be installed, integrated and checked thoroughly in tests with cosmic rays. The first segment – one of the two forward hadron calorimeters (HF) – descended into the CMS cavern at the beginning of November 2006, and little more than a year later a large section of the detector was in its final position, recording cosmic-ray muons through the complete data chain and delivering events to collaborators as far afield as California and China.

This feat was an achievement not only in technical terms but also in human collaboration. The ultimate success of an experiment on the scale of CMS depends not only on building and assembling all the complex pieces but also on orchestrating an ensemble of people to ensure the detector’s eventual smooth operation. The in situ operations to collect cosmic rays typically involved crews of up to 60 people from the different subdetectors at CERN, as well as colleagues around the globe who put the distributed monitoring and analysis system through its paces. Working together over a relatively short period, the teams solved most problems in real time, as they arose.

Installation of the readout electronics for the various subdetector systems began in the cavern in early 2007, soon after the arrival of the first large segments. There were sufficient components fully installed by May for commissioning teams to begin a series of “global runs” – over several days at the end of each month – using cosmic rays to trigger the readout. Their aim was to increase functionality and scale with each run, as additional components became available. The complete detector should be ready by May 2008 for the ultimate test in its final configuration with the magnetic field at its nominal value.

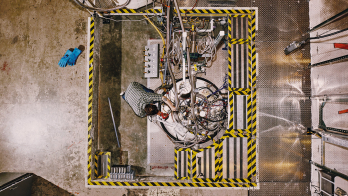

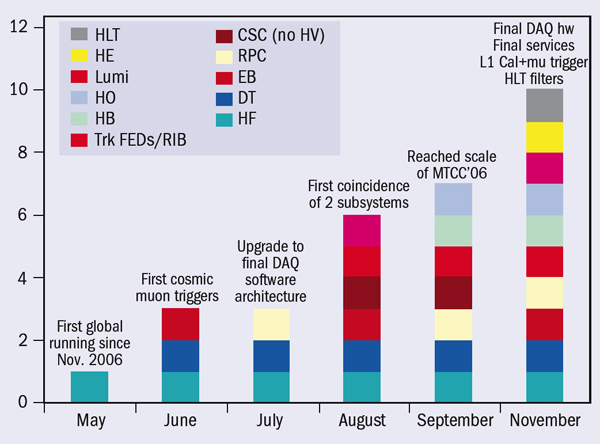

At the time of the first global run, on 24–30 May 2007, only one subdetector – half of the forward hadron calorimeter (HF+), which was the first piece to be lowered – was ready to participate (figure 2). It was accompanied by the global trigger, a reduced set of the final central data acquisition (DAQ) hardware installed in the service cavern, and data-quality monitoring (DQM) services to monitor the HF and the trigger. While this represented only a small fraction of the complete CMS detection system, the run marked a major step forward by recording the first data with CMS in its final position.

This initial global run confirmed the operation of the HF from the run-control system through to the production global triggers and delivery of data to the storage manager in the cavern. It demonstrated the successful concurrent operation of DQM tasks for the hadron calorimeter and the trigger, and real-time visualization of events by the event display. The chain of hardware and software processes governing the data transfer to the Tier-0 processing centre at CERN’s Computer Centre (the first level of the four-tier Grid-based data distribution system) already worked without problems from this early stage. Moreover, the central DAQ was able to run overnight without interruption.

The June global run saw the first real cosmic-muon triggers. By this time, the chambers made of drift tubes (DTs) for tracking muons through the central barrel of CMS were ready to participate. One forward hadron calorimeter (HF) plus a supermodule of the electromagnetic calorimeter (which at the time was being inserted in the CMS barrel) also joined in the run, which proved that the procedures to read out multiple detectors worked smoothly.

July’s run focused on upgrading the DAQ software to its final framework and brought in further subdetectors, in particular the resistive plate chambers (RPCs) in the muon barrel, which are designed specifically to provide additional redundancy for the muon triggers. The run saw both the successful move to the final DAQ software architecture and the integration of the RPC system.

The first coincidence between two subsystems was a major aim for the global run in August. For the first time, the run included parts of the barrel electromagnetic calorimeter (ECAL) with their final cabling, which were timed in to the DT trigger. The regular transfer of the data to the Tier-0 centre and some Tier-1s had now become routine.

By September, the commissioning team was able to exercise the full data chain from front-end readout for all types of subdetector through to Tier-1, Tier-2 and even Tier-3 centres, with data becoming available at the Tier-1 centre at Fermilab in less than an hour. This allowed collaboration members at Fermilab to take part in remote data-monitoring shifts via the Fermilab Remote Operations Centre. On the last day of the run, the team managed to insert a fraction of the readout modules for the tracker (working in emulation mode, given that the actual tracker was not yet installed) into the global run, together with readout for the muon DTs and RPCs – with the different muon detectors all contributing to the global muon trigger.

The scale of the operation was by now comparable to that achieved above ground with the Magnet Test and Cosmic Challenge in the summer of 2006. Moreover, because synchronizing the different components is more complex for cosmic-muon events, which arrive at random times, than for the well-timed collisions of the LHC, the ease with which the different triggers were synchronized during this run augured well for the future.

The global-run campaign resumed on 26 November. The principal change was to use a larger portion of the final DAQ hardware on the surface rather than the mini-DAQ system. By this time the participating detector subsystems included all of the HCAL (as well as the luminosity system), four barrel ECAL supermodules, the complete central barrel wheel of muon DTs, and four sectors of RPCs on two of the barrel wheels. For the first time, a significant fraction of the readout for the final detector was taking part. The high-level trigger software unpacked nearly all the data during the run, ran local reconstruction in the muon DTs and ECAL barrel, and created event streams enriched with muons pointing to the calorimeters. Prompt reconstruction took place on the Tier-0 processors and performed much of the reconstruction planned for LHC collisions.

To exercise the full data chain, the November run included a prototype tracking system, the “rod-in-a-box” (RIB), which contained six sensor modules of the strip tracking system. The experience of operating the RIB inside CMS provided a head start for operating the complete tracker once it is fully installed and cabled in early 2008 (see CMS installs the world’s largest silicon detector). The team also brought the final RPC trigger into operation, synchronizing it with the DT trigger and readout.

Installation in the CMS cavern continued apace, with the final segment – the last disc of the endcaps – lowered on 22 January 2008 (figure 5). The aim is to have sufficient cabling and services to read out more than half of CMS by March, including a large fraction of the tracker.

All seven Tier-1 centres, ranging from the US to Asia, have been involved since December. In April, this worldwide collaboration will be exercised further with continuous 24-hour running, during which collaboration members in the remote laboratories will participate in data monitoring and analysis via centres such as the Fermilab Remote Operations Centre, as well as in a new CMS Centre installed in the old PS control room at CERN. By that stage, it will be true to say that the sun never sets on CMS data processing, as the collaboration puts in the final effort for the ultimate global run, with the CMS wheels and discs closed and the magnet switched on, before the first proton–proton collisions in the LHC.

• The authors are deeply indebted to the tremendous effort of their collaborators in preparing the CMS experiment.