Even before the Large Hadron Collider has accelerated its first beams, various groups have begun to plan an upgrade scheduled for around the middle of the next decade.

CERN’s Large Hadron Collider (LHC), first seriously discussed more than 20 years ago, is scheduled to begin operating in 2007. The possibility of upgrading the machine is, however, already being seriously studied. By about 2014, the quadrupole magnets in the interaction regions will be nearing the end of their expected radiation lifetime, having absorbed much of the power of the debris from the collisions. There will also be a need to reduce the statistical errors in the experimental data, which will require higher collision rates and hence an increase in the intensity of the colliding beams – in other words, in the machine’s luminosity.

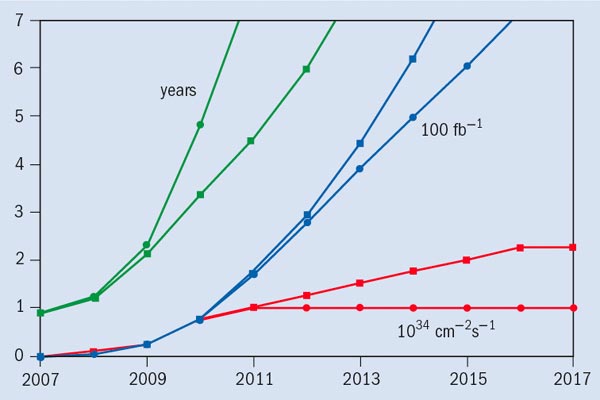

This twofold motivation for an upgrade in luminosity is illustrated in figure 1, which shows two possible scenarios compatible with the baseline design: one in which the luminosity stays constant from 2011 and one in which it reaches its ultimate value in 2016. An improved luminosity will also increase the physics potential, extending the reach of electroweak physics as well as the search for new modes in supersymmetric theories and new massive particles, some of which could be manifestations of extra dimensions.

The timescale for an upgrade of 10 years from now turns out to be just right for the development, prototyping and production of new superconducting magnets for the interaction regions and of other equipment, provided that an adequate R&D effort starts now. It is against this background that the European Community has supported the High-Energy High-Intensity Hadron-Beams (HHH) Networking Activity, which started in March 2004 as part of the Coordinated Accelerator Research in Europe (CARE) project. HHH has three objectives:

• to establish a roadmap for upgrading the European hadron accelerator infrastructure (at CERN with the LHC and also at Gesellschaft für Schwerionenforschung [GSI], the heavy-ion laboratory in Darmstadt);

• to assemble a community capable of sustaining the technical realization and scientific exploitation of these facilities;

• to propose the necessary accelerator R&D and experimental studies to achieve these goals.

The HHH activity is structured into three work packages. These are named Advancements in Accelerator Magnet Technology, Novel Methods for Accelerator Beam Instrumentation, and Accelerator Physics and Synchrotron Design.

The first workshop of the Accelerator Physics and Synchrotron Design work package, HHH-2004, was held at CERN on 8-11 November 2004. Entitled “Beam Dynamics in Future Hadron Colliders and Rapidly Cycling High-Intensity Synchrotrons”, it was attended by around 100 accelerator and particle physicists, mostly from Europe, but also from the US and Japan. Given the subjects covered and the range of participants, the workshop also reinforced vital links and co-operative approaches between high-energy and nuclear physicists and between accelerator designers and experimenters.

The first session provided overviews of the main goals. Robert Aymar, director-general of CERN, reviewed the priorities of the laboratory until 2010, mentioning among them the development of technical solutions for a luminosity upgrade for the LHC to be commissioned around 2012-2015. The upgrade would be based on a new linac, Linac 4, to provide more intense proton beams, together with new high-field quadrupole magnets in the LHC interaction regions to allow for smaller beam sizes at the collision-points – even with the higher-intensity circulating beams. It would also include rebuilt tracking detectors for the ATLAS and CMS experiments. Jos Engelen, CERN’s chief scientific officer, encouraged the audience to consider the upgrade of the LHC and its injector chain as a unique opportunity for extending the physics reach of the laboratory in the areas of neutrino studies and rare hadron decays, without forgetting the requirements of future neutrino factories.

For the GSI laboratory, the director Walter Henning described the status of the Facility for Antiproton and Ion Research project (FAIR), and its scientific goals for nuclear physics. He pointed to the need for wide international collaboration to launch this accelerator project and to complete the required R&D.

Further talks in the session looked in more detail at the issues involved in an upgrade of the LHC. Frank Zimmermann and Walter Scandale from CERN presented overviews of the accelerator physics and the technological challenges, addressing possible new insertion layouts and scenarios for upgrading the injector-complex. The role of the US community through the LHC Accelerator Research Program (LARP) was described by Steve Peggs from the Brookhaven National Laboratory, who proposed closer coordination with the HHH activity. Finally, Daniel Denegri of CERN and the CMS experiment addressed the challenges to be faced if the LHC detectors are to make full use of a substantial increase in luminosity. He also reviewed the benefits expected for the various physics studies.

The five subsequent sessions were devoted to technical presentations and panel discussions on more specific topics, ranging from the challenges of high-intensity beam dynamics and fast-cycling injectors, to the development of simulation software. A poster session with a wide range of contributions provided a welcome opportunity to find out about further details, and a summary session closed the workshop.

The luminosity challenge

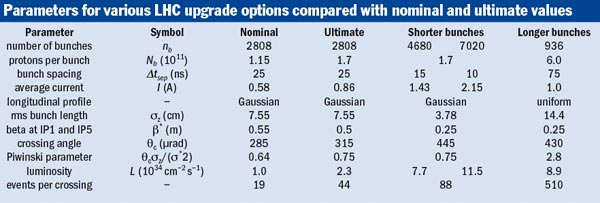

The basic proposal for the LHC upgrade is, after seven years of operation, to increase the luminosity by up to a factor of 10, from the current nominal value of 10³⁴ cm⁻² s⁻¹ to 10³⁵ cm⁻² s⁻¹. The table compares nominal and ultimate LHC parameters with those for three upgrade paths examined at the workshop.
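As a rough illustration of why a tenfold luminosity increase matters for statistical errors, the sketch below counts expected events as cross-section × luminosity × running time and takes the relative statistical error as 1/√N. The cross-section (1 fb) and the running time (10⁷ s) are hypothetical values chosen only to give round numbers; they do not come from the workshop, and the function names are illustrative.

```python
import math

# Luminosities quoted in the text, in cm^-2 s^-1.
NOMINAL_LUMI = 1e34
UPGRADE_LUMI = 1e35

# Hypothetical inputs, chosen for illustration only (not from the article).
CROSS_SECTION_CM2 = 1e-39   # 1 femtobarn expressed in cm^2
RUN_SECONDS = 1e7           # an assumed amount of running time


def expected_events(cross_section_cm2, luminosity, seconds):
    """Expected number of events: N = sigma * L * t."""
    return cross_section_cm2 * luminosity * seconds


def relative_stat_error(n_events):
    """Relative statistical (Poisson) error on a count of n_events."""
    return 1.0 / math.sqrt(n_events)


n_nominal = expected_events(CROSS_SECTION_CM2, NOMINAL_LUMI, RUN_SECONDS)
n_upgrade = expected_events(CROSS_SECTION_CM2, UPGRADE_LUMI, RUN_SECONDS)

err_nominal = relative_stat_error(n_nominal)
err_upgrade = relative_stat_error(n_upgrade)

# The error shrinks by the square root of the luminosity ratio.
improvement = err_nominal / err_upgrade

print(f"nominal: {n_nominal:.0f} events, error {err_nominal:.1%}")
print(f"upgrade: {n_upgrade:.0f} events, error {err_upgrade:.1%}")
print(f"error reduced by a factor of {improvement:.2f}")
```

Under these assumptions, the same running time yields ten times more events, while the statistical error falls only by √10, or about a factor of three.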

The upgrade currently under discussion will include building essentially new interaction regions, with stronger or larger-aperture “low-beta” quadrupoles in order to reduce the spot size at the collision-point and to provide space for greater crossing angles. Moderate modifications of several subsystems, such as the beam dump, machine protection or collimation, will also be required because of the higher beam current. The choice between possible layouts for the new interaction regions is closely linked to both magnet design and beam dynamics; different approaches could accommodate smaller or larger crossing angles, possibly in combination with an electromagnetic compensation of long-range beam-beam collisions or “crab” cavities (as described below), respectively. A more challenging possibility also envisions the upgrade of the LHC injector chain, employing concepts similar to those being developed for the FAIR project at GSI.

The workshop addressed a broad range of accelerator-physics issues. These included the generation of long and short bunches, the effects of space charge and the electron cloud, beam-beam effects, vacuum stability and conventional beam instabilities.

A key outcome is the elimination of the “superbunch” scheme for the LHC upgrade, in which each proton beam is concentrated into only one or a few long bunches, with much larger local charge density. Speakers at the workshop underlined that this option would pose unsolvable problems for the detectors, the beam dump and the collimator system.

For the other upgrade schemes considered, straightforward methods exist to decrease or increase the bunch length in the LHC by a factor of two or more, possibly with a larger bunch intensity. Joachim Tuckmantel and Heiko Damerau of CERN proposed adding conventional radio-frequency (RF) systems operating at higher-harmonic frequencies to vary the bunch length and in some cases also the longitudinal emittance of the beam, while Ken Takayama promoted a novel scheme based on induction acceleration.

Experiments at CERN and GSI, reported by Giuliano Franchetti of GSI, have clarified mechanisms of beam loss and beam-halo generation, both of which occur as a result of synchrotron motion and space-charge effects due to the natural electrical repulsion between the beam particles. These mechanisms have been confirmed in computer simulations. Studies of the beam-beam interaction – the electromagnetic force exerted on a particle in one beam by all the particles in the other beam – are in progress for the Tevatron at Fermilab, the Relativistic Heavy Ion Collider (RHIC) at Brookhaven, and the LHC.

Tanaji Sen of Fermilab showed that sophisticated simulations can reproduce the beam lifetimes observed in the Tevatron, and Werner Herr of CERN presented self-consistent 3D simulations of beam-beam interactions in the LHC. Kazuhito Ohmi of KEK speculated on the origin of the beam-beam limit in hadron colliders – the current threshold above which the size of the colliding beams increases with increasing beam intensity. RF “crab” cavities tilt the particle bunches during the collision process, effectively providing head-on collisions despite the crossing angle of the bunch centroids. If the limit in the LHC arises from diffusion related to the crossing angle, such cavities could raise the luminosity beyond the purely geometrical gain from making the beams collide head-on.
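The purely geometrical gain from head-on collisions can be estimated with the standard textbook reduction factor for Gaussian bunches crossing in one plane, R = 1/√(1 + φ²), where φ = θσz/(2σ*) is the Piwinski angle. The sketch below is a minimal illustration, not a calculation presented at the workshop: it ignores the hourglass effect, and the parameter values are approximate nominal LHC design figures assumed here, not taken from the article.

```python
import math


def piwinski_angle(crossing_angle_rad, sigma_z_m, sigma_star_m):
    """Piwinski angle: phi = theta * sigma_z / (2 * sigma_star)."""
    return crossing_angle_rad * sigma_z_m / (2.0 * sigma_star_m)


def geometric_reduction(crossing_angle_rad, sigma_z_m, sigma_star_m):
    """Luminosity reduction factor R = 1 / sqrt(1 + phi^2).

    Assumes Gaussian bunches, a crossing angle in one plane only,
    and no hourglass effect.
    """
    phi = piwinski_angle(crossing_angle_rad, sigma_z_m, sigma_star_m)
    return 1.0 / math.sqrt(1.0 + phi * phi)


# Approximate nominal LHC values, assumed for illustration only.
CROSSING_ANGLE_RAD = 285e-6   # full crossing angle
SIGMA_Z_M = 0.0755            # r.m.s. bunch length
SIGMA_STAR_M = 16.7e-6        # r.m.s. transverse beam size at the collision point

reduction = geometric_reduction(CROSSING_ANGLE_RAD, SIGMA_Z_M, SIGMA_STAR_M)

# Ideal crab cavities restore head-on overlap, so the gain is 1 / R.
crab_gain = 1.0 / reduction

print(f"geometric reduction factor R = {reduction:.3f}")
print(f"geometrical gain from head-on collisions = {crab_gain:.3f}")
```

With these assumed values the geometrical loss is modest, but it grows quickly as the spot size shrinks or the crossing angle increases, which is why crab cavities become more attractive for the upgrade layouts with larger crossing angles.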

Another effect to consider in the LHC is the electron cloud, created initially when synchrotron radiation from the protons releases photoelectrons at the beam-screen wall. The photoelectrons are pulled toward the positively charged proton bunch and in turn generate secondary electrons when they hit the opposite wall. Jie Wei of Brookhaven presented observations made at RHIC, which demonstrate that the electron cloud becomes more severe for shorter intervals between bunches. This may complicate an LHC upgrade based on shorter bunch-spacing. Oswald Gröbner of CERN also pointed out that secondary ionization of the residual gas by electrons from the electron cloud could compromise the stability of the vacuum.

The wake field generated by an electron cloud requires a modified description compared with a conventional wake field from a vacuum chamber, as Giovanni Rumolo of GSI discussed. His simulations for FAIR suggest that instabilities in a barrier RF system, with a flat bunch profile, differ qualitatively from those for a standard Gaussian bunch with sinusoidal RF. Elias Métral of CERN surveyed conventional beam instabilities and presented a number of countermeasures.

The simulation challenge

In the sessions on simulation tools, a combination of overview talks and panel discussions revisited existing tools and determined the future direction for software codes in the different areas of simulation. The tools available range from well-established commercial impedance calculations to the rapidly evolving codes being developed to simulate the effects of the electron cloud. Benchmarking of codes to increase confidence in their predictive power is essential. Examples discussed included beam-beam simulations and experiments at the Tevatron, RHIC and the Super Proton Synchrotron at CERN; impedance calculations and bench measurements (e.g. for the LHC kicker magnets and collimators); observed and predicted impedance effects (at the Accelerator Test Facility at Brookhaven, DAFNE at Frascati and the Stanford Linear Collider at SLAC); single-particle optics calculations for HERA at DESY, SPEAR-3 at the Stanford Linear Accelerator Center, and the Advanced Light Source at Berkeley; and electron-cloud simulations.

Giulia Bellodi of the Rutherford Appleton Laboratory, Miguel Furman of Lawrence Berkeley National Laboratory, and Daniel Schulte of CERN suggested creating an experimental data bank and a set of standard models, for example for vacuum-chamber surface properties, which would ease future comparisons of different codes. New computing issues, such as parallelization, modern algorithms, numerical collisions, round-off errors and dispersion on a computing Grid were also discussed.

The simulation codes being developed should support all stages of an accelerator project that has shifting requirements; communication with other specialized codes is also often required. The workshop therefore recommended that codes should have toolkits and a modular structure as well as a standard input format, for example in the style of the Methodical Accelerator Design (MAD) software developed at CERN.

Frank Schmidt and Oliver Brüning of CERN stressed that the MAD-X program features a modular structure and a new style of code management. For most applications, complete, self-consistent, 3D descriptions of systems have to co-exist with the tendency towards fast, simplified, few-parameter models – conflicting aspects that can in fact be reconciled by a modular code structure.

The workshop established a list of priorities and future tasks for the various simulation needs and, in view of the rapidly growing computing power available, participants sketched the prospect of an ultimate universal code, as illustrated in figure 2.

• The HHH Networking Activity is supported by the European Community-Research Infrastructure Activity under the European Union’s Sixth Framework Programme “Structuring the European Research Area” (CARE, contract number RII3-CT-2003-506395).

Further reading

http://care-hhh.web.cern.ch/CARE-HHH/HHH-2004.