The first Sparks! Serendipity Forum at CERN will bring together world experts in artificial intelligence in a spirit of multidisciplinary collaboration. Mark Rayner spoke to some of the participants in the run-up to the September event.

Field lines arc through the air. By chance, a cosmic ray knocks an electron off a molecule. It hurtles away, crashing into other molecules and multiplying the effect. The temperature rises, liberating a new supply of electrons. A spark lights up the dark.

The absence of causal inference in practical machine learning touches on every aspect of AI research, application, ethics and policy

Vivienne Ming is a theoretical neuroscientist and a serial AI entrepreneur

This is an excellent metaphor for the Sparks! Serendipity Forum – a new annual event at CERN designed to encourage interdisciplinary collaborations between experts on key scientific issues of the day. The first edition, which will take place from 17 to 18 September, will focus on artificial intelligence (AI). Fifty leading thinkers will explore the future of AI in topical groups, with the outcomes of their exchanges to be written up and published in the journal Machine Learning: Science and Technology. The forum reflects the growing use of machine-learning techniques in particle physics and emphasises the importance that CERN and the wider community place on collaborating with diverse technological sectors. Such interactions are essential to the long-term success of the field.

AI is orders of magnitude faster than traditional numerical simulations. On the other side of the coin, simulations are being used to train AI in domains such as robotics where real data is very scarce

Anima Anandkumar is Bren professor at Caltech and director of machine learning research at NVIDIA

The likelihood of sparks flying depends on the weather. To take the temperature, CERN Courier spoke to a sample of the Sparks! participants to preview themes for the September event.

2020 revealed unexpectedly fragile technological and socio-cultural infrastructures. How we locate our conversations and research about AI in those contexts feels as important as the research itself

Genevieve Bell is director of the School of Cybernetics at the Australian National University and vice president at Intel

Back to the future

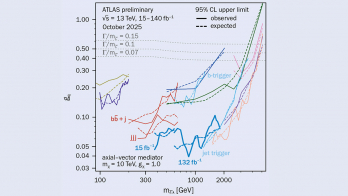

In the 1980s, AI research was dominated by code that emulated logical reasoning. In the 1990s and 2000s, attention turned to softening its strong syllogisms into probabilistic reasoning. Huge strides forward in the past decade have rejected logical reasoning, however, instead capitalising on computing power by letting layer upon layer of artificial neurons discern the relationships inherent in vast data sets. Such “deep learning” has been transformative, fuelling innumerable innovations, from self-driving cars to searches for exotica at the LHC (see Hunting anomalies with an AI trigger). But many Sparks! participants think that the time has come to reintegrate causal logic into AI.

Geneva is the home not only of CERN but also of the UN negotiations on lethal autonomous weapons. The major powers must put the evil genie back in the bottle before it’s too late

Stuart Russell is professor of computer science at the University of California, Berkeley and coauthor of the seminal text on AI

“A purely predictive system, such as the current machine learning that we have, that lacks a notion of causality, seems to be very severely limited in its ability to simulate the way that people think,” says Nobel-prize-winning cognitive psychologist Daniel Kahneman. “Current AI is built to solve one specific task, which usually does not include reasoning about that task,” agrees AAAI president-elect Francesca Rossi. “Leveraging what we know about how people reason and behave can help build more robust, adaptable and generalisable AI – and also AI that can support humans in making better decisions.”

AI is converging on forms of intelligence that are useful but very likely not human-like

Tomaso Poggio is a cofounder of computational neuroscience and Eugene McDermott professor at MIT

Google’s Nyalleng Moorosi identifies another weakness of deep-learning models that are trained with imperfect data: whether AI is deciding who deserves a loan or whether an event resembles physics beyond the Standard Model, its decisions are only as good as its training. “What we call the ground truth is actually a system that is full of errors,” she says.

We always had privacy violation, we had people being blamed falsely for crimes they didn’t do, we had mis-diagnostics, we also had false news, but what AI has done is amplify all this, and make it bigger

Nyalleng Moorosi is a research software engineer at Google and a founding member of Deep Learning Indaba

Furthermore, says influential computational neuroscientist Tomaso Poggio, we don’t yet understand the statistical behaviour of deep-learning algorithms with mathematical precision. “There is a risk in trying to understand things like particle physics using tools we don’t really understand,” he explains, also citing attempts to use artificial neural networks to model organic neural networks. “It seems a very ironic situation, and something that is not very scientific.”

This idea of partnership, that worries me. It looks to me like a very unstable equilibrium. If the AI is good enough to help the person, then pretty soon it will not need the person

Daniel Kahneman is a renowned cognitive psychologist and a winner of the 2002 Nobel Prize in Economics

Stuart Russell, one of the world’s most respected voices on AI, echoes Poggio’s concerns, and also calls for a greater focus on controlled experimentation in AI research itself. “Instead of trying to compete between DeepMind and OpenAI on who can do the biggest demo, let’s try to answer scientific questions,” he says. “Let’s work the way scientists work.”

Good or bad?

Though most Sparks! participants firmly believe that AI benefits humanity, ethical concerns are uppermost in their minds. From social-media algorithms to autonomous weapons, current AI overwhelmingly lacks compassion and moral reasoning, is inflexible and unaware of its fallibility, and cannot explain its decisions. Fairness, inclusivity, accountability, social cohesion, security and international law are all affected, deepening links between the ethical responsibilities of individuals, multinational corporations and governments. “This is where I appeal to the human-rights framework,” says philosopher S Matthew Liao. “There’s a basic minimum that we need to make sure everyone has access to. If we start from there, a lot of these problems become more tractable.”

We need to understand ethical principles, rather than just list them, because then there’s a worry that we’re just doing ethics washing – they sound good but they don’t have any bite

S Matthew Liao is a philosopher and the director of the Center for Bioethics at New York University

Far-term ethical considerations will be even more profound if AI develops human-level intelligence. When Sparks! participants were invited to put a confidence interval on when they expect human-level AI to emerge, answers ranged from [2050, 2100] at 90% confidence to [2040, ∞] at 99% confidence. Other participants said simply “in 100 years” or noted that this is “delightfully the wrong question” as it’s too human-centric. But by any estimation, talking about AI cannot wait.

Only a multi-stakeholder and multi-disciplinary approach can build an ecosystem of trust around AI. Education, cultural change, diversity and governance are equally as important as making AI explainable, robust and transparent

Francesca Rossi co-leads the World Economic Forum Council on AI for humanity and is IBM AI ethics global leader and the president-elect of AAAI

“With Sparks!, we plan to give a nudge to serendipity in interdisciplinary science by inviting experts from a range of fields to share their knowledge, their visions and their concerns for an area of common interest, first with each other, and then with the public,” says Joachim Mnich, CERN’s director for research and computing. “For the first edition of Sparks!, we’ve chosen the theme of AI, which is as important in particle physics as it is in society at large. Sparks! is a unique experiment in interdisciplinarity, which I hope will inspire continued innovative uses of AI in high-energy physics. I invite the whole community to get involved in the public event on 18 September.”