Beyond the setting of new records, precise knowledge of the luminosity at particle colliders is vital for future physics analyses, explains Georgios K Krintiras.

Year after year, particle physicists celebrate the luminosity records established at accelerators around the world. On 15 June 2020, for example, a new world record for the highest luminosity at a particle collider was claimed by SuperKEKB at the KEK laboratory in Tsukuba, Japan. Electron–positron collisions at the 3 km-circumference machine had reached an instantaneous luminosity of 2.22 × 10³⁴ cm⁻² s⁻¹ – surpassing the 27 km-circumference LHC’s record of 2.14 × 10³⁴ cm⁻² s⁻¹ set with proton–proton collisions in 2018. Within a year, SuperKEKB had celebrated a new record of 3.1 × 10³⁴ cm⁻² s⁻¹ (CERN Courier September/October 2021 p8).

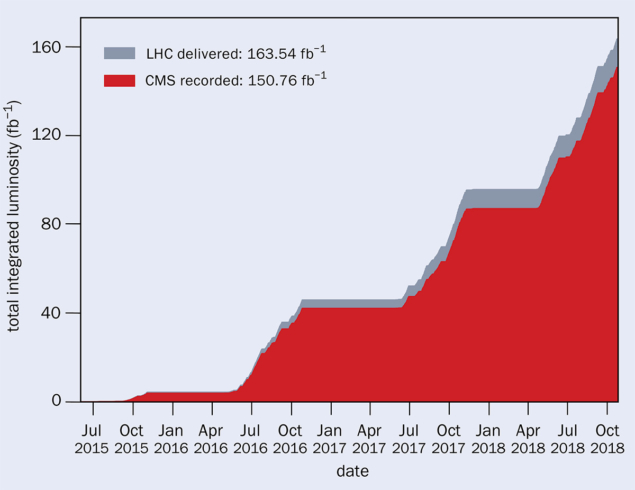

Beyond the setting of new records, precise knowledge of the luminosity at particle colliders is vital for physics analyses. Luminosity is our “standard candle” in determining how many particles can be squeezed through a given space (per square centimetre) at a given time (per second); the more particles we can squeeze into a given space, the more likely they are to collide, and the quicker the experiments fill up their tapes with data. Multiplied by the cross section, the luminosity gives the rate at which physicists can expect a given process to happen, which is vital for searches for new phenomena and precision measurements alike. Luminosity milestones therefore mark the dawn of new eras, like the B-hadron or top-quark factories at SuperKEKB and LHC (see “High-energy data” figure). But what ensures we didn’t make an accidental blunder in calculating these luminosity record values?
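As a rough illustration of the relation between luminosity, cross section and event rate, the short Python sketch below multiplies the LHC peak luminosity quoted above by a cross section. The 50 pb cross section is a hypothetical placeholder chosen only to make the arithmetic concrete, and the function names are illustrative.

```python
# Event rate R = L * sigma, and event count N = L_int * sigma.
CM2_PER_BARN = 1e-24  # 1 barn = 1e-24 cm^2


def event_rate_hz(inst_lumi_cm2s, cross_section_pb):
    """Events per second for an instantaneous luminosity in cm^-2 s^-1."""
    sigma_cm2 = cross_section_pb * 1e-12 * CM2_PER_BARN  # pb -> cm^2
    return inst_lumi_cm2s * sigma_cm2


def expected_events(int_lumi_fb, cross_section_pb):
    """Events for an integrated luminosity in fb^-1 (1 pb = 1000 fb)."""
    return int_lumi_fb * cross_section_pb * 1000.0


lhc_peak = 2.14e34  # cm^-2 s^-1, the 2018 LHC record quoted in the text
sigma_pb = 50.0     # hypothetical cross section, for illustration only

rate = event_rate_hz(lhc_peak, sigma_pb)
events = expected_events(140.0, sigma_pb)  # 140 fb^-1 as an example data set
print(f"rate: {rate:.2f} events per second")
print(f"events in 140 fb^-1: {events:.2e}")
```

Any fractional error on the luminosity carries over one-to-one into the predicted rate, which is why the absolute value matters as much as the record itself.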

Physics focus

Physicists working at the precision frontier need to infer with percent-or-less accuracy how many collisions are needed to reach a certain event rate. Even though we can produce particles at an unprecedented event rate at the LHC, their cross sections are either too small (as in the case of Higgs-boson production processes) or impacted too much by theoretical uncertainty (for example in the case of Z-boson and top-quark production processes) to enable us to establish the primary event rate with a high level of confidence. The solution comes down to extracting one universal number: the absolute luminosity.

The fundamental difference between quantum electrodynamics (QED) and chromodynamics (QCD) influences how luminosity is measured at different types of colliders. On the one hand, QED provides a straightforward path to high precision because the absolute rate of simple final states is calculable to very high accuracy. On the other, the complexity in QCD calculations shapes the luminosity determination at hadron colliders. In principle, the luminosity can be inferred by measuring the total number of interactions occurring in the experiment (i.e. the inelastic cross section) and normalising to the theoretical QCD prediction. This technique was used at the Sp̄pS and Tevatron colliders. A second technique, proposed by Simon van der Meer at the ISR (and generalised by Carlo Rubbia for the pp̄ case), could not be applied to such single-ring colliders. However, this van der Meer-scan method is a natural choice at the double-ring RHIC and LHC colliders, and is described in the following.

Absolute calibration

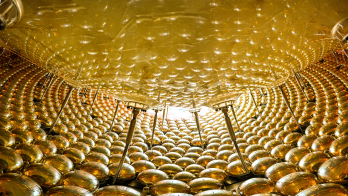

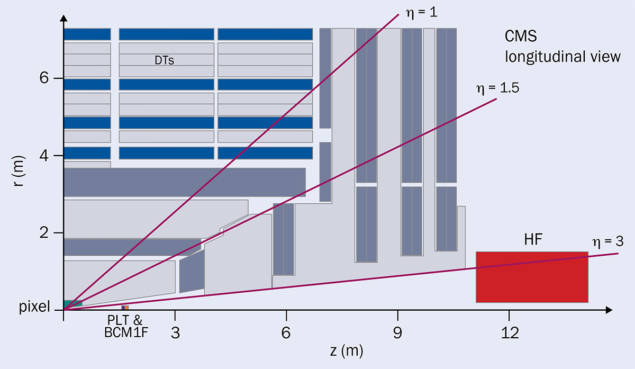

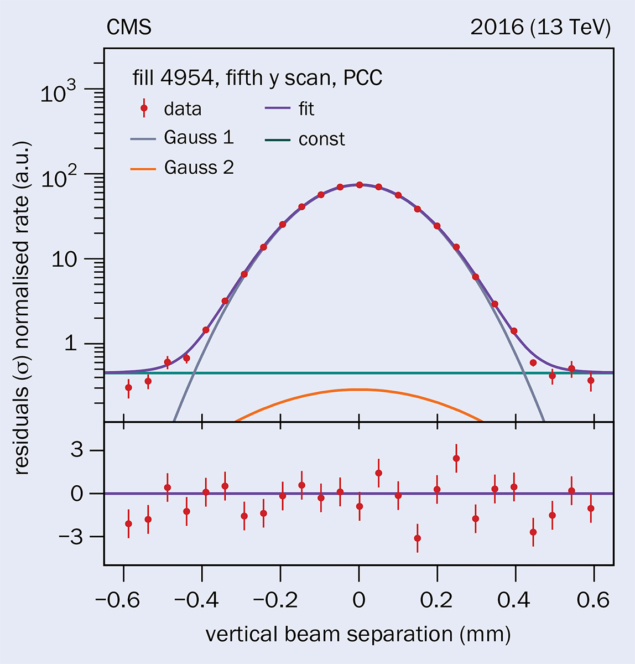

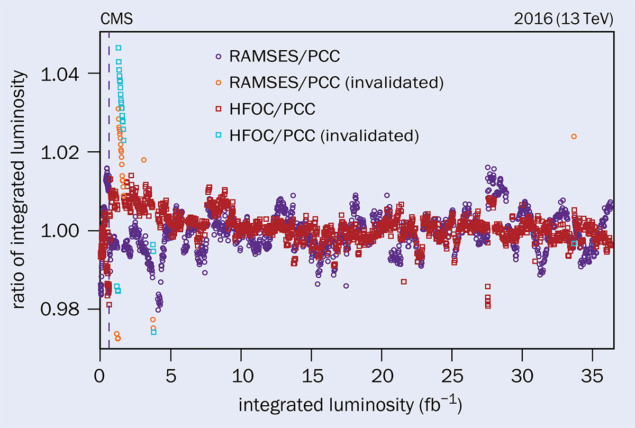

The LHC-experiment collaborations perform a precise luminosity inference from data (“absolute calibration”) by relating the collision rate recorded by the subdetectors to the luminosity of the beams. With the implementation of multiple collisions per bunch crossing (“pileup”) and intense collision-induced radiation, which acts as a background source, dedicated luminosity-sensitive detector systems called luminometers also had to be developed (see “Luminometers” figure). To maximise the precision of the absolute calibration, beams with large transverse dimensions and relatively low intensities are delivered by the LHC operators during a dedicated machine preparatory session, usually held once a year and lasting for several hours. During these unconventional sessions, called van der Meer beam-separation scans, the beams are carefully displaced with respect to each other in discrete steps, horizontally and vertically, while observing the collision rate in the luminometers (see “Closing in” figure). This allows the effective width and height of the two-dimensional interaction region, and thus the beam’s transverse size, to be measured. Sources of systematic uncertainty are either common to all experiments and are estimated in situ, for example residual differences between the measured beam positions and those provided by the operational settings of the LHC magnets, or depend on the scatter between luminometers. A major challenge with this technique is therefore to ensure that the obtained absolute calibration as extracted under the specialised van der Meer conditions is still valid when the LHC operates at nominal pileup (see “Stability shines” figure).
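The core of a van der Meer scan can be sketched in a few lines of Python. This is a minimal toy under stated assumptions, not the experiments' analysis: the scan curve is a synthetic Gaussian, the beam parameters (0.13 mm effective width, 8.5 × 10¹⁰ protons per bunch) are hypothetical, and the corrections discussed above are ignored. The effective width in each plane follows from the area under the rate-versus-separation curve divided by the head-on rate, and the luminosity of one colliding bunch pair is then N₁N₂f / (2πΣₓΣᵧ), with f the revolution frequency.

```python
import math


def trapezoid(xs, ys):
    """Trapezoidal integral of sampled points (xs must be increasing)."""
    return sum(0.5 * (ys[i] + ys[i + 1]) * (xs[i + 1] - xs[i])
               for i in range(len(xs) - 1))


def effective_width(separations, rates):
    """Sigma = integral of R over separation / (sqrt(2*pi) * R at head-on).

    Assumes the largest sampled rate is the head-on (zero-separation) rate.
    """
    return trapezoid(separations, rates) / (math.sqrt(2 * math.pi) * max(rates))


def bunch_pair_luminosity(n1, n2, f_rev_hz, sigma_x_cm, sigma_y_cm):
    """L = n1 * n2 * f / (2*pi*Sigma_x*Sigma_y), in cm^-2 s^-1."""
    return n1 * n2 * f_rev_hz / (2 * math.pi * sigma_x_cm * sigma_y_cm)


# Synthetic scan: 25 steps of 0.05 mm around head-on (hypothetical values).
true_sigma_mm = 0.13
separations_mm = [(i - 12) * 0.05 for i in range(25)]
rates = [math.exp(-0.5 * (d / true_sigma_mm) ** 2) for d in separations_mm]

sigma_est_mm = effective_width(separations_mm, rates)
sigma_cm = sigma_est_mm * 0.1  # mm -> cm; same width assumed in both planes

# 11245 Hz is the LHC revolution frequency; bunch populations are hypothetical.
lumi = bunch_pair_luminosity(8.5e10, 8.5e10, 11245.0, sigma_cm, sigma_cm)
print(f"estimated width: {sigma_est_mm:.4f} mm")
print(f"bunch-pair luminosity: {lumi:.3e} cm^-2 s^-1")
```

Dividing the luminosity by the head-on rate seen in a luminometer gives that detector's calibration constant, which is what must then be shown to hold at nominal pileup.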

Stepwise approach

Using such a stepwise approach, the CMS collaboration obtained a total systematic uncertainty of 1.2% in the luminosity estimate (36.3 fb⁻¹) of proton–proton collisions in 2016 – one of the most precise luminosity measurements ever made at bunched-beam hadron colliders. Recently, taking into account correlations between the years 2015–2018, CMS further improved on its preliminary estimate for the proton–proton luminosity at higher collision energies of 13 TeV. The full Run-2 data sample corresponds to a cumulative (“integrated”) luminosity of 140 fb⁻¹ with a total uncertainty of 1.6%, which is comparable to the preliminary estimate from the ATLAS experiment.
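Why correlations between years matter can be seen in a simplified sketch. It assumes that each year's uncertainty splits into a part fully correlated across years, which adds linearly, and a part uncorrelated between years, which adds in quadrature. The actual combinations treat many sources with individual correlation assumptions, and all per-year numbers below are hypothetical placeholders rather than published values.

```python
import math


def combined_relative_uncertainty(lumis, uncorrelated, correlated):
    """Relative uncertainty on the summed luminosity of several data sets.

    lumis:        integrated luminosity of each data set (any common unit)
    uncorrelated: per-set relative uncertainties, independent between sets
    correlated:   per-set relative uncertainties, fully correlated across sets
    """
    total = sum(lumis)
    # Fully correlated contributions add linearly across data sets.
    corr = sum(l * c for l, c in zip(lumis, correlated))
    # Uncorrelated contributions add in quadrature.
    uncorr = math.sqrt(sum((l * u) ** 2 for l, u in zip(lumis, uncorrelated)))
    return math.hypot(corr, uncorr) / total


# Hypothetical three-year example (fb^-1 and relative uncertainties).
lumis = [30.0, 40.0, 60.0]
uncorrelated = [0.010, 0.020, 0.015]
correlated = [0.006, 0.009, 0.020]

combined = combined_relative_uncertainty(lumis, uncorrelated, correlated)
print(f"combined relative uncertainty: {100 * combined:.2f}%")
```

Because the uncorrelated parts partly average out, the combined sample can end up with a smaller relative uncertainty than the least precise individual year.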

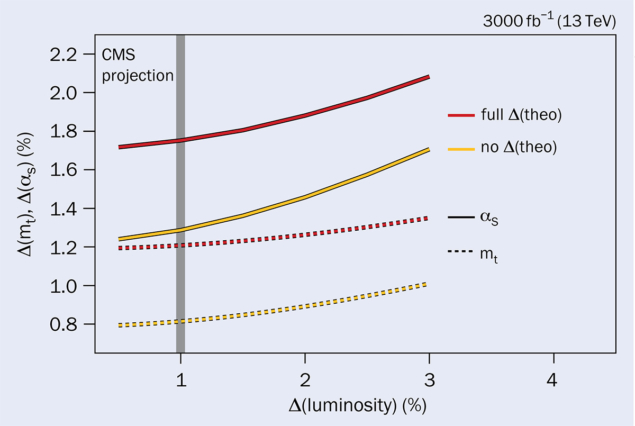

In the coming years, in particular when the High-Luminosity LHC (HL-LHC) comes online, a similarly precise luminosity calibration will become increasingly important as the LHC pushes the precision frontier further. Under those conditions, which are expected to produce 3000 fb⁻¹ of proton–proton data by the end of LHC operations in the late 2030s (see “Precision frontier” figure), the impact from (at least some of) the sources of uncertainty is expected to be larger due to the expected high pileup. However, they can be mitigated using techniques that were already established in Run 2 or are currently being deployed. Overall, the strategy for the HL-LHC should combine three different elements: maintenance and upgrades of existing detectors; development of new detectors; and adding dedicated readouts to other planned subdetectors for luminosity and beam monitoring data. This will allow us to meet the tight luminosity performance target (≤ 1%) while maintaining a good diversity of luminometers.
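To see what a 1% target buys, the Python sketch below propagates the luminosity uncertainty into a measured cross section, σ = N / (ε · L_int), adding the statistical and luminosity contributions in quadrature. The event count and selection efficiency are hypothetical, other systematic uncertainties are deliberately left out, and the function name is illustrative.

```python
import math


def cross_section_with_uncertainty(n_events, efficiency, int_lumi_fb, rel_lumi_unc):
    """Return (sigma in fb, relative statistical unc., relative total unc.).

    Only the Poisson statistical term and the luminosity term are included.
    """
    sigma_fb = n_events / (efficiency * int_lumi_fb)
    rel_stat = 1.0 / math.sqrt(n_events)
    rel_total = math.hypot(rel_stat, rel_lumi_unc)
    return sigma_fb, rel_stat, rel_total


n_events = 1_000_000  # hypothetical number of selected events
efficiency = 0.2      # hypothetical selection efficiency

# Same hypothetical measurement with the Run-2 uncertainty and the HL-LHC target.
sigma_fb, rel_stat, total_run2 = cross_section_with_uncertainty(
    n_events, efficiency, 140.0, 0.016)
_, _, total_target = cross_section_with_uncertainty(
    n_events, efficiency, 140.0, 0.010)

print(f"cross section: {sigma_fb:.0f} fb")
print(f"statistical: {100 * rel_stat:.2f}%")
print(f"total with 1.6% luminosity uncertainty: {100 * total_run2:.2f}%")
print(f"total with 1.0% luminosity uncertainty: {100 * total_target:.2f}%")
```

Once the event sample is large, the statistical term becomes negligible and the luminosity uncertainty sets the floor on the precision of the measurement.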

Given that accurate knowledge of luminosity is a key ingredient of most physics analyses, experiments also release precision estimates for specialised data sets, for example those using proton–proton collisions at lower centre-of-mass energies or nuclear collisions at different per-nucleon centre-of-mass energies, as needed by ALICE as well as by the ATLAS, CMS and LHCb experiments. On top of the van der Meer method, the LHCb collaboration uniquely employs a “beam-gas imaging” technique in which vertices of interactions between beam particles and gas nuclei in the beam vacuum are used to measure the transverse size of the beams without the need to displace them. In all cases, and despite the fact that the experiments are located at different interaction points, their luminosity-related data are used in combination with input from the LHC beam instrumentation. Close collaboration among the experiments and LHC operators is therefore a key prerequisite for precise luminosity determination.

Protons versus electrons

Contrary to the approach at hadron colliders, the operation of the SuperKEKB accelerator with electron–positron collisions allows for an even more precise luminosity determination. Following well-known QED processes, the Belle II experiment recently reported an almost unprecedented precision of 0.7% for data collected during April–July 2018. Though electrons and positrons conceptually give the SuperKEKB team a slightly easier task, its new record for the highest luminosity set at a collider is thus well established.

SuperKEKB’s record was achieved thanks to a novel “crabbed waist” scheme, originally proposed by accelerator physicist Pantaleo Raimondi. In the coming years this will enable the luminosity of SuperKEKB to be increased by a factor of almost 30 to reach its design target of 8 × 10³⁵ cm⁻² s⁻¹. The crabbed waist scheme, which works by squeezing the vertical height of the beams at the interaction point, is also envisaged for the proposed Future Circular Collider (FCC-ee) at CERN. It differs from the “crab-crossing” technology, based on special radiofrequency cavities, which is now being implemented at CERN for the high-luminosity phase of the LHC. While the LHC has passed the luminosity crown to SuperKEKB, taken together, novel techniques and the precise evaluation of their outcome continue to push forward both the accelerator and related physics frontiers.

Further reading

ALICE Collaboration 2021 ALICE-PUBLIC-2021-001.

ATLAS Collaboration 2019 ATLAS-CONF-2019-021.

Belle II Collaboration 2019 arXiv:1910.05365.

CMS Collaboration 2021 arXiv:2104.01927.

CMS Collaboration 2021 CERN-LHCC-2021-008.

P Grafström and W Kozanecki 2015 Prog. Part. Nucl. Phys. 81 97.

LHCb Collaboration 2014 arXiv:1410.0149.