Plenary talks during the opening of the open symposium of the European Strategy for Particle Physics highlighted the outstanding questions in the field, and the accelerator, detector and computing technologies necessary to tackle them.

The success of the Standard Model (SM) in describing elementary particles and their interactions is beyond doubt. Yet, as an all-encompassing theory of nature, it falls short. Why are the fermions arranged into three neat families? Why do neutrinos have a vanishingly small but non-zero mass? Why does the Higgs boson discovered fit the simplest “toy model” of itself? And what lies beneath the SM’s 26 free parameters? Similarly profound questions persist in the universe at large: the mechanism of inflation; the matter–antimatter asymmetry; and the nature of dark energy and dark matter.

Surveying outstanding questions in particle physics during the opening session of the update of the European Strategy for Particle Physics (ESPP) on Monday, theorist Pilar Hernández of the University of Valencia discussed the SM’s unique weirdness. Quoting Newton’s assertion “that truth is ever to be found in simplicity, and not in the multiplicity and confusion of things”, she argued that a deeper theory is needed to solve the model’s many puzzles. “At some energy scale the SM stops making sense, so there is a cut off,” she stated. “The question is where?”

This known unknown has occupied theorists ever since the SM came into existence. If it is assumed that the natural cut-off is the Planck scale, where gravity becomes relevant to the quantum world and which lies some 15 orders of magnitude above the energies at the LHC, then fine tuning is necessary to explain why the Higgs boson (which generates mass via its interactions) is so light. Traditional theoretical solutions to this hierarchy problem – such as supersymmetry or large extra dimensions – imply the existence of new phenomena at scales higher than the mass of the Higgs boson. While initial results from the LHC severely constrain the most natural parameter spaces, the 10–100 TeV region is still an interesting scale to explore, said Hernández. At the same time, she continued, there is a shift to more “bottom-up, rather than top-down”, approaches to beyond-SM (BSM) physics. “Particle physics could be heading to crisis or revolution. New BSM avenues focus on solving open problems such as the flavour puzzle, the origin of neutrino masses and the baryon asymmetry at lower scales.”

Introducing a “motivational toolkit” to plough the new territories ahead, Hernández named targets such as axion-like and long-lived particles, and the search for connections between the SM’s various puzzles. She noted in particular that 23 of the 26 free parameters of the SM are related in one way or another to the Higgs boson. “If we are looking for the suspect that could be hiding some secret, obviously the Higgs is the one!”

Linear versus circular

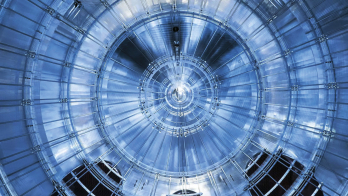

The accelerator, detector and computing technology needed for future fundamental exploration was the main focus of the scientific plenary session on day one of the ESPP update. Reviewing Higgs factory programmes, Vladimir Shiltsev, head of Fermilab’s Accelerator Physics Center, weighed up the pros and cons of linear versus circular machines. The former includes the International Linear Collider (ILC) and the Compact Linear Collider (CLIC); the latter a future circular electron–positron collider at CERN (FCC-ee) and the Circular Electron Positron Collider in China (CEPC). All need a high luminosity at the Higgs energy scale.

Linear colliders, said Shiltsev, are based on mature designs and organisation, are expandable to higher energies, and draw a wall-plug power similar to that of the LHC. On the other hand, they face potential challenges linked to their luminosity spectrum and beam current. Circular Higgs factories are also based on mature technology, with a strong global collaboration in the case of FCC. They offer a higher luminosity and more interaction points than linear options but require strategic R&D into high-efficiency RF sources and superconducting cavities, said Shiltsev. He also described a potential muon collider with a centre-of-mass energy of 126 GeV, which could be realised in a machine as short as 10 km. Although the cost would be relatively low, he said, the technology is not yet ready.

For energy-frontier colliders, the three current options – CERN’s HE-LHC (27 TeV) and FCC-hh (100 TeV), and China’s SppC (75 TeV) – demand high-field superconducting dipole magnets. These machines also present challenges such as how to deal with extreme levels of synchrotron radiation, collimation, injection and the overall machine design and energy efficiency. In a talk about the state-of-the-art and challenges in accelerator technology, Akira Yamamoto of CERN/KEK argued that, while a lepton collider could begin construction in the next few years, the dipoles necessary for a hadron collider might take 10 to 15 years of R&D before construction could start. There are natural constraints in such advanced-magnet development regardless of budget and manpower, he remarked.

Concerning more futuristic acceleration technologies based on plasma wakefields, which offer a factor of 1000 more power than today’s RF systems, impressive results have been achieved recently at facilities such as BELLA at Berkeley and AWAKE at CERN. Responding to a question about when these technologies might supersede current ones, Shiltsev said: “Hopefully 20–30 years from now we should be able to know how many thousands of TeV will be possible by the end of the century.”

Recognising detectors and computing

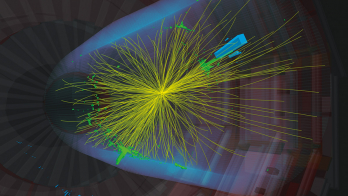

An energy-frontier hadron collider would produce radiation environments that current detectors cannot deal with, said Francesco Forti of INFN and the University of Pisa in his talk about the technological challenges of particle-physics experiments. Another difficulty for detectors is how to handle non-standard physics signals, such as long-lived particles and monopoles. Like accelerators, detectors require long time scales – it was the very early 1990s when the first LHC detector CDRs were written. From colliders to fixed-target to astrophysics experiments, detectors in high-energy physics face a huge variety of operating conditions and employ technologies that are often deeply entwined with developments in industry. The environmental credentials of detectors are also increasingly in the spotlight.

The focus of detector R&D should follow a “70–20–10” model, whereby 70% of efforts go into current detectors, 20% into future detectors and 10% into blue-sky R&D, argued Forti. Given that detector expertise is distributed among many institutions, the field also needs solid coordination. Forti cited CERN’s “RD” projects in diamond detectors, silicon radiation-hard devices, micro-pattern gas detectors and pixel readout chips for ATLAS and CMS as good examples of coordination towards common goals. Finally, he argued strongly for greater consideration of the “human factor”, stating that the current career model “just doesn’t work very well.” Your average particle physicist cannot be expert and innovative simultaneously in analysis, detectors, computing, teaching, outreach and other areas, he reasoned. “Career opportunities for detector physicists must be greatly strengthened and kept open in a systematic way,” he said. “Invest in the people and in the murky future.”

Computing for high-energy physics faces similar challenges. “There is an increasing gap between early-career physicists and the profile needed to program new architectures, such as greater parallelisation,” said Simone Campana of CERN and the HEP Software Foundation in a presentation about future computing challenges. “We should recognise the efforts of those who specialise in software because they can really change things like the speed of analyses and simulations.”

In terms of data processing, the HL-LHC presents a particular challenge. DUNE, FAIR, Belle II and other experiments will also create massive data samples. Then there is the generation of Monte Carlo samples. “Computing resources in HEP will be more constrained in the future,” said Campana. “We enter a regime where existing projects are entering a challenging phase, and many new projects are competing for resources – not just in HEP but in other sciences, too.” At the same time, the rate of advances in hardware performance has slowed in recent years, encouraging the community to adapt to take advantage of developments such as GPUs, high-performance computing and commercial cloud services.

The HEP Software Foundation released a community white paper in 2018 setting out the radical changes in computing and software – not just for processing but also for data storage and management – required to ensure the success of the LHC and other high-energy physics experiments into the 2020s.

Closing out

Closer examination of linear and circular colliders took place during subsequent parallel sessions on the first day of the ESPP update. Dark matter, flavour physics and electroweak and Higgs measurements were the other parallel themes. A final discussion session focusing on the capability of future machines for precision Higgs physics generated particularly lively exchanges between participants. It illuminated both the immensity of efforts to evaluate the physics reach of the high-luminosity LHC and future colliders, and the unenviable task faced by ESPP committees in deciding which post-LHC project is best for the field. It’s a point summed up well in the opening address by the chair of the ESPP strategy secretariat, Halina Abramowicz: “This is a very strange symposium. Normally we discuss results at conferences, but here we are discussing future results.”