Fifty years ago, CERN’s Intersecting Storage Rings set in motion a series of hadron colliders charting nature at the highest possible energies, write Lyn Evans and Peter Jenni.

The ability to collide high-energy beams of hadrons under controlled conditions transformed the field of particle physics. Until the late 1960s, the high-energy frontier was dominated by the great proton synchrotrons. The Cosmotron at Brookhaven National Laboratory and the Bevatron at Lawrence Berkeley National Laboratory were soon followed by CERN’s Proton Synchrotron and Brookhaven’s Alternating Gradient Synchrotron, and later by the Proton Synchrotron at Serpukhov near Moscow. In these machines protons were directed to internal or external targets in which secondary particles were produced.

The kinematical inefficiency of this process, whereby the centre-of-mass energy only increases as the square root of the beam energy, was recognised from the outset. In 1943, Norwegian engineer Rolf Widerøe proposed the idea of colliding beams, keeping the centre of mass at rest in order to exploit the full energy for the production of new particles. One of the main problems was to get colliding beam intensities high enough for a useful event rate to be achieved. In the 1950s the prolific group at the University of Wisconsin Midwestern Universities Research Association (MURA), led by Donald Kerst, worked on the problem of “stacking” particles, whereby successive pulses from an injector synchrotron are superposed to increase the beam intensity. They mainly concentrated on protons, where Liouville’s theorem (which states that for a continuous fluid under the action of conservative forces the phase-space density cannot be increased) was thought to apply. Only much later were ways found to beat Liouville and increase the beam density. At the 1956 International Accelerator Conference at CERN, Kerst made the first proposal to use stacking to produce colliding beams (not yet storage rings) of sufficient intensity.
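To make the square-root scaling concrete, the sketch below compares the centre-of-mass energy of a proton beam striking a proton at rest with that of two equal-energy beams colliding head-on. It uses standard relativistic kinematics in natural units; the function names and the rounded proton mass are illustrative choices rather than anything taken from the article.

```python
import math

PROTON_MASS_GEV = 0.938272  # proton rest energy in GeV (rounded)


def cm_energy_fixed_target(beam_energy_gev, mass_gev=PROTON_MASS_GEV):
    """Centre-of-mass energy for a beam proton hitting a proton at rest.

    s = 2 m^2 + 2 E m, so sqrt(s) grows only as sqrt(E) at high energy.
    """
    return math.sqrt(2.0 * mass_gev**2 + 2.0 * beam_energy_gev * mass_gev)


def cm_energy_collider(beam_energy_gev):
    """Centre-of-mass energy for two equal-energy beams colliding head-on."""
    return 2.0 * beam_energy_gev


def equivalent_fixed_target_energy(cm_energy_gev, mass_gev=PROTON_MASS_GEV):
    """Beam energy a fixed-target machine would need to reach a given sqrt(s)."""
    return (cm_energy_gev**2 - 2.0 * mass_gev**2) / (2.0 * mass_gev)


if __name__ == "__main__":
    # A hundredfold increase in beam energy buys only about a tenfold
    # increase in sqrt(s) on a fixed target, but a hundredfold in a collider.
    for e_beam in (30.0, 300.0, 3000.0):
        print(e_beam, cm_energy_fixed_target(e_beam), cm_energy_collider(e_beam))
```

Under these assumptions, reaching the ISR’s 63 GeV with a fixed target would have required a proton beam of roughly 2 TeV, as `equivalent_fixed_target_energy(63.0)` shows.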

At that same conference, Gerry O’Neill from Princeton presented a paper proposing that colliding electron beams could be achieved in storage rings by making use of the natural damping of particle amplitudes by synchrotron-radiation emission. A design for the 500 MeV Princeton–Stanford colliding beam experiment was published in 1958 and construction started that same year. At the same time, the Budker Institute for Nuclear Research in Novosibirsk started work on VEP-1, a pair of rings designed to collide electrons at 140 MeV. Then, in March 1960, Bruno Touschek gave a seminar at Laboratori Nazionali di Frascati in Italy where he first proposed a single-ring, 0.6 m-circumference 250 MeV electron–positron collider. “AdA” produced the first stored electron and positron beams less than one year later – a far cry from the time it takes today’s machines to go from conception to operation! From these trailblazers evolved the production machines, beginning with ADONE at Frascati and SPEAR at SLAC. However, it was always clear that the gift of synchrotron-radiation damping would become a hindrance to achieving very high-energy collisions in a circular electron–positron collider, because the radiated power increases as the fourth power of the beam energy. It also scales as the inverse fourth power of the particle mass, and so is negligible for protons compared with electrons.
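The size of this effect is easy to check numerically. The sketch below applies only the scaling quoted above (radiated power proportional to the fourth power of energy and the inverse fourth power of mass, at a fixed bending radius); the helper name is hypothetical and the particle masses are standard rounded values.

```python
ELECTRON_MASS_MEV = 0.51099895
PROTON_MASS_MEV = 938.27209


def relative_radiated_power(energy_ratio=1.0, mass_ratio=1.0):
    """Radiated power relative to a reference particle on the same orbit.

    Uses only the scaling P ~ E^4 / m^4 at fixed bending radius; both
    arguments are ratios with respect to the reference particle.
    """
    return energy_ratio**4 / mass_ratio**4


if __name__ == "__main__":
    proton_to_electron_mass = PROTON_MASS_MEV / ELECTRON_MASS_MEV
    # Proton versus electron at the same energy in the same ring.
    print(relative_radiated_power(1.0, proton_to_electron_mass))
    # Doubling the beam energy of a given particle.
    print(relative_radiated_power(2.0, 1.0))
```

At equal energy and radius, a proton radiates about thirteen orders of magnitude less power than an electron, while doubling the energy of an electron beam raises its radiated power sixteenfold.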

A step into the unknown

Meanwhile, in the early 1960s, discussion raged at CERN about the next best step for particle physics. Opinion was sharply divided between two camps, one pushing a very high-energy proton synchrotron for fixed-target physics and the other using the technique proposed at MURA to build an innovative colliding beam proton machine with about the same centre-of-mass energy as a conventional proton synchrotron of much larger dimensions. In order to resolve the conflict, in February 1964, 50 physicists from among Europe’s best met at CERN. From that meeting emerged a new committee, the European Committee for Future Accelerators, under the chairmanship of one of CERN’s founding fathers, Edoardo Amaldi. After about two years of deliberation, consensus was formed. The storage ring gained most support, although a high-energy proton synchrotron, the Super Proton Synchrotron (SPS), was built some years later and would go on to play an essential role in the development of hadron storage rings. On 15 December 1965, with the strong support of Amaldi, the CERN Council unanimously approved the construction of the Intersecting Storage Rings (ISR), launching the era of hadron colliders.

First collisions

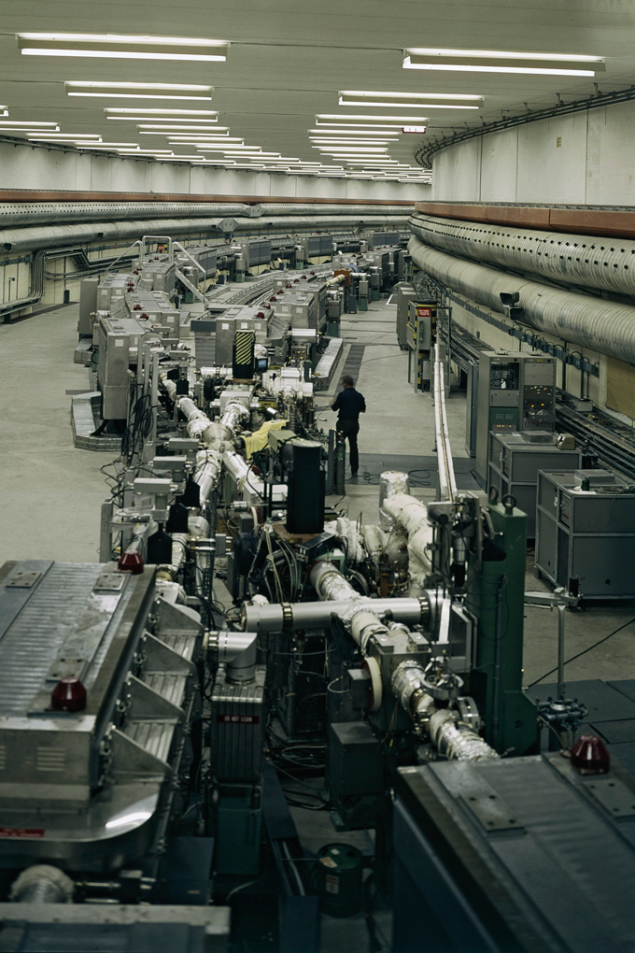

Construction of the ISR began in 1966 and first collisions were observed on 27 January 1971. The machine, which needed to store beams for many hours without the help of synchrotron-radiation damping to combat inevitable magnetic field errors and instabilities, pushed the boundaries in accelerator science on all fronts. Several respected scientists doubted that it would ever work. In fact, the ISR worked beautifully, exceeding its design luminosity by an order of magnitude and providing an essential step in the development of the next generation of hadron colliders. A key element was the performance of its ultra-high-vacuum system, which was a source of continuous improvement throughout the 13-year lifetime of the machine.

For the experimentalists, the ISR’s collisions (which reached an energy of 63 GeV) opened an exciting adventure at the energy frontier. But they were also learning what kind of detectors to build to fully exploit the potential of the machine – a task made harder by the lack of clear physics benchmarks known at the time in the ISR energy regime. The concept of general-purpose instruments built by large collaborations, as we know them today, was not in the culture of the time. Instead, many small collaborations built experiments with relatively short lifecycles, which constituted a fruitful learning ground for what was to come at the next generation of hadron colliders.

There was initially a broad belief that the physics action at a hadron collider would be in the forward directions. This led to the Split Field Magnet facility as one of the first detectors at the ISR, providing a high magnetic field in the forward directions but a negligible one at large angles with respect to the colliding beams (the transverse direction that is so important nowadays). It was with subsequent detectors featuring transverse spectrometer arms over limited solid angles that physicists observed a large excess of high transverse-momentum particles above low-energy extrapolations. With these first observations of point-like parton scattering, the ISR made a fundamental contribution to strong-interaction physics. Solid angles were too limited initially, and single-particle triggers too biased, to fully appreciate the hadronic jet structure. That feat required third-generation detectors, notably the Axial Field Spectrometer (AFS) at the end of the ISR era, offering full azimuthal central calorimeter coverage. The experiment provided evidence for the back-to-back two-jet structure of hard parton scattering.

For the detector builders, the original AFS concept was interesting as it provided an unobstructed phi-symmetric magnetic field in the centre of the detector, albeit at the price of massive Helmholtz coil pole tips obscuring the forward directions. Indeed, the ISR enabled the development of many original experimental ideas. A very important one was the measurement of the total cross section using very forward detectors in close proximity to the beam. These “Roman Pots”, named for their inventors, made their appearance in all later hadron colliders, confirming the rise of the total pp cross section with energy.

It is easy to say after the fact, still with regrets, that with an earlier availability of more complete and selective (with electron-trigger capability) second- and third-generation experiments at the ISR, CERN would not have been left as a spectator during the famous November revolution of 1974 with the J/ψ discoveries at Brookhaven and SLAC. These, and the ϒ resonances discovered at Fermilab three years later, were clearly observed in the later-generation ISR experiments.

SPS opens new era

However, events were unfolding at CERN that would pave the way to the completion of the Standard Model. At the ISR in 1972, the phenomenon of Schottky noise (density fluctuations due to the granular nature of the beam in a storage ring) was first observed. It was this very same noise that Simon van der Meer speculated in a paper a few years earlier could be used for what he called “stochastic cooling” of a proton beam, beating Liouville’s theorem by the fact that a beam of particles is not a continuous fluid. Although it is unrealistic to detect the motion of individual particles and damp them to the nominal orbit, van der Meer showed that by correcting the mean transverse motion of a sample of particles continuously, and as long as the statistical nature of the Schottky signal was continuously regenerated, it would be theoretically possible to reduce the beam size and increase its density. With the bandwidth of electronics available at the time, van der Meer concluded that the cooling time would be too long to be of practical importance. But the challenge was taken up by Wolfgang Schnell, who built a state-of-the-art feedback system that demonstrated stochastic cooling of a proton beam for the first time. This would open the door to the idea of stacking and cooling of antiprotons, which later led to the SPS being converted into a proton–antiproton collider.
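The sampling argument can be illustrated with a toy model. The sketch below is not van der Meer’s formalism or the ISR feedback system: it assumes idealised perfect mixing (a full reshuffle of the particles between turns), no electronic noise, and a pickup that sees only the mean offset of each sample. All function names and parameters are hypothetical.

```python
import random
import statistics


def rms(values):
    """Root-mean-square of a list of offsets."""
    return (sum(v * v for v in values) / len(values)) ** 0.5


def cool_one_turn(offsets, sample_size, gain, rng):
    """Apply one pass of a toy stochastic-cooling correction in place.

    Particles are shuffled (idealised 'mixing', which regenerates the
    statistical fluctuations), split into samples, and every particle in a
    sample is kicked by -gain * (mean offset of that sample).
    """
    rng.shuffle(offsets)
    for start in range(0, len(offsets), sample_size):
        sample = offsets[start:start + sample_size]
        kick = gain * statistics.fmean(sample)
        for i in range(start, start + len(sample)):
            offsets[i] -= kick
    return offsets


def simulate(n_particles=2000, sample_size=20, gain=1.0, turns=200, seed=1):
    """Return the rms beam size before cooling and after each turn."""
    rng = random.Random(seed)
    offsets = [rng.gauss(0.0, 1.0) for _ in range(n_particles)]
    history = [rms(offsets)]
    for _ in range(turns):
        cool_one_turn(offsets, sample_size, gain, rng)
        history.append(rms(offsets))
    return history


if __name__ == "__main__":
    for size in (20, 200):
        history = simulate(sample_size=size)
        print(size, history[0], history[-1])
```

In this toy, no single particle is ever measured, yet the rms size shrinks turn after turn because each sample’s mean fluctuates away from zero and is corrected. Smaller samples, which in a real system would correspond to a larger electronic bandwidth, cool faster, in line with the remark that the bandwidth then available made the cooling time look impractically long.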

Another important step towards the next generation of hadron colliders occurred in 1973 when the collaboration working on the Gargamelle heavy-liquid bubble chamber published two papers revealing the first evidence for weak neutral currents. These were important observations in support of the unified theory of electromagnetic and weak interactions, for which Sheldon Glashow, Abdus Salam and Steven Weinberg were to receive the Nobel Prize in Physics in 1979. The electroweak theory predicted the existence and approximate masses of two vector bosons, the W and the Z, which were too high to be produced in any existing machine. However, Carlo Rubbia and collaborators proposed that, if the SPS could be converted into a collider with protons and antiprotons circulating in opposite directions, there would be enough energy to create them.

To achieve this the SPS would need to be converted into a storage ring like the ISR, but this time the beam would need to be kept “bunched” with the radio-frequency (RF) system working continuously to achieve a high enough luminosity (unlike the ISR where the beams were allowed to de-bunch all around the ring). The challenges here were two-fold. First, noise in the RF system causes particles to diffuse rapidly from the bunch. This was solved by a dedicated feedback system. Second, it was predicted that the beam–beam interaction would limit the performance of a bunched-beam machine with no synchrotron-radiation damping, owing to the strongly nonlinear interaction between a particle in one beam and the global electromagnetic field of the other beam.

A much bigger challenge was to build an accumulator ring in which antiprotons could be stored and cooled by stochastic cooling until a sufficient intensity of antiprotons would be available to transfer into the SPS, accelerate to around 300 GeV and collide with protons. This was done in two stages. First a proof-of-principle was needed to show that the ideas developed at the ISR transferred to a dedicated accumulator ring specially designed for stochastic cooling. This ring was called the Initial Cooling Experiment (ICE), and operated at CERN in 1977–1978. In ICE transverse cooling was applied to reduce the beam size and a new technique for reducing the momentum spread in the beam was developed. The experiment proved to be a big success and the theory of stochastic cooling was refined to a point where a real accumulator ring (the Antiproton Accumulator) could be designed to accumulate and store antiprotons produced at 3.5 GeV by the proton beam from the 26 GeV Proton Synchrotron. First collisions of protons and antiprotons at 270 GeV were observed on the night of 10 July 1981, signalling the start of a new era in colliding beam physics.

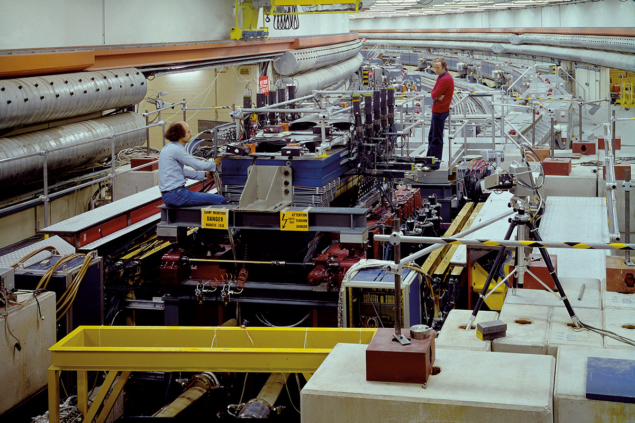

A clear physics goal, namely the discovery of the W and Z intermediate vector bosons, drove the concepts for the two main Spp̄S experiments UA1 and UA2 (in addition to a few smaller, specialised experiments). It was no coincidence that the leaders of both collaborations were pioneers of ISR experiments, and many lessons from the ISR were taken on board. UA1 pioneered the concept of a hermetic detector that covered as much as possible of the full solid angle around the interaction region with calorimetry and tracking. This allowed measurements of the missing transverse energy/momentum, signalling the escaping neutrino in the leptonic W decays. Both electrons and muons were measured, with tracking in a state-of-the-art drift chamber that provided bubble-chamber-like pictures of the interactions. The magnetic field was provided by a dipole-magnet configuration, an approach not favoured in later-generation experiments because of its inherent lack of azimuthal symmetry. UA2 featured what was at the time a highly segmented electromagnetic and hadronic calorimeter in the central part (down to 40 degrees with respect to the beam axis), with 240 cells pointing to the interaction region. But it had no muon detection, and in its initial phase only limited electromagnetic coverage in the forward regions. There was no magnetic field except for the forward cones with toroids to probe the W polarisation.

In 1983 the Spp̄S experiments made history with the direct discoveries of the W and Z. Many other results were obtained, including the first evidence of neutral B-meson particle–antiparticle mixing at UA1 thanks to its tracking and muon detection. The calorimetry of UA2 provided immediate unambiguous evidence for a two-jet structure in events with large transverse energy. Both UA1 and UA2 pushed QCD studies far ahead. The lack of hermeticity in UA2’s forward regions motivated a major upgrade (UA2′) for the second phase of the collider, complementing the central part with new fully hermetic calorimetry (both electromagnetic and hadronic), and also inserting a new tracking cylinder employing novel technologies (fibre tracking and silicon pad detectors). This enabled the experiment to improve searches for top quarks and supersymmetric particles, as well as making almost background-free first precision measurements of the W mass.

Meanwhile in America

At the time the Spp̄S was driving new studies at CERN, the first large superconducting synchrotron (the Tevatron, with a design energy close to 1 TeV) was under construction at Fermilab. In view of the success of the stochastic cooling experiments, there was a strong lobby at the time to halt the construction of the Tevatron and to divert effort instead to emulate the SPS as a proton–antiproton collider using the Fermilab Main Ring. Wisely this proposal was rejected and construction of the Tevatron continued. It came into operation as a fixed-target synchrotron in 1984. Two years later it was also converted into a proton–antiproton collider and operated at the high-energy frontier until its closure in September 2011.

A huge step was made with the detector concepts for the Tevatron experiments, in terms of addressed physics signatures, sophistication and granularity of the detector components. This opened new and continuously evolving avenues in analysis methods at hadron colliders. Already the initial CDF and DØ detectors for Run I (which lasted until 1996) were designed with cylindrical concepts, characteristic of what we now call general-purpose collider experiments, albeit DØ still without a central magnetic field in contrast to CDF’s 1.4 T solenoid. In 1995 the experiments delivered the first Tevatron highlight: the discovery of the top quark. Both detectors underwent major upgrades for Run II (2001–2011) – a theme now seen for the LHC experiments – which had a great impact on the Tevatron’s physics results. CDF was equipped with a new tracker, a silicon vertex detector, new forward calorimeters and muon detectors, while DØ added a 1.9 T central solenoid, vertexing and fibre tracking, and new forward muon detectors. Alongside the instrumentation came a breathtaking evolution in real-time event selection (triggering) and data acquisition to keep up with the increasing luminosity of the collider.

The physics harvest of the Tevatron experiments during Run II was impressive, including a wealth of QCD measurements and major inroads in top-quark physics, heavy-flavour physics and searches for phenomena beyond the Standard Model. Still standing strong are its precision measurements of the W and top masses and of the electroweak mixing angle sin²θ_W. The story ended around 2012 with a glimpse of the Higgs boson in associated production with a vector boson. The CDF and DØ experience influenced the LHC era in many ways: for example they were able to extract the very rare single-top production cross-section with sophisticated multivariate algorithms, and they demonstrated the power of combining mature single-experiment measurements in common analyses to achieve ultimate precision and sensitivity.

For the machine builders, the pioneering role of the Tevatron as the first large superconducting machine was also essential for further progress. Two other machines – the Relativistic Heavy Ion Collider at Brookhaven and the electron–proton collider HERA at DESY – derived directly from the experience of building the Tevatron. Lessons learned from that machine and from the Spp̄S were also integrated into the design of the most powerful hadron collider yet built: the LHC.

The Large Hadron Collider

The LHC had a difficult birth. Although the idea of a large proton–proton collider at CERN had been around since at least 1977, the approval of the Superconducting Super Collider (SSC) in the US in 1987 put the whole project into doubt. The SSC, with a centre-of-mass energy of 40 TeV, was almost three times more powerful than what could ever be built using the existing infrastructure at CERN. It was only the resilience and conviction of Carlo Rubbia, who shared the 1984 Nobel Prize in Physics with van der Meer for the project leading to the discovery of the W and Z bosons, that kept the project alive. Rubbia, who became Director-General of CERN in 1989, argued that, in spite of its lower energy, the LHC could be competitive with the SSC by having a luminosity an order of magnitude higher, and at a fraction of the cost. He also argued that the LHC would be more versatile: as well as colliding protons, it would be able to accelerate heavy ions to record energies at little extra cost.

The SSC was eventually cancelled in 1993. This made the case for the LHC even stronger, but the financial climate in Europe at the time was not conducive to the approval of a large project. For example, CERN’s largest contributor, Germany, was struggling with the cost of reunification and many other countries were getting to grips with the introduction of the single European currency. In December 1993 a plan was presented to the CERN Council to build the machine over a 10-year period by reducing the other experimental programmes at CERN to the absolute minimum, with the exception of the full exploitation of the flagship Large Electron Positron (LEP) collider. Although the plan was generally well received, it became clear that Germany and the UK were unlikely to agree to the budget increase required. On the positive side, after the demise of the SSC, a US panel on the future of particle physics recommended that “the government should declare its intentions to join other nations in constructing the LHC”. Positive signals were also being received from India, Japan and Russia.

In June 1994 the proposal to build the LHC was made once more. However, approval was blocked by Germany and the UK, which demanded substantial additional contributions from the two host states, France and Switzerland. This forced CERN to propose a “missing magnet” machine where only two-thirds of the dipole magnets would be installed in a first stage, allowing operation at reduced energy for a number of years. Although costing more in the long run, the plan would save some 300 million Swiss francs in the first phase. This proposal was put to Council in December 1994 by the new Director-General Christopher Llewellyn Smith and, after a round of intense discussions, the project was finally approved for two-stage construction, to be reviewed in 1997 after non-Member States had made known their contributions. The first country to do so was Japan in 1995, followed by India, Russia and Canada the next year. A final sting in the tail came in June 1996 when Germany unilaterally announced that it intended to reduce its CERN subscription by between 8% and 9%, prompting the UK to demand a similar reduction and forcing CERN to take out loans. At the same time, the two-stage plan was dropped and, after a shaky start, the construction of the full LHC was given the green light.

The fact that the LHC was to be built at CERN, making full use of the existing infrastructure to reduce cost, imposed a number of strong constraints. The first was the 27 km circumference of the LEP tunnel in which the machine was to be housed. For the LHC to achieve its design energy of 7 TeV per beam, its bending magnets would need to operate at a field of 8.3 T, about 60% higher than ever achieved in previous machines. This could only be done using affordable superconducting material by reducing the temperature of the liquid-helium coolant from its normal boiling point of 4.2 K to 1.9 K – where helium exists in a macroscopic quantum state with the loss of viscosity and a very large thermal conductivity. A second major constraint was the small (3.8 m) tunnel diameter, which made it impossible to house two independent rings like the ISR. Instead, a novel and elegant magnet design was developed, first proposed by Bob Palmer at Brookhaven, with the two rings separated by only 19 cm in a common yoke and cryostat. This also considerably reduced the cost.
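The link between tunnel size, beam energy and dipole field follows from the standard magnetic-rigidity relation, p [GeV/c] ≈ 0.3 B [T] ρ [m] for a singly charged particle. The sketch below is a back-of-the-envelope check using only the 7 TeV, 8.3 T and 27 km figures quoted above; the function names are illustrative and the real magnet lattice is not modelled.

```python
import math

# p [GeV/c] = 0.299792458 * B [T] * rho [m] for a particle of unit charge
GEV_PER_TESLA_METRE = 0.299792458


def bending_radius_m(momentum_gev, field_tesla):
    """Radius of curvature of a unit-charge particle in a uniform dipole field."""
    return momentum_gev / (GEV_PER_TESLA_METRE * field_tesla)


def required_field_tesla(momentum_gev, radius_m):
    """Dipole field needed to bend a unit-charge particle on a given radius."""
    return momentum_gev / (GEV_PER_TESLA_METRE * radius_m)


def dipole_fill_fraction(momentum_gev, field_tesla, circumference_m):
    """Fraction of the ring circumference that must be occupied by dipole field."""
    bending_length = 2.0 * math.pi * bending_radius_m(momentum_gev, field_tesla)
    return bending_length / circumference_m


if __name__ == "__main__":
    radius = bending_radius_m(7000.0, 8.3)
    print(radius)
    print(dipole_fill_fraction(7000.0, 8.3, 27000.0))
```

Under these assumptions the bending radius comes out at roughly 2.8 km, so about two-thirds of the 27 km circumference would have to be filled with dipole field. With the tunnel fixed, the beam energy can only be raised by raising the field, which is why the 8.3 T requirement followed directly from the choice of the LEP tunnel.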

At precisely 09:30 on 10 September 2008, almost 15 years after the project’s approval, the first beam was injected into the LHC, amid global media attention. In the days that followed good progress was made until disaster struck: during a ramp to full energy, one of the 10,000 superconducting joints between the magnets failed, causing extensive damage which took more than a year to recover from. Following repairs and consolidation, on 29 November 2009 beam was once more circulating and full commissioning and operation could start. Rapid progress in ramping up the luminosity followed, and the LHC physics programme, at an initial energy of 3.5 TeV per beam, began in earnest in March 2010.

LHC experiments

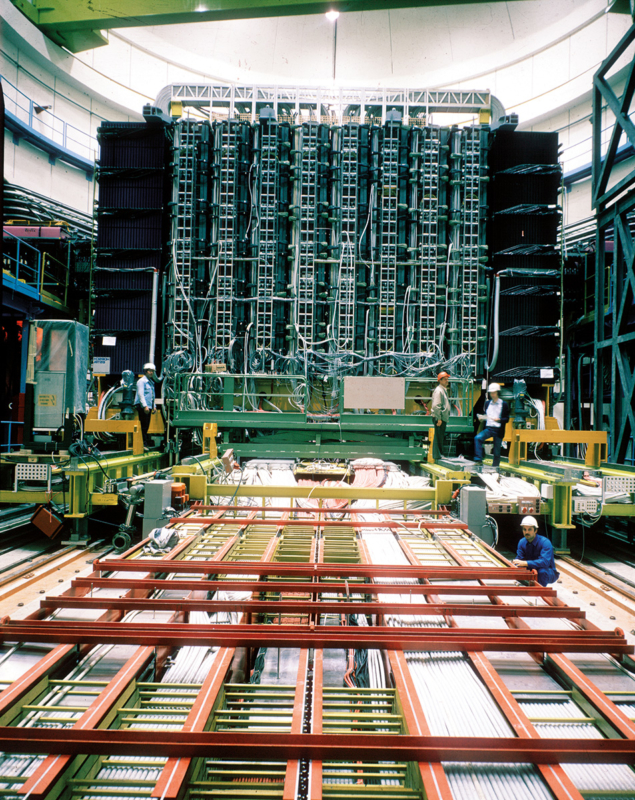

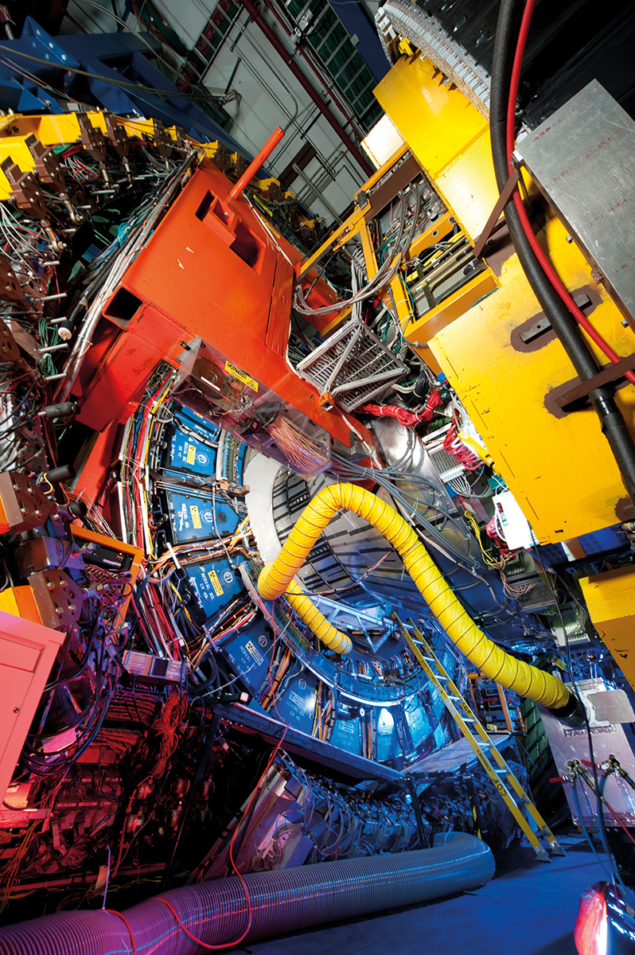

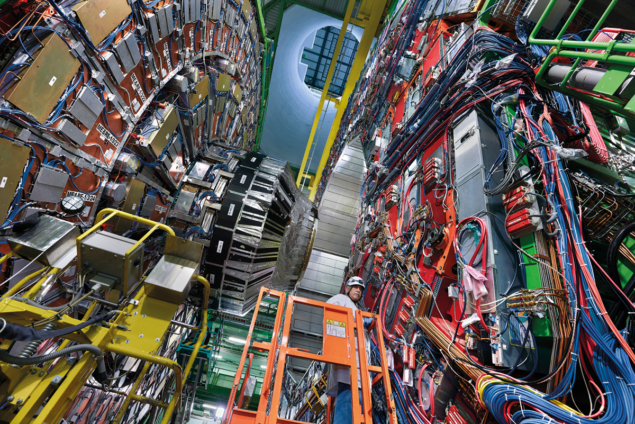

Yet a whole other level of sophistication was realised by the LHC detectors compared to those at previous colliders. The priority benchmark for the designs of the general-purpose detectors ATLAS and CMS was to unambiguously discover (or rule out) the Standard Model Higgs boson for all possible masses up to 1 TeV, which demanded the ability to measure a variety of final states. The challenges for the Higgs search also guaranteed the detectors’ potential for all kinds of searches for physics beyond the Standard Model, which was the other driving physics motivation at the energy frontier. These two very ambitious LHC detector designs integrated all the lessons learned from the experiments at the three predecessor machines, as well as further technology advances in other large experiments, most notably at HERA and LEP.

Just a few simple numbers illustrate the giant leap from the Tevatron to the LHC detectors. CDF and DØ, in their upgraded versions operating at a luminosity of up to 4 × 10³² cm⁻² s⁻¹, typically had around a million channels and a triggered event rate of 100 Hz, with event sizes of 500 kB. The collaborations were each about 600 strong. By contrast, ATLAS and CMS operated during LHC Run 2 at a luminosity of 2 × 10³⁴ cm⁻² s⁻¹ with typically 100 million readout channels, and an event rate and size of 500 Hz and 1500 kB. Their publications have close to 3000 authors.
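These figures can be combined into a rough comparison of recorded data rates. The sketch below uses only the numbers quoted above and assumes that 1 kB means 1000 bytes; the class and field names are hypothetical, and the result is an order-of-magnitude estimate rather than an official throughput figure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReadoutFigures:
    """Typical detector figures as quoted in the text (illustrative container)."""
    name: str
    luminosity_cm2_s: float
    channels: float
    event_rate_hz: float
    event_size_kb: float

    @property
    def recorded_mb_per_s(self):
        # Triggered event rate times event size, converted from kB/s to MB/s.
        return self.event_rate_hz * self.event_size_kb / 1000.0


TEVATRON = ReadoutFigures("CDF/DØ (upgraded)", 4e32, 1e6, 100.0, 500.0)
LHC = ReadoutFigures("ATLAS/CMS (Run 2)", 2e34, 1e8, 500.0, 1500.0)

if __name__ == "__main__":
    for detector in (TEVATRON, LHC):
        print(detector.name, detector.recorded_mb_per_s, "MB/s")
    print("luminosity ratio:", LHC.luminosity_cm2_s / TEVATRON.luminosity_cm2_s)
    print("event-rate ratio:", LHC.event_rate_hz / TEVATRON.event_rate_hz)
```

On these numbers the recorded data rate grows from about 50 MB/s to about 750 MB/s per experiment. The luminosity rises fiftyfold while the recorded event rate rises only fivefold, which gives a sense of how much more selective the real-time event selection had to become.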

For many major LHC-detector components, complementary technologies were selected. This is most visible for the superconducting magnet systems, with an elegant and unique large 4 T solenoid in CMS serving both the muon and inner tracking measurements, and an air-core toroid system for the muon spectrometer in ATLAS together with a 2 T solenoid around the inner tracking cylinder. These choices drove the layout of the active detector components, for instance the electromagnetic calorimetry. Here again, different technologies were implemented: a novel-configuration liquid-argon sampling calorimeter for ATLAS and lead-tungstate crystals for CMS.

From the outset, the LHC was conceived as a highly versatile collider facility, not only for the exploration of high transverse-momentum physics. With its huge production of b and c quarks, it offered the possibility of a very fruitful programme in flavour physics, exploited with great success by the purposely designed LHCb experiment. Furthermore, in special runs the LHC provides heavy-ion collisions for studies of the quark–gluon plasma – the field of action for the ALICE experiment.

As the general-purpose experiments learned from the history of experiments in their field, the concepts of both LHCb and ALICE also evolved from a previous generation of experiments in their fields, which would be interesting to trace back. One remark is due: the designs of all four main detectors at the LHC have turned out to be so flexible that there are no strict boundaries between these three physics fields for them. All of them have learned to use features of their instruments to contribute at least in part to the full physics spectrum offered by the LHC, of which the highlight so far was the July 2012 announcement of the discovery of the Higgs boson by the ATLAS and CMS collaborations. The following year the collaborations were named in the citation for the 2013 Nobel Prize in Physics awarded to François Englert and Peter Higgs.

Since then, the LHC has exceeded its design luminosity by a factor of two and delivered an integrated luminosity of almost 200 fb⁻¹ in proton–proton collisions, while its beam energy was increased to 6.5 TeV in 2015. The machine has also delivered heavy-ion (lead–lead) and even lead–proton collisions. But the LHC still has a long way to go before its estimated end of operations in the mid-to-late 2030s. To this end, the machine was shut down in November 2018 for a major upgrade of the whole of the CERN injector complex as well as the detectors to prepare for operation at high luminosities, ultimately up to a “levelled” luminosity of 7 × 10³⁴ cm⁻² s⁻¹. The High Luminosity LHC (HL-LHC) upgrade is pushing the boundaries of superconducting magnet technology to the limit, particularly around the experiments where the present focusing elements will be replaced by new magnets built from high-performance Nb₃Sn superconductor. The eventual objective is to accumulate 3000 fb⁻¹ of integrated luminosity.

In parallel, the LHC-experiment collaborations are preparing and implementing major upgrades to their detectors using novel state-of-the-art technologies and revolutionary approaches to data collection to exploit the tenfold data volume promised by the HL-LHC. Hadron-collider detector concepts have come a long way in sophistication over the past 50 years. However, behind the scenes are other factors paramount to their success. These include an equally spectacular evolution in data-flow architectures, software, computing approaches and analysis methods – all of which have been driven into new territories by the extraordinary demands of dealing with rare events within the huge backgrounds of ordinary collisions at hadron colliders. Worthy of particular mention in the success of all LHC physics results is the Worldwide LHC Computing Grid. This journey is now poised to continue, as we look ahead to what a general-purpose detector at a future 100 TeV hadron collider might look like.

Beyond the LHC

Although the LHC has at least 15 years of operations ahead of it, the question now arises, as it did in 1964: what is the next step for the field? The CERN Council has recently approved the recommendations of the 2020 update of the European strategy for particle physics, which includes, among other things, a thorough study of a very high-energy hadron collider to succeed the LHC. A technical and financial feasibility study for a 100 km circular collider at CERN with a collision energy of at least 100 TeV is now under way. While a decision to proceed with such a facility is to come later this decade, one thing is certain: lessons learned from 50 years of experience with hadron colliders and their detectors will be crucial to the success of our next step into the unknown.