The increase in computing demands expected this decade puts high-energy physics in a similar position to 1995 when the field moved to PCs, argues Sverre Jarp.

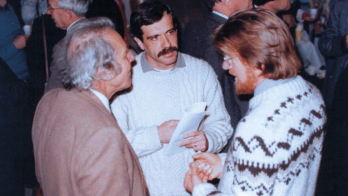

Twenty-five years ago in Rio de Janeiro, at the 8th International Conference on Computing in High-Energy and Nuclear Physics (CHEP-95), I presented a paper on behalf of my research team titled “The PC as Physics Computer for LHC”. We highlighted impressive improvements in price and performance compared to other solutions on offer. In the years that followed, the community started moving to PCs in a massive way, and today the PC remains unchallenged as the workhorse for high-energy physics (HEP) computing.

HEP-computing demands have always been greater than the available capacity. However, our community does not have the financial clout to dictate the way computing should evolve, so constant innovation and research in computing and IT are needed to maintain progress. A few years before CHEP-95, RISC workstations and servers had started complementing the mainframes that had been acquired at high cost at the start-up of LEP in 1989. We thought we could do even better than RISC. The increased-energy LEP2 phase needed lots of simulation, and the same needs were already manifest for the LHC. These needs inspired the work that led PC servers to start populating our computer centres – a move that was also helped by a fair amount of luck.

Fast change

HEP programs need good floating-point performance, but early generations of Intel x86 processors, such as the 486/487 chips, offered only mediocre capabilities. The Pentium processors that emerged in the mid-1990s changed the scene significantly, and the competitive race between Intel and AMD was a major driver of continued hardware innovation.

Another strong tailwind came from the relentless efforts to shrink transistor sizes in line with Moore’s law, which saw processor speeds increase from 50/100 MHz to 2000/3000 MHz in little more than a decade. After 2006, when speed increases became impossible for thermal reasons, efforts moved to producing multi-core chips. However, HEP continued to profit. Since each physics event at colliders such as the LHC is independent of all the others, it was sufficient to split a job into multiple smaller jobs and run them across all the cores.

The HEP community was also lucky with software. Back in 1995 we had chosen Windows NT as the operating system, mainly because it supported multiprocessing, which significantly enhanced our price/performance. Physicists, however, insisted on Unix. In 1991, Linus Torvalds released Linux version 0.01 and it quickly gathered momentum as a worldwide open-source project. When release 2.0 appeared in 1996, multiprocessing support was included and the operating system was quickly adopted by our community.

Furthermore, HEP adopted the Grid concept to cope with the demands of the LHC. Thanks to projects such as Enabling Grids for E-science, we built the Worldwide LHC Computing Grid, which today handles more than two million tasks across one million PC cores every 24 hours. Although grid computing remained confined mainly to scientific users, the analogous concept of cloud computing had the same cementing effect across industry. Today, all the major cloud-computing providers overwhelmingly rely on PC servers.

In 1995 we had seen a glimmer, but we had no idea that the PC would remain an uncontested winner during a quarter of a century of scientific computing. The question is whether it will last for another quarter of a century.

The contenders

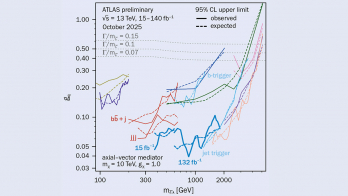

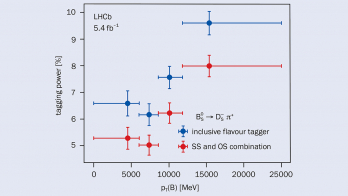

The end of CPU scaling, argued a recent report by the HEP Software Foundation, demands radical changes in computing and software to ensure the success of the LHC and other experiments into the 2020s and beyond. There are many contenders to replace the x86 PC architecture. One is graphics processors, where Intel, AMD and Nvidia are all active. A wilder guess is quantum computing, whereas a more conservative guess would be processors similar to the x86, but based on other architectures, such as ARM or RISC-V.

During the PC project we collaborated with Hewlett-Packard, which had a division in Grenoble, not too far away. Such R&D collaborations have been vital to CERN and the community since the beginning and they remain so today. They give us insight into forthcoming products and future plans, while our feedback can help to influence products still being planned. CERN openlab, which has been the focal point for such collaborations for two decades, coined the phrase “You make it, we break it” early on. However, whatever the future holds, it is fair to assume that PCs will remain the workhorse for HEP computing for many years to come.