Sonograms can be used to better understand human motor control.

Summary

Data sonification enters the biomedical field

Music and the life sciences have a great deal in common: both disciplines involve the concepts of cycles, periodicity, fluctuations, transitions and even, curiously, harmony. Using the technique of sonification, scientists are able to perceive and quantify the co-ordination of human body movements, improving our knowledge and understanding of motor control as a self-organised dynamical system that moves through stable and unstable states in response to changes in organismic, task and environmental constraints.

Image credit: Piotr Traczyk.

Resonances, periodicity, patterns and spectra are well-known notions that play crucial roles in particle physics, and that have always been at the junction between sound/music analysis and scientific exploration. Detecting the shape of a particular energy spectrum, studying the stability of a particle beam in a synchrotron, and separating signals from a noisy background are just a few examples where the connection with sound can be very strong, all sharing the same concepts of oscillations, cycles and frequency.

In 1619, Johannes Kepler published his Harmonices Mundi (the “harmonies of the world”), a monumental treatise linking music, geometry and astronomy. It was one of the first times that music, an artistic form, was presented as a global language able to describe relations between time, speed, repetitions and cycles.

The research we are conducting is based on the same ideas and principles: music is a structured language that enables us to examine and communicate periodicity, fluctuations, patterns and relations. Almost every notion in life sciences is linked with the idea of cycles, periodicity, fluctuations and transitions. These properties are naturally related to musical concepts such as pitch, timbre and modulation. In particular, vibrations and oscillations play a crucial role, both in life sciences and in music. Take, for example, the regulation of glucose in the body. Insulin is released by the pancreas, creating a periodic oscillation in blood insulin that is thought to stop the down-regulation of insulin receptors in target cells. Indeed, these oscillations in the metabolic process are so important that constant inputs of insulin can jeopardise the system.

Oscillations are also the most crucial concept in music. What we call “sound” is the perceived result of regular mechanical vibrations happening at characteristic frequencies (between 20 and 20,000 times per second). Our ears are naturally trained to recognise the shape of these oscillations, their stability or variability, the way they combine and their interactions. Concepts such as pitch, timbre, harmony, consonance and dissonance, so familiar to musicians, all have a formal description and characterisation that can be expressed in terms of oscillations and vibrations.

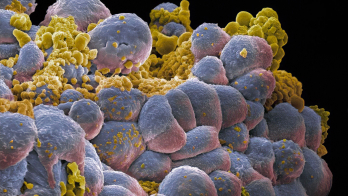

Many human movements are cyclic in nature. An important example is gait – the manner of walking or running. If we track the position of any point on the body in time, for example the shoulder or the knee, we would see it describing a regular, cyclic movement. If the gait is stable, as in walking at a constant speed, the frequency associated would be regular, with small variations due to the inherent variability of the system. By measuring, for example, the vertical displacement of the centre of each joint while walking or running, we would have a series of one-dimensional oscillating waveforms. The collection of these waveforms provides a representation of co-ordinated movement of the body. Studying their properties, such as phase relations, frequencies and amplitudes, then provides a way to investigate the order parameters that define modes of co-ordination.
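As a minimal sketch of how such properties might be extracted, the Python snippet below estimates the dominant frequency of one marker trace and the phase of a second trace relative to the first, using a Fourier transform. The two knee signals are synthetic stand-ins (a 1.5 Hz sine and its anti-phase copy, sampled at 100 Hz), and the function names are hypothetical; none of this is taken from the study itself.

```python
import numpy as np

def dominant_frequency(signal, fs):
    """Return the frequency (Hz) of the largest non-DC spectral peak."""
    centred = signal - np.mean(signal)
    spectrum = np.fft.rfft(centred)
    freqs = np.fft.rfftfreq(len(centred), d=1.0 / fs)
    peak = np.argmax(np.abs(spectrum[1:])) + 1  # skip the DC bin
    return freqs[peak]

def relative_phase(a, b, fs):
    """Phase of b relative to a (radians), read at a's dominant frequency."""
    spec_a = np.fft.rfft(a - np.mean(a))
    spec_b = np.fft.rfft(b - np.mean(b))
    peak = np.argmax(np.abs(spec_a[1:])) + 1
    return np.angle(spec_b[peak] * np.conj(spec_a[peak]))

# Synthetic stand-ins for vertical displacement (metres); not measured data.
fs = 100.0                      # assumed sampling frequency in Hz
t = np.arange(2000) / fs        # 20 s of samples
stride_hz = 1.5                 # assumed stride frequency
left_knee = 0.03 * np.sin(2 * np.pi * stride_hz * t)
right_knee = 0.03 * np.sin(2 * np.pi * stride_hz * t + np.pi)

f_knee = dominant_frequency(left_knee, fs)
dphi = relative_phase(left_knee, right_knee, fs)
print(f"dominant frequency: {f_knee:.2f} Hz, relative phase: {abs(dphi):.2f} rad")
```

With real recordings the peak would be broadened by the variability of the gait, so a single number per joint is only a first summary.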

Previous methods of examining the relation between components of the body have included statistical techniques such as principal-component analysis, or analysis of coupled oscillators through vector coding or continuous relative phase. However, statistical techniques discard data, so small variations due to the inherent variability of the system are ignored, while coupled-oscillator analysis can cope with only two components of the co-ordination at a time.

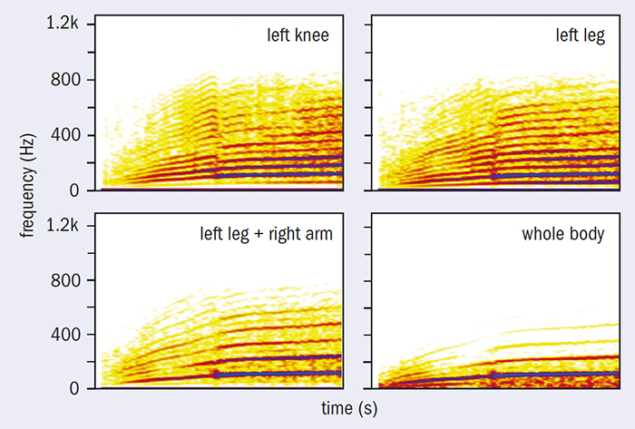

Sonograms to study body movements

Our approach is based on the idea of analysing the waveforms and their relations by translating them into audible signals and using the natural capability of the ear to distinguish, characterise and analyse waveform shapes, amplitudes and relations. This process is called data sonification, and one of the main tools to investigate the structure of the sound is the sonogram (sometimes also called a spectrogram). A sonogram is a visual representation of how the spectrum of a sound signal changes with time, and we can use sonograms to examine the phase relations between a large collection of variables without having to reduce the data. Spectral analysis is a particularly relevant tool in many scientific disciplines, for example in high-energy physics, where the interest lies in energy spectra, pattern and anomaly detection, and phase transitions.

Using a sonogram to examine the movement of multiple markers on the body in the frequency domain, we can obtain an individual and situation-specific representation of co-ordination between the major limbs. Components at the same frequency cancel when they are in anti-phase and reinforce each other when they are in phase, while a certain degree of variability in the phase of the oscillation spreads a spectral line into a band of frequencies. The resulting spectrogram therefore represents the co-ordination within the system.
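The cancellation and enhancement can be made explicit with a standard trigonometric identity. As an illustration only, assume two markers oscillate at the same angular frequency ω with equal amplitude A and a phase offset φ (these symbols are introduced here and do not come from the study):

```latex
A\sin(\omega t) + A\sin(\omega t + \varphi)
  = 2A\cos\!\left(\frac{\varphi}{2}\right)\sin\!\left(\omega t + \frac{\varphi}{2}\right)
```

For φ = 0 (in phase) the combined amplitude is 2A, and for φ = π (anti-phase) it is zero, so the spectral line disappears. If φ drifts over time, the factor cos(φ/2) modulates the amplitude, which is one way a sharp line can spread into a band.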

Image credit: Domenico Vicinanza and Genevieve Williams.

In our study, we can see exactly this. A participant ran on a treadmill that accelerated from 0 to 18 km/h over two minutes. A motion-analysis system was used to collect 3D kinematic data from 24 markers placed bilaterally on the head, neck, shoulders, elbows, wrists, hands, pelvis, hips, knees, heels, ankles and toes of the participant (sampling frequency 100 Hz, trial length 120 s). Individual and combined sensor measurements were resampled to generate audible waveforms. Sonograms were then computed using moving-frequency Hanning analysis windows for all of the sound signals computed for each marker and combination of markers.
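The processing chain can be sketched in Python as follows. This is a hedged illustration, not the pipeline used in the study: it assumes the simplest form of audification (replaying the 100 Hz samples at a hypothetical audio rate of 44,100 Hz, which multiplies every frequency by 441), a plain sliding Hann window of 1,024 samples with a hop of 256, and a synthetic trace whose stride frequency rises from 1 Hz to 3 Hz as a stand-in for the accelerating treadmill.

```python
import numpy as np

def audify(samples, target_peak=0.9):
    """Centre and normalise a marker trace so it can be played as audio."""
    centred = samples - np.mean(samples)
    peak = np.max(np.abs(centred))
    return centred if peak == 0 else centred * (target_peak / peak)

def sonogram(audio, rate, window_len=1024, hop=256):
    """Magnitude spectrogram computed with sliding Hann (Hanning) windows."""
    window = np.hanning(window_len)
    n_frames = 1 + (len(audio) - window_len) // hop
    frames = np.stack([audio[i * hop:i * hop + window_len] * window
                       for i in range(n_frames)])
    magnitudes = np.abs(np.fft.rfft(frames, axis=1))
    freqs = np.fft.rfftfreq(window_len, d=1.0 / rate)
    times = (np.arange(n_frames) * hop + window_len / 2) / rate
    return freqs, times, magnitudes

fs = 100.0                 # motion-capture sampling frequency (Hz)
playback_rate = 44100.0    # assumed audio rate; not stated in the article
speedup = playback_rate / fs

# Synthetic marker trace: stride frequency sweeps linearly from 1 Hz to 3 Hz.
t = np.arange(12000) / fs                      # 120 s trial
phase = 2 * np.pi * (t + t ** 2 / 120.0)       # integral of f(t) = 1 + t/60
marker = 0.03 * np.sin(phase)

audio = audify(marker)
freqs, times, magnitudes = sonogram(audio, playback_rate)
peak_track = freqs[np.argmax(magnitudes, axis=1)]  # strongest line per frame
print(f"speed-up factor: {speedup:.0f}")
print(f"peak frequency: {peak_track[0]:.0f} Hz -> {peak_track[-1]:.0f} Hz")
```

Under these assumptions a 1.5 Hz stride would be heard at about 660 Hz, and the rising line in `peak_track` mirrors the increase in stride frequency. A lower playback rate would give a longer, lower-pitched sound from the same data.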

Sonification of individual and combined markers is shown in the figure. Sonification of an individual marker placed on the left knee (top left) shows the frequencies underpinning the marker movement on that particular joint-centre. By combining the markers, say of a whole limb such as the leg, we can examine the relations of single markers, through the cancellation and enhancement of the frequencies involved. The result will show some spectral lines strengthening, others disappearing and others stretching to become bands (top right). The nature of the collective movements and oscillations that underpin the mechanics of an arm or a leg moving regularly during the gait can then be analysed through the sound generated by the superposition of the relative waveforms.

A particularly interesting case appears when we combine audifications of marker signals coming from opposing limbs, for example left leg/right arm or right leg/left arm. The sonogram at the bottom left of the figure represents the frequency content of the oscillations from the combined sensors on the left leg and the right arm (summing signals in this way is called additive synthesis in audio engineering). If we compare the sonogram of the left leg alone (top right) with that of the combination with the opposing arm, we can see that some spectral lines disappear from the spectrum because of the phase opposition between some of the markers, for example the left knee and the right elbow, or the left ankle and the right hand.

The final result of this cancellation is a globally simpler dynamical system, described by a smaller number of frequencies. The frequencies themselves, their sharpness (variability) and the point of transition provide key information about the system. In addition, we are able to observe and hear the phase transition between the walking and running states, indicating that our technique is suitable for examining these order-parameter states. By examining movement in the frequency domain, we obtain an individual and situation-specific representation of co-ordination between the major limbs.

Sonification of movement as audio feedback

Sonification, as in the example above, does not require data reduction. It can provide us with unique ways of quantifying and perceiving co-ordination in human movement, contributing to our knowledge and understanding of motor control as a self-organised dynamical system that moves through stable and unstable states in response to changes in organismic, task and environmental constraints. For example, the specific measurement described above is a tool to increase our understanding of the adaptability of human motor control to something like a prosthetic limb. The application of this technique will aid diagnosis and tracking of pathological and perturbed gait, for example highlighting key changes in gait with ageing or leg surgeries.

In addition, we can also use sonification of movements as a novel form of audio feedback. Movement is key to healthy ageing and recovery from injuries or even pathologies. Physiotherapists and practitioners prescribe exercises that take the human body through certain movements, creating certain forces. The precise execution of these exercises is fundamental to the expected benefits, and while this is possible under the watchful eye of the physiotherapist, it can be difficult to achieve when alone at home.

In precisely executing exercises, there are three main challenges. First, there is the patient’s memory of what the correct movement or exercise should look like. Second, there is the ability of the patient to execute correctly the movement that they are required to do, working the right muscles to move the joints and limbs through the correct space, over the right amount of time or with an appropriate amount of force. Last, there is the challenge of finding the motivation to perform exercises that can be painful, strenuous or boring, sometimes many times a day.

Sonification can provide not only real-time audio feedback but also elements of feed-forward, which provides a quantitative reference for the correct execution of movements. This means that the patient has access to a map of the correct movements through real-time feedback, enabling them to perform correctly. And let’s not forget about motivation. Through sonification, in response to the movements, the patient can generate not only waveforms but also melodies and sounds that are pleasing.
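One way such feedback could work is sketched below in Python. It is purely illustrative and not a description of any existing system: a joint angle is mapped exponentially to pitch, the reference angle gives the feed-forward tone the patient should match, and the measured angle gives the feedback tone. The angle range (0 to 120 degrees), the base pitch (220 Hz), the two-octave span and the function names are all assumptions.

```python
import math

def angle_to_frequency(angle_deg, min_angle=0.0, max_angle=120.0,
                       low_hz=220.0, octaves=2.0):
    """Map a joint angle to a pitch in Hz.

    The mapping is exponential, so equal steps in angle are heard as
    equal musical intervals. Angles outside the range are clamped.
    """
    clamped = min(max(angle_deg, min_angle), max_angle)
    fraction = (clamped - min_angle) / (max_angle - min_angle)
    return low_hz * 2.0 ** (octaves * fraction)

def feedback_tones(measured_deg, reference_deg):
    """Return (reference pitch, measured pitch, error in cents)."""
    ref_hz = angle_to_frequency(reference_deg)
    meas_hz = angle_to_frequency(measured_deg)
    cents = 1200.0 * math.log2(meas_hz / ref_hz)
    return ref_hz, meas_hz, cents

# Hypothetical example: the target is 60 degrees, the patient reaches 66.
ref_hz, meas_hz, cents = feedback_tones(measured_deg=66.0, reference_deg=60.0)
print(f"target {ref_hz:.1f} Hz, played {meas_hz:.1f} Hz, error {cents:.0f} cents")
```

With this choice of range, one degree of error corresponds to 20 cents, so a deviation of a few degrees is heard as a clearly mistuned interval against the reference tone.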

Another possible application of generating melodies associated with movement is in the artistic domain. Accelerometers, vibration sensors and gyroscopes can turn gestures into melodic lines and harmonies. The demo organised during the public talk of the International Conference on Translational Research in Radio-Oncology – Physics for Health in Europe (ICTR-PHE), on 16 February in Geneva, was based on that principle. Using accelerometers connected to the arm of a flute player, we could generate melodies related to the movements naturally occurring when playing, in a sort of duet between the flute and the flutist. Art and science, music and movement, seem to be linked in a natural but profound way by a multitude of different threads, and technology keeps providing the right tools to continue the investigation, just as Kepler did four centuries ago.