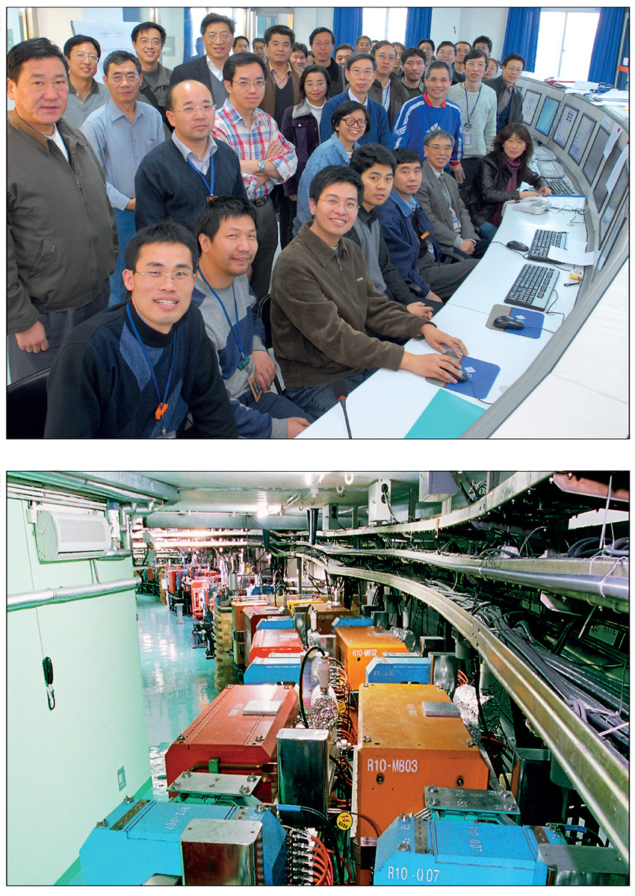

It’s 12 months since the International Center for Quantum-field Measurement Systems for Studies of the Universe and Particles (or QUP for short) was unveiled as the latest addition to the World Premier International Research Center Initiative (WPI) run by Japan’s Ministry of Education, Culture, Sports, Science and Technology (MEXT). Based in Tsukuba City, 60 km north-east of Tokyo, the new centre represents a high-profile addition to the scientific powerhouse that is KEK, Japan’s High Energy Accelerator Research Organisation.

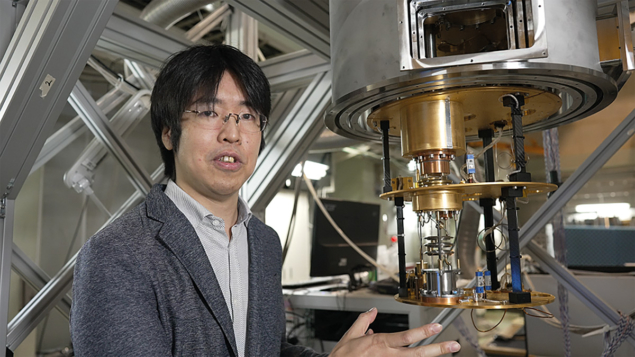

Within KEK, QUP has since assumed its place alongside the organisation’s other flagship research laboratories – among them the Institute of Particle and Nuclear Studies (IPNS), the Accelerator Laboratory (ACCL) and the Japan Proton Accelerator Research Complex (J-PARC) – in order to ensure that the outcomes of its ambitious research endeavours dovetail with, and reinforce, KEK’s core discovery programme in elementary particle physics. Here QUP director Masashi Hazumi tells CERN Courier about the centre’s progress to date and the opportunities for talented early-career scientists prepared to take risks and look beyond the comfort zone of their core disciplinary specialisms.

How would you pitch QUP to a talented postdoc thinking about the next big career move?

QUP’s mission is to “bring new eyes to humanity” by inventing advanced measurement systems – novel electronic and quantum detectors that will unlock exciting discoveries in cosmology and particle physics. Examples include superconducting detectors to study cosmic inflation (for the LiteBIRD space mission) and low-temperature quasiparticle detection systems to search for “light dark matter”. We are also keen on applying our unique capabilities to broader academic fields and industrial and societal applications – a case in point being our close engagement with Toyota Central R&D Laboratories.

In this way, QUP will return to the essence of physics, conducting interdisciplinary research to develop new methodologies while integrating particle physics, astrophysics, condensed-matter physics, measurement science, and systems science. QUP’s inventions and innovations will exploit the most fundamental object in nature – the quantum field – and thereby open up a new era in measurement science: quantum-field measurement systemology.

What sets the QUP approach apart from other quantum measurement centres?

QUP’s strength lies in the breadth of technologies covered and the ability to move seamlessly from studies of fundamental physics to the execution of large-scale projects on next-generation scientific instruments and quantum technologies. The aim is simple: to create a cross-disciplinary “melting pot” that encourages a fusion of ideas across diverse fields of science, technology and engineering. As such, we’re recruiting a team of “multidisciplinarians” – scientists who can apply their domain knowledge and expertise creatively and flexibly across subject boundaries.

A good example is the quantum diamond sensor – an enabling technology that exploits so-called NV defects in the carbon lattice – which QUP is developing to support precise temperature measurements of instrumentation (down to the 1 mK level at room temperature) in future studies of the cosmic microwave background (CMB). Notably, that same quantum sensing technology is also attracting early-stage interest from our colleagues at Toyota, with QUP particle physicists and industrial scientists openly sharing ideas. Unanticipated connections like this can create intriguing opportunities for young scientists, opening up new research pathways and long-term career opportunities.

How important is QUP’s positioning as part of the KEK research organisation?

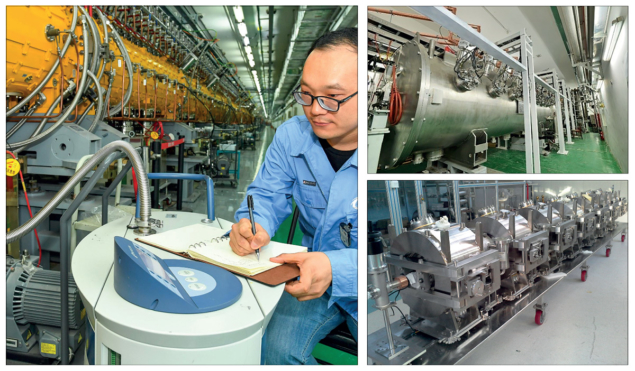

QUP is unique among Japan’s WPI programmes because it is the only centre focused on measurement systems, while the integration within KEK means collaboration is hard-wired into our working model. We are already establishing networks to link QUP scientists with researchers across KEK’s accelerator facilities and laboratories. An early success story is the QUP/KEK machine-learning research cluster, which is exploiting AI in a range of high-energy physics contexts and industrial applications (e.g. autonomous vehicles).

Presumably that collaborative, open mindset extends beyond KEK?

Correct. We are in the process of establishing three satellite sites for QUP – at Toyota Central R&D Laboratories in Aichi; the Japan Aerospace Exploration Agency (JAXA) Institute of Space and Astronautical Science (ISAS) in Kanagawa; and the University of California, Berkeley, in the US. These activities are already bearing fruit: Hideo Iizuka, a senior scientist at Toyota and one of our principal investigators at QUP, is developing applications of the Casimir effect (the attractive force between two surfaces in a vacuum), with a long-term goal of demonstrating a zero-friction shaft bearing for energy-efficient vehicles.

You highlighted the LiteBIRD space mission earlier. What is QUP’s role in LiteBIRD?

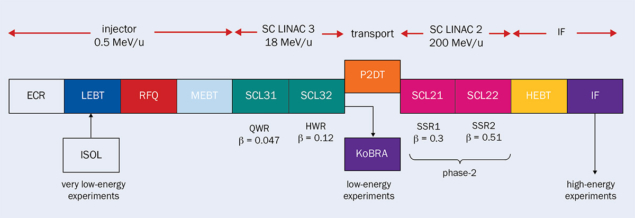

One of QUP’s flagship projects centres around the contribution we’re making to the JAXA LiteBIRD space mission, which will study aspects of primordial cosmology and fundamental physics. I am one of the founders of LiteBIRD, an international, large-class mission with an expected launch date in the late 2020s using JAXA’s H3 rocket. When deployed, LiteBIRD will orbit the Sun–Earth Lagrangian point L2, where it will map CMB polarisation over the entire sky for three years. The primary scientific objective is to search for the signal from cosmic inflation, either making a discovery or ruling out well-motivated inflationary models of the universe. LiteBIRD will also provide insights into the quantum nature of gravity and new physics beyond the standard models of particle physics and cosmology. The focus of QUP’s contribution is development of the superconducting detector subsystem for LiteBIRD’s low-frequency telescope.

Project Q is another of QUP’s flagship initiatives. What is it?

Project Q is still a work in progress. Essentially, we are putting together a framework to invent and develop a novel system for the measurement of a new quantum field. Last month, as part of the requirements-gathering exercise, QUP and the KEK Theory Center jointly organised a dedicated workshop called “Toward Project Q”. The hybrid event attracted 91 participants – a mix of QUP staff and international colleagues – who shared a range of ideas on potential lines of enquiry for Project Q, including cryogenic measurements, space missions, accelerator and non-accelerator experiments, and the use of novel quantum sensors. Watch this space.

What are your near-term priorities as director of QUP?

My number-one priority is to hire the best researchers and position QUP for long-term scientific success. The open nature of Project Q represents a useful conversation-starter in this regard. We have 27 scientists on the staff just now and the plan is to grow the research team to about 70 people by early 2024 – a cohort that will ultimately comprise around 15 principal investigators supported by a team of research professors and postdocs. WPI guidelines also state that 30% or more of the QUP team should eventually come from abroad. When it comes to recruitment, I’m looking for scientists who are enterprising, creative and not afraid to take risks. I like that sort of spirit, and there may well be some candidates who fit the profile among the CERN Courier readership! If you go big, the success rate may not be high, but unexpected insights and opportunities will often emerge.

QUP in brief

QUP is the fourteenth, and latest, addition to Japan’s WPI programme, a long-running, government-backed initiative to attract the “brightest and best” scientific talent from around the world, creating a network of highly visible research centres focused on grand challenges in the physical sciences, life sciences and applied R&D. Other WPI research centres specialising in the physical sciences include: the Kavli Institute for the Physics and Mathematics of the Universe (University of Tokyo); the Advanced Institute for Materials Research (Tohoku University); and the International Center for Materials Nanoarchitectonics (part of the National Institute for Materials Science).

Right now, QUP’s research priorities cover the following themes:

- Development and implementation of the superconducting detector array for the LiteBIRD CMB space mission.

- The invention of methods (e.g. those using quasiparticles) for measuring novel quantum fields (e.g. axions); also the proposal and promotion of new projects based on these methods (Project Q).

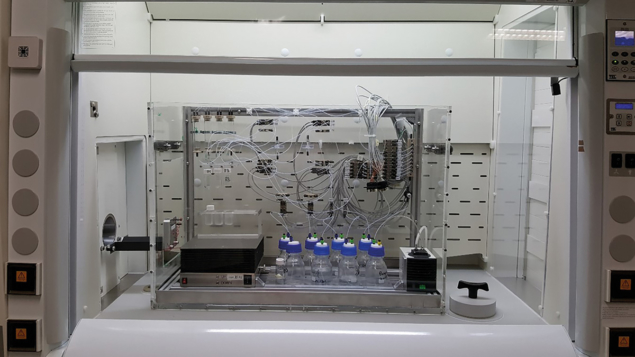

- The invention of a new generation of low-temperature detectors, quantum detectors and radiation-hard detectors.

- Pioneering the most efficient means for large-scale projects in basic science (e.g. automated ASIC design) and modelling these approaches based on current/idealised best practice (establishing “systemology”).

- Applications with industrial and societal implications (e.g. autonomous driving and smart cities).

Axel Lindner worked in accelerator-based particle physics, astroparticle physics and management before turning to WISP searches in 2007 as the spokesperson of the ALPS I experiment. Since 2018 he has led a new experimental group at DESY in Hamburg in charge of realising non-accelerator-based particle-physics experiments on-site. Axel has been a member of the MADMAX and IAXO collaborations, and spokesperson of ALPS II since 2012.
