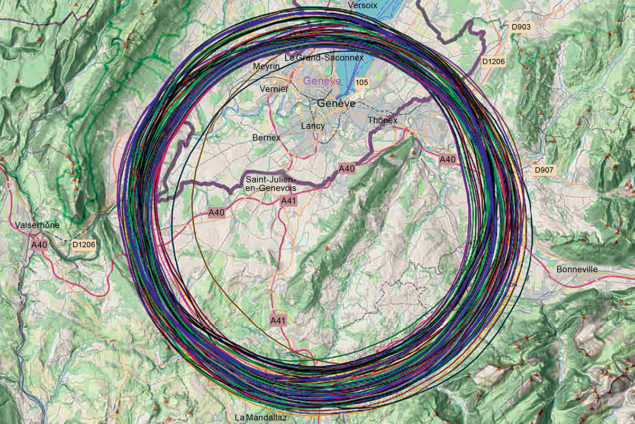

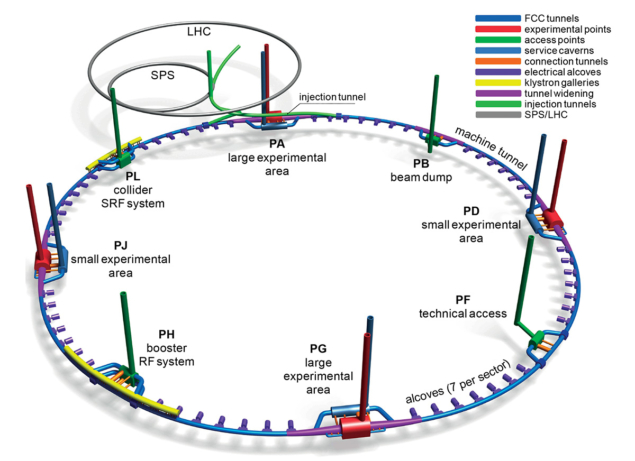

CERN has been burrowing beneath the French–Swiss border for half a century. Its first major underground project was the 7 km-circumference Super Proton Synchrotron (SPS), constructed at a depth of around 40 m by a single tunnel boring machine (TBM). This was followed by the Large Electron–Positron (LEP) collider at an average depth of around 100 m, whose 27 km tunnel was constructed between 1983 and 1989 using three TBMs, with a more traditional “drill and blast” method used for the sector closest to the Jura mountain range. With a circumference of 90.7 km, weaving through the molasse and limestone beneath Lake Geneva and around Mont Salève, the tunnel for the proposed Future Circular Collider (FCC) would be the largest ever constructed at CERN and a major global civil-engineering project in its own right.

Should the FCC be approved, civil engineering will be the first major on-site activity. The mid-term review of the FCC feasibility study schedules ground-breaking for the first shafts in 2033, after which it would take six to eight years for each underground sector to become available for the installation of the technical infrastructure, the machine and the experiments.

Evolving engineering

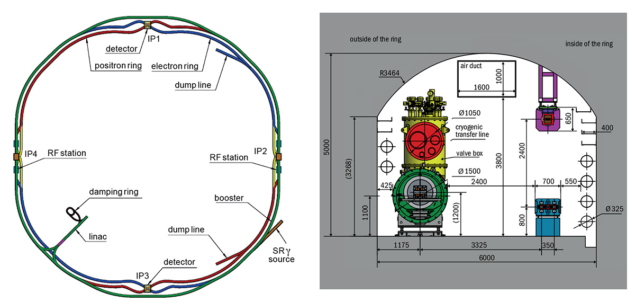

Since the completion of the FCC conceptual design report in 2018, several significant changes have been made to the civil-engineering design. These include a 7 km reduction in the overall circumference of the main tunnel, a reduction in the number of surface sites from 12 to eight, and a reduction in the number of permanent shafts from 18 to 12 (two at each of the four experiment sites and one at each of the four technical sites). A temporary shaft will also be required to construct the transfer tunnel connecting the injection system to the FCC tunnel, although it may be possible to reuse an existing but unused shaft for this purpose. Additional underground civil engineering will also be needed for the RF systems. The diameter of the main tunnel (5.5 m) and its inclination (0.5%) remain unchanged; along this inclined plane, the tunnel depth varies between approximately 50 m, where it passes under the Rhône River, and 500 m, where it passes beneath the Borne plateau on the eastern side of the study site.

The tunnel would be constructed using up to eight TBMs, which excavate the rock and install the tunnel lining in a single pass. Desktop studies indicate that the geology along most of the underground works would be favourable, since the molasse rock is usually watertight and can easily be supported using a range of standard rock-support measures. The main beam tunnel will, however, need to pass through about 4.4 km of limestone, where the drill-and-blast method may be required. These geological assumptions must be confirmed by a major in situ site-investigation campaign planned for 2024–2025.

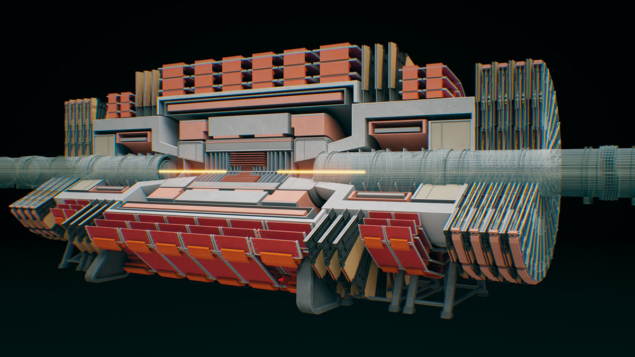

Two sizes of experiment cavern complex are envisaged, serving both the lepton and hadron stages of the FCC. One includes a 35 × 35 × 66 m cavern to house the largest planned FCC-hh detector (similar to the existing ATLAS cavern), and the other a 25 × 25 × 66 m cavern to house the smaller FCC-ee detectors (similar to the CMS cavern). A second cavern, 25 m wide and up to 100 m long, would be required at each experiment site to house technical infrastructure, while a 50 m-thick rock pillar between the detector and service caverns would provide electromagnetic shielding from the detector and ensure the overall structural stability of the cavern complex. Numerous smaller caverns, interconnecting tunnels and galleries will be required to link the main structures; these are expected to be excavated using road-header machines or rock breakers.

Under a collaboration agreement with Fermilab, conceptual layouts have been prepared for two of the eight new surface sites, and studies have been undertaken of the experiment site at Ferney-Voltaire in France and the technical site at Choulex/Presinge in Switzerland. The requirements for the remaining surface sites will be developed into preliminary designs in the second half of the feasibility study. In addition, several locations have been investigated for a new high-energy linac, proposed as an alternative to using the SPS as a pre-injector for the FCC; the most promising site lies close to the existing CERN Prévessin site.

Feasibility campaign

As an essential step in demonstrating the feasibility of the underground civil-engineering works for the FCC, CERN has been working with international consultants and the University of Geneva to develop a 3D geological model using information gathered from previous borehole and geophysical investigations. To refine this model, a targeted campaign of subsurface investigations, combining geophysical analyses with deep-borehole drilling, has been planned in the areas of highest geological uncertainty. The campaign, which is currently being tendered to specialist companies, will begin in 2024 and continue into 2025 so that the results are available before the end of the feasibility study. About 30 boreholes will be drilled and used in conjunction with 80 km of seismic lines to establish the position of the molasse rock, in particular under Lake Geneva and the Rhône River, and in areas where limestone formations may lie close to the planned tunnel horizon.

On the surface, there is scope for staging the construction of buildings. Buildings required only for the FCC-hh phase would be postponed, but the land they need would be reserved and included within the overall site perimeter. Buried networks, roads and technical galleries would be designed and constructed so that they can later be extended to accommodate the FCC-hh structures.

With around 15 million tonnes of rock and soil to be excavated, sustainability is a major focus of the FCC civil-engineering studies. To this end, in the framework of the European Union co-funded FCC Innovation Study, CERN and the University of Leoben launched an international challenge-based competition, “Mining the Future”, in 2021 to identify credible and innovative ways to reuse the molasse. The outcomes of the competition include using limestone for concrete production and for stabilising constructions within the project, reusing excavated material to back-fill quarries and mines, transforming sterile molasse into fertile soil for agriculture and forestry, producing bricks from compressed molasse, and developing novel construction materials containing molasse for use in the project where technically suitable. The next step is to implement a pilot “Open Sky Laboratory” to demonstrate the separation techniques of the winning consortium (led by BG engineering), and to collaborate with CERN’s host states and other stakeholders to identify suitable locations for its use. In addition, the FCC feasibility study is working towards a full assessment aimed at minimising the carbon footprint of construction.

The civil-engineering plans for the FCC have been presented several times to the global tunnelling community, most recently at the 2023 World Tunnel Congress in Athens. The scale and technical complexity of the project are attracting considerable interest from designers and contractors, and have even prompted a dedicated visit to CERN by the executive committee of the International Tunnelling Association, a sign of the significant progress that has been made.