For David P Anderson, project leader of SETI@home, the future of scientific computing is public.

Most of the world’s computing power is no longer concentrated in supercomputer centres and machine rooms. Instead it is distributed around the world in hundreds of millions of PCs and game consoles, of which a growing fraction are connected to the Internet.

A new computing paradigm, “public-resource computing”, uses these resources to perform scientific supercomputing. This enables previously infeasible research and has social implications as well: it catalyses global communities centred on common interests and goals; it encourages public awareness of current scientific research; and it gives the public a measure of control over the direction of scientific progress.

The number of Internet-connected PCs is growing and is projected to reach 1 billion by 2015. Together they could provide 10¹⁵ floating point operations per second (FLOPS) of power. The potential for distributed disk storage is also huge.

Public-resource computing emerged in the mid-1990s with two projects: GIMPS (looking for large prime numbers) and Distributed.net (solving cryptographic codes). In 1999 our group launched a third project, SETI@home, which searches radiotelescope data for signs of extraterrestrial intelligence. The appeal of this challenge extended beyond hobbyists; it attracted millions of participants worldwide and inspired a number of other academic projects as well as efforts to commercialize the paradigm. SETI@home currently runs on about 1 million PCs, providing a processing rate of more than 60 teraFLOPS. In contrast, the largest conventional supercomputer, the NEC Earth Simulator, offers in the region of 35 teraFLOPS.
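The figures above imply a modest average contribution per machine, which is the whole point of the paradigm: many small contributions add up. A quick back-of-the-envelope check, using only the approximate numbers quoted in the text (the constant and function names are illustrative):

```python
# Approximate figures quoted in the text.
SETI_HOSTS = 1_000_000          # about 1 million PCs
SETI_FLOPS = 60e12              # more than 60 teraFLOPS in aggregate
EARTH_SIMULATOR_FLOPS = 35e12   # about 35 teraFLOPS


def mean_rate_per_host(total_flops: float, hosts: int) -> float:
    """Aggregate rate averaged over all participating hosts, in FLOPS."""
    return total_flops / hosts


per_host = mean_rate_per_host(SETI_FLOPS, SETI_HOSTS)
ratio = SETI_FLOPS / EARTH_SIMULATOR_FLOPS

print(f"average per host: {per_host / 1e6:.0f} megaFLOPS")   # 60 megaFLOPS
print(f"SETI@home / Earth Simulator: {ratio:.2f}x")          # 1.71x
```

Since the aggregate is a lower bound ("more than 60 teraFLOPS"), the per-host figure is an average lower bound too, not a measurement of any individual PC.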

Public-resource computing is effective only if many people participate, and that depends on publicity. For example, SETI@home has received coverage in the mass media and in Internet news forums like Slashdot. This, together with its screensaver graphics, seeded a large-scale “viral marketing” effect.

Retaining participants requires an understanding of their motivations. A poll of SETI@home users showed that many are interested in the science, so we developed Web-based educational material and regular scientific news. Another key factor is “credit” – a numerical measure of work accomplished. SETI@home provides website “leader boards” where users are listed in order of their credit.
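A leader board is essentially a sort by credit. The sketch below is a minimal illustration under assumed names (`Participant`, `leader_board` are hypothetical); it does not reflect how SETI@home actually computes or stores credit. Tied participants share a rank, a choice made here so that equal work is shown as equal standing.

```python
from dataclasses import dataclass


@dataclass
class Participant:
    name: str
    credit: float  # numerical measure of work accomplished


def leader_board(participants: list[Participant], top: int = 10) -> list[tuple[int, str, float]]:
    """Return (rank, name, credit) rows, highest credit first.

    Ties share a rank (competition ranking: 1, 2, 2, 4).
    """
    ordered = sorted(participants, key=lambda p: p.credit, reverse=True)
    rows: list[tuple[int, str, float]] = []
    for i, p in enumerate(ordered[:top]):
        if i > 0 and p.credit == ordered[i - 1].credit:
            rank = rows[-1][0]  # same credit as the previous row: reuse its rank
        else:
            rank = i + 1
        rows.append((rank, p.name, p.credit))
    return rows


users = [
    Participant("alice", 1200.0),
    Participant("bob", 3400.5),
    Participant("carol", 1200.0),
    Participant("dave", 50.0),
]
for row in leader_board(users):
    print(row)
```

Because Python's `sorted` is stable, tied participants keep their original relative order.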

SETI@home participants contribute more than just CPU time. Some have translated the SETI@home website into 30 languages, and developed add-on software and ancillary websites. It is important to provide channels for these contributions. Various communities have formed around SETI@home. A single, worldwide community interacts through the website and its message boards. Meanwhile, national and language-specific communities have their own websites and message boards. These have been particularly effective in recruiting new participants.

All the world’s a computer

We are developing software called BOINC (Berkeley Open Infrastructure for Network Computing), which facilitates creating and operating public-resource computing projects. Several BOINC-based projects are in progress, including SETI@home, Folding@home and Climateprediction.net. BOINC participants can register with multiple projects and can control how their resources are shared. For example, a user might devote 60% of his CPU time to studying global warming and 40% to SETI.
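One simple way to honour a user's resource shares is to hand out CPU time in slices, each time giving the next slice to the project that is furthest behind its entitled fraction. The sketch below is a hypothetical illustration of that idea, not BOINC's actual client scheduler; the project names and the `schedule` function are assumptions.

```python
def schedule(shares: dict[str, float], slices: int) -> dict[str, int]:
    """Assign `slices` equal CPU-time slices to projects in proportion to `shares`.

    Before each slice, a project's deficit is the time it is entitled to so far
    minus the slices it has already received; the largest deficit wins.
    """
    total = sum(shares.values())
    if total <= 0:
        raise ValueError("at least one project needs a positive share")
    used = {project: 0 for project in shares}
    for n in range(1, slices + 1):
        def deficit(project: str) -> float:
            return n * shares[project] / total - used[project]

        chosen = max(shares, key=deficit)
        used[chosen] += 1
    return used


# The example from the text: 60% to global warming, 40% to SETI.
print(schedule({"climate": 60, "seti": 40}, 10))
```

Over any run of slices, each project's received time stays close to its entitled share, so short-term interleaving is fair as well as the long-run totals.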

We hope that BOINC will stimulate public interest in scientific research. Because computer owners can donate their resources to any of a number of projects, they have reason to study and evaluate those projects, learning about their goals, methods and chances of success. Further, control over resource allocation for scientific research will shift slightly from government funding agencies to the public. This offers a uniquely direct and democratic influence on the directions of scientific research.

What other computational projects are amenable to public-resource computing? The task must be divisible into independent parts whose ratio of computation to data is fairly high (or the cost of Internet data transfer may exceed the cost of doing the computation centrally). Also, the code needed to run the task should be stable over time and require a minimal computational environment.
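The data-transfer criterion can be made concrete. Assuming, for illustration, that volunteers donate computation for free while the project pays for bandwidth, distributing a work unit is worthwhile only when its FLOPs-per-byte ratio exceeds the ratio of the cost of a byte transferred to the cost of a FLOP computed centrally. The `WorkUnit` type, the function names and the prices in the example are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class WorkUnit:
    flops: float         # floating point operations needed to process the unit
    input_bytes: float   # data sent to the volunteer host
    output_bytes: float  # result returned to the project server


def compute_to_data_ratio(wu: WorkUnit) -> float:
    """FLOPs performed per byte moved over the Internet."""
    return wu.flops / (wu.input_bytes + wu.output_bytes)


def break_even_ratio(cost_per_byte: float, cost_per_flop: float) -> float:
    """Ratio at which transfer cost equals the cost of computing centrally."""
    return cost_per_byte / cost_per_flop


def worth_distributing(wu: WorkUnit, cost_per_byte: float, cost_per_flop: float) -> bool:
    """True when shipping the unit costs less than computing it in-house.

    Equivalent to (input + output) * cost_per_byte < flops * cost_per_flop.
    """
    return compute_to_data_ratio(wu) > break_even_ratio(cost_per_byte, cost_per_flop)


# Hypothetical prices and work units, for illustration only.
COST_PER_BYTE = 1e-9   # currency units per byte transferred
COST_PER_FLOP = 1e-16  # currency units per FLOP computed centrally

cpu_heavy = WorkUnit(flops=1e13, input_bytes=4e5, output_bytes=1e3)
data_heavy = WorkUnit(flops=1e9, input_bytes=1e8, output_bytes=0.0)
print(worth_distributing(cpu_heavy, COST_PER_BYTE, COST_PER_FLOP))   # True
print(worth_distributing(data_heavy, COST_PER_BYTE, COST_PER_FLOP))  # False
```

With these assumed prices the break-even point is 10⁷ FLOPs per byte; a real project would substitute its own bandwidth and hardware costs.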

Climateprediction.net is a recent example of such a project. Models of complex physical systems, such as global climate, are often chaotic. Studying their statistics requires large numbers of independent simulations with different boundary conditions.
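Why ensembles of chaotic runs suit public-resource computing can be seen with a toy model. The sketch below uses the logistic map, a textbook chaotic system, as a stand-in for a climate model, and perturbs the starting value as a stand-in for the varied conditions of real runs; the function names and parameters are assumptions. Tiny differences grow until individual outcomes are unpredictable, so only ensemble statistics are meaningful, and every member is independent and could be a separate work unit.

```python
import random
import statistics


def logistic_run(x0: float, r: float = 3.9, steps: int = 1000) -> float:
    """One toy 'model run': iterate the chaotic logistic map from x0."""
    x = x0
    for _ in range(steps):
        x = r * x * (1.0 - x)
    return x


def ensemble(n_members: int, base_x0: float = 0.5,
             spread: float = 1e-6, seed: int = 0) -> list[float]:
    """Run independent members whose starting values differ by at most `spread`."""
    rng = random.Random(seed)
    # No member depends on any other, so each could run on a different volunteer PC.
    return [logistic_run(base_x0 + rng.uniform(-spread, spread))
            for _ in range(n_members)]


results = ensemble(500)
print(f"mean={statistics.mean(results):.3f} stdev={statistics.stdev(results):.3f}")
```

Even though the starting values differ by at most one part in a million, the final states spread across the map's whole range, which is why many independent runs are needed.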

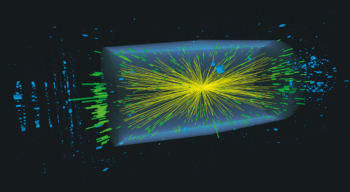

CPU-intensive data-processing applications, such as the analysis of radiotelescope data, are good candidates. Some high-energy physics applications are also amenable to public-resource computing: CERN has been testing BOINC in-house to simulate particle orbits in the LHC. Other possibilities include biomedical applications, such as virtual drug design and gene-sequence analysis; Folding@home, from Stanford University, is an early pioneer in this area.

In the long run, the inexorable march of Moore’s law, together with growing PC storage capacity and increasing Internet bandwidth for home computers, means that public-resource computing should improve both qualitatively and quantitatively. This should open an ever-widening range of opportunities for this new paradigm in scientific computing.