Long the preserve of professional engineers coding in low-level languages, FPGAs can now be programmed in C++ and Java, bringing machine learning and complex algorithms within the scope of trigger-level analysis.

Teeming with radiation and data, the heart of a hadron collider is an inhospitable environment in which to make a tricky decision. Nevertheless, the LHC experiment detectors have only microseconds after each proton–proton collision to make their most critical analysis call: whether to read out the detector or reject the event forever. As a result of limitations in read-out bandwidth, only 0.002% of the terabits per second of data generated by the detectors can be saved for use in physics analyses. Boosts in energy and luminosity – and the accompanying surge in the complexity of the data from the high-luminosity LHC upgrade – mean that the technical challenge is growing rapidly. New techniques are therefore needed to ensure that decisions are made with speed, precision and flexibility so that the subsequent physics measurements are as sharp as possible.

The front-end and read-out systems of most collider detectors include many application-specific integrated circuits (ASICs). These custom-designed chips digitise signals at the interface between the detector and the outside world. The algorithms are baked into silicon at the foundries of some of the biggest companies in the world, with limited prospects for changing their functionality in the light of changing conditions or detector performance. Even minor design changes cost substantial time and money, as the replacement chip must be fabricated from scratch. In the LHC era, the tricky trigger electronics are therefore not implemented with ASICs, as before, but with field-programmable gate arrays (FPGAs). Previously used to prototype the ASICs, FPGAs may be re-programmed “in the field”, without a trip to the foundry. Now also prevalent in high-performance computing, with leading tech companies using them to accelerate critical processing in their data centres, FPGAs offer the benefits of task-specific customisation of the computing architecture without having to set the chip’s functionality in stone – or in this case silicon.

Architecture of a chip

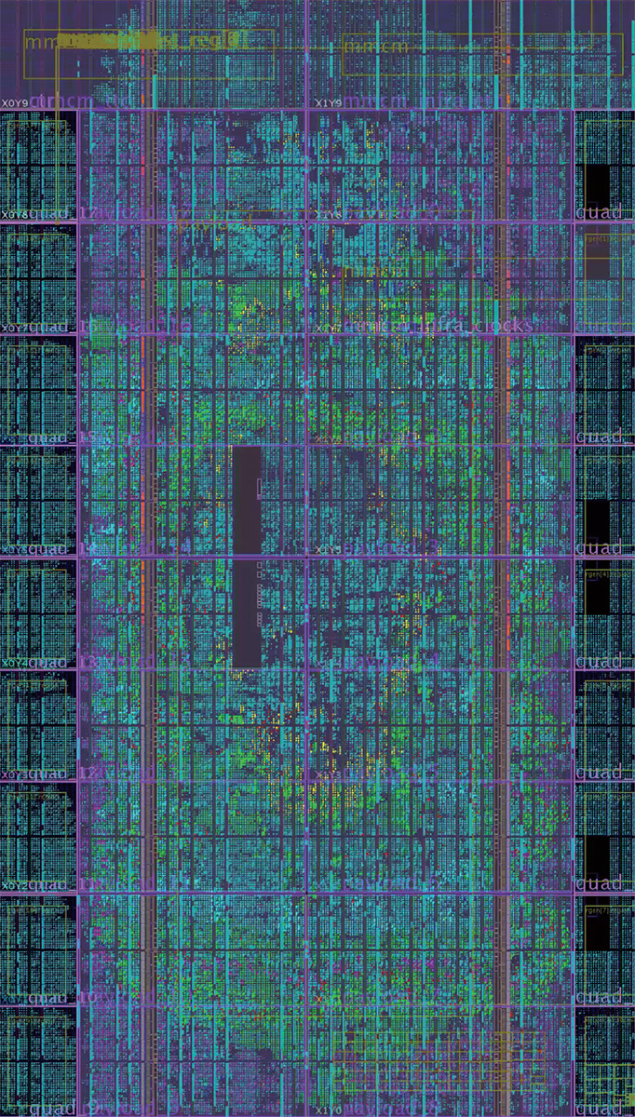

FPGAs can compete with other high-performance computing chips thanks to their massive capability for parallel processing and relatively low power consumption per operation. The devices contain many millions of programmable logic gates that can be configured and connected together to solve specific problems. Because of the vast numbers of tiny processing units, FPGAs can be programmed to work on many different parts of a task simultaneously, thereby achieving massive throughput and low latency – ideal for increasingly popular machine-learning applications. FPGAs can also support high-bandwidth inputs and outputs of up to about 100 dedicated high-speed serial links, making them ideal workhorses to process the deluge of data that streams out of particle detectors (see CERN Courier September 2016 p21).

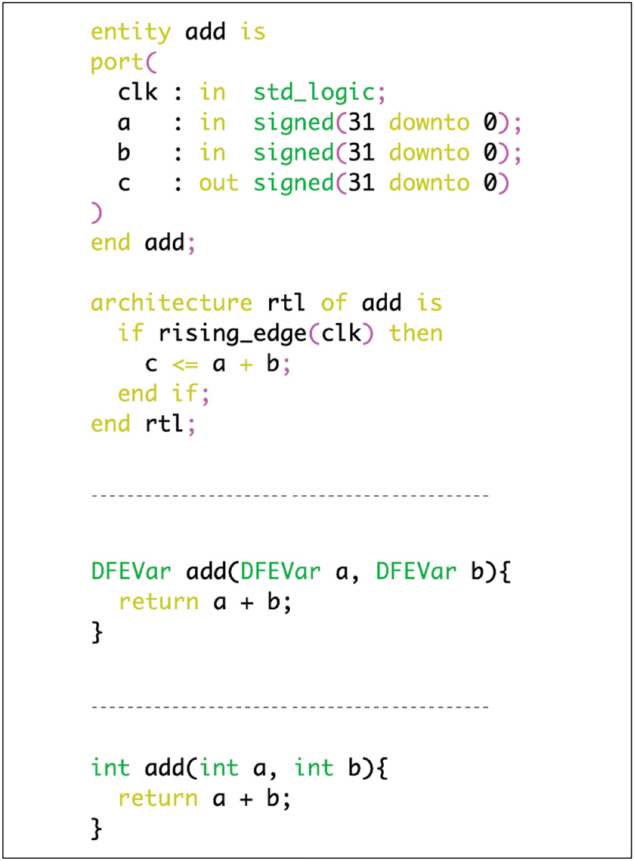

The difficulty is that programming FPGAs is traditionally the preserve of engineers coding in low-level languages such as VHDL and Verilog, where even simple tasks can be tricky. For example, a function to sum two numbers together requires several lines of code in VHDL, with the designer even required to define when the operations happen relative to the processor clock (figure 1). Outsourcing the coding is impractical, given the imminent need to implement elaborate algorithms featuring machine learning in the trigger to quickly analyse data from high-granularity detectors in high-luminosity environments. During the past five years, however, tools have matured, allowing FPGAs to be programmed in variants of high-level languages such as C++ and Java, and bringing FPGA coding within the reach of physicists themselves.

But can high-level tools produce FPGA code with low-enough latency for trigger applications? And can their resource usage compete with professionally developed low-level code? During the past couple of years CMS physicists have trialled the use of a Java-based language, MaxJ, and tools from Maxeler Technologies, a leading company in accelerated computing and data-flow engines, which was a partner in the studies. More recently the collaboration has also gained experience with the C++-based Vivado high-level synthesis (HLS) tool of the FPGA manufacturer Xilinx. The work has demonstrated the potential for ground-breaking new tools to be used in future triggers, without significantly increasing resource usage and latency.

Track and field-programmable

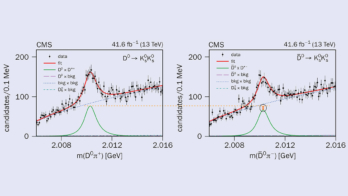

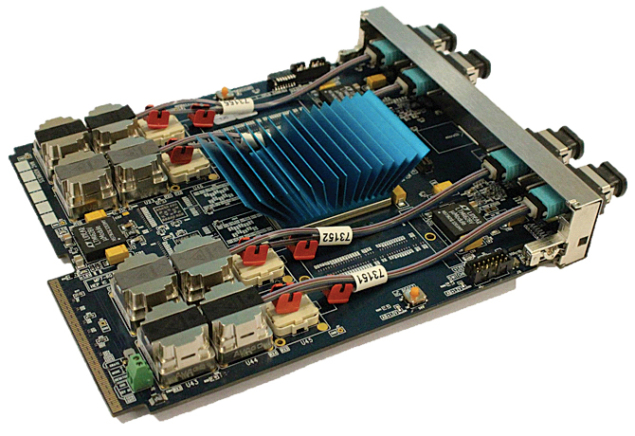

Tasked with finding hadronic jets and calculating missing transverse energy in a few microseconds, the trigger of the CMS calorimeter handles an information throughput of 6.5 terabits per second. Data are read out from the detector into the trigger-system FPGAs in the counting room in a cavern adjacent to CMS. The official FPGA code was implemented in VHDL over several months each of development, debugging and testing. To investigate whether high-level FPGA programming can be practical, the same algorithms were implemented in MaxJ by an inexperienced doctoral student (figure 2), with the low-level clocking and management of high-speed serial links still undertaken by the professionally developed code. The high-level code had comparable latency and resource usage with one exception: the hand-crafted VHDL was superior when it came to quickly sorting objects by their transverse momentum. With this caveat, the study suggests that using high-level development tools can dramatically lower the bar for developing FPGA firmware, to the extent that students and physicists can contribute to large parts of the development of labyrinthine electronics systems.
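
Sorting is a good illustration of why hardware-aware design still matters. As a rough sketch only, and not the algorithm used in the CMS firmware, the Python below emulates an odd-even transposition sorting network: a fixed schedule of compare-exchange steps in which every comparison within a round touches a different pair of neighbours, so that an FPGA could evaluate a whole round in parallel. The function name and the example transverse momenta are hypothetical.

```python
def odd_even_transposition_sort(pts):
    """Sort transverse momenta in descending order with a fixed comparison schedule."""
    v = list(pts)
    n = len(v)
    for rnd in range(n):                  # n rounds are enough for n inputs
        start = rnd % 2                   # even rounds pair (0,1),(2,3)...; odd rounds pair (1,2),(3,4)...
        for i in range(start, n - 1, 2):  # pairs within a round are disjoint: parallel in hardware
            if v[i] < v[i + 1]:
                v[i], v[i + 1] = v[i + 1], v[i]
    return v


# Hypothetical jet transverse momenta in GeV
jets = [32.5, 110.0, 47.2, 8.9, 64.1]
leading = odd_even_transposition_sort(jets)[:3]
```

In such a network the number of rounds sets the latency and the number of comparators per round sets the resource cost, so the two can be balanced against each other.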

Kalman filtering is an example of an algorithm that is conventionally used for offline track reconstruction on CPUs, away from the low-latency restrictions of the trigger. The mathematical aspects of the algorithm are difficult to implement in a low-level language, for example requiring trajectory fits to be iteratively optimised using sequential matrix algebra calculations. But the advantages of a high-level language could conceivably make Kalman filtering tractable in the trigger. To test this, the algorithm was implemented for the phase-II upgrade of the CMS tracker in MaxJ. The scheduler of Maxeler’s tool, MaxCompiler, automatically pipelines the operations to achieve the best throughput, keeping the flow of data synchronised. This saves a significant amount of effort in the development of a complicated new algorithm compared to a low-level language, where this must be done by hand. Additionally, MaxCompiler’s support for fixed-point arithmetic allows the developer to make full use of the capability of FPGAs to use custom data types. Tailoring the data representation to the problem at hand results in faster, more lightweight processing, which would be prohibitively labour-intensive in a low-level language. The result of the study was hundreds of simultaneous track fits in a single FPGA in just over a microsecond.
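
To make the sequential nature of the fit concrete, here is a minimal Python sketch of a Kalman filter fitting a straight line to hits one at a time. It is a toy model under stated assumptions, not the CMS tracker implementation: the straight-line track model, the function names, the measurement variance and the optional `frac_bits` rounding (a crude stand-in for the fixed-point types mentioned above) are all illustrative choices.

```python
def quantise(value, frac_bits):
    """Round to the nearest multiple of 2**-frac_bits, mimicking a fixed-point type."""
    scale = 1 << frac_bits
    return round(value * scale) / scale


def kalman_line_fit(hits, meas_var=0.01, prior_var=1.0e6, frac_bits=None):
    """Fit y = a + b*x to (x, y) hits with one Kalman update per hit."""
    a, b = 0.0, 0.0                             # state: intercept and slope
    paa, pab, pbb = prior_var, 0.0, prior_var   # symmetric 2x2 covariance P
    for x, y in hits:
        # P H^T for the measurement model H = [1, x]
        pha = paa + pab * x
        phb = pab + pbb * x
        s = pha + phb * x + meas_var            # innovation variance H P H^T + R
        ka, kb = pha / s, phb / s               # Kalman gain K
        residual = y - (a + b * x)
        a += ka * residual
        b += kb * residual
        # P <- P - K (H P); H P equals (pha, phb) because P is symmetric
        paa -= ka * pha
        pab -= ka * phb
        pbb -= kb * phb
        if frac_bits is not None:
            # Assumption: only the state is rounded; P stays in floating point
            a, b = quantise(a, frac_bits), quantise(b, frac_bits)
    return a, b


# Hypothetical hits lying exactly on y = 2 + 0.5 x
hits = [(float(x), 2.0 + 0.5 * x) for x in range(5)]
a_float, b_float = kalman_line_fit(hits)
a_fixed, b_fixed = kalman_line_fit(hits, frac_bits=10)
```

With a very loose prior the result approaches an ordinary least-squares fit. Each update depends on the state left by the previous one, which is why the algorithm is inherently iterative and why automatic pipelining is so helpful.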

Ghost in the machine

Deep neural networks, which have become increasingly prevalent in offline analysis and event reconstruction thanks to their ability to exploit tangled relationships in data, are another obvious candidate for processing data more efficiently. To find out if such algorithms could be implemented in FPGAs, and executed within the tight latency constraints of the trigger, an example application was developed to identify fake tracks – the inevitable byproducts of overlapping particle trajectories – in the output of the MaxJ Kalman filter described above. Machine learning has the potential to distinguish such bogus tracks better than simple selection cuts, and a boosted decision tree (BDT) proved effective here, with the decision step, which employs many small and independent decision trees, implemented with MaxCompiler. A latency of a few hundredths of a microsecond – much shorter than the iterative Kalman filter as BDTs are inherently very parallelisable – was achieved using only a small percentage of the silicon area of the FPGA, so leaving room for other algorithms. Another tool capable of executing machine-learning models in tens of nanoseconds is the “hls4ml” FPGA inference engine for deep neural networks, built on the Vivado HLS compiler of Xilinx. With the use of such tools, non-FPGA experts can trade off latency against resource usage – two critical metrics of performance that would otherwise require significant extra effort to balance in collaboration with engineers writing low-level code.
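
The parallelism of the decision step can be seen in a minimal Python sketch of boosted-decision-tree inference. The trees, thresholds, leaf weights and the two input features (a track-fit chi-squared and a hit count) are invented for illustration and are not taken from the CMS study; the point is only that each small tree is evaluated independently before the scores are summed.

```python
# A tree is either a leaf weight (float) or a node:
# (feature_index, threshold, subtree_if_below, subtree_otherwise)
TREES = [
    (0, 5.0, 0.6, -0.8),                        # split on chi-squared
    (1, 4.0, -0.5, (0, 20.0, 0.4, -0.3)),       # split on hit count, then chi-squared
]


def eval_tree(tree, features):
    """Walk one tree from the root to a leaf and return the leaf weight."""
    while not isinstance(tree, float):
        index, threshold, below, otherwise = tree
        tree = below if features[index] < threshold else otherwise
    return tree


def bdt_score(trees, features):
    """Sum the independent tree outputs; on an FPGA the trees could run side by side."""
    return sum(eval_tree(tree, features) for tree in trees)


def is_genuine(features, cut=0.0):
    """Accept the track if the summed score exceeds a (hypothetical) cut."""
    return bdt_score(TREES, features) > cut


good_track = [2.0, 6.0]    # [chi-squared, number of hits], hypothetical values
fake_track = [30.0, 3.0]
```

Because no tree needs the output of another, the depth of the deepest tree plus the final sum bounds the latency, in contrast to the hit-by-hit dependence of the Kalman filter.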

Though requiring a little extra learning and some knowledge of the underlying technology, it is now possible for ordinary physicists to program FPGAs in high-level languages, such as Maxeler’s MaxJ and Xilinx’s Vivado HLS. Development time can be cut significantly, while maintaining latency and resource usage at a similar level to hand-crafted FPGA code, with the fast development of mathematically intricate algorithms an especially promising use case. Opening up FPGA programming to physicists will allow offline approaches such as machine learning to be transferred to real-time detector electronics.

Novel approaches will be critical for all aspects of computing at the high-luminosity LHC. New levels of complexity and throughput will exceed the capability of CPUs alone, and require the extensive use of heterogeneous accelerators such as FPGAs, graphics processing units (GPUs) and perhaps even tensor processing units (TPUs) in offline computing. Recent developments in FPGA interfaces are therefore most welcome as they will allow particle physicists to execute complex algorithms in the trigger, and make the critical initial selection more effective than ever before.

Further reading

R Aggleton et al. 2017 JINST 12 P12019.

J Duarte et al. 2018 JINST 13 P07027.

O Pell and V Averbukh 2012 Comput. Sci. Eng. 14 98.

S Summers et al. 2019 EPJ Web Conf. 214 01003.

S Summers et al. 2017 JINST 12 C02015.